Introduction

In this post, we will explore the use of HashiCorp Consul for distributed configuration of containerized Spring Boot services, deployed to a Docker swarm cluster. In the first half of the post, we will provision a series of virtual machines, build a Docker swarm on top of those VMs, and install Consul and Registrator on each swarm host. In the second half of the post, we will configure and deploy multiple instances of a containerized Spring Boot service, backed by MongoDB, to the swarm cluster, using Docker Compose.

The final objective of this post is to have all the deployed services registered with Consul, via Registrator, and the service’s runtime configuration provided dynamically by Consul.

Objectives

- Provision a series of virtual machine hosts, using Docker Machine and Oracle VirtualBox

- Provide distributed and highly available cluster management and service orchestration, using Docker swarm mode

- Provide distributed and highly available service discovery, health checking, and a hierarchical key/value store, using HashiCorp Consul

- Provide service discovery and automatic registration of containerized services to Consul, using Registrator, Glider Labs’ service registry bridge for Docker

- Provide distributed configuration for containerized Spring Boot services using Consul and Pivotal Spring Cloud Consul Config

- Deploy multiple instances of a Spring Boot service, backed by MongoDB, to the swarm cluster, using Docker Compose version 3

Technologies

- Docker

- Docker Compose (v3)

- Docker Hub

- Docker Machine

- Docker swarm mode

- Docker Swarm Visualizer (Mano Marks)

- Glider Labs Registrator

- Gradle

- HashiCorp Consul

- Java

- MongoDB

- Oracle VirtualBox VM Manager

- Spring Boot

- Spring Cloud Consul Config

- Travis CI

Code Sources

All code in this post exists in two GitHub repositories. I have labeled each code snippet in the post with the corresponding file in the repositories. The first repository, microservice-docker-demo-consul, contains all the code necessary for provisioning the VMs and building the Docker swarm and Consul clusters. The repo also contains code for deploying Swarm Visualizer and Registrator. Make sure you clone the swarm-mode branch.

git clone --depth 1 --branch swarm-mode \
  https://github.com/garystafford/microservice-docker-demo-consul.git

cd microservice-docker-demo-consul

The second repository, microservice-docker-demo-widget, contains all the code necessary for configuring Consul and deploying the Widget service stack. Make sure you clone the consul branch.

git clone --depth 1 --branch consul \
  https://github.com/garystafford/microservice-docker-demo-widget.git

cd microservice-docker-demo-widget

Docker Versions

With the Docker toolset evolving so quickly, features frequently change or become outmoded. At the time of this post, I am running the following versions on my Mac.

- Docker Engine version 1.13.1

- Boot2Docker version 1.13.1

- Docker Compose version 1.11.2

- Docker Machine version 0.10.0

- HashiCorp Consul version 0.7.5

Provisioning VM Hosts

First, we will provision a series of six virtual machines (aka machines, VMs, or hosts), using Docker Machine and Oracle VirtualBox.

By switching Docker Machine’s driver, you can easily switch from VirtualBox to other vendors, such as VMware, AWS, GCE, or Azure. I have explicitly set several VirtualBox driver options, using the default values, to make the configuration of the VirtualBox VMs being created explicit.

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

for vm in ${vms[@]}

do

docker-machine create \

--driver virtualbox \

--virtualbox-memory "1024" \

--virtualbox-cpu-count "1" \

--virtualbox-disk-size "20000" \

${vm}

done

Using the docker-machine ls command, we should observe that the resulting series of VMs looks similar to the following. Note each of the six VMs has a machine name and an IP address in the 192.168.99.0/24 range.

$ docker-machine ls
NAME       ACTIVE   DRIVER       STATE     URL                         SWARM   DOCKER    ERRORS
manager1   -        virtualbox   Running   tcp://192.168.99.109:2376           v1.13.1
manager2   -        virtualbox   Running   tcp://192.168.99.110:2376           v1.13.1
manager3   -        virtualbox   Running   tcp://192.168.99.111:2376           v1.13.1
worker1    -        virtualbox   Running   tcp://192.168.99.112:2376           v1.13.1
worker2    -        virtualbox   Running   tcp://192.168.99.113:2376           v1.13.1
worker3    -        virtualbox   Running   tcp://192.168.99.114:2376           v1.13.1
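Before moving on, it can help to confirm that every VM actually reached the Running state. The following is a minimal sketch, not part of the original repositories: the function name machines_not_running is hypothetical, and it assumes the column layout shown above, where STATE is the fourth field.

```shell
# Hypothetical helper (an assumption, not from the post's repositories).
# Reads `docker-machine ls` output on stdin and prints the name of every
# machine whose STATE column (4th field) is anything other than "Running".
machines_not_running() {
  # NR > 1 skips the header row.
  awk 'NR > 1 && $4 != "Running" { print $1 }'
}
```

Typical usage would be `docker-machine ls | machines_not_running`; empty output suggests all six hosts are up.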

We may also view the VMs using the Oracle VM VirtualBox Manager application.

Docker Swarm Mode

Next, we will provide, amongst other capabilities, cluster management and orchestration, using Docker swarm mode. It is important to understand that the relatively new Docker swarm mode is not the same as the legacy, standalone Docker Swarm. Legacy Docker Swarm was succeeded by Docker’s integrated swarm mode, with the release of Docker v1.12.0, in July 2016.

We will create a swarm (a cluster of Docker Engines or nodes), consisting of three Manager nodes and three Worker nodes, on the six VirtualBox VMs. Using this configuration, the swarm will be distributed and highly available, able to suffer the loss of one of the Manager nodes, without failing.
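The claim that three Managers tolerate the loss of one follows from Raft's majority rule: a cluster of N voting members needs a quorum of floor(N/2) + 1 and therefore survives floor((N - 1)/2) failures. Here is a small sketch of that arithmetic; the function names are my own and purely illustrative.

```shell
# Illustrative helpers (hypothetical names) for Raft majority arithmetic.

# Number of members that must agree for the cluster to make progress.
raft_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can be lost while a quorum still remains.
raft_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}
```

For example, `raft_fault_tolerance 3` prints 1 and `raft_fault_tolerance 5` prints 2, while a fourth member adds no extra tolerance over three. The same arithmetic applies to the three Consul servers later in the post.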

Manager Nodes

First, we will create the initial Docker swarm Manager node.

SWARM_MANAGER_IP=$(docker-machine ip manager1)

echo ${SWARM_MANAGER_IP}

docker-machine ssh manager1 \

"docker swarm init \

--advertise-addr ${SWARM_MANAGER_IP}"

This initial Manager node advertises its IP address to future swarm members.

Next, we will create two additional swarm Manager nodes, which will join the initial Manager node, by using the initial Manager node’s advertised IP address. The three Manager nodes will then elect a single Leader to conduct orchestration tasks. According to Docker, Manager nodes implement the Raft Consensus Algorithm to manage the global cluster state.

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

docker-machine env manager1

eval $(docker-machine env manager1)

MANAGER_SWARM_JOIN=$(docker-machine ssh ${vms[0]} "docker swarm join-token manager")

MANAGER_SWARM_JOIN=$(echo ${MANAGER_SWARM_JOIN} | grep -E "(docker).*(2377)" -o)

MANAGER_SWARM_JOIN=$(echo ${MANAGER_SWARM_JOIN//\\/''})

echo ${MANAGER_SWARM_JOIN}

for vm in ${vms[@]:1:2}

do

docker-machine ssh ${vm} ${MANAGER_SWARM_JOIN}

done

A quick note on the string manipulation of the MANAGER_SWARM_JOIN variable, above. Running the docker swarm join-token manager command outputs something similar to the following.

To add a manager to this swarm, run the following command:

docker swarm join \

--token SWMTKN-1-1s53oajsj19lgniar3x1gtz3z9x0iwwumlew0h9ism5alt2iic-1qxo1nx24pyd0pg61hr6pp47t \

192.168.99.109:2377

Using a bit of string manipulation, the resulting value of the MANAGER_SWARM_JOIN variable will be similar to the following command. We then ssh into each host and execute this command, one for the Manager nodes and another similar command, for the Worker nodes.

docker swarm join --token SWMTKN-1-1s53oajsj19lgniar3x1gtz3z9x0iwwumlew0h9ism5alt2iic-1qxo1nx24pyd0pg61hr6pp47t 192.168.99.109:2377
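The same extraction can be written as a small filter, which makes the steps easier to follow. This is a sketch under the assumption that the join-token output has the shape shown above; extract_join_command is a hypothetical name, not something from the repositories.

```shell
# Hypothetical helper: turn the multi-line `docker swarm join-token` output
# (read from stdin) into a single-line "docker swarm join ..." command.
extract_join_command() {
  # 1. drop the backslash line continuations
  # 2. convert all whitespace (including newlines) to single spaces
  # 3. keep only the text from "docker swarm join" through port 2377
  tr -d '\\' | tr -s '[:space:]' ' ' | grep -oE 'docker swarm join.*:2377'
}
```

Usage might look like `docker-machine ssh manager1 "docker swarm join-token manager" | extract_join_command`. If only the token itself is needed, `docker swarm join-token -q manager` prints just the token.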

Worker Nodes

Next, we will create three swarm Worker nodes, using a similar method. The three Worker nodes will join the three swarm Manager nodes, as part of the swarm cluster. The main difference, according to Docker, is that a Worker node’s “sole purpose is to execute containers. Worker nodes don’t participate in the Raft distributed state, make scheduling decisions, or serve the swarm mode HTTP API.”

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

WORKER_SWARM_JOIN=$(docker-machine ssh manager1 "docker swarm join-token worker")

WORKER_SWARM_JOIN=$(echo ${WORKER_SWARM_JOIN} | grep -E "(docker).*(2377)" -o)

WORKER_SWARM_JOIN=$(echo ${WORKER_SWARM_JOIN//\\/''})

echo ${WORKER_SWARM_JOIN}

for vm in ${vms[@]:3:3}

do

docker-machine ssh ${vm} ${WORKER_SWARM_JOIN}

done

Using the docker node ls command, we should observe the resulting Docker swarm cluster looks similar to the following:

$ docker node ls
ID                           HOSTNAME  STATUS  AVAILABILITY  MANAGER STATUS
u75gflqvu7blmt5mcr2dcp4g5 *  manager1  Ready   Active        Leader
0b7pkek76dzeedtqvvjssdt2s    manager2  Ready   Active        Reachable
s4mmra8qdgwukjsfg007rvbge    manager3  Ready   Active        Reachable
n89upik5v2xrjjaeuy9d4jybl    worker1   Ready   Active
nsy55qzavzxv7xmanraijdw4i    worker2   Ready   Active
hhn1l3qhej0ajmj85gp8qhpai    worker3   Ready   Active

Note the three swarm Manager nodes, three swarm Worker nodes, and the Manager, which was elected Leader. The other two Manager nodes are marked as Reachable. The asterisk indicates manager1 is the active machine.

I have also deployed Mano Marks’ Docker Swarm Visualizer to each of the swarm cluster’s three Manager nodes. This tool is described as a visualizer for Docker Swarm Mode using the Docker Remote API, Node.JS, and D3. It provides a great visualization of the swarm cluster and its running components.

docker service create \
  --name swarm-visualizer \
  --publish 5001:8080/tcp \
  --constraint node.role==manager \
  --mode global \
  --mount type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock \
  manomarks/visualizer:latest

The Swarm Visualizer should be available on any of the Manager’s IP addresses, on port 5001. We constrained the Visualizer to the Manager nodes, by using the node.role==manager constraint.

HashiCorp Consul

Next, we will install HashiCorp Consul onto our swarm cluster of VirtualBox hosts. Consul will provide us with service discovery, health checking, and a hierarchical key/value store. Consul will be installed such that we end up with six Consul Agents. Agents can run as Servers or Clients. Similar to Docker swarm mode, we will install three Consul servers and three Consul clients, all in one Consul datacenter (our set of six local VMs). This clustered configuration will ensure Consul is distributed and highly available, able to suffer the loss of one of the Consul server instances without failing.

Consul Servers

Again, similar to Docker swarm mode, we will install the initial Consul server. Both the Consul servers and clients run inside Docker containers, one per swarm host.

consul_server="consul-server1"

docker-machine env manager1

eval $(docker-machine env manager1)

docker run -d \

--net=host \

--hostname ${consul_server} \

--name ${consul_server} \

--env "SERVICE_IGNORE=true" \

--env "CONSUL_CLIENT_INTERFACE=eth0" \

--env "CONSUL_BIND_INTERFACE=eth1" \

--volume consul_data:/consul/data \

--publish 8500:8500 \

consul:latest \

consul agent -server -ui -bootstrap-expect=3 -client=0.0.0.0 -advertise=${SWARM_MANAGER_IP} -data-dir="/consul/data"

The first Consul server advertises itself on its host’s IP address. The -bootstrap-expect=3 option instructs Consul to wait until three Consul servers are available before bootstrapping the cluster. This option also allows an initial Leader to be elected automatically. The three Consul servers form a consensus quorum, using the Raft consensus algorithm.

All Consul server and Consul client Docker containers will have a data directory (/consul/data), mapped to a volume (consul_data) on their corresponding VM hosts.

Consul provides a basic browser-based user interface. By using the -ui option each Consul server will have the UI available on port 8500.

Next, we will create two additional Consul Server instances, which will join the initial Consul server, by using the first Consul server’s advertised IP address.

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

consul_servers=( "consul-server2" "consul-server3" )

i=0

for vm in ${vms[@]:1:2}

do

docker-machine env ${vm}

eval $(docker-machine env ${vm})

docker run -d \

--net=host \

--hostname ${consul_servers[i]} \

--name ${consul_servers[i]} \

--env "SERVICE_IGNORE=true" \

--env "CONSUL_CLIENT_INTERFACE=eth0" \

--env "CONSUL_BIND_INTERFACE=eth1" \

--volume consul_data:/consul/data \

--publish 8500:8500 \

consul:latest \

consul agent -server -ui -client=0.0.0.0 -advertise='{{ GetInterfaceIP "eth1" }}' -retry-join=${SWARM_MANAGER_IP} -data-dir="/consul/data"

let "i++"

done

Consul Clients

Next, we will install Consul clients on the three swarm worker nodes, using a similar method to the servers. According to Consul, “The client is relatively stateless. The only background activity a client performs is taking part in the LAN gossip pool.” The absence of the -server option tells Consul to run this agent as a client.

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

consul_clients=( "consul-client1" "consul-client2" "consul-client3" )

i=0

for vm in ${vms[@]:3:3}

do

docker-machine env ${vm}

eval $(docker-machine env ${vm})

docker rm -f $(docker ps -a -q)

docker run -d \

--net=host \

--hostname ${consul_clients[i]} \

--name ${consul_clients[i]} \

--env "SERVICE_IGNORE=true" \

--env "CONSUL_CLIENT_INTERFACE=eth0" \

--env "CONSUL_BIND_INTERFACE=eth1" \

--volume consul_data:/consul/data \

consul:latest \

consul agent -client=0.0.0.0 -advertise='{{ GetInterfaceIP "eth1" }}' -retry-join=${SWARM_MANAGER_IP} -data-dir="/consul/data"

let "i++"

done

From inside the consul-server1 Docker container, on the manager1 host, using the consul members command, we should observe a current list of cluster members along with their current state.

$ docker exec -it consul-server1 consul members
Node            Address              Status  Type    Build  Protocol  DC
consul-client1  192.168.99.112:8301  alive   client  0.7.5  2         dc1
consul-client2  192.168.99.113:8301  alive   client  0.7.5  2         dc1
consul-client3  192.168.99.114:8301  alive   client  0.7.5  2         dc1
consul-server1  192.168.99.109:8301  alive   server  0.7.5  2         dc1
consul-server2  192.168.99.110:8301  alive   server  0.7.5  2         dc1
consul-server3  192.168.99.111:8301  alive   server  0.7.5  2         dc1

Note the three Consul servers, the three Consul clients, and the single datacenter, dc1. Also, note the address of each agent matches the IP address of their swarm hosts.
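If you would rather script this check than read the table, the member list can be counted by type. The sketch below assumes the column order shown above (Status third, Type fourth); count_consul_members is a hypothetical helper name.

```shell
# Hypothetical helper: count alive Consul agents of a given type.
# Reads `consul members` output on stdin.
# $1: agent type to count, either "server" or "client".
count_consul_members() {
  # NR > 1 skips the header; n + 0 prints 0 when nothing matched.
  awk -v type="$1" 'NR > 1 && $3 == "alive" && $4 == type { n++ } END { print n + 0 }'
}
```

For the cluster built in this post, `docker exec consul-server1 consul members | count_consul_members server` would be expected to print 3, and the same for `client`.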

To further validate Consul is installed correctly, access Consul’s web-based UI using the IP address of any of the three Consul servers’ swarm hosts, on port 8500. Shown below is the initial view of Consul prior to services being registered. Note the Consul instances’ names. Also, notice the IP address of each Consul instance corresponds to the IP address of the swarm host on which it resides.

Service Container Registration

Having provisioned the VMs, and built the Docker swarm cluster and Consul cluster, there is one final step to prepare our environment for the deployment of containerized services. We want to provide service discovery and registration for Consul, using Glider Labs’ Registrator.

Registrator will be installed on each host, within a Docker container. According to Glider Labs’ website, “Registrator will automatically register and deregister services for any Docker container, as the containers come online.”

We will install Registrator on only five of our six hosts. We will not install it on manager1. Since this host is already serving as both the Docker swarm Leader and Consul Leader, we will not be installing any additional service containers on that host. This is a personal choice, not a requirement.

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

for vm in ${vms[@]:1}

do

docker-machine env ${vm}

eval $(docker-machine env ${vm})

HOST_IP=$(docker-machine ip ${vm})

# echo ${HOST_IP}

docker run -d \

--name=registrator \

--net=host \

--volume=/var/run/docker.sock:/tmp/docker.sock \

gliderlabs/registrator:latest \

-internal consul://${HOST_IP:-localhost}:8500

done
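A note on the Consul URI passed to Registrator: in the shell, the fallback-to-default form is `${VAR:-default}`, whereas `${VAR:offset}` without the hyphen is Bash substring expansion and never applies a default. The following minimal sketch shows the fallback behavior; registrator_consul_uri is a hypothetical name used only for illustration.

```shell
# Hypothetical helper: build the Consul URI for Registrator,
# falling back to localhost when no host IP is supplied.
# $1: host IP address (may be empty or omitted).
registrator_consul_uri() {
  host_ip="$1"
  # ${host_ip:-localhost} expands to "localhost" if host_ip is unset or empty.
  echo "consul://${host_ip:-localhost}:8500"
}
```

Calling `registrator_consul_uri "$(docker-machine ip manager2)"` yields a URI for that host, while calling it with no argument yields `consul://localhost:8500`.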

Multiple Service Discovery Options

You might be wondering why we are worried about service discovery and registration with Consul, using Registrator, considering Docker swarm mode already has service discovery. Deploying our services using swarm mode, we are relying on swarm mode for service discovery. I chose to include Registrator in this example, to demonstrate an alternative method of service discovery, which can be used with other tools such as Consul Template, for dynamic load-balancer templating.

We actually have a third option for automatic service registration, Spring Cloud Consul Discovery. I chose not to use Spring Cloud Consul Discovery in this post to register the Widget service. Spring Cloud Consul Discovery would have automatically registered the Spring Boot service with Consul. The actual Widget service stack contains MongoDB, as well as other non-Spring Boot service components, which I removed for this post, such as the NGINX load balancer. Using Registrator, all the containerized services, not only the Spring Boot services, are automatically registered.

You will note in the Widget source code, I commented out the @EnableDiscoveryClient annotation on the WidgetApplication class. If you want to use Spring Cloud Consul Discovery, simply uncomment this annotation.

Distributed Configuration

We are almost ready to deploy some services. For this post’s demonstration, we will deploy the Widget service stack. The Widget service stack is composed of a simple Spring Boot service, backed by MongoDB; it is easily deployed as a containerized application. I often use the service stack for testing and training.

Widgets represent inanimate objects that users purchase with points. Widgets have particular physical characteristics, such as product id, name, color, size, and price. The inventory of widgets is stored in the widgets MongoDB database.

Hierarchical Key/Value Store

Before we can deploy the Widget service, we need to store the Widget service’s Spring Profiles in Consul’s hierarchical key/value store. The Widget service’s profiles are sets of configuration the service needs to run in different environments, such as Development, QA, UAT, and Production. Profiles include configuration, such as port assignments, database connection information, and logging and security settings. The service’s active profile will be read by the service at startup, from Consul, and applied at runtime.

As opposed to the traditional Java properties’ key/value format, the Widget service uses YAML to specify its hierarchical configuration data. Using Spring Cloud Consul Config, an alternative to Spring Cloud Config Server and Client, we will store the service’s Spring Profile in Consul as blobs of YAML.

There are multiple ways to store configuration in Consul. Storing the Widget service’s profiles as YAML was the quickest method of migrating the existing application.yml file’s multiple profiles to Consul. I am not insisting YAML is the most effective method of storing configuration in Consul’s k/v store; that depends on the application’s requirements.

Using the Consul KV HTTP API, using the HTTP PUT method, we will place each profile, as a YAML blob, into the appropriate data key, in Consul. For convenience, I have separated the Widget service’s three existing Spring profiles into individual YAML files. We will load each of the YAML file’s contents into Consul, using curl.

Below is the Widget service’s default Spring profile. The default profile is activated if no other profiles are explicitly active when the application context starts.

endpoints:
  enabled: true
  sensitive: false
info:
  java:
    source: "${java.version}"
    target: "${java.version}"
logging:
  level:
    root: DEBUG
management:
  security:
    enabled: false
  info:
    build:
      enabled: true
    git:
      mode: full
server:
  port: 8030
spring:
  data:
    mongodb:
      database: widgets
      host: localhost
      port: 27017

Below is the Widget service’s docker-local Spring profile. This is the profile we will use when we deploy the Widget service to our swarm cluster. The docker-local profile overrides two properties of the default profile — the name of our MongoDB host and the logging level. All other configuration will come from the default profile.

logging:
  level:
    root: INFO
spring:
  data:
    mongodb:
      host: mongodb

To load the profiles into Consul, from the root of the Widget local git repository, we will execute curl commands. We will use the Consul cluster Leader’s IP address for our HTTP PUT methods.

docker-machine env manager1

eval $(docker-machine env manager1)

CONSUL_SERVER=$(docker-machine ip $(docker node ls | grep Leader | awk '{print $3}'))

# default profile

KEY="config/widget-service/data"

VALUE="consul-configs/default.yaml"

curl -X PUT --data-binary @${VALUE} \

-H "Content-type: text/x-yaml" \

${CONSUL_SERVER:-localhost}:8500/v1/kv/${KEY}

# docker-local profile

KEY="config/widget-service,docker-local/data"

VALUE="consul-configs/docker-local.yaml"

curl -X PUT --data-binary @${VALUE} \

-H "Content-type: text/x-yaml" \

${CONSUL_SERVER:-localhost}:8500/v1/kv/${KEY}

# docker-production profile

KEY="config/widget-service,docker-production/data"

VALUE="consul-configs/docker-production.yaml"

curl -X PUT --data-binary @${VALUE} \

-H "Content-type: text/x-yaml" \

${CONSUL_SERVER:-localhost}:8500/v1/kv/${KEY}
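One caveat about locating the Leader with grep and awk: in docker node ls output, the hostname is the third whitespace-separated field only on the row marked with an asterisk (the node you are currently connected to). On any other row it is the second field. The sketch below handles both cases; swarm_leader_hostname is a hypothetical name and the parsing assumes the docker node ls layout shown earlier in this post.

```shell
# Hypothetical helper: print the hostname of the swarm Leader.
# Reads `docker node ls` output on stdin.
swarm_leader_hostname() {
  # The header row never contains "Leader", so only the Leader's row matches.
  # If the row carries the "*" marker, the hostname shifts to field 3.
  awk '/Leader/ { if ($2 == "*") print $3; else print $2 }'
}
```

It could be used as `CONSUL_SERVER=$(docker-machine ip $(docker node ls | swarm_leader_hostname))`, which should give the same result as the command above whenever manager1 is both the active machine and the Leader.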

Returning to the Consul UI, we should now observe three Spring profiles, in the appropriate data keys, listed under the Key/Value tab. The default Spring profile YAML blob, the value, will be assigned to the config/widget-service/data key.

The docker-local profile will be assigned to the config/widget-service,docker-local/data key. The keys follow default spring.cloud.consul.config conventions.
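To make the key convention concrete: with Spring Cloud Consul Config's defaults (prefix config, profile separator of a comma, and data key data when the format is YAML), the key for an application and profile can be derived mechanically. The sketch below encodes only those defaults; consul_config_key is a hypothetical helper name.

```shell
# Hypothetical helper: build the Consul KV key that Spring Cloud Consul Config
# reads by default for a given application name and optional profile.
# $1: spring.application.name, $2: Spring profile (optional).
consul_config_key() {
  app="$1"
  profile="$2"
  if [ -n "$profile" ]; then
    # Profile-specific configuration: name and profile joined by a comma.
    echo "config/${app},${profile}/data"
  else
    # Default profile: application name only.
    echo "config/${app}/data"
  fi
}
```

One way to read a stored profile back, assuming CONSUL_SERVER is set as above, is `curl -s "http://${CONSUL_SERVER}:8500/v1/kv/$(consul_config_key widget-service docker-local)?raw"`, where the raw query parameter asks Consul to return the unencoded value.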

Spring Cloud Consul Config

In order for our Spring Boot service to connect to Consul and load the requested active Spring Profile, we need to add a dependency on Spring Cloud Consul Config to the build.gradle file.

dependencies {

compile group: 'org.springframework.cloud', name: 'spring-cloud-starter-consul-all';

...

}

Next, we need to configure the bootstrap.yml file, to connect to Consul and properly read the profile. We must properly set the CONSUL_SERVER_URL environment variable, which the Docker Compose file passes to each Widget container. This value is the Consul server instance hostname or IP address, which the Widget service instances will contact to retrieve their configuration. If the variable is not set, the placeholder falls back to localhost.

spring:
  application:
    name: widget-service
  cloud:
    consul:
      host: ${CONSUL_SERVER_URL:localhost}
      port: 8500
      config:
        fail-fast: true
        format: yaml

Deployment Using Docker Compose

We are almost ready to deploy the Widget service instances and the MongoDB instance (also considered a ‘service’ by Docker), in Docker containers, to the Docker swarm cluster. The Docker Compose file is written using version 3 of the Docker Compose specification. It takes advantage of some of the specification’s new features.

version: '3.0'

services:
  widget:
    image: garystafford/microservice-docker-demo-widget:latest
    depends_on:
    - widget_stack_mongodb
    hostname: widget
    ports:
    - 8030:8030/tcp
    networks:
    - widget_overlay_net
    deploy:
      mode: global
      placement:
        constraints: [node.role == worker]
    environment:
    - "CONSUL_SERVER_URL=${CONSUL_SERVER}"
    - "SERVICE_NAME=widget-service"
    - "SERVICE_TAGS=service"
    command: "java -Dspring.profiles.active=${WIDGET_PROFILE} -Djava.security.egd=file:/dev/./urandom -jar widget/widget-service.jar"

  mongodb:
    image: mongo:latest
    command:
    - --smallfiles
    hostname: mongodb
    ports:
    - 27017:27017/tcp
    networks:
    - widget_overlay_net
    volumes:
    - widget_data_vol:/data/db
    deploy:
      replicas: 1
      placement:
        constraints: [node.role == worker]
    environment:
    - "SERVICE_NAME=mongodb"
    - "SERVICE_TAGS=database"

networks:
  widget_overlay_net:
    external: true

volumes:
  widget_data_vol:
    external: true

External Container Volumes and Network

Note the external volume, widget_data_vol, which will be mounted to the MongoDB container, to the /data/db directory. The volume must be created on each host in the swarm cluster, which may contain the MongoDB instance.

Also, note the external overlay network, widget_overlay_net, which will be used by all the service containers in the service stack to communicate with each other. These must be created before deploying our services.

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

for vm in ${vms[@]}

do

docker-machine env ${vm}

eval $(docker-machine env ${vm})

docker volume create --name=widget_data_vol

echo "Volume created: ${vm}..."

done

We should be able to view the new volume on any of the swarm Worker hosts, using the docker volume ls command.

$ docker volume ls
DRIVER  VOLUME NAME
local   widget_data_vol

The network is created once, for the whole swarm cluster.

docker-machine env manager1
eval $(docker-machine env manager1)

docker network create \
  --driver overlay \
  --subnet=10.0.0.0/16 \
  --ip-range=10.0.11.0/24 \
  --opt encrypted \
  --attachable=true \
  widget_overlay_net

We can view the new overlay network using the docker network ls command.

$ docker network ls
NETWORK ID    NAME                DRIVER   SCOPE
03bcf76d3cc4  bridge              bridge   local
533285df0ce8  docker_gwbridge     bridge   local
1f627d848737  host                host     local
6wdtuhwpuy4f  ingress             overlay  swarm
b8a1f277067f  none                null     local
4hy77vlnpkkt  widget_overlay_net  overlay  swarm

Deploying the Services

With the profiles loaded into Consul, and the overlay network and data volumes created on the hosts, we can deploy the Widget service instances and MongoDB service instance. We will assign the Consul cluster Leader’s IP address as the CONSUL_SERVER variable. We will assign the Spring profile, docker-local, to the WIDGET_PROFILE variable. Both these variables are used by the Widget service’s Docker Compose file.

docker-machine env manager1

eval $(docker-machine env manager1)

export CONSUL_SERVER=$(docker-machine ip $(docker node ls | grep Leader | awk '{print $3}'))

export WIDGET_PROFILE=docker-local

docker stack deploy --compose-file=docker-compose.yml widget_stack

The Docker images, used to instantiate the service’s Docker containers, must be pulled from Docker Hub, by each swarm host. Consequently, the initial deployment process can take up to several minutes, depending on your Internet connection.

After deployment, using the docker stack ls command, we should observe that the widget_stack stack is deployed to the swarm cluster with two services.

$ docker stack ls
NAME          SERVICES
widget_stack  2

After deployment, using the docker stack ps widget_stack command, we should observe that all the expected services in the widget_stack stack are running. Note this command also shows us where the services are running.

$ docker stack ps widget_stack
ID            NAME                                           IMAGE                                                NODE     DESIRED STATE  CURRENT STATE          ERROR  PORTS
qic4j1pl6n4l  widget_stack_widget.hhn1l3qhej0ajmj85gp8qhpai  garystafford/microservice-docker-demo-widget:latest  worker3  Running        Running 4 minutes ago
zrbnyoikncja  widget_stack_widget.nsy55qzavzxv7xmanraijdw4i  garystafford/microservice-docker-demo-widget:latest  worker2  Running        Running 4 minutes ago
81z3ejqietqf  widget_stack_widget.n89upik5v2xrjjaeuy9d4jybl  garystafford/microservice-docker-demo-widget:latest  worker1  Running        Running 4 minutes ago
nx8dxlib3wyk  widget_stack_mongodb.1                         mongo:latest                                         worker1  Running        Running 4 minutes ago

Using the docker service ls command, we should observe that all the expected service instances (replicas) are running, including all the widget_stack’s services and the three instances of the swarm-visualizer service.

$ docker service ls
ID            NAME                  MODE        REPLICAS  IMAGE
almmuqqe9v55  swarm-visualizer      global      3/3       manomarks/visualizer:latest
i9my74tl536n  widget_stack_widget   global      0/3       garystafford/microservice-docker-demo-widget:latest
ju2t0yjv9ily  widget_stack_mongodb  replicated  1/1       mongo:latest

Since it may take a few moments for the widget_stack’s services to come up, you may need to re-run the command until you see all expected replicas running.

$ docker service ls
ID            NAME                  MODE        REPLICAS  IMAGE
almmuqqe9v55  swarm-visualizer      global      3/3       manomarks/visualizer:latest
i9my74tl536n  widget_stack_widget   global      3/3       garystafford/microservice-docker-demo-widget:latest
ju2t0yjv9ily  widget_stack_mongodb  replicated  1/1       mongo:latest
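Rather than re-running the command by hand, the replica check can be scripted. The following is a sketch only: all_replicas_ready is a hypothetical helper, and it assumes each service row of docker service ls contains a REPLICAS value of the form running/desired, as in the output above.

```shell
# Hypothetical helper: succeed (exit 0) only when every service listed in
# `docker service ls` output (read from stdin) has running == desired replicas.
all_replicas_ready() {
  awk '
    NR > 1 {
      # Find the field that looks like "x/y" instead of relying on its position.
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^[0-9]+\/[0-9]+$/) {
          split($i, r, "/")
          if (r[1] != r[2]) bad = 1
        }
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

A simple polling loop could then be `until docker service ls | all_replicas_ready; do sleep 5; done`. The five-second interval is an arbitrary choice.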

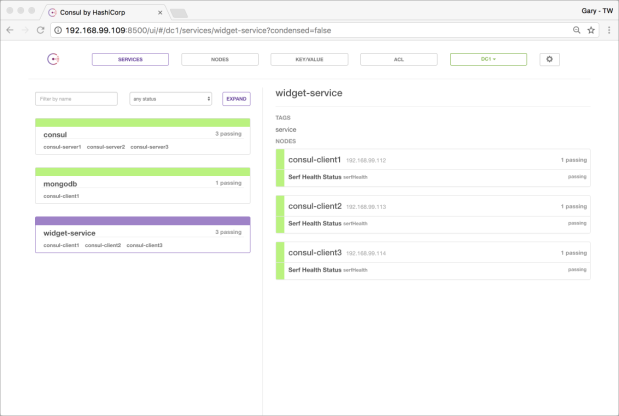

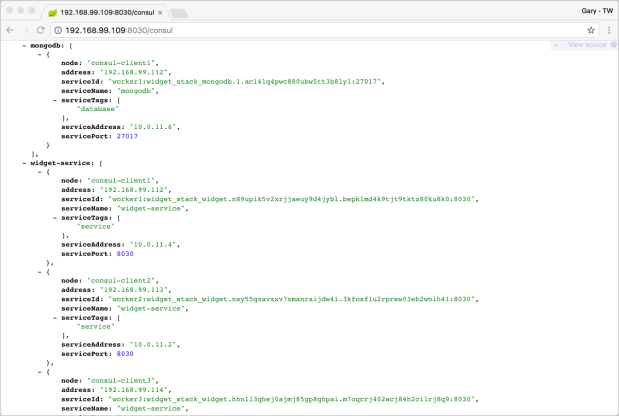

If you see 3 of 3 replicas of the Widget service running, this is a good sign everything is working! We can confirm this by checking back in with the Consul UI. We should see the three Widget services and the single instance of MongoDB. They were all registered by Registrator. For clarity, we purposefully did not register the Swarm Visualizer instances with Consul, using Registrator’s "SERVICE_IGNORE=true" environment variable. Registrator will also not register itself with Consul.

We can also view the Widget stack’s services by revisiting the Swarm Visualizer, on any of the Docker swarm cluster Managers’ IP addresses, on port 5001.

Spring Boot Actuator

The most reliable way to confirm that the Widget service instances did indeed load their configuration from Consul, is to check the Spring Boot Actuator Environment endpoint for any of the Widget instances. As shown below, all configuration is loaded from the default profile, except for two configuration items, which override the default profile values and are loaded from the active docker-local Spring profile.

Consul Endpoint

There is yet another way to confirm Consul is working properly with our services, without accessing the Consul UI. This ability is provided by Spring Boot Actuator’s Consul endpoint. If the Spring Boot service has a dependency on Spring Cloud Consul Config, this endpoint will be available. It displays useful information about the Consul cluster and the services which have been registered with Consul.

Cleaning Up the Swarm

If you want to start over again, without destroying the Docker swarm cluster, you may use the following commands to delete all the stacks, services, stray containers, and unused images, networks, and volumes. The Docker swarm cluster and all Docker images pulled to the VMs will be left intact.

# remove all stacks and services

docker-machine env manager1

eval $(docker-machine env manager1)

for stack in $(docker stack ls | awk 'NR > 1 {print $1}'); do docker stack rm ${stack}; done

for service in $(docker service ls -q); do docker service rm ${service}; done

# remove all containers, networks, and volumes

vms=( "manager1" "manager2" "manager3"

"worker1" "worker2" "worker3" )

for vm in ${vms[@]}

do

docker-machine env ${vm}

eval $(docker-machine env ${vm})

docker system prune -f

docker stop $(docker ps -a -q)

docker rm -f $(docker ps -a -q)

done

Conclusion

We have barely scratched the surface of the features and capabilities of Docker swarm mode or Consul. However, the post did demonstrate several key concepts, critical to configuring, deploying, and managing a modern, distributed, containerized service application platform. These concepts included cluster management, service discovery, service orchestration, distributed configuration, hierarchical key/value stores, and distributed and highly-available systems.

This post’s example was designed for demonstration purposes only and meant to simulate a typical Development or Test environment. Although the swarm cluster, Consul cluster, and the Widget service instances were deployed in a distributed and highly available configuration, this post’s example is far from being production-ready. Some things that would be considered, if you were to make this more production-like, include:

- MongoDB database is a single point of failure. It should be deployed in a sharded and clustered configuration.

- The swarm nodes were deployed to a single datacenter. For redundancy, the nodes should be spread across multiple physical hypervisors, separate availability zones and/or geographically separate datacenters (regions).

- The example needs centralized logging, monitoring, and alerting, to better understand and react to how the swarm, Docker containers, and services are performing.

- Most importantly, we made no attempt to secure the services, containers, data, network, or hosts. Security is a critical component for moving this example to Production.

All opinions in this post are my own and not necessarily the views of my current employer or their clients.

#1 by Alexander G Castañeda E on April 22, 2017 - 12:17 pm

This is one of the best posts to start developing microservices!

Thanks Gary for your time in doing this…

#3 by alexrun on April 22, 2017 - 12:23 pm

I will also suggest to include into the production-like list:

Modify the configuration to use an internal overlay network instead of the host to be able to differentiate each instance of a service on the swarm…

#4 by BorisL on April 29, 2017 - 3:23 am

Thanks for the great post, I followed all the steps and it works perfectly with the DigitalOcean driver.

However, when attempting to do the same on my local machine using virtualbox, it gets stuck at the command `docker swarm init --advertise-addr…`. Any idea why this fails with virtualbox?