Posts Tagged VirtualBox

Spring Music Revisited: Java-Spring-MongoDB Web App with Docker 1.12

Posted by Gary A. Stafford in Build Automation, Continuous Delivery, DevOps, Enterprise Software Development, Java Development, Software Development on August 7, 2016

Build, test, deploy, and monitor a multi-container, MongoDB-backed, Java Spring web application, using the new Docker 1.12.

Introduction

This post and the post’s example project represent an update to a previous post, Build and Deploy a Java-Spring-MongoDB Application using Docker. This new post incorporates many improvements made in Docker 1.12, including the use of the new Docker Compose v2 YAML format. The post’s project was also updated to use Filebeat with ELK, as opposed to Logspout, which was used previously.

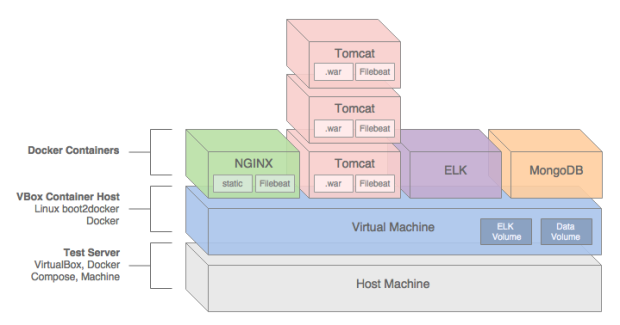

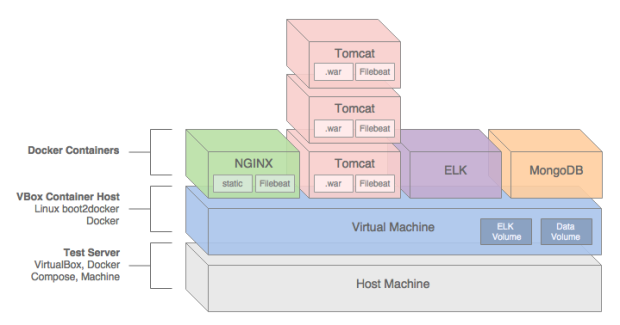

In this post, we will demonstrate how to build, test, deploy, and manage a Java Spring web application, hosted on Apache Tomcat, load-balanced by NGINX, monitored by ELK with Filebeat, and all containerized with Docker.

We will use a sample Java Spring application, Spring Music, available on GitHub from Cloud Foundry. The Spring Music sample record album collection application was originally designed to demonstrate the use of database services on Cloud Foundry, using the Spring Framework. Instead of Cloud Foundry, we will host the Spring Music application locally, using Docker on VirtualBox, and optionally on AWS.

All files necessary to build this project are stored on the docker_v2 branch of the garystafford/spring-music-docker repository on GitHub. The Spring Music source code is stored on the springmusic_v2 branch of the garystafford/spring-music repository, also on GitHub.

Application Architecture

The Java Spring Music application stack contains the following technologies: Java, Spring Framework, AngularJS, Bootstrap, jQuery, NGINX, Apache Tomcat, MongoDB, the ELK Stack, and Filebeat. Testing frameworks include the Spring MVC Test Framework, Mockito, Hamcrest, and JUnit.

A few changes were made to the original Spring Music application to make it work for this demonstration, including:

- Move from Java 1.7 to 1.8 (including newer Tomcat version)

- Add unit tests for Continuous Integration demonstration purposes

- Modify MongoDB configuration class to work with non-local, containerized MongoDB instances

- Add Gradle

warNoStatictask to build WAR without static assets - Add Gradle

zipStatictask to ZIP up the application’s static assets for deployment to NGINX - Add Gradle

zipGetVersiontask with a versioning scheme for build artifacts - Add

context.xmlfile andMANIFEST.MFfile to the WAR file - Add Log4j

RollingFileAppenderappender to send log entries to Filebeat - Update versions of several dependencies, including Gradle, Spring, and Tomcat

We will use the following technologies to build, publish, deploy, and host the Java Spring Music application: Gradle, git, GitHub, Travis CI, Oracle VirtualBox, Docker, Docker Compose, Docker Machine, Docker Hub, and optionally, Amazon Web Services (AWS).

NGINX

To increase performance, the Spring Music web application’s static content will be hosted by NGINX. The application’s WAR file will be hosted by Apache Tomcat 8.5.4. Requests for non-static content will be proxied through NGINX on the front-end, to a set of three load-balanced Tomcat instances on the back-end. To further increase application performance, NGINX will also be configured for browser caching of the static content. In many enterprise environments, the use of a Java EE application server, like Tomcat, is still not uncommon.

Reverse proxying and caching are configured thought NGINX’s default.conf file, in the server configuration section:

| server { | |

| listen 80; | |

| server_name proxy; | |

| location ~* \/assets\/(css|images|js|template)\/* { | |

| root /usr/share/nginx/; | |

| expires max; | |

| add_header Pragma public; | |

| add_header Cache-Control "public, must-revalidate, proxy-revalidate"; | |

| add_header Vary Accept-Encoding; | |

| access_log off; | |

| } |

The three Tomcat instances will be manually configured for load-balancing using NGINX’s default round-robin load-balancing algorithm. This is configured through the default.conf file, in the upstream configuration section:

| upstream backend { | |

| server music_app_1:8080; | |

| server music_app_2:8080; | |

| server music_app_3:8080; | |

| } |

Client requests are received through port 80 on the NGINX server. NGINX redirects requests, which are not for non-static assets, to one of the three Tomcat instances on port 8080.

MongoDB

The Spring Music application was designed to work with a number of data stores, including MySQL, Postgres, Oracle, MongoDB, Redis, and H2, an in-memory Java SQL database. Given the choice of both SQL and NoSQL databases, we will select MongoDB.

The Spring Music application, hosted by Tomcat, will store and modify record album data in a single instance of MongoDB. MongoDB will be populated with a collection of album data from a JSON file, when the Spring Music application first creates the MongoDB database instance.

ELK

Lastly, the ELK Stack with Filebeat, will aggregate NGINX, Tomcat, and Java Log4j log entries, providing debugging and analytics to our demonstration. A similar method for aggregating logs, using Logspout instead of Filebeat, can be found in this previous post.

Continuous Integration

In this post’s example, two build artifacts, a WAR file for the application and ZIP file for the static web content, are built automatically by Travis CI, whenever source code changes are pushed to the springmusic_v2 branch of the garystafford/spring-music repository on GitHub.

Following a successful build and a small number of unit tests, Travis CI pushes the build artifacts to the build-artifacts branch on the same GitHub project. The build-artifacts branch acts as a pseudo binary repository for the project, much like JFrog’s Artifactory. These artifacts are used later by Docker to build the project’s immutable Docker images and containers.

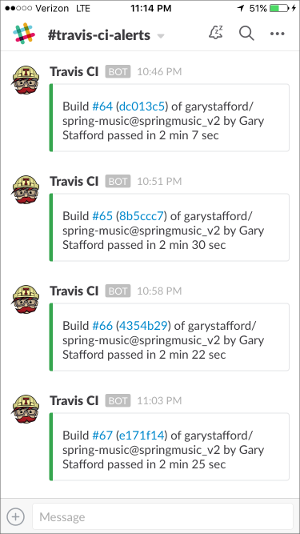

Build Notifications

Travis CI pushes build notifications to a Slack channel, which eliminates the need to actively monitor Travis CI.

Automation Scripting

The .travis.yaml file, custom gradle.build Gradle tasks, and the deploy_travisci.sh script handles the Travis CI automation described, above.

Travis CI .travis.yaml file:

| language: java | |

| jdk: oraclejdk8 | |

| before_install: | |

| - chmod +x gradlew | |

| before_deploy: | |

| - chmod ugo+x deploy_travisci.sh | |

| script: | |

| - "./gradlew clean build" | |

| - "./gradlew warNoStatic warCopy zipGetVersion zipStatic" | |

| - sh ./deploy_travisci.sh | |

| env: | |

| global: | |

| - GH_REF: github.com/garystafford/spring-music.git | |

| - secure: <GH_TOKEN_secure_hash_here> | |

| - secure: <COMMIT_AUTHOR_EMAIL_secure_hash_here> | |

| notifications: | |

| slack: | |

| - secure: <SLACK_secure_hash_here> |

Custom gradle.build tasks:

| // new Gradle build tasks | |

| task warNoStatic(type: War) { | |

| // omit the version from the war file name | |

| version = '' | |

| exclude '**/assets/**' | |

| manifest { | |

| attributes | |

| 'Manifest-Version': '1.0', | |

| 'Created-By': currentJvm, | |

| 'Gradle-Version': GradleVersion.current().getVersion(), | |

| 'Implementation-Title': archivesBaseName + '.war', | |

| 'Implementation-Version': artifact_version, | |

| 'Implementation-Vendor': 'Gary A. Stafford' | |

| } | |

| } | |

| task warCopy(type: Copy) { | |

| from 'build/libs' | |

| into 'build/distributions' | |

| include '**/*.war' | |

| } | |

| task zipGetVersion (type: Task) { | |

| ext.versionfile = | |

| new File("${projectDir}/src/main/webapp/assets/buildinfo.properties") | |

| versionfile.text = 'build.version=' + artifact_version | |

| } | |

| task zipStatic(type: Zip) { | |

| from 'src/main/webapp/assets' | |

| appendix = 'static' | |

| version = '' | |

| } |

The deploy.sh file:

| #!/bin/bash | |

| set -e | |

| cd build/distributions | |

| git init | |

| git config user.name "travis-ci" | |

| git config user.email "${COMMIT_AUTHOR_EMAIL}" | |

| git add . | |

| git commit -m "Deploy Travis CI Build #${TRAVIS_BUILD_NUMBER} artifacts to GitHub" | |

| git push --force --quiet "https://${GH_TOKEN}@${GH_REF}" master:build-artifacts > /dev/null 2>&1 |

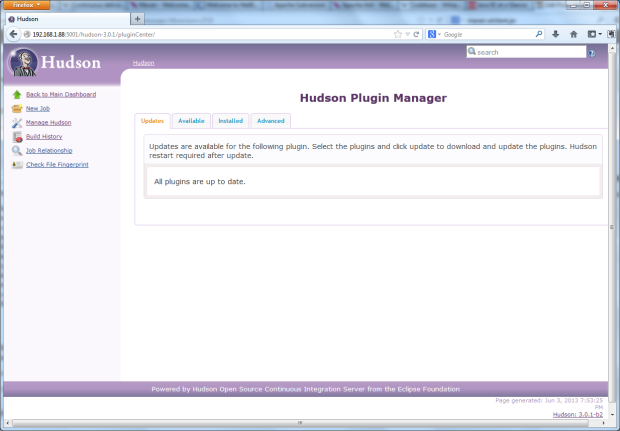

You can easily replicate the project’s continuous integration automation using your choice of toolchains. GitHub or BitBucket are good choices for distributed version control. For continuous integration and deployment, I recommend Travis CI, Semaphore, Codeship, or Jenkins. Couple those with a good persistent chat application, such as Glider Labs’ Slack or Atlassian’s HipChat.

Building the Docker Environment

Make sure VirtualBox, Docker, Docker Compose, and Docker Machine, are installed and running. At the time of this post, I have the following versions of software installed on my Mac:

- Mac OS X 10.11.6

- VirtualBox 5.0.26

- Docker 1.12.1

- Docker Compose 1.8.0

- Docker Machine 0.8.1

To build the project’s VirtualBox VM, Docker images, and Docker containers, execute the build script, using the following command: sh ./build_project.sh. A build script is useful when working with CI/CD automation tools, such as Jenkins CI or ThoughtWorks go. However, to understand the build process, I suggest first running the individual commands, locally.

| #!/bin/sh | |

| set -ex | |

| # clone project | |

| git clone -b docker_v2 --single-branch \ | |

| https://github.com/garystafford/spring-music-docker.git music \ | |

| && cd "$_" | |

| # provision VirtualBox VM | |

| docker-machine create --driver virtualbox springmusic | |

| # set new environment | |

| docker-machine env springmusic \ | |

| && eval "$(docker-machine env springmusic)" | |

| # mount a named volume on host to store mongo and elk data | |

| # ** assumes your project folder is 'music' ** | |

| docker volume create --name music_data | |

| docker volume create --name music_elk | |

| # create bridge network for project | |

| # ** assumes your project folder is 'music' ** | |

| docker network create -d bridge music_net | |

| # build images and orchestrate start-up of containers (in this order) | |

| docker-compose -p music up -d elk && sleep 15 \ | |

| && docker-compose -p music up -d mongodb && sleep 15 \ | |

| && docker-compose -p music up -d app \ | |

| && docker-compose scale app=3 && sleep 15 \ | |

| && docker-compose -p music up -d proxy && sleep 15 | |

| # optional: configure local DNS resolution for application URL | |

| #echo "$(docker-machine ip springmusic) springmusic.com" | sudo tee --append /etc/hosts | |

| # run a simple connectivity test of application | |

| for i in {1..9}; do curl -I $(docker-machine ip springmusic); done |

Deploying to AWS

By simply changing the Docker Machine driver to AWS EC2 from VirtualBox, and providing your AWS credentials, the springmusic environment may also be built on AWS.

Build Process

Docker Machine provisions a single VirtualBox springmusic VM on which host the project’s containers. VirtualBox provides a quick and easy solution that can be run locally for initial development and testing of the application.

Next, the script creates a Docker data volume and project-specific Docker bridge network.

Next, using the project’s individual Dockerfiles, Docker Compose pulls base Docker images from Docker Hub for NGINX, Tomcat, ELK, and MongoDB. Project-specific immutable Docker images are then built for NGINX, Tomcat, and MongoDB. While constructing the project-specific Docker images for NGINX and Tomcat, the latest Spring Music build artifacts are pulled and installed into the corresponding Docker images.

Docker Compose builds and deploys (6) containers onto the VirtualBox VM: (1) NGINX, (3) Tomcat, (1) MongoDB, and (1) ELK.

The NGINX Dockerfile:

| # NGINX image with build artifact | |

| FROM nginx:latest | |

| MAINTAINER Gary A. Stafford <garystafford@rochester.rr.com> | |

| ENV REFRESHED_AT 2016-09-17 | |

| ENV GITHUB_REPO https://github.com/garystafford/spring-music/raw/build-artifacts | |

| ENV STATIC_FILE spring-music-static.zip | |

| RUN apt-get update -qq \ | |

| && apt-get install -qqy curl wget unzip nano \ | |

| && apt-get clean \ | |

| \ | |

| && wget -O /tmp/${STATIC_FILE} ${GITHUB_REPO}/${STATIC_FILE} \ | |

| && unzip /tmp/${STATIC_FILE} -d /usr/share/nginx/assets/ | |

| COPY default.conf /etc/nginx/conf.d/default.conf | |

| # tweak nginx image set-up, remove log symlinks | |

| RUN rm /var/log/nginx/access.log /var/log/nginx/error.log | |

| # install Filebeat | |

| ENV FILEBEAT_VERSION=filebeat_1.2.3_amd64.deb | |

| RUN curl -L -O https://download.elastic.co/beats/filebeat/${FILEBEAT_VERSION} \ | |

| && dpkg -i ${FILEBEAT_VERSION} \ | |

| && rm ${FILEBEAT_VERSION} | |

| # configure Filebeat | |

| ADD filebeat.yml /etc/filebeat/filebeat.yml | |

| # CA cert | |

| RUN mkdir -p /etc/pki/tls/certs | |

| ADD logstash-beats.crt /etc/pki/tls/certs/logstash-beats.crt | |

| # start Filebeat | |

| ADD ./start.sh /usr/local/bin/start.sh | |

| RUN chmod +x /usr/local/bin/start.sh | |

| CMD [ "/usr/local/bin/start.sh" ] |

The Tomcat Dockerfile:

| # Apache Tomcat image with build artifact | |

| FROM tomcat:8.5.4-jre8 | |

| MAINTAINER Gary A. Stafford <garystafford@rochester.rr.com> | |

| ENV REFRESHED_AT 2016-09-17 | |

| ENV GITHUB_REPO https://github.com/garystafford/spring-music/raw/build-artifacts | |

| ENV APP_FILE spring-music.war | |

| ENV TERM xterm | |

| ENV JAVA_OPTS -Djava.security.egd=file:/dev/./urandom | |

| RUN apt-get update -qq \ | |

| && apt-get install -qqy curl wget \ | |

| && apt-get clean \ | |

| \ | |

| && touch /var/log/spring-music.log \ | |

| && chmod 666 /var/log/spring-music.log \ | |

| \ | |

| && wget -q -O /usr/local/tomcat/webapps/ROOT.war ${GITHUB_REPO}/${APP_FILE} \ | |

| && mv /usr/local/tomcat/webapps/ROOT /usr/local/tomcat/webapps/_ROOT | |

| COPY tomcat-users.xml /usr/local/tomcat/conf/tomcat-users.xml | |

| # install Filebeat | |

| ENV FILEBEAT_VERSION=filebeat_1.2.3_amd64.deb | |

| RUN curl -L -O https://download.elastic.co/beats/filebeat/${FILEBEAT_VERSION} \ | |

| && dpkg -i ${FILEBEAT_VERSION} \ | |

| && rm ${FILEBEAT_VERSION} | |

| # configure Filebeat | |

| ADD filebeat.yml /etc/filebeat/filebeat.yml | |

| # CA cert | |

| RUN mkdir -p /etc/pki/tls/certs | |

| ADD logstash-beats.crt /etc/pki/tls/certs/logstash-beats.crt | |

| # start Filebeat | |

| ADD ./start.sh /usr/local/bin/start.sh | |

| RUN chmod +x /usr/local/bin/start.sh | |

| CMD [ "/usr/local/bin/start.sh" ] |

Docker Compose v2 YAML

This post was recently updated for Docker 1.12, and to use Docker Compose v2 YAML file format. The post’s docker-compose.yml takes advantage of improvements in Docker 1.12 and Docker Compose v2 YAML. Improvements to the YAML file include eliminating the need to link containers and expose ports, and the addition of named networks and volumes.

| version: '2' | |

| services: | |

| proxy: | |

| build: nginx/ | |

| ports: | |

| - 80:80 | |

| networks: | |

| - net | |

| depends_on: | |

| - app | |

| hostname: proxy | |

| container_name: proxy | |

| app: | |

| build: tomcat/ | |

| ports: | |

| - 8080 | |

| networks: | |

| - net | |

| depends_on: | |

| - mongodb | |

| hostname: app | |

| mongodb: | |

| build: mongodb/ | |

| ports: | |

| - 27017:27017 | |

| networks: | |

| - net | |

| depends_on: | |

| - elk | |

| hostname: mongodb | |

| container_name: mongodb | |

| volumes: | |

| - music_data:/data/db | |

| - music_data:/data/configdb | |

| elk: | |

| image: sebp/elk:latest | |

| ports: | |

| - 5601:5601 | |

| - 9200:9200 | |

| - 5044:5044 | |

| - 5000:5000 | |

| networks: | |

| - net | |

| volumes: | |

| - music_elk:/var/lib/elasticsearch | |

| hostname: elk | |

| container_name: elk | |

| volumes: | |

| music_data: | |

| external: true | |

| music_elk: | |

| external: true | |

| networks: | |

| net: | |

| driver: bridge |

The Results

Below are the results of building the project.

| # Resulting Docker Machine VirtualBox VM: | |

| $ docker-machine ls | |

| NAME ACTIVE DRIVER STATE URL SWARM DOCKER ERRORS | |

| springmusic * virtualbox Running tcp://192.168.99.100:2376 v1.12.1 | |

| # Resulting external volume: | |

| $ docker volume ls | |

| DRIVER VOLUME NAME | |

| local music_data | |

| local music_elk | |

| # Resulting bridge network: | |

| $ docker network ls | |

| NETWORK ID NAME DRIVER SCOPE | |

| f564dfa1b440 music_net bridge local | |

| # Resulting Docker images - (4) base images and (3) project images: | |

| $ docker images | |

| REPOSITORY TAG IMAGE ID CREATED SIZE | |

| music_proxy latest 7a8dd90bcf32 About an hour ago 250.2 MB | |

| music_app latest c93c713d03b8 About an hour ago 393 MB | |

| music_mongodb latest fbcbbe9d4485 25 hours ago 366.4 MB | |

| tomcat 8.5.4-jre8 98cc750770ba 2 days ago 334.5 MB | |

| mongo latest 48b8b08dca4d 2 days ago 366.4 MB | |

| nginx latest 4efb2fcdb1ab 10 days ago 183.4 MB | |

| sebp/elk latest 07a3e78b01f5 13 days ago 884.5 MB | |

| # Resulting (6) Docker containers | |

| $ docker ps | |

| CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES | |

| b33922767517 music_proxy "/usr/local/bin/start" 3 hours ago Up 13 minutes 0.0.0.0:80->80/tcp, 443/tcp proxy | |

| e16d2372f2df music_app "/usr/local/bin/start" 3 hours ago Up About an hour 0.0.0.0:32770->8080/tcp music_app_3 | |

| 6b7accea7156 music_app "/usr/local/bin/start" 3 hours ago Up About an hour 0.0.0.0:32769->8080/tcp music_app_2 | |

| 2e94f766df1b music_app "/usr/local/bin/start" 3 hours ago Up About an hour 0.0.0.0:32768->8080/tcp music_app_1 | |

| 71f8dc574148 sebp/elk:latest "/usr/local/bin/start" 3 hours ago Up About an hour 0.0.0.0:5000->5000/tcp, 0.0.0.0:5044->5044/tcp, 0.0.0.0:5601->5601/tcp, 0.0.0.0:9200->9200/tcp, 9300/tcp elk | |

| f7e7d1af7cca music_mongodb "/entrypoint.sh mongo" 20 hours ago Up About an hour 0.0.0.0:27017->27017/tcp mongodb |

Testing the Application

Below are partial results of the curl test, hitting the NGINX endpoint. Note the different IP addresses in the Upstream-Address field between requests. This test proves NGINX’s round-robin load-balancing is working across the three Tomcat application instances: music_app_1, music_app_2, and music_app_3.

Also, note the sharp decrease in the Request-Time between the first three requests and subsequent three requests. The Upstream-Response-Time to the Tomcat instances doesn’t change, yet the total Request-Time is much shorter, due to caching of the application’s static assets by NGINX.

| for i in {1..6}; do curl -I $(docker-machine ip springmusic);done | |

| HTTP/1.1 200 | |

| Server: nginx/1.11.4 | |

| Date: Sat, 17 Sep 2016 18:33:50 GMT | |

| Content-Type: text/html;charset=ISO-8859-1 | |

| Content-Length: 2094 | |

| Connection: keep-alive | |

| Accept-Ranges: bytes | |

| ETag: W/"2094-1473924940000" | |

| Last-Modified: Thu, 15 Sep 2016 07:35:40 GMT | |

| Content-Language: en | |

| Request-Time: 0.575 | |

| Upstream-Address: 172.18.0.4:8080 | |

| Upstream-Response-Time: 1474137230.048 | |

| HTTP/1.1 200 | |

| Server: nginx/1.11.4 | |

| Date: Sat, 17 Sep 2016 18:33:51 GMT | |

| Content-Type: text/html;charset=ISO-8859-1 | |

| Content-Length: 2094 | |

| Connection: keep-alive | |

| Accept-Ranges: bytes | |

| ETag: W/"2094-1473924940000" | |

| Last-Modified: Thu, 15 Sep 2016 07:35:40 GMT | |

| Content-Language: en | |

| Request-Time: 0.711 | |

| Upstream-Address: 172.18.0.5:8080 | |

| Upstream-Response-Time: 1474137230.865 | |

| HTTP/1.1 200 | |

| Server: nginx/1.11.4 | |

| Date: Sat, 17 Sep 2016 18:33:52 GMT | |

| Content-Type: text/html;charset=ISO-8859-1 | |

| Content-Length: 2094 | |

| Connection: keep-alive | |

| Accept-Ranges: bytes | |

| ETag: W/"2094-1473924940000" | |

| Last-Modified: Thu, 15 Sep 2016 07:35:40 GMT | |

| Content-Language: en | |

| Request-Time: 0.326 | |

| Upstream-Address: 172.18.0.6:8080 | |

| Upstream-Response-Time: 1474137231.812 | |

| # assets now cached... | |

| HTTP/1.1 200 | |

| Server: nginx/1.11.4 | |

| Date: Sat, 17 Sep 2016 18:33:53 GMT | |

| Content-Type: text/html;charset=ISO-8859-1 | |

| Content-Length: 2094 | |

| Connection: keep-alive | |

| Accept-Ranges: bytes | |

| ETag: W/"2094-1473924940000" | |

| Last-Modified: Thu, 15 Sep 2016 07:35:40 GMT | |

| Content-Language: en | |

| Request-Time: 0.012 | |

| Upstream-Address: 172.18.0.4:8080 | |

| Upstream-Response-Time: 1474137233.111 | |

| HTTP/1.1 200 | |

| Server: nginx/1.11.4 | |

| Date: Sat, 17 Sep 2016 18:33:53 GMT | |

| Content-Type: text/html;charset=ISO-8859-1 | |

| Content-Length: 2094 | |

| Connection: keep-alive | |

| Accept-Ranges: bytes | |

| ETag: W/"2094-1473924940000" | |

| Last-Modified: Thu, 15 Sep 2016 07:35:40 GMT | |

| Content-Language: en | |

| Request-Time: 0.017 | |

| Upstream-Address: 172.18.0.5:8080 | |

| Upstream-Response-Time: 1474137233.350 | |

| HTTP/1.1 200 | |

| Server: nginx/1.11.4 | |

| Date: Sat, 17 Sep 2016 18:33:53 GMT | |

| Content-Type: text/html;charset=ISO-8859-1 | |

| Content-Length: 2094 | |

| Connection: keep-alive | |

| Accept-Ranges: bytes | |

| ETag: W/"2094-1473924940000" | |

| Last-Modified: Thu, 15 Sep 2016 07:35:40 GMT | |

| Content-Language: en | |

| Request-Time: 0.013 | |

| Upstream-Address: 172.18.0.6:8080 | |

| Upstream-Response-Time: 1474137233.594 |

Spring Music Application Links

Assuming the springmusic VM is running at 192.168.99.100, the following links can be used to access various project endpoints. Note the (3) Tomcat instances each map to randomly exposed ports. These ports are not required by NGINX, which maps to port 8080 for each instance. The port is only required if you want access to the Tomcat Web Console. The port, shown below, 32771, is merely used as an example.

- Spring Music Application: 192.168.99.100

- NGINX Status: 192.168.99.100/nginx_status

- Tomcat Web Console – music_app_1*: 192.168.99.100:32771/manager

- Environment Variables – music_app_1: 192.168.99.100:32771/env

- Album List (RESTful endpoint) – music_app_1: 192.168.99.100:32771/albums

- Elasticsearch Info: 192.168.99.100:9200

- Elasticsearch Status: 192.168.99.100:9200/_status?pretty

- Kibana Web Console: 192.168.99.100:5601

* The Tomcat user name is admin and the password is t0mcat53rv3r.

Helpful Links

- Cloud Foundry’s Spring Music Example

- Getting Started with Gradle for Java

- Introduction to Gradle

- Spring Framework

- Understanding Nginx HTTP Proxying, Load Balancing, Buffering, and Caching

- Common conversion patterns for log4j’s PatternLayout

- Spring @PropertySource example

- Java log4j logging

TODOs

- Automate the Docker image build and publish processes

- Automate the Docker container build and deploy processes

- Automate post-deployment verification testing of project infrastructure

- Add Docker Swarm multi-host capabilities with overlay networking

- Update Spring Music with latest CF project revisions

- Include scripting example to stand-up project on AWS

- Add Consul and Consul Template for NGINX configuration

Automate the Provisioning and Configuration of HAProxy and an Apache Web Server Cluster Using Foreman

Posted by Gary A. Stafford in Bash Scripting, Build Automation, Continuous Delivery, DevOps on February 21, 2015

Use Vagrant, Foreman, and Puppet to provision and configure HAProxy as a reverse proxy, load-balancer for a cluster of Apache web servers.

Introduction

In this post, we will use several technologies, including Vagrant, Foreman, and Puppet, to provision and configure a basic load-balanced web server environment. In this environment, a single node with HAProxy will act as a reverse proxy and load-balancer for two identical Apache web server nodes. All three nodes will be provisioned and bootstrapped using Vagrant, from a Linux CentOS 6.5 Vagrant Box. Afterwards, Foreman, with Puppet, will then be used to install and configure the nodes with HAProxy and Apache, using a series of Puppet modules.

For this post, I will assume you already have running instances of Vagrant with the vagrant-hostmanager plugin, VirtualBox, and Foreman. If you are unfamiliar with Vagrant, the vagrant-hostmanager plugin, VirtualBox, Foreman, or Puppet, review my recent post, Installing Foreman and Puppet Agent on Multiple VMs Using Vagrant and VirtualBox. This post demonstrates how to install and configure Foreman. In addition, the post also demonstrates how to provision and bootstrap virtual machines using Vagrant and VirtualBox. Basically, we will be repeating many of this same steps in this post, with the addition of HAProxy, Apache, and some custom configuration Puppet modules.

All code for this post is available on GitHub. However, it been updated as of 8/23/2015. Changes were required to fix compatibility issues with the latest versions of Puppet 4.x and Foreman. Additionally, the version of CentOS on all VMs was updated from 6.6 to 7.1 and the version of Foreman was updated from 1.7 to 1.9.

Steps

Here is a high-level overview of our steps in this post:

- Provision and configure the three CentOS-based virtual machines (‘nodes’) using Vagrant and VirtualBox

- Install the HAProxy and Apache Puppet modules, from Puppet Forge, onto the Foreman server

- Install the custom HAProxy and Apache Puppet configuration modules, from GitHub, onto the Foreman server

- Import the four new module’s classes to Foreman’s Puppet class library

- Add the three new virtual machines (‘hosts’) to Foreman

- Configure the new hosts in Foreman, assigning the appropriate Puppet classes

- Apply the Foreman Puppet configurations to the new hosts

- Test HAProxy is working as a reverse and proxy load-balancer for the two Apache web server nodes

In this post, I will use the terms ‘virtual machine’, ‘machine’, ‘node’, ‘agent node’, and ‘host’, interchangeable, based on each software’s own nomenclature.

Provisioning

First, using the process described in the previous post, provision and bootstrap the three new virtual machines. The new machine’s Vagrant configuration is shown below. This should be added to the JSON configuration file. All code for the earlier post is available on GitHub.

{

"nodes": {

"haproxy.example.com": {

":ip": "192.168.35.101",

"ports": [],

":memory": 512,

":bootstrap": "bootstrap-node.sh"

},

"node01.example.com": {

":ip": "192.168.35.121",

"ports": [],

":memory": 512,

":bootstrap": "bootstrap-node.sh"

},

"node02.example.com": {

":ip": "192.168.35.122",

"ports": [],

":memory": 512,

":bootstrap": "bootstrap-node.sh"

}

}

}

After provisioning and bootstrapping, observe the three machines running in Oracle’s VM VirtualBox Manager.

Installing Puppet Forge Modules

The next task is to install the HAProxy and Apache Puppet modules on the Foreman server. This allows Foreman to have access to them. I chose the puppetlabs-haproxy HAProxy module and the puppetlabs-apache Apache modules. Both modules were authored by Puppet Labs, and are available on Puppet Forge.

The exact commands to install the modules onto your Foreman server will depend on your Foreman environment configuration. In my case, I used the following two commands to install the two Puppet Forge modules into my ‘Production’ environment’s module directory.

sudo puppet module install -i /etc/puppet/environments/production/modules puppetlabs-haproxy sudo puppet module install -i /etc/puppet/environments/production/modules puppetlabs-apache # confirm module installation puppet module list --modulepath /etc/puppet/environments/production/modules

Installing Configuration Modules

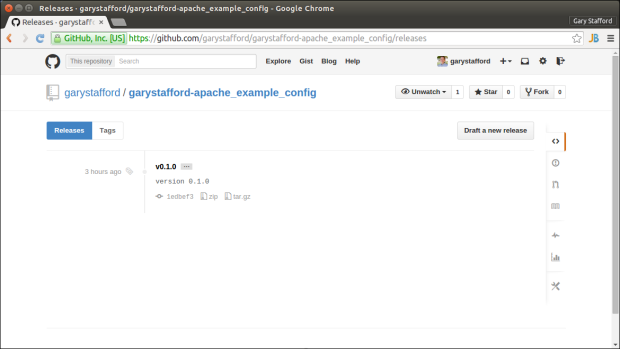

Next, install the HAProxy and Apache configuration Puppet modules on the Foreman server. Both modules are hosted on my GitHub repository. Both modules can be downloaded directly from GitHub and installed on the Foreman server, from the command line. Again, the exact commands to install the modules onto your Foreman server will depend on your Foreman environment configuration. In my case, I used the following two commands to install the two Puppet Forge modules into my ‘Production’ environment’s module directory. Also, notice I am currently downloading version 0.1.0 of both modules at the time of writing this post. Make sure to double-check for the latest versions of both modules before running the commands. Modify the commands if necessary.

# apache config module wget -N https://github.com/garystafford/garystafford-apache_example_config/archive/v0.1.0.tar.gz && \ sudo puppet module install -i /etc/puppet/environments/production/modules ~/v0.1.0.tar.gz --force # haproxy config module wget -N https://github.com/garystafford/garystafford-haproxy_node_config/archive/v0.1.0.tar.gz && \ sudo puppet module install -i /etc/puppet/environments/production/modules ~/v0.1.0.tar.gz --force # confirm module installation puppet module list --modulepath /etc/puppet/environments/production/modules

HAProxy Configuration

The HAProxy configuration module configures HAProxy’s /etc/haproxy/haproxy.cfg file. The single class in the module’s init.pp manifest is as follows:

class haproxy_node_config () inherits haproxy {

haproxy::listen { 'puppet00':

collect_exported => false,

ipaddress => '*',

ports => '80',

mode => 'http',

options => {

'option' => ['httplog'],

'balance' => 'roundrobin',

},

}

Haproxy::Balancermember <<| listening_service == 'puppet00' |>>

haproxy::balancermember { 'haproxy':

listening_service => 'puppet00',

server_names => ['node01.example.com', 'node02.example.com'],

ipaddresses => ['192.168.35.121', '192.168.35.122'],

ports => '80',

options => 'check',

}

}

The resulting /etc/haproxy/haproxy.cfg file will have the following configuration added. It defines the two Apache web server node’s hostname, ip addresses, and http port. The configuration also defines the load-balancing method, ‘round-robin‘ in our example. In this example, we are using layer 7 load-balancing (application layer – http), as opposed to layer 4 load-balancing (transport layer – tcp). Either method will work for this example. The Puppet Labs’ HAProxy module’s documentation on Puppet Forge and HAProxy’s own documentation are both excellent starting points to understand how to configure HAProxy. We are barely scraping the surface of HAProxy’s capabilities in this brief example.

listen puppet00 bind *:80 mode http balance roundrobin option httplog server node01.example.com 192.168.35.121:80 check server node02.example.com 192.168.35.122:80 check

Apache Configuration

The Apache configuration module creates default web page in Apache’s docroot directory, /var/www/html/index.html. The single class in the module’s init.pp manifest is as follows:

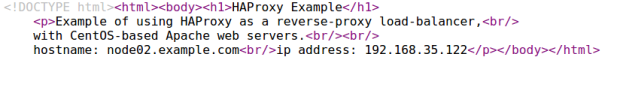

The resulting /var/www/html/index.html file will look like the following. Observe that the facter variables shown in the module manifest above have been replaced by the individual node’s hostname and ip address during application of the configuration by Puppet (ie. ${fqdn} became node01.example.com).

Both of these Puppet modules were created specifically to configure HAProxy and Apache for this post. Unlike published modules on Puppet Forge, these two modules are very simple, and don’t necessarily represent the best practices and patterns for authoring Puppet Forge modules.

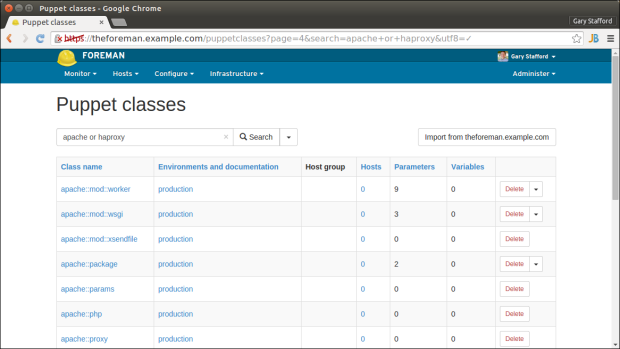

Importing into Foreman

After installing the new modules onto the Foreman server, we need to import them into Foreman. This is accomplished from the ‘Puppet classes’ tab, using the ‘Import from theforeman.example.com’ button. Once imported, the module classes are available to assign to host machines.

Add Host to Foreman

Next, add the three new hosts to Foreman. If you have questions on how to add the nodes to Foreman, start Puppet’s Certificate Signing Request (CSR) process on the hosts, signing the certificates, or other first time tasks, refer to the previous post. That post explains this process in detail.

Configure the Hosts

Next, configure the HAProxy and Apache nodes with the necessary Puppet classes. In addition to the base module classes and configuration classes, I recommend adding git and ntp modules to each of the new nodes. These modules were explained in the previous post. Refer to the screen-grabs below for correct module classes to add, specific to HAProxy and Apache.

Agent Configuration and Testing the System

Once configurations are retrieved and applied by Puppet Agent on each node, we can test our reverse proxy load-balanced environment. To start, open a browser and load haproxy.paychex.com. You should see one of the two pages below. Refresh the page a few times. You should observe HAProxy re-directing you to one Apache web server node, and then the other, using HAProxy’s round-robin algorithm. You can differentiate the Apache web servers by the hostname and ip address displayed on the web page.

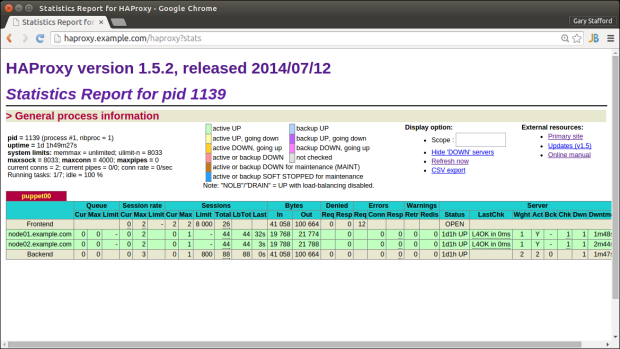

After hitting HAProxy’s URL several times successfully, view HAProxy’s built-in Statistics Report page at http://haproxy.example.com/haproxy?stats. Note below, each of the two Apache node has been hit 44 times each from HAProxy. This demonstrates the effectiveness of the reverse proxy and load-balancing features of HAProxy.

Accessing Apache Directly

If you are testing HAProxy from the same machine on which you created the virtual machines (VirtualBox host), you will likely be able to directly access either of the Apache web servers (ei. node02.example.com). The VirtualBox host file contains the ip addresses and hostnames of all three hosts. This DNS configuration was done automatically by the vagrant-hostmanager plugin. However, in an actual Production environment, only the HAProxy server’s hostname and ip address would be publicly accessible to a user. The two Apache nodes would sit behind a firewall, accessible only by the HAProxy server. HAProxy acts as a façade to public side of the network.

Testing Apache Host Failure

The main reason you would likely use a load-balancer is high-availability. With HAProxy acting as a load-balancer, we should be able to impair one of the two Apache nodes, without noticeable disruption. HAProxy will continue to serve content from the remaining Apache web server node.

Log into node01.example.com, using the following command, vagrant ssh node01.example.com. To simulate an impairment on ‘node01’, run the following command to stop Apache, sudo service httpd stop. Now, refresh the haproxy.example.com URL in your web browser. You should notice HAProxy is now redirecting all traffic to node02.example.com.

Troubleshooting

While troubleshooting HAProxy configuration issues for this demonstration, I discovered logging is not configured by default on CentOS. No worries, I recommend HAProxy: Give me some logs on CentOS 6.5!, by Stephane Combaudon, to get logging running. Once logging is active, you can more easily troubleshoot HAProxy and Apache configuration issues. Here are some example commands you might find useful:

# haproxy sudo more -f /var/log/haproxy.log sudo haproxy -f /etc/haproxy/haproxy.cfg -c # check/validate config file # apache sudo ls -1 /etc/httpd/logs/ sudo tail -50 /etc/httpd/logs/error_log sudo less /etc/httpd/logs/access_log

Redundant Proxies

In this simple example, the system’s weakest point is obviously the single HAProxy instance. It represents a single-point-of-failure (SPOF) in our environment. In an actual production environment, you would likely have more than one instance of HAProxy. They may both be in a load-balanced pool, or one active and on standby as a failover, should one instance become impaired. There are several techniques for building in proxy redundancy, often with the use of Virtual IP and Keepalived. Below is a list of articles that might help you take this post’s example to the next level.

- An Introduction to HAProxy and Load Balancing Concepts

- Install HAProxy and Keepalived (Virtual IP)

- Redundant Load Balancers – HAProxy and Keepalived

- Howto setup a haproxy as fault tolerant / high available load balancer for multiple caching web proxies on RHEL/Centos/SL

- Keepalived Module on Puppet Forge: arioch/keepalived, byTom De Vylder

Installing Foreman and Puppet Agent on Multiple VMs Using Vagrant and VirtualBox

Posted by Gary A. Stafford in DevOps, Enterprise Software Development, Software Development on January 18, 2015

Automatically install and configure Foreman, the open source infrastructure lifecycle management tool, and multiple Puppet Agent VMs using Vagrant and VirtualBox.

Introduction

In the last post, Installing Puppet Master and Agents on Multiple VM Using Vagrant and VirtualBox, we installed Puppet Master/Agent on VirtualBox VMs using Vagrant. Puppet Master is an excellent tool, but lacks the ease-of-use of Puppet Enterprise or Foreman. In this post, we will build an almost identical environment, substituting Foreman for Puppet Master.

According to Foreman’s website, “Foreman is an open source project that helps system administrators manage servers throughout their lifecycle, from provisioning and configuration to orchestration and monitoring. Using Puppet or Chef and Foreman’s smart proxy architecture, you can easily automate repetitive tasks, quickly deploy applications, and proactively manage change, both on-premise with VMs and bare-metal or in the cloud.”

Combined with Puppet Labs’ Open Source Puppet, Foreman is an effective solution to manage infrastructure and system configuration. Again, according to Foreman’s website, the Foreman installer is a collection of Puppet modules that installs everything required for a full working Foreman setup. The installer uses native OS packaging and adds necessary configuration for the complete installation. By default, the Foreman installer will configure:

- Apache HTTP with SSL (using a Puppet-signed certificate)

- Foreman running under mod_passenger

- Smart Proxy configured for Puppet, TFTP and SSL

- Puppet master running under mod_passenger

- Puppet agent configured

- TFTP server (under xinetd on Red Hat platforms)

For the average Systems Engineer or Software Developer, installing and configuring Foreman, Puppet Master, Apache, Puppet Agent, and the other associated software packages listed above, is daunting. If the installation doesn’t work properly, you must troubleshooting, or trying to remove and reinstall some or all the components.

A better solution is to automate the installation of Foreman into a Docker container, or on to a VM using Vagrant. Automating the installation process guarantees accuracy and consistency. The Vagrant VirtualBox VM can be snapshotted, moved to another host, or simply destroyed and recreated, if needed.

All code for this post is available on GitHub. However, it been updated as of 8/23/2015. Changes were required to fix compatibility issues with the latest versions of Puppet 4.x and Foreman. Additionally, the version of CentOS on all VMs was updated from 6.6 to 7.1 and the version of Foreman was updated from 1.7 to 1.9.

The Post’s Example

In this post, we will use Vagrant and VirtualBox to create three VMs. The VMs in this post will be build from a standard CentOS 6.5 x64 base Vagrant Box, located on Atlas. We will use a single JSON-format configuration file to automatically build all three VMs with Vagrant. As part of the provisioning process, using Vagrant’s shell provisioner, we will execute a bootstrap shell script. The script will install Foreman and it’s associated software on the first VM, and Puppet Agent on the two remaining VMs (aka Puppet ‘agent nodes’ or Foreman ‘hosts’).

Foreman does have the ability to provision on bare-metal infrastructure and public or private clouds. However, this example would simulate an environment where you have existing nodes you want to manage with Foreman.

The Foreman bootstrap script will also download several Puppet modules. To test Foreman once the provisioning is complete, import those module’s classes into Foreman and assign the classes to the hosts. The hosts will fetch and apply the configurations. You can then test for the installed instances of those module’s components on the puppet agent hosts.

Vagrant

To begin the process, we will use the JSON-format configuration file to create the three VMs, using Vagrant and VirtualBox.

{

"nodes": {

"theforeman.example.com": {

":ip": "192.168.35.5",

"ports": [],

":memory": 1024,

":bootstrap": "bootstrap-foreman.sh"

},

"agent01.example.com": {

":ip": "192.168.35.10",

"ports": [],

":memory": 1024,

":bootstrap": "bootstrap-node.sh"

},

"agent02.example.com": {

":ip": "192.168.35.20",

"ports": [],

":memory": 1024,

":bootstrap": "bootstrap-node.sh"

}

}

}

The Vagrantfile uses the JSON-format configuration file, to provision the three VMs, using a single ‘vagrant up‘ command. That’s it, less than 30 lines of actual code in the Vagrantfile to create as many VMs as you want. For this post’s example, we will not need to add any VirtualBox port mappings. However, that can also done from the JSON configuration file (see the READM.md for more directions).

If you have not used the CentOS Vagrant Box, it will take a few minutes the first time for Vagrant to download the it to the local Vagrant Box repository.

# -*- mode: ruby -*-

# vi: set ft=ruby :

# Builds single Foreman server and

# multiple Puppet Agent Nodes using JSON config file

# Gary A. Stafford - 01/15/2015

# read vm and chef configurations from JSON files

nodes_config = (JSON.parse(File.read("nodes.json")))['nodes']

VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

config.vm.box = "chef/centos-6.5"

nodes_config.each do |node|

node_name = node[0] # name of node

node_values = node[1] # content of node

config.vm.define node_name do |config|

# configures all forwarding ports in JSON array

ports = node_values['ports']

ports.each do |port|

config.vm.network :forwarded_port,

host: port[':host'],

guest: port[':guest'],

id: port[':id']

end

config.vm.hostname = node_name

config.vm.network :private_network, ip: node_values[':ip']

config.vm.provider :virtualbox do |vb|

vb.customize ["modifyvm", :id, "--memory", node_values[':memory']]

vb.customize ["modifyvm", :id, "--name", node_name]

end

config.vm.provision :shell, :path => node_values[':bootstrap']

end

end

end

Once provisioned, the three VMs, also called ‘Machines’ by Vagrant, should appear in Oracle VM VirtualBox Manager.

The name of the VMs, referenced in Vagrant commands, is the parent node name in the JSON configuration file (node_name), such as, ‘vagrant ssh theforeman.example.com‘.

Bootstrapping Foreman

As part of the Vagrant provisioning process (‘vagrant up‘ command), a bootstrap script is executed on the VMs (shown below). This script will do almost of the installation and configuration work. Below is script for bootstrapping the Foreman VM.

#!/bin/sh

# Run on VM to bootstrap Foreman server

# Gary A. Stafford - 01/15/2015

if ps aux | grep "/usr/share/foreman" | grep -v grep 2> /dev/null

then

echo "Foreman appears to all already be installed. Exiting..."

else

# Configure /etc/hosts file

echo "" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.35.5 theforeman.example.com theforeman" | sudo tee --append /etc/hosts 2> /dev/null

# Update system first

sudo yum update -y

# Install Foreman for CentOS 6

sudo rpm -ivh http://yum.puppetlabs.com/puppetlabs-release-el-6.noarch.rpm && \

sudo yum -y install epel-release http://yum.theforeman.org/releases/1.7/el6/x86_64/foreman-release.rpm && \

sudo yum -y install foreman-installer && \

sudo foreman-installer

# First run the Puppet agent on the Foreman host which will send the first Puppet report to Foreman,

# automatically creating the host in Foreman's database

sudo puppet agent --test --waitforcert=60

# Install some optional puppet modules on Foreman server to get started...

sudo puppet module install -i /etc/puppet/environments/production/modules puppetlabs-ntp

sudo puppet module install -i /etc/puppet/environments/production/modules puppetlabs-git

sudo puppet module install -i /etc/puppet/environments/production/modules puppetlabs-docker

fi

Bootstrapping Puppet Agent Nodes

Below is script for bootstrapping the puppet agent nodes. The agent node bootstrap script was executed as part of the Vagrant provisioning process.

#!/bin/sh

# Run on VM to bootstrap Puppet Agent nodes

# Gary A. Stafford - 01/15/2015

if ps aux | grep "puppet agent" | grep -v grep 2> /dev/null

then

echo "Puppet Agent is already installed. Moving on..."

else

# Update system first

sudo yum update -y

# Install Puppet for CentOS 6

sudo rpm -ivh http://yum.puppetlabs.com/puppetlabs-release-el-6.noarch.rpm && \

sudo yum -y install puppet

# Configure /etc/hosts file

echo "" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.35.5 theforeman.example.com theforeman" | sudo tee --append /etc/hosts 2> /dev/null

# Add agent section to /etc/puppet/puppet.conf (sets run interval to 120 seconds)

echo "" | sudo tee --append /etc/puppet/puppet.conf 2> /dev/null && \

echo " server = theforeman.example.com" | sudo tee --append /etc/puppet/puppet.conf 2> /dev/null && \

echo " runinterval = 120" | sudo tee --append /etc/puppet/puppet.conf 2> /dev/null

sudo service puppet stop

sudo service puppet start

sudo puppet resource service puppet ensure=running enable=true

sudo puppet agent --enable

fi

Now that the Foreman is running, use the command, ‘vagrant ssh agent01.example.com‘, to ssh into the first puppet agent node. Run the command below.

sudo puppet agent --test --waitforcert=60

The command above manually starts Puppet’s Certificate Signing Request (CSR) process, to generate the certificates and security credentials (private and public keys) generated by Puppet’s built-in certificate authority (CA). Each puppet agent node must have it certificate signed by the Foreman, first. According to Puppet’s website, “Before puppet agent nodes can retrieve their configuration catalogs, they need a signed certificate from the local Puppet certificate authority (CA). When using Puppet’s built-in CA (that is, not using an external CA), agents will submit a certificate signing request (CSR) to the CA Puppet Master (Foreman) and will retrieve a signed certificate once one is available.”

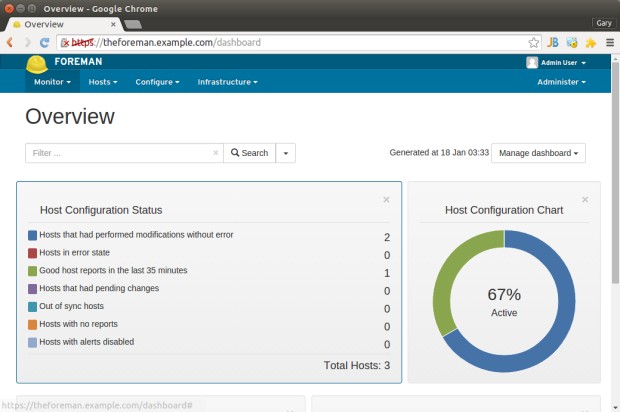

Open the Foreman browser-based interface, running at https://theforeman.example.com. Proceed to the ‘Infrastructure’ -> ‘Smart Proxies’ tab. Sign the certificate(s) from the agent nodes (shown below). The agent node will wait for the Foreman to sign the certificate, before continuing with the initial configuration.

Once the certificate signing process is complete, the host retrieves the client configuration from the Foreman and applies it to the hosts.

That’s it, you should now have one host running Foreman and two puppet agent nodes.

Testing Foreman

To test Foreman, import the classes from the Puppet modules installed with the Foreman bootstrap script.

Next, apply ntp, git, and Docker classes to both agent nodes (aka, Foreman ‘hosts’), as well as the Foreman node, itself.

Every two minutes, the two agent node hosts should fetch their latest configuration from Foreman and apply it. In a few minutes, check the times reported in the ‘Last report’ column on the ‘All Hosts’ tab. If the times are two minutes or less, Foreman and Puppet Agent are working. Note we changed the runinterval to 120 seconds (‘120s’) in the bootstrap script to speed up the Puppet Agent updates for the sake of the demo. The normal default interval is 30 minutes. I recommend changing the agent node’s runinterval back to 30 minutes (’30m’) on the hosts, once everything is working to save unnecessary use of resources.

Finally, to verify that the configuration was successfully applied to the hosts, check if ntp, git, and Docker are now running on the hosts.

Helpful Links

All the source code this project is on Github.

Foreman:

http://theforeman.org

Atlas – Discover Vagrant Boxes:

https://atlas.hashicorp.com/boxes/search

Learning Puppet – Basic Agent/Master Puppet

https://docs.puppetlabs.com/learning/agent_master_basic.html

Puppet Glossary (of terms):

https://docs.puppetlabs.com/references/glossary.html

Installing Puppet Master and Agents on Multiple VM Using Vagrant and VirtualBox

Posted by Gary A. Stafford in Bash Scripting, Build Automation, DevOps, Enterprise Software Development, Software Development on December 14, 2014

Automatically provision multiple VMs with Vagrant and VirtualBox. Automatically install, configure, and test Puppet Master and Puppet Agents on those VMs.

Introduction

Note this post and accompanying source code was updated on 12/16/2014 to v0.2.1. It contains several improvements to improve and simplify the install process.

Puppet Labs’ Open Source Puppet Agent/Master architecture is an effective solution to manage infrastructure and system configuration. However, for the average System Engineer or Software Developer, installing and configuring Puppet Master and Puppet Agent can be challenging. If the installation doesn’t work properly, the engineer’s stuck troubleshooting, or trying to remove and re-install Puppet.

A better solution, automate the installation of Puppet Master and Puppet Agent on Virtual Machines (VMs). Automating the installation process guarantees accuracy and consistency. Installing Puppet on VMs means the VMs can be snapshotted, cloned, or simply destroyed and recreated, if needed.

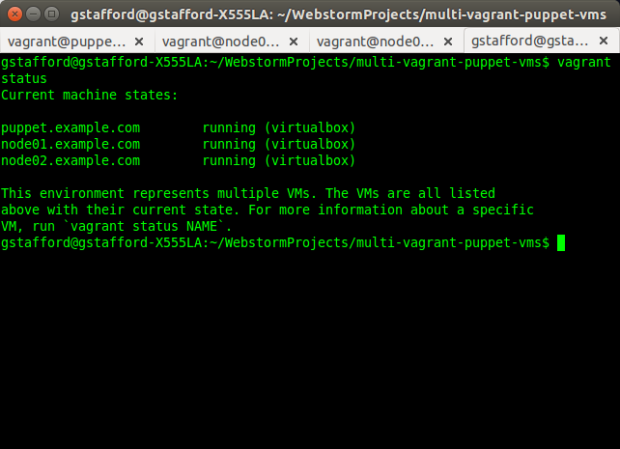

In this post, we will use Vagrant and VirtualBox to create three VMs. The VMs will be build from a Ubuntu 14.04.1 LTS (Trusty Tahr) Vagrant Box, previously on Vagrant Cloud, now on Atlas. We will use a single JSON-format configuration file to build all three VMs, automatically. As part of the Vagrant provisioning process, we will run a bootstrap shell script to install Puppet Master on the first VM (Puppet Master server) and Puppet Agent on the two remaining VMs (agent nodes).

Lastly, to test our Puppet installations, we will use Puppet to install some basic Puppet modules, including ntp and git on the server, and ntp, git, Docker and Fig, on the agent nodes.

All the source code this project is on Github.

Vagrant

To begin the process, we will use the JSON-format configuration file to create the three VMs, using Vagrant and VirtualBox.

{

"nodes": {

"puppet.example.com": {

":ip": "192.168.32.5",

"ports": [],

":memory": 1024,

":bootstrap": "bootstrap-master.sh"

},

"node01.example.com": {

":ip": "192.168.32.10",

"ports": [],

":memory": 1024,

":bootstrap": "bootstrap-node.sh"

},

"node02.example.com": {

":ip": "192.168.32.20",

"ports": [],

":memory": 1024,

":bootstrap": "bootstrap-node.sh"

}

}

}

The Vagrantfile uses the JSON-format configuration file, to provision the three VMs, using a single ‘vagrant up‘ command. That’s it, less than 30 lines of actual code in the Vagrantfile to create as many VMs as we need. For this post’s example, we will not need to add any port mappings, which can be done from the JSON configuration file (see the READM.md for more directions). The Vagrant Box we are using already has the correct ports opened.

If you have not previously used the Ubuntu Vagrant Box, it will take a few minutes the first time for Vagrant to download the it to the local Vagrant Box repository.

# vi: set ft=ruby :

# Builds Puppet Master and multiple Puppet Agent Nodes using JSON config file

# Author: Gary A. Stafford

# read vm and chef configurations from JSON files

nodes_config = (JSON.parse(File.read("nodes.json")))['nodes']

VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

config.vm.box = "ubuntu/trusty64"

nodes_config.each do |node|

node_name = node[0] # name of node

node_values = node[1] # content of node

config.vm.define node_name do |config|

# configures all forwarding ports in JSON array

ports = node_values['ports']

ports.each do |port|

config.vm.network :forwarded_port,

host: port[':host'],

guest: port[':guest'],

id: port[':id']

end

config.vm.hostname = node_name

config.vm.network :private_network, ip: node_values[':ip']

config.vm.provider :virtualbox do |vb|

vb.customize ["modifyvm", :id, "--memory", node_values[':memory']]

vb.customize ["modifyvm", :id, "--name", node_name]

end

config.vm.provision :shell, :path => node_values[':bootstrap']

end

end

end

Once provisioned, the three VMs, also referred to as ‘Machines’ by Vagrant, should appear, as shown below, in Oracle VM VirtualBox Manager.

The name of the VMs, referenced in Vagrant commands, is the parent node name in the JSON configuration file (node_name), such as, ‘vagrant ssh puppet.example.com‘.

Bootstrapping Puppet Master Server

As part of the Vagrant provisioning process, a bootstrap script is executed on each of the VMs (script shown below). This script will do 98% of the required work for us. There is one for the Puppet Master server VM, and one for each agent node.

#!/bin/sh

# Run on VM to bootstrap Puppet Master server

if ps aux | grep "puppet master" | grep -v grep 2> /dev/null

then

echo "Puppet Master is already installed. Exiting..."

else

# Install Puppet Master

wget https://apt.puppetlabs.com/puppetlabs-release-trusty.deb && \

sudo dpkg -i puppetlabs-release-trusty.deb && \

sudo apt-get update -yq && sudo apt-get upgrade -yq && \

sudo apt-get install -yq puppetmaster

# Configure /etc/hosts file

echo "" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "# Host config for Puppet Master and Agent Nodes" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.32.5 puppet.example.com puppet" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.32.10 node01.example.com node01" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.32.20 node02.example.com node02" | sudo tee --append /etc/hosts 2> /dev/null

# Add optional alternate DNS names to /etc/puppet/puppet.conf

sudo sed -i 's/.*\[main\].*/&\ndns_alt_names = puppet,puppet.example.com/' /etc/puppet/puppet.conf

# Install some initial puppet modules on Puppet Master server

sudo puppet module install puppetlabs-ntp

sudo puppet module install garethr-docker

sudo puppet module install puppetlabs-git

sudo puppet module install puppetlabs-vcsrepo

sudo puppet module install garystafford-fig

# symlink manifest from Vagrant synced folder location

ln -s /vagrant/site.pp /etc/puppet/manifests/site.pp

fi

There are a few last commands we need to run ourselves, from within the VMs. Once the provisioning process is complete, ‘vagrant ssh puppet.example.com‘ into the newly provisioned Puppet Master server. Below are the commands we need to run within the ‘puppet.example.com‘ VM.

sudo service puppetmaster status # test that puppet master was installed sudo service puppetmaster stop sudo puppet master --verbose --no-daemonize # Ctrl+C to kill puppet master sudo service puppetmaster start sudo puppet cert list --all # check for 'puppet' cert

According to Puppet’s website, ‘these steps will create the CA certificate and the puppet master certificate, with the appropriate DNS names included.‘

Bootstrapping Puppet Agent Nodes

Now that the Puppet Master server is running, open a second terminal tab (‘Shift+Ctrl+T‘). Use the command, ‘vagrant ssh node01.example.com‘, to ssh into the new Puppet Agent node. The agent node bootstrap script should have already executed as part of the Vagrant provisioning process.

#!/bin/sh

# Run on VM to bootstrap Puppet Agent nodes

# http://blog.kloudless.com/2013/07/01/automating-development-environments-with-vagrant-and-puppet/

if ps aux | grep "puppet agent" | grep -v grep 2> /dev/null

then

echo "Puppet Agent is already installed. Moving on..."

else

sudo apt-get install -yq puppet

fi

if cat /etc/crontab | grep puppet 2> /dev/null

then

echo "Puppet Agent is already configured. Exiting..."

else

sudo apt-get update -yq && sudo apt-get upgrade -yq

sudo puppet resource cron puppet-agent ensure=present user=root minute=30 \

command='/usr/bin/puppet agent --onetime --no-daemonize --splay'

sudo puppet resource service puppet ensure=running enable=true

# Configure /etc/hosts file

echo "" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "# Host config for Puppet Master and Agent Nodes" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.32.5 puppet.example.com puppet" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.32.10 node01.example.com node01" | sudo tee --append /etc/hosts 2> /dev/null && \

echo "192.168.32.20 node02.example.com node02" | sudo tee --append /etc/hosts 2> /dev/null

# Add agent section to /etc/puppet/puppet.conf

echo "" && echo "[agent]\nserver=puppet" | sudo tee --append /etc/puppet/puppet.conf 2> /dev/null

sudo puppet agent --enable

fi

Run the two commands below within both the ‘node01.example.com‘ and ‘node02.example.com‘ agent nodes.

sudo service puppet status # test that agent was installed sudo puppet agent --test --waitforcert=60 # initiate certificate signing request (CSR)

The second command above will manually start Puppet’s Certificate Signing Request (CSR) process, to generate the certificates and security credentials (private and public keys) generated by Puppet’s built-in certificate authority (CA). Each Puppet Agent node must have it certificate signed by the Puppet Master, first. According to Puppet’s website, “Before puppet agent nodes can retrieve their configuration catalogs, they need a signed certificate from the local Puppet certificate authority (CA). When using Puppet’s built-in CA (that is, not using an external CA), agents will submit a certificate signing request (CSR) to the CA Puppet Master and will retrieve a signed certificate once one is available.”

Back on the Puppet Master Server, run the following commands to sign the certificate(s) from the agent node(s). You may sign each node’s certificate individually, or wait and sign them all at once. Note the agent node(s) will wait for the Puppet Master to sign the certificate, before continuing with the Puppet Agent configuration run.

sudo puppet cert list # should see 'node01.example.com' cert waiting for signature sudo puppet cert sign --all # sign the agent node certs sudo puppet cert list --all # check for signed certs

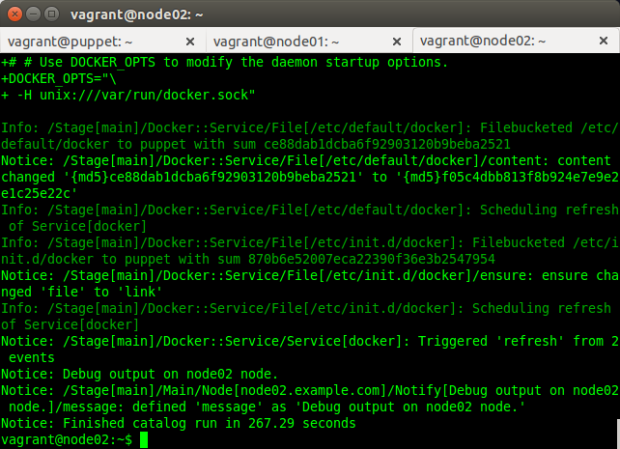

Once the certificate signing process is complete, the Puppet Agent retrieves the client configuration from the Puppet Master and applies it to the local agent node. The Puppet Agent will execute all applicable steps in the site.pp manifest on the Puppet Master server, designated for that specific Puppet Agent node (ie.’node node02.example.com {...}‘).

Below is the main site.pp manifest on the Puppet Master server, applied by Puppet Agent on the agent nodes.

node default {

# Test message

notify { "Debug output on ${hostname} node.": }

include ntp, git

}

node 'node01.example.com', 'node02.example.com' {

# Test message

notify { "Debug output on ${hostname} node.": }

include ntp, git, docker, fig

}

That’s it! You should now have one server VM running Puppet Master, and two agent node VMs running Puppet Agent. Both agent nodes should have successfully been registered with Puppet Master, and configured themselves based on the Puppet Master’s main manifest. Agent node configuration includes installing ntp, git, Fig, and Docker.

Helpful Links

All the source code this project is on Github.

Puppet Glossary (of terms):

https://docs.puppetlabs.com/references/glossary.html

Puppet Labs Open Source Automation Tools:

http://puppetlabs.com/misc/download-options

Puppet Master Overview:

http://ci.openstack.org/puppet.html

Install Puppet on Ubuntu:

https://docs.puppetlabs.com/guides/install_puppet/install_debian_ubuntu.html

Installing Puppet Master:

http://andyhan.linuxdict.com/index.php/sys-adm/item/273-puppet-371-on-centos-65-quick-start-i

Regenerating Node Certificates:

https://docs.puppetlabs.com/puppet/latest/reference/ssl_regenerate_certificates.html

Automating Development Environments with Vagrant and Puppet:

http://blog.kloudless.com/2013/07/01/automating-development-environments-with-vagrant-and-puppet

Create Multi-VM Environments Using Vagrant, Chef, and JSON

Posted by Gary A. Stafford in Build Automation, DevOps, Enterprise Software Development, Software Development on February 27, 2014

Create and manage ‘multi-machine’ environments with Vagrant, using JSON configuration files. Allow increased portability across hosts, environments, and organizations.

Introduction

As their website says, Vagrant has made it very easy to ‘create and configure lightweight, reproducible, and portable development environments.’ Based on Ruby, the elegantly simple open-source programming language, Vagrant requires a minimal learning curve to get up and running.

In this post, we will create what Vagrant refers to as a ‘multi-machine’ environment. We will provision three virtual machines (VMs). The VMs will mirror a typical three-tier architected environment, with separate web, application, and database servers.

We will move all the VM-specific information from the Vagrantfile to a separate JSON format configuration file. There are a few advantages to moving the configuration information to separate file. First, we can configure any number VMs, while keeping the Vagrantfile exactly the same. Secondly and more importantly, we can re-use the same Vagrantfile to build different VMs on another host machine.

Although certainly not required, I am also using Chef in this example. More specifically, I am using Hosted Chef to further configure the VMs. Like the VM-specific information above, I have also moved the Chef-specific information to a separate JSON configuration file. We can now use the same Vagrantfile within another Chef Environment, or even within another Chef Organization, using an alternate configuration files. If you are not a Chef user, you can disregard that part of the configuration code. Alternately, you can substitute the Chef configuration code for Puppet, if that is your configuration automation tool of choice.

The only items we will not remove from the Vagrantfile are the Vagrant Box and synced folder configurations. These items could also be moved to a separate configuration file, making the Vagrantfile even more generic and portable.

The Code

Below is the VM-specific JSON configuration file, containing all the individual configuration information necessary for Vagrant to build the three VMs: ‘apps’, dbs’, and ‘web’. Each child ‘node’ in the parent ‘nodes’ object contains key/value pairs for VM names, IP addresses, forwarding ports, host names, and memory settings. To add another VM, you would simply add another ‘node’ object.

| { | |

| "nodes": { | |

| "apps": { | |

| ":node": "ApplicationServer-201", | |

| ":ip": "192.168.33.21", | |

| ":host": "apps.server-201", | |

| "ports": [ | |

| { | |

| ":host": 2201, | |

| ":guest": 22, | |

| ":id": "ssh" | |

| }, | |

| { | |

| ":host": 7709, | |

| ":guest": 7709, | |

| ":id": "wls-listen" | |

| } | |

| ], | |

| ":memory": 2048 | |

| }, | |

| "dbs": { | |

| ":node": "DatabaseServer-301", | |

| ":ip": "192.168.33.31", | |

| ":host": "dbs.server-301", | |

| "ports": [ | |

| { | |

| ":host": 2202, | |

| ":guest": 22, | |

| ":id": "ssh" | |

| }, | |

| { | |

| ":host": 1529, | |

| ":guest": 1529, | |

| ":id": "xe-db" | |

| }, | |

| { | |

| ":host": 8380, | |

| ":guest": 8380, | |

| ":id": "xe-listen" | |

| } | |

| ], | |

| ":memory": 2048 | |

| }, | |

| "web": { | |

| ":node": "WebServer-401", | |

| ":ip": "192.168.33.41", | |

| ":host": "web.server-401", | |

| "ports": [ | |

| { | |

| ":host": 2203, | |

| ":guest": 22, | |

| ":id": "ssh" | |

| }, | |

| { | |

| ":host": 4756, | |

| ":guest": 4756, | |

| ":id": "apache" | |

| } | |

| ], | |

| ":memory": 1024 | |

| } | |

| } | |

| } |

Next, is the Chef-specific JSON configuration file, containing Chef configuration information common to all the VMs.

| { | |

| "chef": { | |

| ":chef_server_url": "https://api.opscode.com/organizations/my-organization", | |

| ":client_key_path": "/etc/chef/my-client.pem", | |

| ":environment": "my-environment", | |

| ":provisioning_path": "/etc/chef", | |

| ":validation_client_name": "my-client", | |

| ":validation_key_path": "~/.chef/my-client.pem" | |

| } | |

| } |

Lastly, the Vagrantfile, which loads both configuration files. The Vagrantfile instructs Vagrant to loop through all nodes in the nodes.json file, provisioning VMs for each node. Vagrant then uses the chef.json file to further configure the VMs.

The environment and node configuration items in the chef.json reference an actual Chef Environment and Chef Nodes. They are both part of a Chef Organization, which is configured within a Hosted Chef account.

| # -*- mode: ruby -*- | |

| # vi: set ft=ruby : | |

| # Multi-VM Configuration: Builds Web, Application, and Database Servers using JSON config file | |

| # Configures VMs based on Hosted Chef Server defined Environment and Node (vs. Roles) | |

| # Author: Gary A. Stafford | |

| # read vm and chef configurations from JSON files | |

| nodes_config = (JSON.parse(File.read("nodes.json")))['nodes'] | |

| chef_config = (JSON.parse(File.read("chef.json")))['chef'] | |

| VAGRANTFILE_API_VERSION = "2" | |

| Vagrant.require_plugin "vagrant-omnibus" | |

| Vagrant.configure(VAGRANTFILE_API_VERSION) do |config| | |

| config.vm.box = "vagrant-oracle-vm-saucy64" | |

| config.vm.box_url = "http://cloud-images.ubuntu.com/vagrant/saucy/current/saucy-server-cloudimg-amd64-vagrant-disk1.box" | |

| config.omnibus.chef_version = :latest | |

| nodes_config.each do |node| | |

| node_name = node[0] # name of node | |

| node_values = node[1] # content of node | |

| config.vm.define node_name do |config| | |

| # configures all forwarding ports in JSON array | |

| ports = node_values['ports'] | |

| ports.each do |port| | |

| config.vm.network :forwarded_port, | |

| host: port[':host'], | |

| guest: port[':guest'], | |

| id: port[':id'] | |

| end | |

| config.vm.hostname = node_values[':node'] | |

| config.vm.network :private_network, ip: node_values[':ip'] | |

| # syncs local repository of large third-party installer files (quicker than downloading each time) | |

| config.vm.synced_folder "#{ENV['HOME']}/Documents/git_repos/chef-artifacts", "/vagrant" | |

| config.vm.provider :virtualbox do |vb| | |

| vb.customize ["modifyvm", :id, "--memory", node_values[':memory']] | |

| vb.customize ["modifyvm", :id, "--name", node_values[':node']] | |

| end | |

| # chef configuration section | |

| config.vm.provision :chef_client do |chef| | |

| chef.environment = chef_config[':environment'] | |

| chef.provisioning_path = chef_config[':provisioning_path'] | |

| chef.chef_server_url = chef_config[':chef_server_url'] | |

| chef.validation_key_path = chef_config[':validation_key_path'] | |

| chef.node_name = node_values[':node'] | |

| chef.validation_client_name = chef_config[':validation_client_name'] | |

| chef.client_key_path = chef_config[':client_key_path'] | |

| end | |

| end | |

| end | |

| end |

Each VM has a varying number of ports it needs to configue and forward. To accomplish this, the Vagrantfile not only loops through the each node, it also loops through each port configuration object it finds within the node object. Shown below is the Database Server VM within VirtualBox, containing three forwarding ports.

In addition to the gists above, this repository on GitHub contains a complete copy of all the code used in the post.

The Results

Running the ‘vagrant up’ command will provision all three individually configured VMs. Once created and running in VirtualBox, Chef further configures the VMs with the necessary settings and applications specific to each server’s purposes. You can just as easily create 10, 100, or 1,000 VMs using this same process.

.

Helpful Links

Dynamically Allocated Storage Issues with Ubuntu’s Cloud Images

Posted by Gary A. Stafford in Build Automation, Enterprise Software Development on December 22, 2013

Background

According to Canonical, ‘Ubuntu Cloud Images are pre-installed disk images that have been customized by Ubuntu engineering to run on cloud-platforms such as Amazon EC2, Openstack, Windows and LXC’. Ubuntu also disk images, or ‘boxes’, built specifically for Vagrant and VirtualBox. Boxes, according to Vagrant, ‘are the skeleton from which Vagrant machines are constructed. They are portable files which can be used by others on any platform that runs Vagrant to bring up a working environment‘. Ubuntu’s images are very popular with Vagrant users to build their VMs.

Assuming you have VirtualBox and Vagrant installed on your Windows, Mac OS X, or Linux system, with a few simple commands, ‘vagrant add box…’, ‘vagrant init…’, and ‘vagrant up’, you can provision a VM from one of these boxes.

Dynamically Allocated Storage

The Ubuntu Cloud Images (boxes), are Virtual Machine Disk (VMDK) format files. These VMDK files are configured for dynamically allocated storage, with a virtual size of 40 GB. That means the VMDK format file should grow to an actual size of 40 GB, as files are added. According to VirtualBox, the VM ‘will initially be very small and not occupy any space for unused virtual disk sectors, but will grow every time a disk sector is written to for the first time, until the drive reaches the maximum capacity chosen when the drive was created’.

To illustrate dynamically allocated storage, below are three freshly provisioned VirtualBox virtual machines (VM), on three different hosts, all with different operating systems. One VM is hosted on Windows 7 Enterprise, another on Ubuntu 13.10 Desktop Edition, and the last on Mac OS X 10.6.8. The VMs were all created with Vagrant from the official Ubuntu Server 13.10 (Saucy Salamander) cloud images. The Windows and Ubuntu hosts used the 64-bit version. The Mac OS X host used the 32-bit version. According to VirtualBox Manager, on all three host platforms, the virtual size of the VMs is 40 GB and the actual size is about 1 GB.

So What’s the Problem?

After a significant amount of troubleshooting Chef recipe problems on two different Ubuntu-hosted VMs, the issue with the cloud images became painfully clear. Other than a single (seemingly charmed) Windows host, none of the VMs I tested on Windows-, Ubuntu-, and Mac OS X-hosts would expand beyond 4 GB. Below is the file system disk space usage report from four host’s VMs. All four were created with the most current version of Vagrant (1.4.1), and managed with the most current version of VirtualBox (4.3.6.x).

Windows-hosted 64-bit Cloud Image VM #1:

vagrant@vagrant-ubuntu-saucy-64:/tmp$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda1 ext4 40G 1.1G 37G 3% /

none tmpfs 4.0K 0 4.0K 0% /sys/fs/cgroup

udev devtmpfs 241M 12K 241M 1% /dev

tmpfs tmpfs 50M 336K 49M 1% /run

none tmpfs 5.0M 0 5.0M 0% /run/lock

none tmpfs 246M 0 246M 0% /run/shm

none tmpfs 100M 0 100M 0% /run/user

/vagrant vboxsf 233G 196G 38G 85% /vagrant

Windows-hosted 32-bit Cloud Image VM #2:

vagrant@vagrant-ubuntu-saucy-32:~$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda1 ext4 4.0G 1012M 2.8G 27% /

none tmpfs 4.0K 0 4.0K 0% /sys/fs/cgroup

udev devtmpfs 245M 8.0K 245M 1% /dev

tmpfs tmpfs 50M 336K 50M 1% /run

none tmpfs 5.0M 4.0K 5.0M 1% /run/lock

none tmpfs 248M 0 248M 0% /run/shm

none tmpfs 100M 0 100M 0% /run/user

/vagrant vboxsf 932G 209G 724G 23% /vagrant

Ubuntu-hosted 64-bit Cloud Image VM:

vagrant@vagrant-ubuntu-saucy-64:~$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda1 ext4 4.0G 1.1G 2.7G 28% /

none tmpfs 4.0K 0 4.0K 0% /sys/fs/cgroup

udev devtmpfs 241M 8.0K 241M 1% /dev

tmpfs tmpfs 50M 336K 49M 1% /run

none tmpfs 5.0M 0 5.0M 0% /run/lock

none tmpfs 246M 0 246M 0% /run/shm

none tmpfs 100M 0 100M 0% /run/user

/vagrant vboxsf 74G 65G 9.1G 88% /vagrant

Mac OS X-hosted 32-bit Cloud Image VM:

vagrant@vagrant-ubuntu-saucy-32:~$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda1 ext4 4.0G 1012M 2.8G 27% /

none tmpfs 4.0K 0 4.0K 0% /sys/fs/cgroup

udev devtmpfs 245M 12K 245M 1% /dev

tmpfs tmpfs 50M 336K 50M 1% /run

none tmpfs 5.0M 0 5.0M 0% /run/lock

none tmpfs 248M 0 248M 0% /run/shm

none tmpfs 100M 0 100M 0% /run/user

/vagrant vboxsf 149G 71G 79G 48% /vagrant

On the first Windows-hosted VM (the only host that actually worked), the virtual SCSI disk device (sda1), formatted ‘ext4‘, had a capacity of 40 GB. But, on the other three hosts, the same virtual device only had a capacity of 4 GB. I tested the various 32- and 64-bit Ubuntu Server 12.10 (Quantal Quetzal), 13.04 (Raring Ringtail), and 13.10 (Saucy Salamander) cloud images. They all exhibited the same issue. However, the Ubuntu 12.04.3 LTS (Precise Pangolin) worked fine on all three host OS systems.

To prove the issue was specifically with Ubuntu’s cloud images, I also tested boxes from Vagrant’s own repository, as well as other third-party providers. They all worked as expected, with no storage discrepancies. This was suggested in the only post I found on this issue, from StackExchange.

To confirm the Ubuntu-hosted VM will not expand beyond 4 GB, I also created a few multi-gigabyte files on each VM, totally 4 GB. The VMs virtual drive would not expand beyond 4 GB limit to accommodate the new files, as demonstrated below on a Ubuntu-hosted VM:

vagrant@vagrant-ubuntu-saucy-64:~$ dd if=/dev/zero of=/tmp/big_file2.bin bs=1M count=2000 dd: writing '/tmp/big_file2.bin': No space left on device 742+0 records in 741+0 records out 777560064 bytes (778 MB) copied, 1.81098 s, 429 MB/s vagrant@vagrant-ubuntu-saucy-64:~$ df -hT Filesystem Type Size Used Avail Use% Mounted on /dev/sda1 ext4 4.0G 3.7G 196K 100% /

The exact cause eludes me, but I tend to think the cloud images are the issue. I know they are capable of working, since the Ubuntu 12.04.3 cloud images expand to 40 GB, but the three most recent releases are limited to 4 GB. Whatever the cause, it’s a significant problem. Imagine you’ve provisioned a 100 or a 1,000 server nodes on your network from any of these cloud images, expecting them to grow to 40 GB, but really only having 10% of that potential. Worse, they have live production data on them, and suddenly run out of space.

Test Results

Below is the complete shell sessions from three hosts.

Windows-hosted 64-bit Cloud Image VM #1:

| gstafford@windows-host: ~/Documents/GitHub | |

| $ vagrant --version | |