Posts Tagged Database

Monolith to Microservices: Refactoring Relational Databases

Posted by Gary A. Stafford in Enterprise Software Development, SQL, Technology Consulting on April 14, 2022

Exploring common patterns for refactoring relational database models as part of a microservices architecture

Introduction

There is no shortage of books, articles, tutorials, and presentations on migrating existing monolithic applications to microservices, nor designing new applications using a microservices architecture. It has been one of the most popular IT topics for the last several years. Unfortunately, monolithic architectures often have equally monolithic database models. As organizations evolve from monolithic to microservices architectures, refactoring the application’s database model is often overlooked or deprioritized. Similarly, as organizations develop new microservices-based applications, they frequently neglect to apply a similar strategy to their databases.

The following post will examine several basic patterns for refactoring relational databases for microservices-based applications.

Terminology

Monolithic Architecture

A monolithic architecture is “the traditional unified model for the design of a software program. Monolithic, in this context, means composed all in one piece.” (TechTarget). A monolithic application “has all or most of its functionality within a single process or container, and it’s componentized in internal layers or libraries” (Microsoft). A monolith is usually built, deployed, and upgraded as a single unit of code.

Microservices Architecture

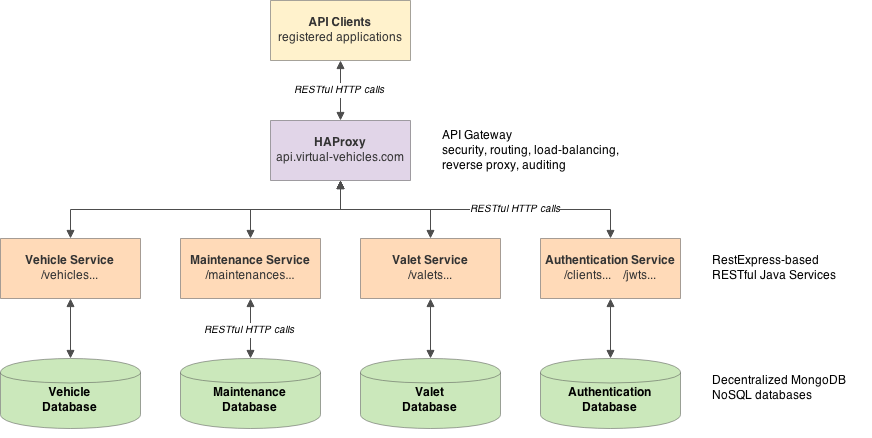

A microservices architecture (aka microservices) refers to “an architectural style for developing applications. Microservices allow a large application to be separated into smaller independent parts, with each part having its own realm of responsibility” (Google Cloud).

According to microservices.io, the advantages of microservices include:

- Highly maintainable and testable

- Loosely coupled

- Independently deployable

- Organized around business capabilities

- Owned by a small team

- Enables rapid, frequent, and reliable delivery

- Allows an organization to [more easily] evolve its technology stack

Database

A database is “an organized collection of structured information, or data, typically stored electronically in a computer system” (Oracle). There are many types of databases. The most common database engines include relational, NoSQL, key-value, document, in-memory, graph, time series, wide column, and ledger.

PostgreSQL

In this post, we will use PostgreSQL (aka Postgres), a popular open-source object-relational database. A relational database is “a collection of data items with pre-defined relationships between them. These items are organized as a set of tables with columns and rows. Tables are used to hold information about the objects to be represented in the database” (AWS).

Amazon RDS for PostgreSQL

We will use the fully managed Amazon RDS for PostgreSQL in this post. Amazon RDS makes it easy to set up, operate, and scale PostgreSQL deployments in the cloud. With Amazon RDS, you can deploy scalable PostgreSQL deployments in minutes with cost-efficient and resizable hardware capacity. In addition, Amazon RDS offers multiple versions of PostgreSQL, including the latest version used for this post, 14.2.

The patterns discussed here are not specific to Amazon RDS for PostgreSQL. There are many options for using PostgreSQL on the public cloud or within your private data center. Alternately, you could choose Amazon Aurora PostgreSQL-Compatible Edition, Google Cloud’s Cloud SQL for PostgreSQL, Microsoft’s Azure Database for PostgreSQL, ElephantSQL, or your own self-manage PostgreSQL deployed to bare metal servers, virtual machine (VM), or container.

Database Refactoring Patterns

There are many ways in which a relational database, such as PostgreSQL, can be refactored to optimize efficiency in microservices-based application architectures. As stated earlier, a database is an organized collection of structured data. Therefore, most refactoring patterns reorganize the data to optimize for an organization’s functional requirements, such as database access efficiency, performance, resilience, security, compliance, and manageability.

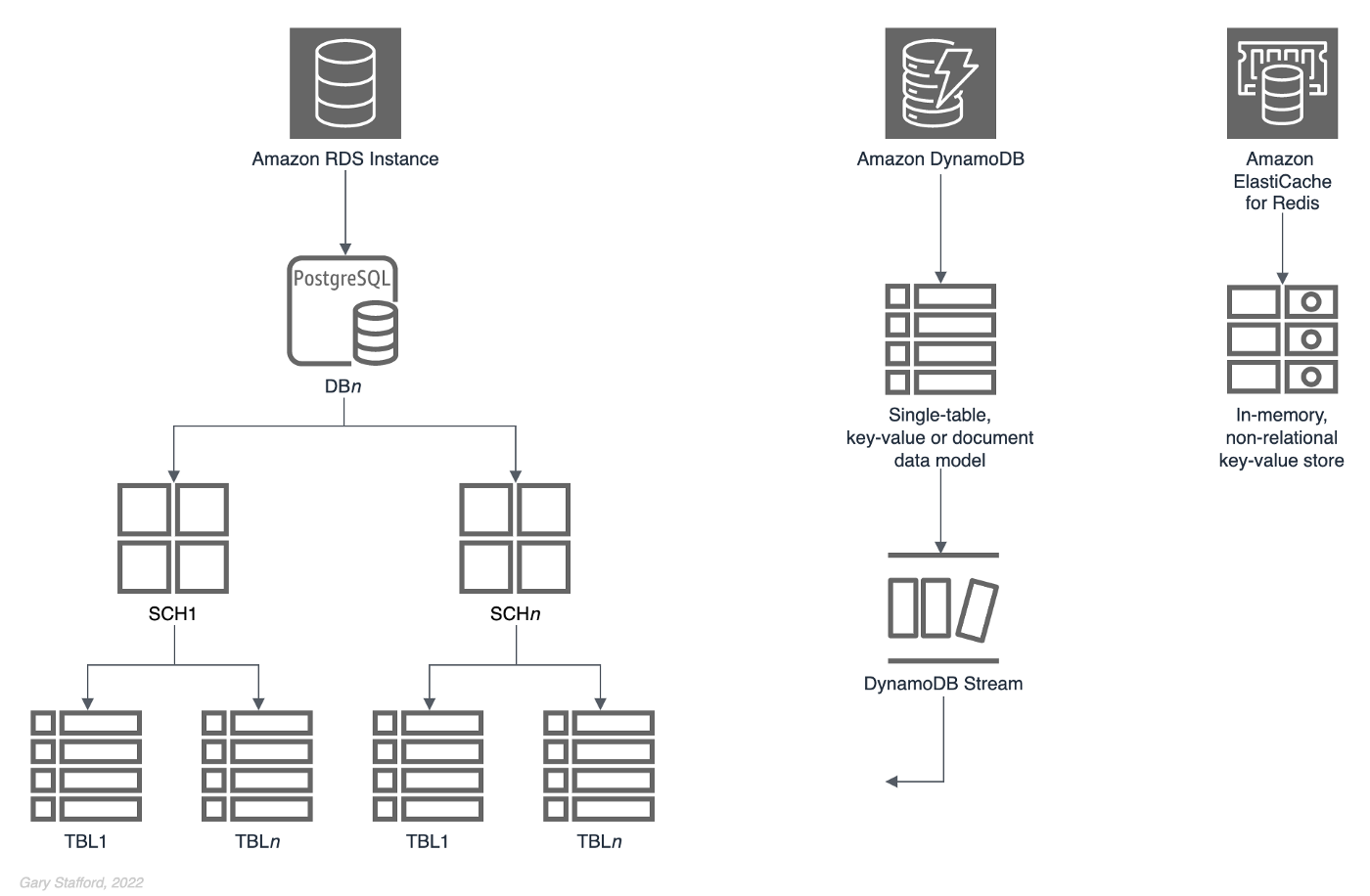

The basic building block of Amazon RDS is the DB instance, where you create your databases. You choose the engine-specific characteristics of the DB instance when you create it, such as storage capacity, CPU, memory, and EC2 instance type on which the database server runs. A single Amazon RDS database instance can contain multiple databases. Those databases contain numerous object types, including tables, views, functions, procedures, and types. Tables and other object types are organized into schemas. These hierarchal constructs — instances, databases, schemas, and tables — can be arranged in different ways depending on the requirements of the database data producers and consumers.

Sample Database

To demonstrate different patterns, we need data. Specifically, we need a database with data. Conveniently, due to the popularity of PostgreSQL, there are many available sample databases, including the Pagila database. I have used it in many previous articles and demonstrations. The Pagila database is available for download from several sources.

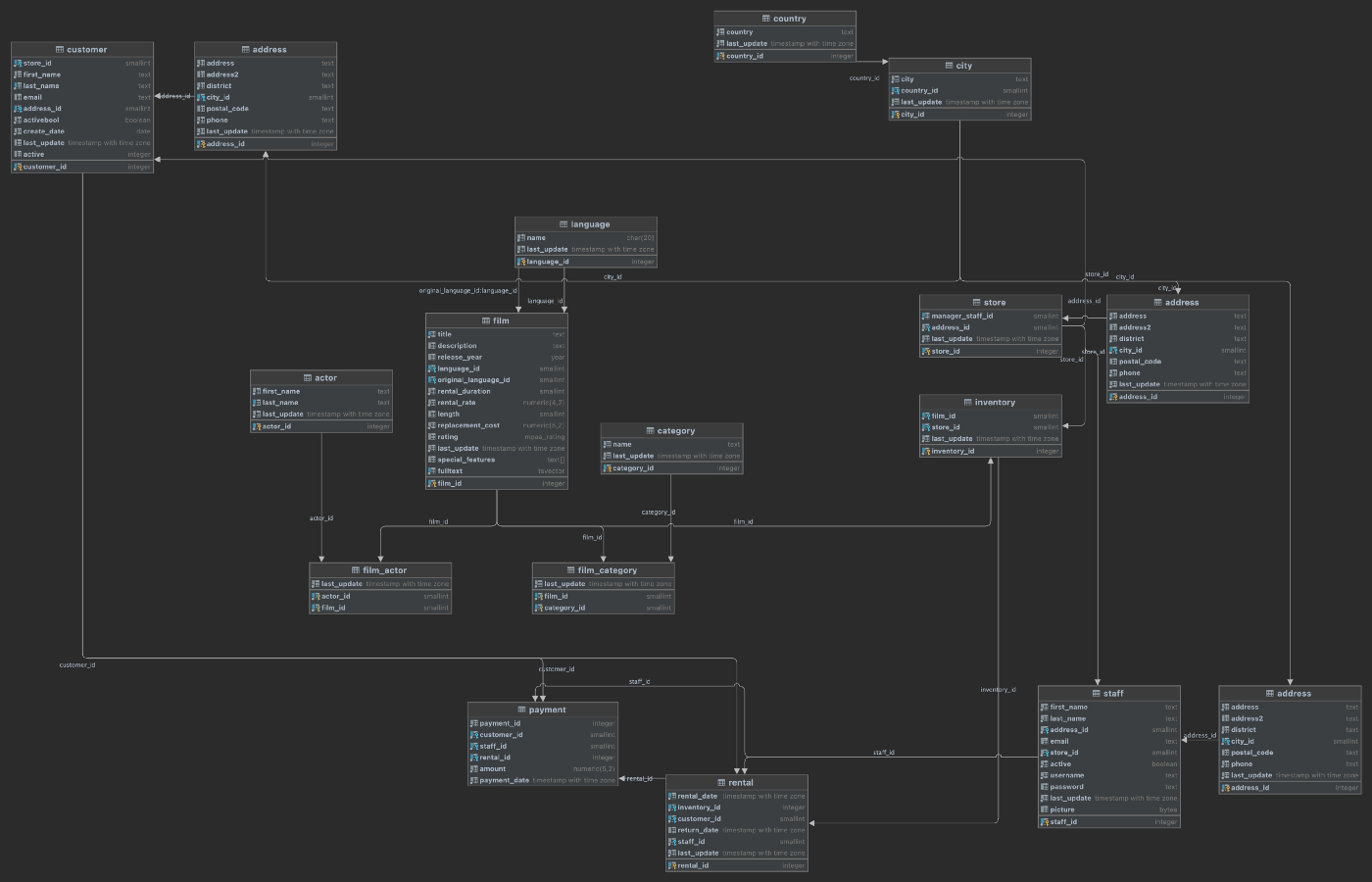

The Pagila database represents a DVD rental business. The database is well-built, small, and adheres to a third normal form (3NF) database schema design. The Pagila database has many objects, including 1 schema, 15 tables, 1 trigger, 7 views, 8 functions, 1 domain, 1 type, 1 aggregate, and 13 sequences. Pagila’s tables contain between 2 and 16K rows.

Pattern 1: Single Schema

Pattern 1: Single Schema is one of the most basic database patterns. There is one database instance containing a single database. That database has a single schema containing all tables and other database objects.

As organizations begin to move from monolithic to microservices architectures, they often retain their monolithic database architecture for some time.

Frequently, the monolithic database’s data model is equally monolithic, lacking proper separation of concerns using simple database constructs such as schemas. The Pagila database is an example of this first pattern. The Pagila database has a single schema containing all database object types, including tables, functions, views, procedures, sequences, and triggers.

To create a copy of the Pagila database, we can use pg_restore to restore any of several publically available custom-format database archive files. If you already have the Pagila database running, simply create a copy with pg_dump.

Below we see the table layout of the Pagila database, which contains the single, default public schema.

-----------+----------+--------+------------

Instance | Database | Schema | Table

-----------+----------+--------+------------

postgres1 | pagila | public | actor

postgres1 | pagila | public | address

postgres1 | pagila | public | category

postgres1 | pagila | public | city

postgres1 | pagila | public | country

postgres1 | pagila | public | customer

postgres1 | pagila | public | film

postgres1 | pagila | public | film_actor

postgres1 | pagila | public | film_category

postgres1 | pagila | public | inventory

postgres1 | pagila | public | language

postgres1 | pagila | public | payment

postgres1 | pagila | public | rental

postgres1 | pagila | public | staff

postgres1 | pagila | public | store

Using a single schema to house all tables, especially the public schema is generally considered poor database design. As a database grows in complexity, creating, organizing, managing, and securing dozens, hundreds, or thousands of database objects, including tables, within a single schema becomes impossible. For example, given a single schema, the only way to organize large numbers of database objects is by using lengthy and cryptic naming conventions.

Public Schema

According to the PostgreSQL docs, if tables or other object types are created without specifying a schema name, they are automatically assigned to the default public schema. Every new database contains a public schema. By default, users cannot access any objects in schemas they do not own. To allow that, the schema owner must grant the USAGE privilege on the schema. by default, everyone has CREATE and USAGE privileges on the schema public. These default privileges enable all users to connect to a given database to create objects in its public schema. Some usage patterns call for revoking that privilege, which is a compelling reason not to use the public schema as part of your database design.

Pattern 2: Multiple Schemas

Separating tables and other database objects into multiple schemas is an excellent first step to refactoring a database to support microservices. As application complexity and databases naturally grow over time, schemas to separate functionality by business subdomain or teams will benefit significantly.

According to the PostgreSQL docs, there are several reasons why one might want to use schemas:

- To allow many users to use one database without interfering with each other.

- To organize database objects into logical groups to make them more manageable.

- Third-party applications can be put into separate schemas, so they do not collide with the names of other objects.

Schemas are analogous to directories at the operating system level, except schemas cannot be nested.

With Pattern 2, as an organization continues to decompose its monolithic application architecture to a microservices-based application, it could transition to a schema-per-microservice or similar level or organizational granularity.

Applying Domain-driven Design Principles

Domain-driven design (DDD) is “a software design approach focusing on modeling software to match a domain according to input from that domain’s experts” (Wikipedia). Architects often apply DDD principles to decompose a monolithic application into microservices. For example, a microservice or set of related microservices might represent a Bounded Context. In DDD, a Bounded Context is “a description of a boundary, typically a subsystem or the work of a particular team, within which a particular model is defined and applicable.” (hackernoon.com). Examples of Bounded Context might include Sales, Shipping, and Support.

One technique to apply schemas when refactoring a database is to mirror the Bounded Contexts, which reflect the microservices. For each microservice or set of closely related microservices, there is a schema. Unfortunately, there is no absolute way to define the Bounded Contexts of a Domain, and henceforth, schemas to a database. It depends on many factors, including your application architecture, features, security requirements, and often an organization’s functional team structure.

Reviewing the purpose of each table in the Pagila database and their relationships to each other, we could infer Bounded Contexts, such as Films, Stores, Customers, and Sales. We can represent these Bounded Contexts as schemas within the database as a way to organize the data. The individual tables in a schema mirror DDD concepts, such as aggregates, entities, or value objects.

As shown below, the tables of the Pagila database have been relocated into six new schemas: common, customers, films, sales, staff, and stores. The common schema contains tables with address data references tables in several other schemas. There are now no tables left in the public schema. We will assume other database objects (e.g., functions, views, and triggers) have also been moved and modified if necessary to reflect new table locations.

-----------+----------+-----------+---------------

Instance | Database | Schema | Table

-----------+----------+-----------+---------------

postgres1 | pagila | common | address

postgres1 | pagila | common | city

postgres1 | pagila | common | country

-----------+----------+-----------+---------------

postgres1 | pagila | customers | customer

-----------+----------+-----------+---------------

postgres1 | pagila | films | actor

postgres1 | pagila | films | category

postgres1 | pagila | films | film

postgres1 | pagila | films | film_actor

postgres1 | pagila | films | film_category

postgres1 | pagila | films | language

-----------+----------+-----------+---------------

postgres1 | pagila | sales | payment

postgres1 | pagila | sales | rental

-----------+----------+-----------+---------------

postgres1 | pagila | staff | staff

-----------+----------+-----------+---------------

postgres1 | pagila | stores | inventory

postgres1 | pagila | stores | store

By applying schemas, we align tables and other database objects to individual microservices or functional teams that own the microservices and the associated data. Schemas allow us to apply fine-grain access control over objects and data within the database more effectively.

Refactoring other Database Objects

Typically with psql, when moving tables across schemas using an ALTER TABLE...SET SCHEMA... SQL statement, objects such as database views will be updated to the table’s new location. For example, take Pagila’s sales_by_store view. Note the schemas have been automatically updated for multiple tables from their original location in the public schema. The view was also moved to the sales schema.

Splitting Table Data Across Multiple Schemas

When refactoring a database, you may have to split data by replicating table definitions across multiple schemas. Take, for example, Pagila’s address table, which contains the addresses of customers, staff, and stores. The customers.customer, stores.staff, and stores.store all have foreign key relationships with the common.address table. The address table has a foreign key relationship with both the city and country tables. Thus for convenience, the address, city, and country tables were all placed into the common schema in the example above.

Although, at first, storing all the addresses in a single table might appear to be sound database normalization, consider the risks of having the address table’s data exposed. The store addresses are not considered sensitive data. However, the home addresses of customers and staff are likely considered sensitive personally identifiable information (PII). Also, consider as an application evolves, you may have fields unique to one type of address that does not apply to other categories of addresses. The table definitions for a store’s address may be defined differently than the address of a customer. For example, we might choose to add a county column to the customers.address table for e-commerce tax purposes, or an on_site_parking boolean column to the stores.address table.

In the example below, a new staff schema was added. The address table definition was replicated in the customers, staff, and stores schemas. The assumption is that the mixed address data in the original table was distributed to the appropriate address tables. Note the way schemas help us avoid table name collisions.

-----------+----------+-----------+---------------

Instance | Database | Schema | Table

-----------+----------+-----------+---------------

postgres1 | pagila | common | city

postgres1 | pagila | common | country

-----------+----------+-----------+---------------

postgres1 | pagila | customers | address

postgres1 | pagila | customers | customer

-----------+----------+-----------+---------------

postgres1 | pagila | films | actor

postgres1 | pagila | films | category

postgres1 | pagila | films | film

postgres1 | pagila | films | film_actor

postgres1 | pagila | films | film_category

postgres1 | pagila | films | language

-----------+----------+-----------+---------------

postgres1 | pagila | sales | payment

postgres1 | pagila | sales | rental

-----------+----------+-----------+---------------

postgres1 | pagila | staff | address

postgres1 | pagila | staff | staff

-----------+----------+-----------+---------------

postgres1 | pagila | stores | address

postgres1 | pagila | stores | inventory

postgres1 | pagila | stores | store

To create the new customers.address table, we could use the following SQL statements. The statements to create the other two address tables are nearly identical.

Although we now have two additional tables with identical table definitions, we do not duplicate any data. We could use the following SQL statements to migrate unique address data into the appropriate tables and confirm the results.

Lastly, alter the existing foreign key constraints to point to the new address tables. The SQL statements for the other two address tables are nearly identical.

There is now a reduced risk of exposing sensitive customer or staff data when querying store addresses, and the three address entities can evolve independently. Individual functional teams separately responsible customers, staff, and stores, can own and manage just the data within their domain.

Before dropping the common.address tables, you would still need to modify the remaining database objects that have dependencies on this table, such as views and functions. For example, take Pagila’s sales_by_store view we saw previously. Note line 9, below, the schema of the address table has been updated from common.address to stores.address. The stores.address table only contains addresses of stores, not customers or staff.

Below, we see the final table structure for the Pagila database after refactoring. Tables have been loosely grouped together schema in the diagram.

Pattern 3: Multiple Databases

Similar to how individual schemas allow us to organize tables and other database objects and provide better separation of concerns, we can use databases the same way. For example, we could choose to spread the Pagila data across more than one database within a single RDS database instance. Again, using DDD concepts, while schemas might represent Bounded Contexts, databases most closely align to Domains, which are “spheres of knowledge and activity where the application logic revolves” (hackernoon.com).

With Pattern 3, as an organization continues to refine its microservices-based application architecture, it might find that multiple databases within the same database instance are advantageous to further separate and organize application data.

Let’s assume that the data in the films schema is owned and managed by a completely separate team who should never have access to sensitive data stored in the customers, stores, and sales schemas. According to the PostgreSQL docs, database access permissions are managed using the concept of roles. Depending on how the role is set up, a role can be thought of as either a database user or a group of users.

To provide greater separation of concerns than just schemas, we can create a second, completely separate database within the same RDS database instance for data related to films. With two separate databases, it is easier to create and manage distinct roles and ensure access to customers, stores, or sales data is only accessible to teams that need access.

Below, we see the new layout of tables now spread across two databases within the same RDS database instance. Two new tables, highlighted in bold, are explained below.

-----------+----------+-----------+---------------

Instance | Database | Schema | Table

-----------+----------+-----------+---------------

postgres1 | pagila | common | city

postgres1 | pagila | common | country

-----------+----------+-----------+---------------

postgres1 | pagila | customers | address

postgres1 | pagila | customers | customer

-----------+----------+-----------+---------------

postgres1 | pagila | films | film

-----------+----------+-----------+---------------

postgres1 | pagila | sales | payment

postgres1 | pagila | sales | rental

-----------+----------+-----------+---------------

postgres1 | pagila | staff | address

postgres1 | pagila | staff | staff

-----------+----------+-----------+---------------

postgres1 | pagila | stores | address

postgres1 | pagila | stores | inventory

postgres1 | pagila | stores | store

-----------+----------+-----------+---------------

postgres1 | products | films | actor

postgres1 | products | films | category

postgres1 | products | films | film

postgres1 | products | films | film_actor

postgres1 | products | films | film_category

postgres1 | products | films | language

postgres1 | products | films | outbox

Change Data Capture and the Outbox Pattern

Inserts, updates, and deletes of film data can be replicated between the two databases using several methods, including Change Data Capture (CDC) with the Outbox Pattern. CDC is “a pattern that enables database changes to be monitored and propagated to downstream systems” (RedHat). The Outbox Pattern uses the PostgreSQL database’s ability to perform an commit to two tables atomically using a transaction. Transactions bundles multiple steps into a single, all-or-nothing operation.

In this example, data is written to existing tables in the products.films schema (updated aggregate’s state) as well as a new products.films.outbox table (new domain events), wrapped in a transaction. Using CDC, the domain events from the products.films.outbox table are replicated to the pagila.films.film table. The replication of data between the two databases using CDC is also referred to as eventual consistency.

In this example, films in the pagila.films.film and products.films.outbox tables are represented in a denormalized, aggregated view of a film instead of the original, normalized relational multi-table structure. The table definition of the new pagila.films.film table is very different than that of the original Pagila products.films.films table. A concept such as a film, represented as an aggregate or entity, can be common to multiple Bounded Contexts, yet have a different definition.

Note the Confluent JDBC Source Connector (io.confluent.connect.jdbc.JdbcSourceConnector) used here will not work with PostgreSQL arrays, which would be ideal for one-to-many categories and actors columns. Arrays can be converted to text using ::text or by building value-delimited strings using string_agg aggregate function.

Given this table definition, the resulting data would look as follows.

The existing pagila.stores.inventory table has a foreign key constraint on the the pagila.films.film table. However, the films schema and associated tables have been migrated to the products database’s films schema. To overcome this challenge, we can:

- Create a new

pagila.films.filmtable - Continuously replicate data from the

productsdatabase to thepagila.films.filmtable data using CDC (see below) - Modify the

pagila.stores.inventorytable to take a dependency on the newfilmtable - Drop the duplicate tables and other objects from the

pagila.filmsschema

Debezium and Confluent for CDC

There are several technology choices for performing CDC. For this post, I have used RedHat’s Debezium connector for PostgreSQL and Debezium Outbox Event Router, and Confluent’s JDBC Sink Connector. Below, we see a typical example of a Kafka Connect Source Connector using the Debezium connector for PostgreSQL and a Sink Connector using the Confluent JDBC Sink Connector. The Source Connector streams changes from the products logs, using PostgreSQL’s Write-Ahead Logging (WAL) feature, to an Apache Kafka topic. A corresponding Sink Connector streams the changes from the Kafka topic to the pagila database.

Pattern 4: Multiple Database Instances

At some point in the evolution of a microservices-based application, it might become advantageous to separate the data into multiple database instances using the same database engine. Although managing numerous database instances may require more resources, there are also advantages. Each database instance will have independent connection configurations, roles, and administrators. Each database instance could run different versions of the database engine, and each could be upgraded and maintained independently.

With Pattern 4, as an organization continues to refine its application architecture, it might find that multiple database instances are beneficial to further separate and organize application data.

Below is one possible refactoring of the Pagila database, splitting the data between two database engines. The first database instance, postgres1, contains two databases, pagila and products. The second database instance, postgres2, contains a single database, products.

-----------+----------+-----------+---------------

Instance | Database | Schema | Table

-----------+----------+-----------+---------------

postgres1 | pagila | common | city

postgres1 | pagila | common | country

-----------+----------+-----------+---------------

postgres1 | pagila | customers | address

postgres1 | pagila | customers | customer

-----------+----------+-----------+---------------

postgres1 | pagila | films | actor

postgres1 | pagila | films | category

postgres1 | pagila | films | film

postgres1 | pagila | films | film_actor

postgres1 | pagila | films | film_category

postgres1 | pagila | films | language

-----------+----------+-----------+---------------

postgres1 | pagila | staff | address

postgres1 | pagila | staff | staff

-----------+----------+-----------+---------------

postgres1 | pagila | stores | address

postgres1 | pagila | stores | inventory

postgres1 | pagila | stores | store

-----------+----------+-----------+---------------

postgres1 | pagila | sales | payment

postgres1 | pagila | sales | rental

-----------+----------+-----------+---------------

postgres2 | products | films | actor

postgres2 | products | films | category

postgres2 | products | films | film

postgres2 | products | films | film_actor

postgres2 | products | films | film_category

postgres2 | products | films | language

Data Replication with CDC

Note the films schema is duplicated between the two databases, shown above. Again, using the CDC allows us to keep the six postgres1.pagila.films tables in sync with the six postgres2.products.films tables using CDC. In this example, we are not using the OutBox Pattern, as used previously in Pattern 3. Instead, we are replicating any changes to any of the tables in postgres2.products.films schema to the corresponding tables in the postgres1.pagila.films schema.

To ensure the tables stay in sync, the tables and other objects in the postgres1.pagila.films schema should be limited to read-only access (SELECT) for all users. The postgres2.products.films tables represent the authoritative source of data, the System of Record (SoR). Any inserts, updates, or deletes, must be made to these tables and replicated using CDC.

Pattern 5: Multiple Database Engines

AWS commonly uses the term ‘purpose-built databases.’ AWS offers over fifteen purpose-built database engines to support diverse data models, including relational, key-value, document, in-memory, graph, time series, wide column, and ledger. There may be instances where using multiple, purpose-built databases makes sense. Using different database engines allows architects to take advantage of the unique characteristics of each engine type to support diverse application requirements.

With Pattern 5, as an organization continues to refine its application architecture, it might choose to leverage multiple, different database engines.

Take for example an application that uses a combination of relational, NoSQL, and in-memory databases to persist data. In addition to PostgreSQL, the application benefits from moving a certain subset of its relational data to a non-relational, high-performance key-value store, such as Amazon DynamoDB. Furthermore, the application implements a database cache using an ultra-fast in-memory database, such as Amazon ElastiCache for Redis.

Below is one possible refactoring of the Pagila database, splitting the data between two different database engines, PostgreSQL and Amazon DynamoDB.

-----------+----------+-----------+-----------

Instance | Database | Schema | Table

-----------+----------+-----------+-----------

postgres1 | pagila | common | city

postgres1 | pagila | common | country

-----------+----------+-----------+-----------

postgres1 | pagila | customers | address

postgres1 | pagila | customers | customer

-----------+----------+-----------+-----------

postgres1 | pagila | films | film

-----------+----------+-----------+-----------

postgres1 | sales | sales | payment

postgres1 | sales | sales | rental

-----------+----------+-----------+-----------

postgres1 | pagila | staff | address

postgres1 | pagila | staff | staff

-----------+----------+-----------+-----------

postgres1 | pagila | stores | address

postgres1 | pagila | stores | film

postgres1 | pagila | stores | inventory

postgres1 | pagila | stores | store

-----------+----------+-----------+-----------

DynamoDB | - | - | Films

The assumption is that based on the application’s access patterns for film data, the application could benefit from the addition of a non-relational, high-performance key-value store. Further, the film-related data entities, such as a film , category, and actor, could be modeled using DynamoDB’s single-table data model architecture. In this model, multiple entity types can be stored in the same table. If necessary, to replicate data back to the PostgreSQL instance from the DynamoBD instance, we can perform CDC with DynamoDB Streams.

Films data model for DynamoDB using NoSQL Workbench

Films data modelCQRS

Command Query Responsibility Segregation (CQRS), a popular software architectural pattern, is another use case for multiple database engines. The CQRS pattern is, as the name implies, “a software design pattern that separates command activities from query activities. In CQRS parlance, a command writes data to a data source. A query reads data from a data source. CQRS addresses the problem of data access performance degradation when applications running at web-scale have too much burden placed on the physical database and the network on which it resides” (RedHat). CQRS commonly uses one database engine optimized for writes and a separate database optimized for reads.

Conclusion

Embracing a microservices-based application architecture may have many business advantages for an organization. However, ignoring the application’s existing databases can negate many of the benefits of microservices. This post examined several common patterns for refactoring relational databases to match a modern microservices-based application architecture.

This blog represents my own viewpoints and not of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners. All diagrams and illustrations are property of the author.

Java Development with Microsoft SQL Server: Calling Microsoft SQL Server Stored Procedures from Java Applications Using JDBC

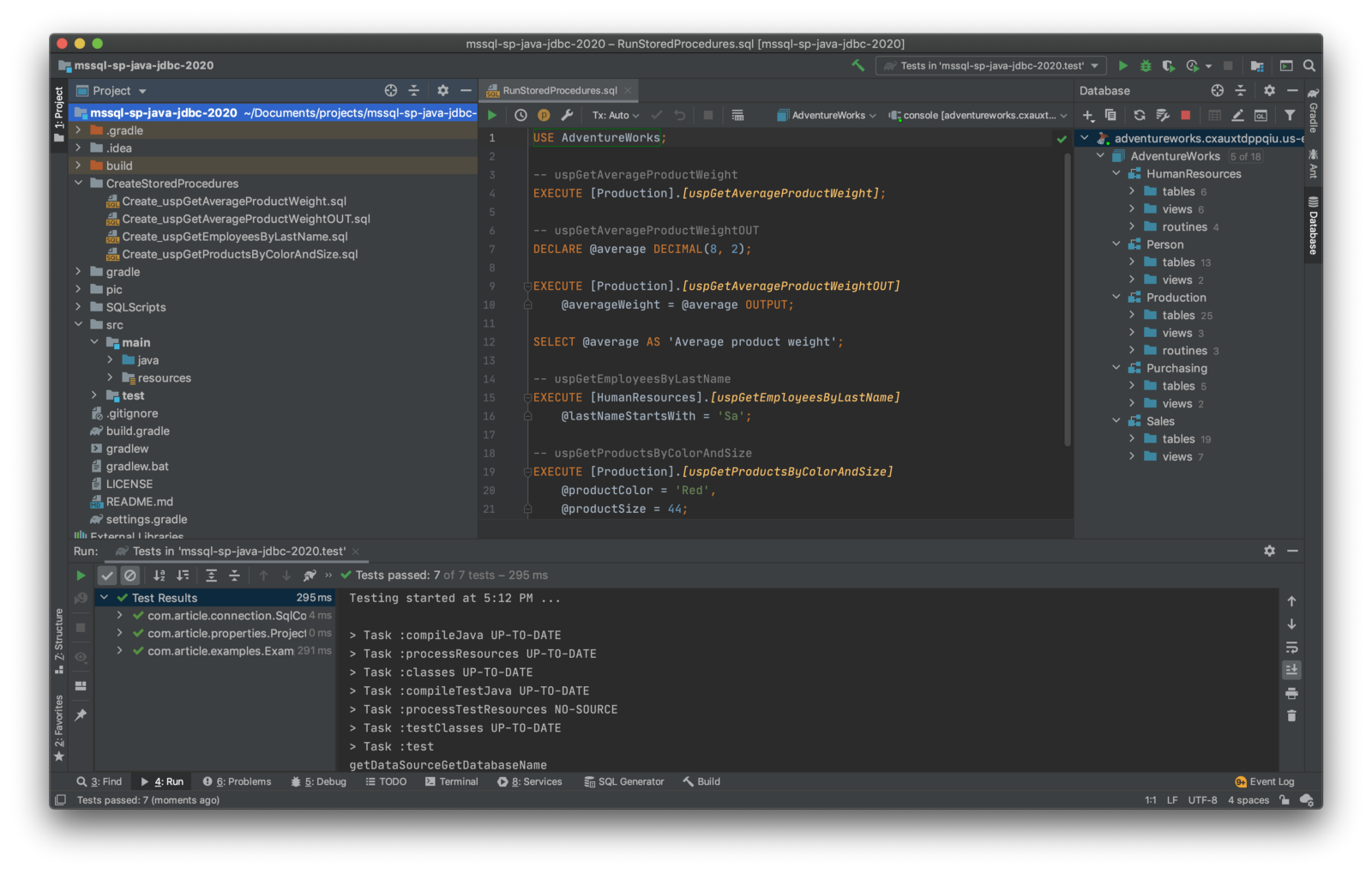

Posted by Gary A. Stafford in AWS, Java Development, Software Development, SQL, SQL Server Development on September 9, 2020

Introduction

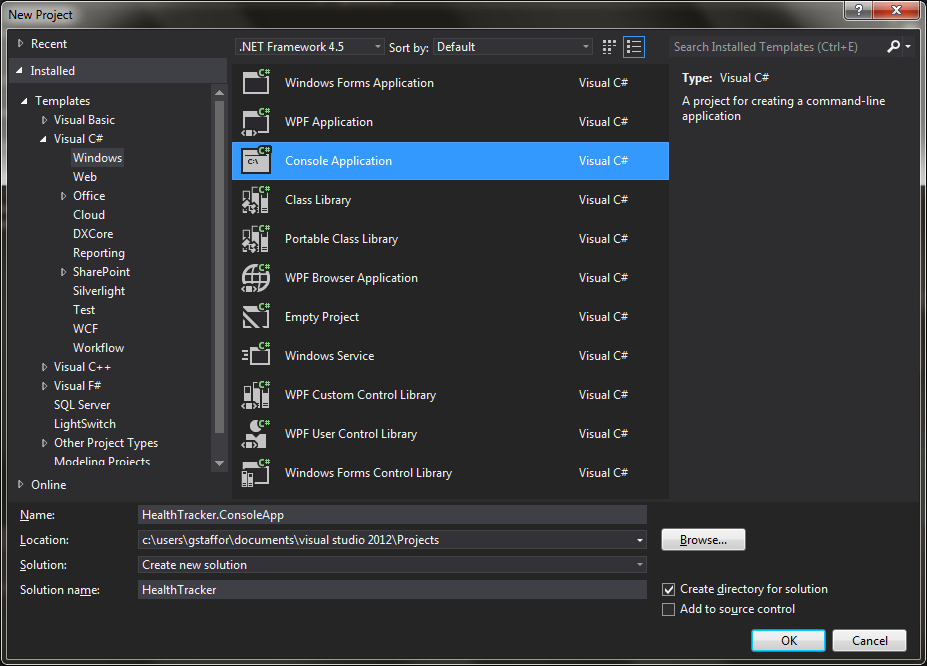

Enterprise software solutions often combine multiple technology platforms. Accessing an Oracle database via a Microsoft .NET application and vice-versa, accessing Microsoft SQL Server from a Java-based application is common. In this post, we will explore the use of the JDBC (Java Database Connectivity) API to call stored procedures from a Microsoft SQL Server 2017 database and return data to a Java 11-based console application.

The objectives of this post include:

- Demonstrate the differences between using static SQL statements and stored procedures to return data.

- Demonstrate three types of JDBC statements to return data:

Statement,PreparedStatement, andCallableStatement. - Demonstrate how to call stored procedures with input and output parameters.

- Demonstrate how to return single values and a result set from a database using stored procedures.

Why Stored Procedures?

To access data, many enterprise software organizations require their developers to call stored procedures within their code as opposed to executing static T-SQL (Transact-SQL) statements against the database. There are several reasons stored procedures are preferred:

- Optimization: Stored procedures are often written by DBAs or database developers who specialize in database development. They understand the best way to construct queries for optimal performance and minimal load on the database server. Think of it as a developer using an API to interact with the database.

- Safety and Security: Stored procedures are considered safer and more secure than static SQL statements. The stored procedure provides tight control over the content of the queries, preventing malicious or unintentionally destructive code from being executed against the database.

- Error Handling: Stored procedures can contain logic for handling errors before they bubble up to the application layer and possibly to the end-user.

AdventureWorks 2017 Database

For brevity, I will use an existing and well-known Microsoft SQL Server database, AdventureWorks. The AdventureWorks database was originally published by Microsoft for SQL Server 2008. Although a bit dated architecturally, the database comes prepopulated with plenty of data for demonstration purposes.

HumanResources schema, one of five schemas within the AdventureWorks databaseFor the demonstration, I have created an Amazon RDS for SQL Server 2017 Express Edition instance on AWS. You have several options for deploying SQL Server, including AWS, Microsoft Azure, Google Cloud, or installed on your local workstation.

There are many methods to deploy the AdventureWorks database to Microsoft SQL Server. For this post’s demonstration, I used the AdventureWorks2017.bak backup file, which I copied to Amazon S3. Then, I enabled and configured the native backup and restore feature of Amazon RDS for SQL Server to import and install the backup.

DROP DATABASE IF EXISTS AdventureWorks;

GO

EXECUTE msdb.dbo.rds_restore_database

@restore_db_name='AdventureWorks',

@s3_arn_to_restore_from='arn:aws:s3:::my-bucket/AdventureWorks2017.bak',

@type='FULL',

@with_norecovery=0;

-- get task_id from output (e.g. 1)

EXECUTE msdb.dbo.rds_task_status

@db_name='AdventureWorks',

@task_id=1;

Install Stored Procedures

For the demonstration, I have added four stored procedures to the AdventureWorks database to use in this post. To follow along, you will need to install these stored procedures, which are included in the GitHub project.

Data Sources, Connections, and Properties

Using the latest Microsoft JDBC Driver 8.4 for SQL Server (ver. 8.4.1.jre11), we create a SQL Server data source, com.microsoft.sqlserver.jdbc.SQLServerDataSource, and database connection, java.sql.Connection. There are several patterns for creating and working with JDBC data sources and connections. This post does not necessarily focus on the best practices for creating or using either. In this example, the application instantiates a connection class, SqlConnection.java, which in turn instantiates the java.sql.Connection and com.microsoft.sqlserver.jdbc.SQLServerDataSource objects. The data source’s properties are supplied from an instance of a singleton class, ProjectProperties.java. This properties class instantiates the java.util.Properties class, which reads values from a configuration properties file, config.properties. On startup, the application creates the database connection, calls each of the example methods, and then closes the connection.

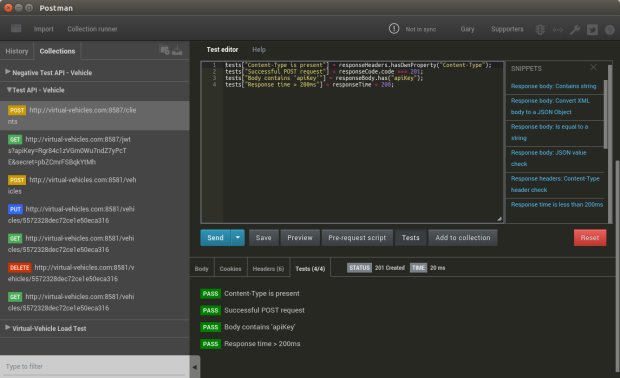

Examples

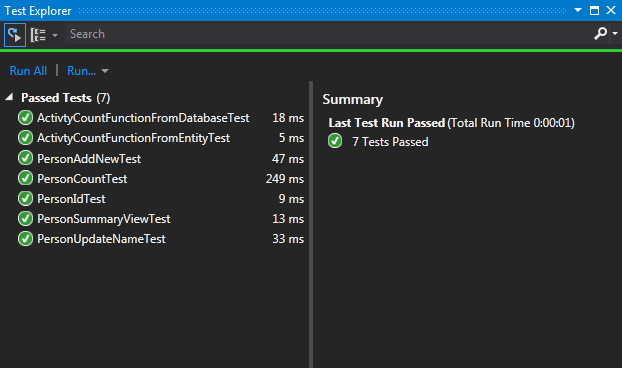

For each example, I will show the stored procedure, if applicable, followed by the Java method that calls the procedure or executes the static SQL statement. I have left out the data source and connection code in the article. Again, a complete copy of all the code for this article is available on GitHub, including Java source code, SQL statements, helper SQL scripts, and a set of basic JUnit tests.

To run the JUnit unit tests, using Gradle, which the project is based on, use the ./gradlew cleanTest test --warning-mode none command.

To build and run the application, using Gradle, which the project is based on, use the ./gradlew run --warning-mode none command.

Example 1: SQL Statement

Before jumping into stored procedures, we will start with a simple static SQL statement. This example’s method, getAverageProductWeightST, uses the java.sql.Statement class. According to Oracle’s JDBC documentation, the Statement object is used for executing a static SQL statement and returning the results it produces. This SQL statement calculates the average weight of all products in the AdventureWorks database. It returns a solitary double numeric value. This example demonstrates one of the simplest methods for returning data from SQL Server.

/**

* Statement example, no parameters, returns Integer

*

* @return Average weight of all products

*/

public double getAverageProductWeightST() {

double averageWeight = 0;

Statement stmt = null;

ResultSet rs = null;

try {

stmt = connection.getConnection().createStatement();

String sql = "WITH Weights_CTE(AverageWeight) AS" +

"(" +

" SELECT [Weight] AS [AverageWeight]" +

" FROM [Production].[Product]" +

" WHERE [Weight] > 0" +

" AND [WeightUnitMeasureCode] = 'LB'" +

" UNION" +

" SELECT [Weight] * 0.00220462262185 AS [AverageWeight]" +

" FROM [Production].[Product]" +

" WHERE [Weight] > 0" +

" AND [WeightUnitMeasureCode] = 'G')" +

"SELECT ROUND(AVG([AverageWeight]), 2)" +

"FROM [Weights_CTE];";

rs = stmt.executeQuery(sql);

if (rs.next()) {

averageWeight = rs.getDouble(1);

}

} catch (Exception ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.SEVERE, null, ex);

} finally {

if (rs != null) {

try {

rs.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

if (stmt != null) {

try {

stmt.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

}

return averageWeight;

}

Example 2: Prepared Statement

Next, we will execute almost the same static SQL statement as in Example 1. The only change is the addition of the column name, averageWeight. This allows us to parse the results by column name, making the code easier to understand as opposed to using the numeric index of the column as in Example 1.

Also, instead of using the java.sql.Statement class, we use the java.sql.PreparedStatement class. According to Oracle’s documentation, a SQL statement is precompiled and stored in a PreparedStatement object. This object can then be used to execute this statement multiple times efficiently.

/**

* PreparedStatement example, no parameters, returns Integer

*

* @return Average weight of all products

*/

public double getAverageProductWeightPS() {

double averageWeight = 0;

PreparedStatement pstmt = null;

ResultSet rs = null;

try {

String sql = "WITH Weights_CTE(averageWeight) AS" +

"(" +

" SELECT [Weight] AS [AverageWeight]" +

" FROM [Production].[Product]" +

" WHERE [Weight] > 0" +

" AND [WeightUnitMeasureCode] = 'LB'" +

" UNION" +

" SELECT [Weight] * 0.00220462262185 AS [AverageWeight]" +

" FROM [Production].[Product]" +

" WHERE [Weight] > 0" +

" AND [WeightUnitMeasureCode] = 'G')" +

"SELECT ROUND(AVG([AverageWeight]), 2) AS [averageWeight]" +

"FROM [Weights_CTE];";

pstmt = connection.getConnection().prepareStatement(sql);

rs = pstmt.executeQuery();

if (rs.next()) {

averageWeight = rs.getDouble("averageWeight");

}

} catch (Exception ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.SEVERE, null, ex);

} finally {

if (rs != null) {

try {

rs.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

if (pstmt != null) {

try {

pstmt.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

}

return averageWeight;

}

Example 3: Callable Statement

In this example, the average product weight query has been moved into a stored procedure. The procedure is identical in functionality to the static statement in the first two examples. To call the stored procedure, we use the java.sql.CallableStatement class. According to Oracle’s documentation, the CallableStatement extends PreparedStatement. It is the interface used to execute SQL stored procedures. The CallableStatement accepts both input and output parameters; however, this simple example does not use either. Like the previous two examples, the procedure returns a double numeric value.

CREATE OR

ALTER PROCEDURE [Production].[uspGetAverageProductWeight]

AS

BEGIN

SET NOCOUNT ON;

WITH

Weights_CTE(AverageWeight)

AS

(

SELECT [Weight] AS [AverageWeight]

FROM [Production].[Product]

WHERE [Weight] > 0

AND [WeightUnitMeasureCode] = 'LB'

UNION

SELECT [Weight] * 0.00220462262185 AS [AverageWeight]

FROM [Production].[Product]

WHERE [Weight] > 0

AND [WeightUnitMeasureCode] = 'G'

)

SELECT ROUND(AVG([AverageWeight]), 2)

FROM [Weights_CTE];

END

GO

The calling Java method is shown below.

/**

* CallableStatement, no parameters, returns Integer

*

* @return Average weight of all products

*/

public double getAverageProductWeightCS() {

CallableStatement cstmt = null;

double averageWeight = 0;

ResultSet rs = null;

try {

cstmt = connection.getConnection().prepareCall(

"{call [Production].[uspGetAverageProductWeight]}");

cstmt.execute();

rs = cstmt.getResultSet();

if (rs.next()) {

averageWeight = rs.getDouble(1);

}

} catch (Exception ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.SEVERE, null, ex);

} finally {

if (rs != null) {

try {

rs.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.SEVERE, null, ex);

}

}

if (cstmt != null) {

try {

cstmt.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

}

return averageWeight;

}

Example 4: Calling a Stored Procedure with an Output Parameter

In this example, we use almost the same stored procedure as in Example 3. The only difference is the inclusion of an output parameter. This time, instead of returning a result set with a value in a single unnamed column, the column has a name, averageWeight. We can now call that column by name when retrieving the value.

The stored procedure patterns found in Examples 3 and 4 are both commonly used. One procedure uses an output parameter, and one not, both return the same value(s). You can use the CallableStatement to for either type.

CREATE OR

ALTER PROCEDURE [Production].[uspGetAverageProductWeightOUT]@averageWeight DECIMAL(8, 2) OUT

AS

BEGIN

SET NOCOUNT ON;

WITH

Weights_CTE(AverageWeight)

AS

(

SELECT [Weight] AS [AverageWeight]

FROM [Production].[Product]

WHERE [Weight] > 0

AND [WeightUnitMeasureCode] = 'LB'

UNION

SELECT [Weight] * 0.00220462262185 AS [AverageWeight]

FROM [Production].[Product]

WHERE [Weight] > 0

AND [WeightUnitMeasureCode] = 'G'

)

SELECT @averageWeight = ROUND(AVG([AverageWeight]), 2)

FROM [Weights_CTE];

END

GO

The calling Java method is shown below.

/**

* CallableStatement example, (1) output parameter, returns Integer

*

* @return Average weight of all products

*/

public double getAverageProductWeightOutCS() {

CallableStatement cstmt = null;

double averageWeight = 0;

try {

cstmt = connection.getConnection().prepareCall(

"{call [Production].[uspGetAverageProductWeightOUT](?)}");

cstmt.registerOutParameter("averageWeight", Types.DECIMAL);

cstmt.execute();

averageWeight = cstmt.getDouble("averageWeight");

} catch (Exception ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.SEVERE, null, ex);

} finally {

if (cstmt != null) {

try {

cstmt.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

}

return averageWeight;

}

Example 5: Calling a Stored Procedure with an Input Parameter

In this example, the procedure returns a result set, java.sql.ResultSet, of employees whose last name starts with a particular sequence of characters (e.g., ‘M’ or ‘Sa’). The sequence of characters is passed as an input parameter, lastNameStartsWith, to the stored procedure using the CallableStatement.

The method making the call iterates through the rows of the result set returned by the stored procedure, concatenating multiple columns to form the employee’s full name as a string. Each full name string is then added to an ordered collection of strings, a List<String> object. The List instance is returned by the method. You will notice this procedure takes a little longer to run because of the use of the LIKE operator. The database server has to perform pattern matching on each last name value in the table to determine the result set.

CREATE OR

ALTER PROCEDURE [HumanResources].[uspGetEmployeesByLastName]

@lastNameStartsWith VARCHAR(20) = 'A'

AS

BEGIN

SET NOCOUNT ON;

SELECT p.[FirstName], p.[MiddleName], p.[LastName], p.[Suffix], e.[JobTitle], m.[EmailAddress]

FROM [HumanResources].[Employee] AS e

LEFT JOIN [Person].[Person] p ON e.[BusinessEntityID] = p.[BusinessEntityID]

LEFT JOIN [Person].[EmailAddress] m ON e.[BusinessEntityID] = m.[BusinessEntityID]

WHERE e.[CurrentFlag] = 1

AND p.[PersonType] = 'EM'

AND p.[LastName] LIKE @lastNameStartsWith + '%'

ORDER BY p.[LastName], p.[FirstName], p.[MiddleName]

END

GO

The calling Java method is shown below.

/**

* CallableStatement example, (1) input parameter, returns ResultSet

*

* @param lastNameStartsWith

* @return List of employee names

*/

public List<String> getEmployeesByLastNameCS(String lastNameStartsWith) {

CallableStatement cstmt = null;

ResultSet rs = null;

List<String> employeeFullName = new ArrayList<>();

try {

cstmt = connection.getConnection().prepareCall(

"{call [HumanResources].[uspGetEmployeesByLastName](?)}",

ResultSet.TYPE_SCROLL_INSENSITIVE,

ResultSet.CONCUR_READ_ONLY);

cstmt.setString("lastNameStartsWith", lastNameStartsWith);

boolean results = cstmt.execute();

int rowsAffected = 0;

// Protects against lack of SET NOCOUNT in stored procedure

while (results || rowsAffected != -1) {

if (results) {

rs = cstmt.getResultSet();

break;

} else {

rowsAffected = cstmt.getUpdateCount();

}

results = cstmt.getMoreResults();

}

while (rs.next()) {

employeeFullName.add(

rs.getString("LastName") + ", "

+ rs.getString("FirstName") + " "

+ rs.getString("MiddleName"));

}

} catch (Exception ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.SEVERE, null, ex);

} finally {

if (rs != null) {

try {

rs.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

if (cstmt != null) {

try {

cstmt.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

}

return employeeFullName;

}

Example 6: Converting a Result Set to Ordered Collection of Objects

In this last example, we pass two input parameters, productColor and productSize, to a slightly more complex stored procedure. The stored procedure returns a result set containing several columns of product information. This time, the example’s method iterates through the result set returned by the procedure and constructs an ordered collection of products, List<Product> object. The Product objects in the list are instances of the Product.java POJO class. The method converts each results set’s row’s field value into a Product property (e.g., Product.Size, Product.Model). Using a collection is a common method for persisting data from a result set in an application.

CREATE OR

ALTER PROCEDURE [Production].[uspGetProductsByColorAndSize]

@productColor VARCHAR(20),

@productSize INTEGER

AS

BEGIN

SET NOCOUNT ON;

SELECT p.[ProductNumber], m.[Name] AS [Model], p.[Name] AS [Product], p.[Color], p.[Size]

FROM [Production].[ProductModel] AS m

INNER JOIN

[Production].[Product] AS p ON m.[ProductModelID] = p.[ProductModelID]

WHERE (p.[Color] = @productColor)

AND (p.[Size] = @productSize)

ORDER BY p.[ProductNumber], [Model], [Product]

END

GO

The calling Java method is shown below.

/**

* CallableStatement example, (2) input parameters, returns ResultSet

*

* @param color

* @param size

* @return List of Product objects

*/

public List<Product> getProductsByColorAndSizeCS(String color, String size) {

CallableStatement cstmt = null;

ResultSet rs = null;

List<Product> productList = new ArrayList<>();

try {

cstmt = connection.getConnection().prepareCall(

"{call [Production].[uspGetProductsByColorAndSize](?, ?)}",

ResultSet.TYPE_SCROLL_INSENSITIVE,

ResultSet.CONCUR_READ_ONLY);

cstmt.setString("productColor", color);

cstmt.setString("productSize", size);

boolean results = cstmt.execute();

int rowsAffected = 0;

// Protects against lack of SET NOCOUNT in stored procedure

while (results || rowsAffected != -1) {

if (results) {

rs = cstmt.getResultSet();

break;

} else {

rowsAffected = cstmt.getUpdateCount();

}

results = cstmt.getMoreResults();

}

while (rs.next()) {

Product product = new Product(

rs.getString("Product"),

rs.getString("ProductNumber"),

rs.getString("Color"),

rs.getString("Size"),

rs.getString("Model"));

productList.add(product);

}

} catch (Exception ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.SEVERE, null, ex);

} finally {

if (rs != null) {

try {

rs.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

if (cstmt != null) {

try {

cstmt.close();

} catch (SQLException ex) {

Logger.getLogger(RunExamples.class.getName()).

log(Level.WARNING, null, ex);

}

}

}

return productList;

}

Proper T-SQL: Schema Reference and Brackets

You will notice in all T-SQL statements, I refer to the schema as well as the table or stored procedure name (e.g., {call [Production].[uspGetAverageProductWeightOUT](?)}). According to Microsoft, it is always good practice to refer to database objects by a schema name and the object name, separated by a period; that even includes the default schema (e.g., dbo).

You will also notice I wrap the schema and object names in square brackets (e.g., SELECT [ProductNumber] FROM [Production].[ProductModel]). The square brackets are to indicate that the name represents an object and not a reserved word (e.g, CURRENT or NATIONAL). By default, SQL Server adds these to make sure the scripts it generates run correctly.

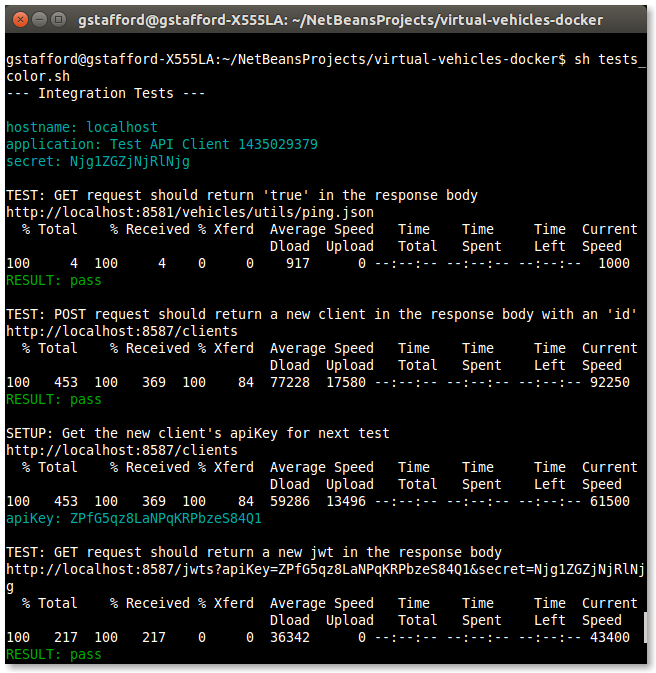

Running the Examples

The application will display the name of the method being called, a description, the duration of time it took to retrieve the data, and the results returned by the method.

Below, we see the results.

SQL Statement Performance

This post is certainly not about SQL performance, demonstrated by the fact I am only using Amazon RDS for SQL Server 2017 Express Edition on a single, very underpowered db.t2.micro Amazon RDS instance types. However, I have added a timer feature, ProcessTimer.java class, to capture the duration of time each example takes to return data, measured in milliseconds. The ProcessTimer.java class is part of the project code. Using the timer, you should observe significant differences between the first run and proceeding runs of the application for several of the called methods. The time difference is a result of several factors, primarily pre-compilation of the SQL statements and SQL Server plan caching.

The effects of these two factors are easily demonstrated by clearing the SQL Server plan cache (see SQL script below) using DBCC (Database Console Commands) statements. and then running the application twice in a row. The second time, pre-compilation and plan caching should result in significantly faster times for the prepared statements and callable statements, in Examples 2–6. In the two random runs shown below, we see up to a 497% improvement in query time.

USE AdventureWorks;

DBCC FREESYSTEMCACHE('SQL Plans');

GO

CHECKPOINT;

GO

-- Impossible to run with Amazon RDS for Microsoft SQL Server on AWS

-- DBCC DROPCLEANBUFFERS;

-- GO

The first run results are shown below.

SQL SERVER STATEMENT EXAMPLES

======================================

Method: GetAverageProductWeightST

Description: Statement, no parameters, returns Integer

Duration (ms): 122

Results: Average product weight (lb): 12.43

---

Method: GetAverageProductWeightPS

Description: PreparedStatement, no parameters, returns Integer

Duration (ms): 146

Results: Average product weight (lb): 12.43

---

Method: GetAverageProductWeightCS

Description: CallableStatement, no parameters, returns Integer

Duration (ms): 72

Results: Average product weight (lb): 12.43

---

Method: GetAverageProductWeightOutCS

Description: CallableStatement, (1) output parameter, returns Integer

Duration (ms): 623

Results: Average product weight (lb): 12.43

---

Method: GetEmployeesByLastNameCS

Description: CallableStatement, (1) input parameter, returns ResultSet

Duration (ms): 830

Results: Last names starting with 'Sa': 7

Last employee found: Sandberg, Mikael Q

---

Method: GetProductsByColorAndSizeCS

Description: CallableStatement, (2) input parameter, returns ResultSet

Duration (ms): 427

Results: Products found (color: 'Red', size: '44'): 7

First product: Road-650 Red, 44 (BK-R50R-44)

---

The second run results are shown below.

SQL SERVER STATEMENT EXAMPLES

======================================

Method: GetAverageProductWeightST

Description: Statement, no parameters, returns Integer

Duration (ms): 116

Results: Average product weight (lb): 12.43

---

Method: GetAverageProductWeightPS

Description: PreparedStatement, no parameters, returns Integer

Duration (ms): 89

Results: Average product weight (lb): 12.43

---

Method: GetAverageProductWeightCS

Description: CallableStatement, no parameters, returns Integer

Duration (ms): 80

Results: Average product weight (lb): 12.43

---

Method: GetAverageProductWeightOutCS

Description: CallableStatement, (1) output parameter, returns Integer

Duration (ms): 340

Results: Average product weight (lb): 12.43

---

Method: GetEmployeesByLastNameCS

Description: CallableStatement, (1) input parameter, returns ResultSet

Duration (ms): 139

Results: Last names starting with 'Sa': 7

Last employee found: Sandberg, Mikael Q

---

Method: GetProductsByColorAndSizeCS

Description: CallableStatement, (2) input parameter, returns ResultSet

Duration (ms): 208

Results: Products found (color: 'Red', size: '44'): 7

First product: Road-650 Red, 44 (BK-R50R-44)

---

Conclusion

This post has demonstrated several methods for querying and calling stored procedures from a SQL Server 2017 database using JDBC with the Microsoft JDBC Driver 8.4 for SQL Server. Although the examples are quite simple, the same patterns can be used with more complex stored procedures, with multiple input and output parameters, which not only select, but insert, update, and delete data.

There are some limitations of the Microsoft JDBC Driver for SQL Server you should be aware of by reading the documentation. However, for most tasks that require database interaction, the Driver provides adequate functionality with SQL Server.

This blog represents my own viewpoints and not of my employer, Amazon Web Services.

Getting Started with PostgreSQL using Amazon RDS, CloudFormation, pgAdmin, and Python

Posted by Gary A. Stafford in AWS, Cloud, Python, Software Development on August 9, 2019

Introduction

In the following post, we will explore how to get started with Amazon Relational Database Service (RDS) for PostgreSQL. CloudFormation will be used to build a PostgreSQL master database instance and a single read replica in a new VPC. AWS Systems Manager Parameter Store will be used to store our CloudFormation configuration values. Amazon RDS Event Notifications will send text messages to our mobile device to let us know when the RDS instances are ready for use. Once running, we will examine a variety of methods to interact with our database instances, including pgAdmin, Adminer, and Python.

Technologies

The primary technologies used in this post include the following.

PostgreSQL

According to its website, PostgreSQL, commonly known as Postgres, is the world’s most advanced Open Source relational database. Originating at UC Berkeley in 1986, PostgreSQL has more than 30 years of active core development. PostgreSQL has earned a strong reputation for its proven architecture, reliability, data integrity, robust feature set, extensibility. PostgreSQL runs on all major operating systems and has been ACID-compliant since 2001.

According to its website, PostgreSQL, commonly known as Postgres, is the world’s most advanced Open Source relational database. Originating at UC Berkeley in 1986, PostgreSQL has more than 30 years of active core development. PostgreSQL has earned a strong reputation for its proven architecture, reliability, data integrity, robust feature set, extensibility. PostgreSQL runs on all major operating systems and has been ACID-compliant since 2001.

Amazon RDS for PostgreSQL

Amazon RDS for PostgreSQL makes it easy to set up, operate, and scale PostgreSQL deployments in the cloud. Amazon RDS supports the latest PostgreSQL version 11, which includes several enhancements to performance, robustness, transaction management, query parallelism, and more.

AWS CloudFormation

According to Amazon, CloudFormation provides a common language to describe and provision all the infrastructure resources within AWS-based cloud environments. CloudFormation allows you to use a JSON- or YAML-based template to model and provision all the resources needed for your applications across all AWS regions and accounts, in an automated and secure manner.

Demonstration

Architecture

Below, we see an architectural representation of what will be built in the demonstration. This is not a typical three-tier AWS architecture, wherein the RDS instances would be placed in private subnets (data tier) and accessible only by the application tier, running on AWS. The architecture for the demonstration is designed for interacting with RDS through external database clients such as pgAdmin, and applications like our local Python scripts, detailed later in the post.

Source Code

All source code for this post is available on GitHub in a single public repository, postgres-rds-demo.

. ├── LICENSE.md ├── README.md ├── cfn-templates │ ├── event.template │ ├── rds.template ├── parameter_store_values.sh ├── python-scripts │ ├── create_pagila_data.py │ ├── database.ini │ ├── db_config.py │ ├── query_postgres.py │ ├── requirements.txt │ └── unit_tests.py ├── sql-scripts │ ├── pagila-insert-data.sql │ └── pagila-schema.sql └── stack.yml

To clone the GitHub repository, execute the following command.

git clone --branch master --single-branch --depth 1 --no-tags \ https://github.com/garystafford/aws-rds-postgres.git

Prerequisites

For this demonstration, I will assume you already have an AWS account. Further, that you have the latest copy of the AWS CLI and Python 3 installed on your development machine. Optionally, for pgAdmin and Adminer, you will also need to have Docker installed.

Steps

In this demonstration, we will perform the following steps.

- Put CloudFormation configuration values in Parameter Store;

- Execute CloudFormation templates to create AWS resources;

- Execute SQL scripts using Python to populate the new database with sample data;

- Configure pgAdmin and Python connections to RDS PostgreSQL instances;

AWS Systems Manager Parameter Store

With AWS, it is typical to use services like AWS Systems Manager Parameter Store and AWS Secrets Manager to store overt, sensitive, and secret configuration values. These values are utilized by your code, or from AWS services like CloudFormation. Parameter Store allows us to follow the proper twelve-factor, cloud-native practice of separating configuration from code.

To demonstrate the use of Parameter Store, we will place a few of our CloudFormation configuration items into Parameter Store. The demonstration’s GitHub repository includes a shell script, parameter_store_values.sh, which will put the necessary parameters into Parameter Store.

Below, we see several of the demo’s configuration values, which have been put into Parameter Store.

SecureString

Whereas our other parameters are stored in Parameter Store as String datatypes, the database’s master user password is stored as a SecureString data-type. Parameter Store uses an AWS Key Management Service (KMS) customer master key (CMK) to encrypt the SecureString parameter value.

SMS Text Alert Option

Before running the Parameter Store script, you will need to change the /rds_demo/alert_phone parameter value in the script (shown below) to your mobile device number, including country code, such as ‘+12038675309’. Amazon SNS will use it to send SMS messages, using Amazon RDS Event Notification. If you don’t want to use this messaging feature, simply ignore this parameter and do not execute the event.template CloudFormation template in the proceeding step.

aws ssm put-parameter \ --name /rds_demo/alert_phone \ --type String \ --value "your_phone_number_here" \ --description "RDS alert SMS phone number" \ --overwrite

Run the following command to execute the shell script, parameter_store_values.sh, which will put the necessary parameters into Parameter Store.

sh ./parameter_store_values.sh

CloudFormation Templates

The GitHub repository includes two CloudFormation templates, cfn-templates/event.template and cfn-templates/rds.template. This event template contains two resources, which are an AWS SNS Topic and an AWS RDS Event Subscription. The RDS template also includes several resources, including a VPC, Internet Gateway, VPC security group, two public subnets, one RDS master database instance, and an AWS RDS Read Replica database instance.

The resources are split into two CloudFormation templates so we can create the notification resources, first, independently of creating or deleting the RDS instances. This will ensure we get all our SMS alerts about both the creation and deletion of the databases.

Template Parameters

The two CloudFormation templates contain a total of approximately fifteen parameters. For most, you can use the default values I have set or chose to override them. Four of the parameters will be fulfilled from Parameter Store. Of these, the master database password is treated slightly differently because it is secure (encrypted in Parameter Store). Below is a snippet of the template showing both types of parameters. The last two are fulfilled from Parameter Store.

DBInstanceClass:

Type: String

Default: "db.t3.small"

DBStorageType:

Type: String

Default: "gp2"

DBUser:

Type: String

Default: "{{resolve:ssm:/rds_demo/master_username:1}}"

DBPassword:

Type: String

Default: "{{resolve:ssm-secure:/rds_demo/master_password:1}}"

NoEcho: True

Choosing the default CloudFormation parameter values will result in two minimally-configured RDS instances running the PostgreSQL 11.4 database engine on a db.t3.small instance with 10 GiB of General Purpose (SSD) storage. The db.t3 DB instance is part of the latest generation burstable performance instance class. The master instance is not configured for Multi-AZ high availability. However, the master and read replica each run in a different Availability Zone (AZ) within the same AWS Region.

Parameter Versioning

When placing parameters into Parameter Store, subsequent updates to a parameter result in the version number of that parameter being incremented. Note in the examples above, the version of the parameter is required by CloudFormation, here, ‘1’. If you chose to update a value in Parameter Store, thus incrementing the parameter’s version, you will also need to update the corresponding version number in the CloudFormation template’s parameter.

{

"Parameter": {

"Name": "/rds_demo/rds_username",

"Type": "String",

"Value": "masteruser",

"Version": 1,

"LastModifiedDate": 1564962073.774,

"ARN": "arn:aws:ssm:us-east-1:1234567890:parameter/rds_demo/rds_username"

}

}

Validating Templates

Although I have tested both templates, I suggest validating the templates yourself, as you usually would for any CloudFormation template you are creating. You can use the AWS CLI CloudFormation validate-template CLI command to validate the template. Alternately, or I suggest additionally, you can use CloudFormation Linter, cfn-lint command.

aws cloudformation validate-template \ --template-body file://cfn-templates/rds.template cfn-lint -t cfn-templates/cfn-templates/rds.template

Create the Stacks

To execute the first CloudFormation template and create a CloudFormation Stack containing the two event notification resources, run the following create-stack CLI command.

aws cloudformation create-stack \ --template-body file://cfn-templates/event.template \ --stack-name RDSEventDemoStack

The first stack only takes less than one minute to create. Using the AWS CloudFormation Console, make sure the first stack completes successfully before creating the second stack with the command, below.

aws cloudformation create-stack \ --template-body file://cfn-templates/rds.template \ --stack-name RDSDemoStack

Wait for my Text

In my tests, the CloudFormation RDS stack takes an average of 25–30 minutes to create and 15–20 minutes to delete, which can seem like an eternity. You could use the AWS CloudFormation console (shown below) or continue to use the CLI to follow the progress of the RDS stack creation.

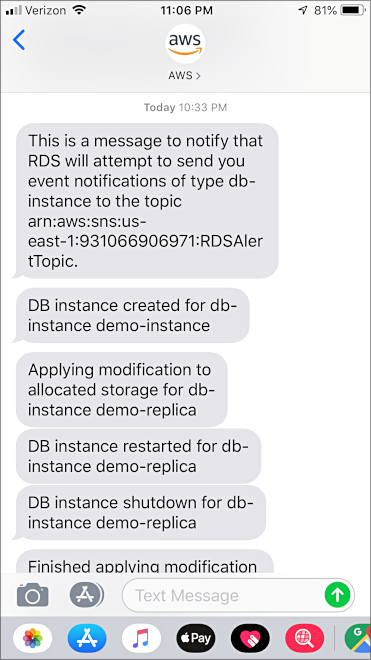

However, if you recall, the CloudFormation event template creates an AWS RDS Event Subscription. This resource will notify us when the databases are ready by sending text messages to our mobile device.

In the CloudFormation events template, the RDS Event Subscription is configured to generate Amazon Simple Notification Service (SNS) notifications for several specific event types, including RDS instance creation and deletion.

MyEventSubscription:

Properties:

Enabled: true

EventCategories:

- availability

- configuration change

- creation

- deletion

- failover

- failure

- recovery

SnsTopicArn:

Ref: MyDBSNSTopic

SourceType: db-instance

Type: AWS::RDS::EventSubscription

Amazon SNS will send SMS messages to the mobile number you placed into Parameter Store. Below, we see messages generated during the creation of the two instances, displayed on an Apple iPhone.

Amazon RDS Dashboard

Once the RDS CloudFormation stack has successfully been built, the easiest way to view the results is using the Amazon RDS Dashboard, as shown below. Here we see both the master and read replica instances have been created and are available for our use.

The RDS dashboard offers CloudWatch monitoring of each RDS instance.

The RDS dashboard also provides detailed configuration information about each RDS instance.

The RDS dashboard’s Connection & security tab is where we can obtain connection information about our RDS instances, including the RDS instance’s endpoints. Endpoints information will be required in the next part of the demonstration.

Sample Data

Now that we have our PostgreSQL database instance and read replica successfully provisioned and configured on AWS, with an empty database, we need some test data. There are several sources of sample PostgreSQL databases available on the internet to explore. We will use the Pagila sample movie rental database by pgFoundry. Although the database is several years old, it provides a relatively complex relational schema (table relationships shown below) and plenty of sample data to query, about 100 database objects and 46K rows of data.

In the GitHub repository, I have included the two Pagila database SQL scripts required to install the sample database’s data structures (DDL), sql-scripts/pagila-schema.sql, and the data itself (DML), sql-scripts/pagila-insert-data.sql.

To execute the Pagila SQL scripts and install the sample data, I have included a Python script. If you do not want to use Python, you can skip to the Adminer section of this post. Adminer also has the capability to import SQL scripts.

Before running any of the included Python scripts, you will need to install the required Python packages and configure the database.ini file.

Python Packages

To install the required Python packages using the supplied python-scripts/requirements.txt file, run the below commands.

cd python-scripts pip3 install --upgrade -r requirements.txt

We are using two packages, psycopg2 and configparser, for the scripts. Psycopg is a PostgreSQL database adapter for Python. According to their website, Psycopg is the most popular PostgreSQL database adapter for the Python programming language. The configparser module allows us to read configuration from files similar to Microsoft Windows INI files. The unittest package is required for a set of unit tests includes the project, but not discussed as part of the demo.

Database Configuration

The python-scripts/database.ini file, read by configparser, provides the required connection information to our RDS master and read replica instance’s databases. Use the input parameters and output values from the CloudFormation RDS template, or the Amazon RDS Dashboard to obtain the required connection information, as shown in the example, below. Your host values will be unique for your master and read replica. The host values are the instance’s endpoint, listed in the RDS Dashboard’s Configuration tab.

[docker] host=localhost port=5432 database=pagila user=masteruser password=5up3r53cr3tPa55w0rd [master] host=demo-instance.dkfvbjrazxmd.us-east-1.rds.amazonaws.com port=5432 database=pagila user=masteruser password=5up3r53cr3tPa55w0rd [replica] host=demo-replica.dkfvbjrazxmd.us-east-1.rds.amazonaws.com port=5432 database=pagila user=masteruser password=5up3r53cr3tPa55w0rd

With the INI file configured, run the following command, which executes a supplied Python script, python-scripts/create_pagila_data.py, to create the data structure and insert sample data into the master RDS instance’s Pagila database. The database will be automatically replicated to the RDS read replica instance. From my local laptop, I found the Python script takes approximately 40 seconds to create all 100 database objects and insert 46K rows of movie rental data. That is compared to about 13 seconds locally, using a Docker-based instance of PostgreSQL.

python3 ./create_pagila_data.py --instance master

The Python script’s primary function, create_pagila_db(), reads and executes the two external SQL scripts.

def create_pagila_db():

"""

Creates Pagila database by running DDL and DML scripts

"""

try:

global conn

with conn:

with conn.cursor() as curs:

curs.execute(open("../sql-scripts/pagila-schema.sql", "r").read())

curs.execute(open("../sql-scripts/pagila-insert-data.sql", "r").read())

conn.commit()

print('Pagila SQL scripts executed')

except (psycopg2.OperationalError, psycopg2.DatabaseError, FileNotFoundError) as err:

print(create_pagila_db.__name__, err)

close_conn()

exit(1)

If the Python script executes correctly, you should see output indicating there are now 28 tables in our master RDS instance’s database.

pgAdmin

pgAdmin is a favorite tool for interacting with and managing PostgreSQL databases. According to its website, pgAdmin is the most popular and feature-rich Open Source administration and development platform for PostgreSQL.

The project includes an optional Docker Swarm stack.yml file. The stack will create a set of three Docker containers, including a local copy of PostgreSQL 11.4, Adminer, and pgAdmin 4. Having a local copy of PostgreSQL, using the official Docker image, is helpful for development and trouble-shooting RDS issues.

Use the following commands to deploy the Swarm stack.

# create stack docker swarm init docker stack deploy -c stack.yml postgres # get status of new containers docker stack ps postgres --no-trunc docker container ls

If you do not want to spin up the whole Docker Swarm stack, you could use the docker run command to create just a single pgAdmin Docker container. The pgAdmin 4 Docker image being used is the image recommended by pgAdmin.

docker pull dpage/pgadmin4 docker run -p 81:80 \ -e "PGADMIN_DEFAULT_EMAIL=user@domain.com" \ -e "PGADMIN_DEFAULT_PASSWORD=SuperSecret" \ -d dpage/pgadmin4 docker container ls | grep pgadmin4

Database Server Configuration

Once pgAdmin is up and running, we can configure the master and read replica database servers (RDS instances) using the connection string information from your database.ini file or from the Amazon RDS Dashboard. Below, I am configuring the master RDS instance (server).

With that task complete, below, we see the master RDS instance and the read replica, as well as my local Docker instance configured in pgAdmin (left side of screengrab). Note how the Pagila database has been replicated automatically, from the RDS master to the read replica instance.

Building SQL Queries

Switching to the Query tab, we can run regular SQL queries against any of the database instances. Below, I have run a simple SELECT query against the master RDS instance’s Pagila database, returning the complete list of movie titles, along with their genre and release date.

The pgAdmin Query tool even includes an Explain tab to view a graphical representation of the same query, very useful for optimization. Here we see the same query, showing an analysis of the execution order. A popup window displays information about the selected object.

Query the Read Replica

To demonstrate the use of the read replica, below I’ve run the same query against the RDS read replica’s copy of the Pagila database. Any schema and data changes against the master instance are replicated to the read replica(s).

Adminer

Adminer is another good general-purpose database management tool, similar to pgAdmin, but with a few different capabilities. According to its website, with Adminer, you get a tidy user interface, rich support for multiple databases, performance, and security, all from a single PHP file. Adminer is my preferred tool for database admin tasks. Amazingly, Adminer works with MySQL, MariaDB, PostgreSQL, SQLite, MS SQL, Oracle, SimpleDB, Elasticsearch, and MongoDB.

Below, we see the Pagila database’s tables and views displayed in Adminer, along with some useful statistical information about each database object.

Similar to pgAdmin, we can also run queries, along with other common development and management tasks, from within the Adminer interface.

Import Pagila with Adminer

Another great feature of Adminer is the ability to easily import and export data. As an alternative to Python, you could import the Pagila data using Adminer’s SQL file import function. Below, you see an example of importing the Pagila database objects into the Pagila database, using the file upload function.

IDE