
Continuous Integration and Deployment of Docker Images using GitHub Actions

Posted by Gary A. Stafford in Build Automation, Cloud, Continuous Delivery, DevOps, Go, Kubernetes, Software Development on June 16, 2021

According to GitHub, GitHub Actions allows you to automate, customize, and execute your software development workflows right in your repository. You can discover, create, and share actions to perform any job you would like, including continuous integration (CI) and continuous deployment (CD), and combine actions in a completely customized workflow.

This brief post will examine a simple use case for GitHub Actions — automatically building and pushing a new Docker image to Docker Hub. A GitHub Actions workflow will be triggered every time a new Git tag is pushed to the GitHub project repository.

GitHub Project Repository

For the demonstration, we will be using the public NLP Client microservice GitHub project repository. The NLP Client, written in Go, is part of five microservices that comprise the Natural Language Processing (NLP) API. I developed this API to demonstrate architectural principles and DevOps practices. The API’s microservices are designed to be run as a distributed system using container orchestration platforms such as Docker Swarm, Red Hat OpenShift, Amazon ECS, and Kubernetes.

Encrypted Secrets

To push new images to Docker Hub, the workflow must be logged in to your Docker Hub account. GitHub recommends storing your Docker Hub username and password as encrypted secrets, so they are not exposed in your workflow file. Encrypted secrets allow you to store sensitive information as encrypted environment variables in your organization, repository, or repository environment. The secrets that you create will be available to use in GitHub Actions workflows. To allow the workflow to log in to Docker Hub, I created two secrets, DOCKERHUB_USERNAME and DOCKERHUB_PASSWORD using my organization’s credentials, which I then reference in the workflow.

GitHub Actions Workflow

According to GitHub, a workflow is a configurable automated process made up of one or more jobs. You must create a YAML file to define your workflow configuration. GitHub contains many searchable code examples you can use to bootstrap your workflow development. For this demonstration, I started with the example shown in the GitHub Actions Guide, Publishing Docker images, and modified it to meet my needs. Workflow files are checked into the project’s repository within the .github/workflows directory.

Workflow Development

Visual Studio Code (VS Code) is an excellent, full-featured, and free IDE for software development and writing Infrastructure as Code (IaC). VS Code has a large ecosystem of extensions, including extensions for GitHub Actions. Currently, I am using the GitHub Actions extension, cschleiden.vscode-github-actions, by Christopher Schleiden.

The extension features auto-complete, as shown below in the GitHub Actions workflow YAML file.

Git Tags

The demonstration’s workflow is designed to be triggered when a new Git tag is pushed to the NLP Client project repository. Using the workflow, you can perform normal pushes (git push) to the repository without triggering the workflow. For example, you would not typically want to trigger a new image build and push when updating the project’s README file. Thus, we use the new Git tag as the workflow trigger.

For consistency, I also designed the workflow to be triggered only when the format of the Git tag follows the common Semantic Versioning (SemVer) convention of version number MAJOR.MINOR.PATCH (v*.*.*).

on:
  push:
    tags:
      - 'v*.*.*'

Also, following common Docker conventions in the workflow, the Git tag (e.g., v1.2.3) is truncated to remove the letter ‘v’ and used as the tag for the Docker image (e.g., 1.2.3). In the workflow, the GITHUB_REF:11 portion of the command skips the first 11 characters of the Git tag reference (refs/tags/v), truncating refs/tags/v1.2.3 to just 1.2.3.

run: echo "RELEASE_VERSION=${GITHUB_REF:11}" >> $GITHUB_ENV
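The offset arithmetic is easy to check outside the workflow. The following is a minimal Python sketch, not part of the project: the function name release_version and the strict MAJOR.MINOR.PATCH regular expression are assumptions made for illustration, and the workflow's 'v*.*.*' glob filter is looser than this check.

```python
import re

# "refs/tags/v" is 11 characters long, which is where the offset in
# ${GITHUB_REF:11} comes from.
TAG_PREFIX = "refs/tags/v"

# Assumption: a strict numeric MAJOR.MINOR.PATCH check. The workflow's
# 'v*.*.*' glob filter accepts more than this pattern does.
SEMVER_TAG = re.compile(r"^refs/tags/v\d+\.\d+\.\d+$")


def release_version(github_ref: str) -> str:
    """Return the Docker image tag for a reference such as refs/tags/v1.2.3."""
    if not SEMVER_TAG.match(github_ref):
        raise ValueError(f"not a vMAJOR.MINOR.PATCH tag reference: {github_ref}")
    # Same effect as the shell expansion ${GITHUB_REF:11}.
    return github_ref[len(TAG_PREFIX):]


print(release_version("refs/tags/v1.2.3"))  # 1.2.3
```

Deriving the offset from the prefix, rather than hard-coding 11, keeps the slice correct if the prefix ever changes.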

Workflow Run

Pushing the Git tag triggers the workflow to run automatically, as seen in the Actions tab.

Detailed logs show you how each step in the workflow was processed.

The example below shows that the workflow has successfully built and pushed a new Docker image to Docker Hub for the NLP Client microservice.

Failure Notifications

You can choose to receive a notification when a workflow fails. GitHub Actions notifications are a configurable option found in the GitHub account owner’s Settings tab.

Status Badge

You can display a status badge in your repository to indicate the status of your workflows. The badge can be added as Markdown to your README file.

Docker Hub

As a result of the successful completion of the workflow, we now have a new image tagged as 1.2.3 in the NLP Client Docker Hub repository: garystafford/nlp-client.

Conclusion

In this brief post, we saw a simple example of how GitHub Actions allows you to automate, customize, and execute your software development workflows right in your GitHub repository. We can easily extend this post’s GitHub Actions example to include updating the service’s Kubernetes Deployment resource file to the latest image tag in Docker Hub. Further, we can trigger a GitOps workflow with tools such as Weaveworks’ Flux or Argo CD to deploy the revised workload to a Kubernetes cluster.

This blog represents my own viewpoints and not those of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners.

Getting Started with Data Analytics using Jupyter Notebooks, PySpark, and Docker

Posted by Gary A. Stafford in Bash Scripting, Big Data, Build Automation, DevOps, Python, Software Development on December 6, 2019

There is little question that big data analytics, data science, artificial intelligence (AI), and machine learning (ML), a subcategory of AI, have all experienced a tremendous surge in popularity over the last few years. Behind the marketing hype, these technologies are having a significant influence on many aspects of our modern lives. Due to their popularity and potential benefits, commercial enterprises, academic institutions, and the public sector are rushing to develop hardware and software solutions that lower the barriers to entry and increase the velocity of data scientists and ML engineers.

(courtesy Google Trends and Plotly)

Many open-source software projects are also lowering the barriers to entry into these technologies. An excellent example of one such open-source project working on this challenge is Project Jupyter. Similar to Apache Zeppelin and Netflix’s newly open-sourced Polynote, Jupyter Notebooks enable data-driven, interactive, and collaborative data analytics.

This post will demonstrate the creation of a containerized data analytics environment using Jupyter Docker Stacks. The particular environment will be suited for learning and developing applications for Apache Spark using the Python, Scala, and R programming languages. We will focus on Python and Spark, using PySpark.

Featured Technologies

The following technologies are featured prominently in this post.

Jupyter Notebooks

According to Project Jupyter, the Jupyter Notebook, formerly known as the IPython Notebook, is an open-source web application that allows users to create and share documents that contain live code, equations, visualizations, and narrative text. Uses include data cleansing and transformation, numerical simulation, statistical modeling, data visualization, machine learning, and much more. The word, Jupyter, is a loose acronym for Julia, Python, and R, but today, Jupyter supports many programming languages.

Interest in Jupyter Notebooks has grown dramatically over the last 3–5 years, fueled in part by the major Cloud providers, AWS, Google Cloud, and Azure. Amazon Sagemaker, Amazon EMR (Elastic MapReduce), Google Cloud Dataproc, Google Colab (Collaboratory), and Microsoft Azure Notebooks all have direct integrations with Jupyter notebooks for big data analytics and machine learning.

(courtesy Google Trends and Plotly)

Jupyter Docker Stacks

To enable quick and easy access to Jupyter Notebooks, Project Jupyter has created Jupyter Docker Stacks. The stacks are ready-to-run Docker images containing Jupyter applications, along with accompanying technologies. Currently, the Jupyter Docker Stacks focus on a variety of specializations, including the r-notebook, scipy-notebook, tensorflow-notebook, datascience-notebook, pyspark-notebook, and the subject of this post, the all-spark-notebook. The stacks include a wide variety of well-known packages to extend their functionality, such as scikit-learn, pandas, Matplotlib, Bokeh, NumPy, and Facets.

Apache Spark

According to Apache, Spark is a unified analytics engine for large-scale data processing. Starting as a research project at the UC Berkeley AMPLab in 2009, Spark was open-sourced in early 2010 and moved to the Apache Software Foundation in 2013. Reviewing the postings on any major career site will confirm that Spark is widely used by well-known modern enterprises, such as Netflix, Adobe, Capital One, Lockheed Martin, JetBlue Airways, Visa, and Databricks. At the time of this post, LinkedIn, alone, had approximately 3,500 listings for jobs that reference the use of Apache Spark, just in the United States.

With speeds up to 100 times faster than Hadoop, Apache Spark achieves high performance for static, batch, and streaming data, using a state-of-the-art DAG (Directed Acyclic Graph) scheduler, a query optimizer, and a physical execution engine. Spark’s polyglot programming model allows users to write applications quickly in Scala, Java, Python, R, and SQL. Spark includes libraries for Spark SQL (DataFrames and Datasets), MLlib (Machine Learning), GraphX (Graph Processing), and DStreams (Spark Streaming). You can run Spark using its standalone cluster mode, Apache Hadoop YARN, Mesos, or Kubernetes.

PySpark

The Spark Python API, PySpark, exposes the Spark programming model to Python. PySpark is built on top of Spark’s Java API and uses Py4J. According to Apache, Py4J, a bridge between Python and Java, enables Python programs running in a Python interpreter to dynamically access Java objects in a Java Virtual Machine (JVM). Data is processed in Python and cached and shuffled in the JVM.

Docker

According to Docker, their technology gives developers and IT the freedom to build, manage, and secure business-critical applications without the fear of technology or infrastructure lock-in. For this post, I am using the current stable version of Docker Desktop Community version for macOS, as of March 2020.

Docker Swarm

Current versions of Docker include both a Kubernetes and Swarm orchestrator for deploying and managing containers. We will choose Swarm for this demonstration. According to Docker, the cluster management and orchestration features embedded in the Docker Engine are built using swarmkit. Swarmkit is a separate project that implements Docker’s orchestration layer and is used directly within Docker.

PostgreSQL

PostgreSQL is a powerful, open-source, object-relational database system. According to their website, PostgreSQL comes with many features aimed to help developers build applications, administrators to protect data integrity and build fault-tolerant environments, and help manage data no matter how big or small the dataset.

Demonstration

In this demonstration, we will explore the capabilities of the Spark Jupyter Docker Stack to provide an effective data analytics development environment. We will explore a few everyday uses, including executing Python scripts, submitting PySpark jobs, working with Jupyter Notebooks, and reading and writing data to and from different file formats and a database. We will be using the latest jupyter/all-spark-notebook Docker Image. This image includes Python, R, and Scala support for Apache Spark, using Apache Toree.

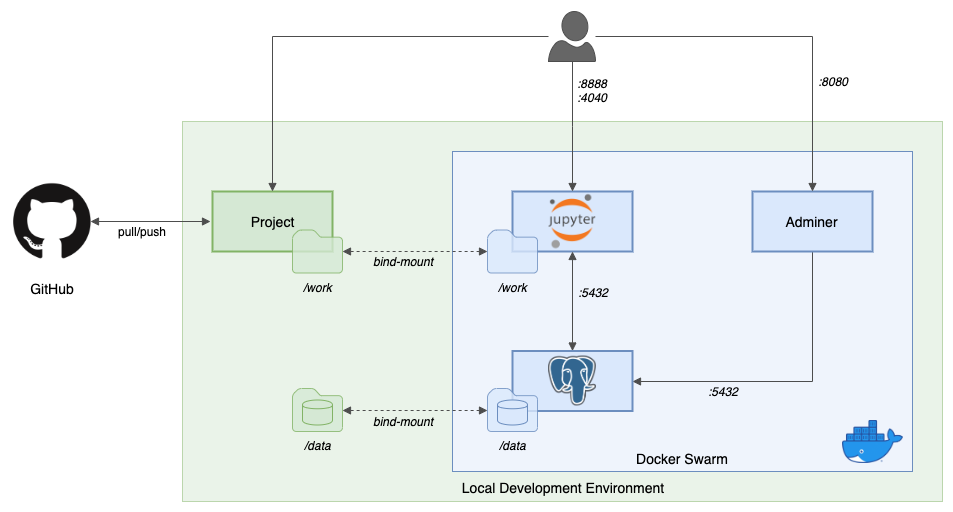

Architecture

As shown below, we will deploy a Docker stack to a single-node Docker swarm. The stack consists of three containers: a Jupyter All-Spark-Notebook, PostgreSQL (version 12 on Alpine Linux), and Adminer. The Docker stack will have two local directories bind-mounted into the containers. Files from our GitHub project will be shared with the Jupyter application container through a bind-mounted directory. Our PostgreSQL data will also be persisted through a bind-mounted directory. This allows us to persist data external to the ephemeral containers. If the containers are restarted or recreated, the data is preserved locally.

Source Code

All source code for this post can be found on GitHub. Use the following command to clone the project. Note this post uses the v2 branch.

git clone \
  --branch v2 --single-branch --depth 1 --no-tags \
  https://github.com/garystafford/pyspark-setup-demo.git

Source code samples are displayed as GitHub Gists, which may not display correctly on some mobile and social media browsers.

Deploy Docker Stack

To start, create the $HOME/data/postgres directory to store PostgreSQL data files.

mkdir -p ~/data/postgres

This directory will be bind-mounted into the PostgreSQL container on line 41 of the stack.yml file, $HOME/data/postgres:/var/lib/postgresql/data. The HOME environment variable assumes you are working on Linux or macOS and is equivalent to HOMEPATH on Windows.

The Jupyter container’s working directory is set on line 15 of the stack.yml file, working_dir: /home/$USER/work. The local bind-mounted working directory is $PWD/work. This path is bind-mounted to the working directory in the Jupyter container, on line 29 of the Docker stack file, $PWD/work:/home/$USER/work. The PWD environment variable assumes you are working on Linux or macOS (CD on Windows).

By default, the user within the Jupyter container is jovyan. We will override that user with our own local host’s user account, as shown on line 21 of the Docker stack file, NB_USER: $USER. We will use the host’s USER environment variable value (equivalent to USERNAME on Windows). There are additional options for configuring the Jupyter container. Several of those options are used on lines 17–22 of the Docker stack file (gist).


# docker stack deploy -c stack.yml jupyter
# optional pgadmin container

version: "3.7"
networks:
  demo-net:
services:
  spark:
    image: jupyter/all-spark-notebook:latest
    ports:
      - "8888:8888/tcp"
      - "4040:4040/tcp"
    networks:
      - demo-net
    working_dir: /home/$USER/work
    environment:
      CHOWN_HOME: "yes"
      GRANT_SUDO: "yes"
      NB_UID: 1000
      NB_GID: 100
      NB_USER: $USER
      NB_GROUP: staff
    user: root
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    volumes:
      - $PWD/work:/home/$USER/work
  postgres:
    image: postgres:12-alpine
    environment:
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: postgres1234
      POSTGRES_DB: bakery
    ports:
      - "5432:5432/tcp"
    networks:
      - demo-net
    volumes:
      - $HOME/data/postgres:/var/lib/postgresql/data
    deploy:
      restart_policy:
        condition: on-failure
  adminer:
    image: adminer:latest
    ports:
      - "8080:8080/tcp"
    networks:
      - demo-net
    deploy:
      restart_policy:
        condition: on-failure
  # pgadmin:
  #   image: dpage/pgadmin4:latest
  #   environment:
  #     PGADMIN_DEFAULT_EMAIL: user@domain.com
  #     PGADMIN_DEFAULT_PASSWORD: 5up3rS3cr3t!
  #   ports:
  #     - "8180:80/tcp"
  #   networks:
  #     - demo-net
  #   deploy:
  #     restart_policy:
  #       condition: on-failure

The jupyter/all-spark-notebook Docker image is large, approximately 5 GB. Depending on your Internet connection, if this is the first time you have pulled this image, the stack may take several minutes to enter a running state. Although not required, I usually pull new Docker images in advance.

docker pull jupyter/all-spark-notebook:latest
docker pull postgres:12-alpine
docker pull adminer:latest

Assuming you have a recent version of Docker installed on your local development machine and running in swarm mode, standing up the stack is as easy as running the following docker command from the root directory of the project.

docker stack deploy -c stack.yml jupyter

The Docker stack consists of a new overlay network, jupyter_demo-net, and three containers. To confirm the stack deployed successfully, run the following docker command.

docker stack ps jupyter --no-trunc

To access the Jupyter Notebook application, you need to obtain the Jupyter URL and access token. The Jupyter URL and the access token are output to the Jupyter container log, which can be accessed with the following command.

docker logs $(docker ps | grep jupyter_spark | awk '{print $NF}')

You should observe log output similar to the following. Retrieve the complete URL, for example, http://127.0.0.1:8888/?token=f78cbe..., to access the Jupyter web-based user interface.
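If you prefer to pull the URL out of the log text programmatically, a regular expression is enough. The sketch below is illustrative only: the log line in SAMPLE_LOG is hypothetical (real output varies by image version), the token is made up, and find_jupyter_url is not part of the project.

```python
import re
from typing import Optional

# Hypothetical log excerpt with a made-up token; real log lines will differ.
SAMPLE_LOG = """\
The Jupyter Notebook is running at:
 or http://127.0.0.1:8888/?token=f78cbe0123
"""

# Matches the localhost URL form quoted in the post, followed by a hex token.
URL_PATTERN = re.compile(r"http://127\.0\.0\.1:8888/\?token=[0-9a-f]+")


def find_jupyter_url(log_text: str) -> Optional[str]:
    """Return the first tokenized Jupyter URL found in the log text, if any."""
    match = URL_PATTERN.search(log_text)
    return match.group(0) if match else None


print(find_jupyter_url(SAMPLE_LOG))
```

In practice, the log text would come from the docker logs command shown earlier rather than from a string literal.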

From the Jupyter dashboard landing page, you should see all the files in the project’s work/ directory. Note the types of files you can create from the dashboard, including Python 3, R, and Scala (using Apache Toree or spylon-kernel) notebooks, and text. You can also open a Jupyter terminal or create a new Folder from the drop-down menu. At the time of this post (March 2020), the latest jupyter/all-spark-notebook Docker Image runs Spark 2.4.5, Scala 2.11.12, Python 3.7.6, and OpenJDK 64-Bit Server VM, Java 1.8.0 Update 242.

Bootstrap Environment

Included in the project is a bootstrap script, bootstrap_jupyter.sh. The script will install the required Python packages using pip, the Python package installer, and a requirements.txt file. The bootstrap script also installs the latest PostgreSQL driver JAR, configures Apache Log4j to reduce log verbosity when submitting Spark jobs, and installs htop. Although these tasks could also be done from a Jupyter terminal or from within a Jupyter notebook, using a bootstrap script ensures you will have a consistent work environment every time you spin up the Jupyter Docker stack. Add or remove items from the bootstrap script as necessary.


#!/bin/bash

set -ex

# update/upgrade and install htop
sudo apt-get update -y && sudo apt-get upgrade -y
sudo apt-get install htop

# install required python packages
python3 -m pip install --user --upgrade pip
python3 -m pip install -r requirements.txt --upgrade

# download latest postgres driver jar
POSTGRES_JAR="postgresql-42.2.17.jar"
if [ -f "$POSTGRES_JAR" ]; then
    echo "$POSTGRES_JAR exist"
else
    wget -nv "https://jdbc.postgresql.org/download/${POSTGRES_JAR}"
fi

# spark-submit logging level from INFO to WARN
sudo cp log4j.properties /usr/local/spark/conf/log4j.properties

That’s it, our new Jupyter environment is ready to start exploring.

Running Python Scripts

One of the simplest tasks we could perform in our new Jupyter environment is running Python scripts. Instead of worrying about installing and maintaining the correct versions of Python and multiple Python packages on your own development machine, we can run Python scripts from within the Jupyter container. At the time of this post update, the latest jupyter/all-spark-notebook Docker image runs Python 3.7.3 and Conda 4.7.12. Let’s start with a simple Python script, 01_simple_script.py.


#!/usr/bin/python3

import random

technologies = [
    'PySpark', 'Python', 'Spark', 'Scala', 'Java', 'Project Jupyter', 'R'
]

print("Technologies: %s\n" % technologies)

technologies.sort()
print("Sorted: %s\n" % technologies)

print("I'm interested in learning about %s." % random.choice(technologies))

From a Jupyter terminal window, use the following command to run the script.

python3 01_simple_script.py

You should observe the following output.

Kaggle Datasets

To explore more features of Jupyter and PySpark, we will use a publicly available dataset from Kaggle. Kaggle is an excellent open-source resource for datasets used for big-data and ML projects. Their tagline is ‘Kaggle is the place to do data science projects’. For this demonstration, we will use the ‘Transactions from a bakery’ dataset from Kaggle. The dataset is available as a single CSV-format file. A copy is also included in the project.

The ‘Transactions from a bakery’ dataset contains 21,294 rows with 4 columns of data. Although certainly not big data, the dataset is large enough to test out the Spark Jupyter Docker Stack functionality. The data consists of 9,531 customer transactions for 21,294 bakery items between 2016-10-30 and 2017-04-09 (gist).


| Date | Time | Transaction | Item |
|---|---|---|---|
| 2016-10-30 | 09:58:11 | 1 | Bread |
| 2016-10-30 | 10:05:34 | 2 | Scandinavian |
| 2016-10-30 | 10:05:34 | 2 | Scandinavian |
| 2016-10-30 | 10:07:57 | 3 | Hot chocolate |
| 2016-10-30 | 10:07:57 | 3 | Jam |
| 2016-10-30 | 10:07:57 | 3 | Cookies |
| 2016-10-30 | 10:08:41 | 4 | Muffin |
| 2016-10-30 | 10:13:03 | 5 | Coffee |
| 2016-10-30 | 10:13:03 | 5 | Pastry |
| 2016-10-30 | 10:13:03 | 5 | Bread |
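Each row in the file is one item sold, and several rows can share a transaction number. As a small illustration of that distinction, the following standard-library Python sketch counts both for the ten sample rows above. The inline SAMPLE_CSV string stands in for the real file, and the variable names are chosen for this example only.

```python
import csv
import io

# The ten sample rows shown above, inlined so the sketch is self-contained.
SAMPLE_CSV = """\
Date,Time,Transaction,Item
2016-10-30,09:58:11,1,Bread
2016-10-30,10:05:34,2,Scandinavian
2016-10-30,10:05:34,2,Scandinavian
2016-10-30,10:07:57,3,Hot chocolate
2016-10-30,10:07:57,3,Jam
2016-10-30,10:07:57,3,Cookies
2016-10-30,10:08:41,4,Muffin
2016-10-30,10:13:03,5,Coffee
2016-10-30,10:13:03,5,Pastry
2016-10-30,10:13:03,5,Bread
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))

# One row per item sold; a transaction groups one or more items.
item_count = len(rows)
transaction_count = len({row["Transaction"] for row in rows})

print(f"{item_count} items in {transaction_count} transactions")
```

For the sample, this reports 10 items in 5 transactions. Pointing csv.DictReader at the full CSV file would give the corresponding counts for the whole dataset.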

Submitting Spark Jobs

We are not limited to Jupyter notebooks to interact with Spark. We can also submit scripts directly to Spark from the Jupyter terminal. This is typically how Spark is used in production for performing analysis on large datasets, often on a regular schedule, using tools such as Apache Airflow. With Spark, you load data from one or more data sources. After performing operations and transformations on the data, the data is persisted to a datastore, such as a file or a database, or conveyed to another system for further processing.

The project includes a simple Python PySpark ETL script, 02_pyspark_job.py. The ETL script loads the original Kaggle Bakery dataset from the CSV file into memory, into a Spark DataFrame. The script then performs a simple Spark SQL query, calculating the total quantity of each type of bakery item sold, sorted in descending order. Finally, the script writes the results of the query to a new CSV file, output/items-sold.csv.


#!/usr/bin/python3

from pyspark.sql import SparkSession

spark = SparkSession \
    .builder \
    .appName('spark-demo') \
    .getOrCreate()

df_bakery = spark.read \
    .format('csv') \
    .option('header', 'true') \
    .option('delimiter', ',') \
    .option('inferSchema', 'true') \
    .load('BreadBasket_DMS.csv')

df_sorted = df_bakery.cube('item').count() \
    .filter('item NOT LIKE \'NONE\'') \
    .filter('item NOT LIKE \'Adjustment\'') \
    .orderBy(['count', 'item'], ascending=[False, True])

df_sorted.show(10, False)

df_sorted.coalesce(1) \
    .write.format('csv') \
    .option('header', 'true') \
    .save('output/items-sold.csv', mode='overwrite')

Run the script directly from a Jupyter terminal using the following command.

python3 02_pyspark_job.py

An example of the output of the Spark job is shown below.

Typically, you would submit the Spark job using the spark-submit command. Use a Jupyter terminal to run the following command.

$SPARK_HOME/bin/spark-submit 02_pyspark_job.py

Below, we see the output from the spark-submit command. Printing the results in the output is merely for the purposes of the demo. Typically, Spark jobs are submitted non-interactively, and the results are persisted directly to a datastore or conveyed to another system.

Using the following commands, we can view the resulting CSV file, created by the Spark job.

ls -alh output/items-sold.csv/
head -5 output/items-sold.csv/*.csv

An example of the files created by the Spark job is shown below. We should have discovered that coffee is the most commonly sold bakery item with 5,471 sales, followed by bread with 3,325 sales.
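To make the aggregation in 02_pyspark_job.py easier to reason about, here is a rough plain-Python equivalent of its count, filter, and sort steps. This is only a sketch using collections.Counter on a handful of sample item names, not how Spark executes the query. The names SAMPLE_ITEMS, EXCLUDED, and items_sold are introduced here for illustration.

```python
from collections import Counter

# Item values from the first ten rows of the dataset, used as a tiny sample.
SAMPLE_ITEMS = [
    "Bread", "Scandinavian", "Scandinavian", "Hot chocolate", "Jam",
    "Cookies", "Muffin", "Coffee", "Pastry", "Bread",
]

# The PySpark job filters out these two placeholder item values.
EXCLUDED = {"NONE", "Adjustment"}


def items_sold(items):
    """Count items, drop excluded values, sort by count (desc) then item (asc)."""
    counts = Counter(item for item in items if item not in EXCLUDED)
    return sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))


for item, count in items_sold(SAMPLE_ITEMS):
    print(f"{item}: {count}")
```

The sort key mirrors the job's orderBy call: descending on the count, with the item name as an ascending tie-breaker.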

Interacting with Databases

To demonstrate the flexibility of Jupyter to work with databases, PostgreSQL is part of the Docker Stack. We can read and write data from the Jupyter container to the PostgreSQL instance, running in a separate container. To begin, we will run a SQL script, written in Python, to create our database schema and some test data in a new database table. To do so, we will use psycopg2, the PostgreSQL database adapter package for Python, which we previously installed into our Jupyter container using the bootstrap script. The Python script below, 03_load_sql.py, will execute a set of SQL statements contained in a SQL file, bakery.sql, against the PostgreSQL container instance.


#!/usr/bin/python3

import psycopg2

# connect to database
connect_str = 'host=postgres port=5432 dbname=bakery user=postgres password=postgres1234'
conn = psycopg2.connect(connect_str)
conn.autocommit = True
cursor = conn.cursor()

# execute sql script
sql_file = open('bakery.sql', 'r')
sqlFile = sql_file.read()
sql_file.close()
sqlCommands = sqlFile.split(';')
for command in sqlCommands:
    print(command)
    if command.strip() != '':
        cursor.execute(command)

# import data from csv file
with open('BreadBasket_DMS.csv', 'r') as f:
    next(f)  # Skip the header row.
    cursor.copy_from(
        f,
        'transactions',
        sep=',',
        columns=('date', 'time', 'transaction', 'item')
    )
    conn.commit()

# confirm by selecting record
command = 'SELECT COUNT(*) FROM public.transactions;'
cursor.execute(command)
recs = cursor.fetchall()
print('Row count: %d' % recs[0])

The SQL file, bakery.sql.


DROP TABLE IF EXISTS "transactions";
DROP SEQUENCE IF EXISTS transactions_id_seq;
CREATE SEQUENCE transactions_id_seq INCREMENT 1 MINVALUE 1 MAXVALUE 2147483647 START 1 CACHE 1;

CREATE TABLE "public"."transactions"
(
    "id" integer DEFAULT nextval('transactions_id_seq') NOT NULL,
    "date" character varying(10) NOT NULL,
    "time" character varying(8) NOT NULL,
    "transaction" integer NOT NULL,
    "item" character varying(50) NOT NULL
) WITH (oids = false);

To execute the script, run the following command.

python3 03_load_sql.py

This should result in the following output, if successful.

Adminer

To confirm the SQL script’s success, use Adminer. Adminer (formerly phpMinAdmin) is a full-featured database management tool written in PHP. Adminer natively recognizes PostgreSQL, MySQL, SQLite, and MongoDB, among other database engines. The current version is 4.7.6 (March 2020).

Adminer should be available on localhost port 8080. The password credentials, shown below, are located in the stack.yml file. The server name, postgres, is the name of the PostgreSQL Docker container. This is the domain name the Jupyter container will use to communicate with the PostgreSQL container.

Connecting to the new bakery database with Adminer, we should see the transactions table.

The table should contain 21,293 rows, each with 5 columns of data.
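The load-and-verify pattern used by 03_load_sql.py (split a SQL script on semicolons, run each statement, bulk-load the CSV rows, then count them) can be tried without a database server. The sketch below swaps in Python's built-in sqlite3 module for PostgreSQL and psycopg2, so the schema is a simplified SQLite version rather than the project's bakery.sql. The SQL_SCRIPT, SAMPLE_CSV, and load_transactions names are assumptions for this example.

```python
import csv
import io
import sqlite3

# Simplified SQLite schema standing in for bakery.sql (no sequences in SQLite).
SQL_SCRIPT = """
DROP TABLE IF EXISTS transactions;
CREATE TABLE transactions (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    date TEXT NOT NULL,
    time TEXT NOT NULL,
    "transaction" INTEGER NOT NULL,
    item TEXT NOT NULL
);
"""

# Three sample rows standing in for BreadBasket_DMS.csv.
SAMPLE_CSV = """\
Date,Time,Transaction,Item
2016-10-30,09:58:11,1,Bread
2016-10-30,10:05:34,2,Scandinavian
2016-10-30,10:05:34,2,Scandinavian
"""


def load_transactions(conn: sqlite3.Connection) -> int:
    """Create the table, load the CSV rows, and return the resulting row count."""
    cursor = conn.cursor()
    # Run each non-empty statement from the script, as the original loop does.
    for statement in SQL_SCRIPT.split(";"):
        if statement.strip():
            cursor.execute(statement)
    reader = csv.reader(io.StringIO(SAMPLE_CSV))
    next(reader)  # Skip the header row.
    cursor.executemany(
        'INSERT INTO transactions (date, time, "transaction", item) '
        "VALUES (?, ?, ?, ?)",
        reader,
    )
    conn.commit()
    cursor.execute("SELECT COUNT(*) FROM transactions")
    return cursor.fetchone()[0]


conn = sqlite3.connect(":memory:")
row_count = load_transactions(conn)
conn.close()
print("Row count: %d" % row_count)
```

Because the header row is skipped before loading, the table ends up with one row fewer than the number of lines in the CSV input.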

pgAdmin

Another excellent choice for interacting with PostgreSQL, in particular, is pgAdmin 4. It is my favorite tool for the administration of PostgreSQL. Although limited to PostgreSQL, the user interface and administrative capabilities of pgAdmin are superior to Adminer, in my opinion. For brevity, I chose not to include pgAdmin in this post. The Docker stack also contains a pgAdmin container, which has been commented out. To use pgAdmin, just uncomment the container and re-run the Docker stack deploy command. pgAdmin should then be available on localhost port 8180, the host port mapped in the commented-out service definition. The pgAdmin login credentials are in the Docker stack file.

Developing Jupyter Notebooks

The real power of the Jupyter Docker Stacks containers is Jupyter Notebooks. According to the Jupyter Project, the notebook extends the console-based approach to interactive computing in a qualitatively new direction, providing a web-based application suitable for capturing the whole computation process, including developing, documenting, and executing code, as well as communicating the results. Notebook documents contain the inputs and outputs of an interactive session as well as additional text that accompanies the code but is not meant for execution.

To explore the capabilities of Jupyter notebooks, the project includes two simple Jupyter notebooks. The first notebook, 04_notebook.ipynb, demonstrates typical PySpark functions, such as loading data from a CSV file and from the PostgreSQL database, performing basic data analysis with Spark SQL including the use of PySpark user-defined functions (UDF), graphing the data using BokehJS, and finally, saving data back to the database, as well as to the fast and efficient Apache Parquet file format. Below we see several notebook cells demonstrating these features.

IDE Integration

Recall, the working directory, containing the GitHub source code for the project, is bind-mounted to the Jupyter container. Therefore, you can also edit any of the files, including notebooks, in your favorite IDE, such as JetBrains PyCharm and Microsoft Visual Studio Code. PyCharm has built-in language support for Jupyter Notebooks, as shown below.

As does Visual Studio Code using the Python extension.

Using Additional Packages

As mentioned in the Introduction, the Jupyter Docker Stacks come ready-to-run, with a wide variety of Python packages to extend their functionality. To demonstrate the use of these packages, the project contains a second Jupyter notebook document, 05_notebook.ipynb. This notebook uses SciPy, the well-known Python package for mathematics, science, and engineering, NumPy, the well-known Python package for scientific computing, and the Plotly Python Graphing Library. While NumPy and SciPy are included on the Jupyter Docker Image, the bootstrap script uses pip to install the required Plotly packages. Similar to Bokeh, shown in the previous notebook, we can use these libraries to create richly interactive data visualizations.

Plotly

To use Plotly from within the notebook, you will first need to sign up for a free account and obtain a username and API key. To ensure we do not accidentally save sensitive Plotly credentials in the notebook, we are using python-dotenv. This Python package reads key/value pairs from a .env file, making them available as environment variables to our notebook. Modify and run the following two commands from a Jupyter terminal to create the .env file and set your Plotly username and API key credentials. Note that the .env file is listed in the .gitignore file, so it will not be committed back to git, which could compromise the credentials.

echo "PLOTLY_USERNAME=your-username" >> .env
echo "PLOTLY_API_KEY=your-api-key" >> .env

The notebook expects to find the two environment variables, which it uses to authenticate with Plotly.
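To make the mechanism concrete, the sketch below mimics, in plain Python, what loading a .env file amounts to: reading KEY=value lines and exposing them as environment variables. It is a simplified stand-in and not python-dotenv's implementation. The function name load_env_file is hypothetical, and the values are the same placeholders used in the commands above. In the notebook itself, you would rely on the python-dotenv package instead.

```python
import os
import tempfile


def load_env_file(path):
    """Read KEY=value pairs from a file and export them as environment variables.

    Hypothetical, simplified stand-in for what python-dotenv does. It skips
    blank lines and comments and does not handle quoting or interpolation.
    """
    loaded = {}
    with open(path) as env_file:
        for line in env_file:
            line = line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            loaded[key.strip()] = value.strip()
    os.environ.update(loaded)
    return loaded


# Demonstration with a throwaway .env file holding placeholder credentials.
with tempfile.TemporaryDirectory() as tmp_dir:
    env_path = os.path.join(tmp_dir, ".env")
    with open(env_path, "w") as env_file:
        env_file.write("PLOTLY_USERNAME=your-username\n")
        env_file.write("PLOTLY_API_KEY=your-api-key\n")
    credentials = load_env_file(env_path)

print(os.environ["PLOTLY_USERNAME"])
```

Because the credentials live only in the environment, nothing sensitive needs to appear in a notebook cell or its saved output.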

Shown below, we use Plotly to construct a bar chart of daily bakery items sold. The chart uses SciPy and NumPy to construct a linear fit (regression) and plot a line of best fit for the bakery data, overlaying the vertical bars. The chart also uses SciPy’s Savitzky-Golay Filter to plot the second line, illustrating a smoothing of our bakery data.
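For readers curious about what the line of best fit involves, here is a minimal, dependency-free sketch of an ordinary least-squares fit. The notebook uses SciPy and NumPy for this; the function name linear_fit and the daily totals below are made up for illustration and are not taken from the bakery dataset. The sketch does not cover the Savitzky-Golay smoothing.

```python
def linear_fit(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of the x/y cross-deviations
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical daily item counts, indexed by day number
days = [0, 1, 2, 3, 4, 5]
items_sold = [10, 12, 14, 16, 18, 20]

slope, intercept = linear_fit(days, items_sold)
# One fitted value per day; these are the points of the line drawn over the bars
best_fit = [slope * day + intercept for day in days]
print(slope, intercept)
```

The fitted values in best_fit are what would be plotted as the line overlaying the bars.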

Plotly also provides Chart Studio Online Chart Maker. Plotly describes Chart Studio as the world’s most modern enterprise data visualization solutions. We can enhance, stylize, and share our data visualizations using the free version of Chart Studio Cloud.

Jupyter Notebook Viewer

Notebooks can also be viewed using nbviewer, an open-source project under Project Jupyter. Thanks to Rackspace hosting, the nbviewer instance is a free service.

Using nbviewer, below, we see the output of a cell within the 04_notebook.ipynb notebook. View this notebook, here, using nbviewer.

Monitoring Spark Jobs

The Jupyter Docker container exposes Spark’s monitoring and instrumentation web user interface. We can review each completed Spark Job in detail.

We can review details of each stage of the Spark job, including a visualization of the DAG (Directed Acyclic Graph), which Spark constructs as part of the job execution plan, using the DAG Scheduler.

We can also review the task composition and timing of each event occurring as part of the stages of the Spark job.

We can also use the Spark interface to review and confirm the runtime environment configuration, including versions of Java, Scala, and Spark, as well as packages available on the Java classpath.

Local Spark Performance

Running Spark on a single node within the Jupyter Docker container on your local development system is not a substitute for a true Spark cluster: a Production-grade, multi-node cluster running on bare metal or robust virtualized hardware and managed with Apache Hadoop YARN, Apache Mesos, or Kubernetes. In my opinion, you should only adjust your Docker resource limits to support an acceptable level of Spark performance for running small exploratory workloads. You will not realistically replace the need to process big data and execute jobs requiring complex calculations on a Production-grade, multi-node Spark cluster.

We can use the following docker stats command to examine the container’s CPU and memory metrics.

docker stats --format "table {{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}\t{{.MemPerc}}"

Below, we see the stats from the Docker stack’s three containers showing little or no activity.

Compare those stats with the ones shown below, recorded while a notebook was reading and writing data, and executing Spark SQL queries. The CPU and memory output show spikes, but both appear to be within acceptable ranges.
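If you would rather compare these numbers programmatically than by eye, the table can be parsed with a few lines of Python. The sketch below is only an illustration: the sample text, container names, and values are made up, and the assumption that columns are separated by two or more spaces reflects how the table format is typically padded. Check it against your own docker stats output before relying on it.

```python
import re

# Made-up sample of the table produced by the docker stats command above
SAMPLE_OUTPUT = """\
NAME                  CPU %     MEM USAGE / LIMIT     MEM %
pyspark_pyspark.1     95.20%    1.2GiB / 2.9GiB       41.38%
pyspark_postgres.1    0.10%     20MiB / 2.9GiB        0.67%
pyspark_adminer.1     0.00%     8MiB / 2.9GiB         0.27%
"""


def parse_stats_table(text):
    """Turn the padded stats table into a list of dictionaries, one per container."""
    lines = [line for line in text.splitlines() if line.strip()]
    rows = []
    for line in lines[1:]:  # skip the header row
        # Assumes columns are separated by runs of at least two spaces
        name, cpu, mem_usage, mem_percent = re.split(r"\s{2,}", line.strip())
        rows.append({
            "name": name,
            "cpu_percent": float(cpu.rstrip("%")),
            "mem_usage": mem_usage,
            "mem_percent": float(mem_percent.rstrip("%")),
        })
    return rows


stats = parse_stats_table(SAMPLE_OUTPUT)
busiest = max(stats, key=lambda row: row["cpu_percent"])
print(busiest["name"])
```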

Linux Process Monitors

Another option to examine container performance metrics is top, which is pre-installed in our Jupyter container. For example, execute the following top command from a Jupyter terminal, sorting processes by CPU usage.

top -o %CPU

We should observe the individual performance of each process running in the Jupyter container.

A step up from top is htop, an interactive process viewer for Unix. It was installed in the container by the bootstrap script. For example, we can execute the htop command from a Jupyter terminal, sorting processes by CPU % usage.

htop --sort-key PERCENT_CPU

With htop, observe the individual CPU activity. Here, the four CPUs at the top left of the htop window are the CPUs assigned to Docker. We get insight into the way Spark is using multiple CPUs, as well as other performance metrics, such as memory and swap.

Assuming your development machine is robust, it is easy to allocate and deallocate additional compute resources to Docker if required. Be careful not to allocate excessive resources to Docker, starving your host machine of available compute resources for other applications.

Notebook Extensions

There are many ways to extend the Jupyter Docker Stacks. A popular option is jupyter-contrib-nbextensions. According to their website, the jupyter-contrib-nbextensions package contains a collection of community-contributed unofficial extensions that add functionality to the Jupyter notebook. These extensions are mostly written in JavaScript and will be loaded locally in your browser. Installed notebook extensions can be enabled, either by using built-in Jupyter commands, or more conveniently by using the jupyter_nbextensions_configurator server extension.

The project contains an alternate Docker stack file, stack-nbext.yml. The stack uses an alternative Docker image, garystafford/all-spark-notebook-nbext:latest. The Dockerfile that builds it, shown below, uses the jupyter/all-spark-notebook:latest image as a base image. The alternate image adds in the jupyter-contrib-nbextensions and jupyter_nbextensions_configurator packages. Since Jupyter would need to be restarted after nbextensions is deployed, it cannot be done from within a running jupyter/all-spark-notebook container.

FROM jupyter/all-spark-notebook:latest
USER root
RUN pip install jupyter_contrib_nbextensions \
    && jupyter contrib nbextension install --system \
    && pip install jupyter_nbextensions_configurator \
    && jupyter nbextensions_configurator enable --system \
    && pip install yapf # for code pretty
USER $NB_UID

Using this alternate stack, below in our Jupyter container, we see the sizable list of extensions available. Some of my favorite extensions include ‘spellchecker’, ‘Codefolding’, ‘Code pretty’, ‘ExecutionTime’, ‘Table of Contents’, and ‘Toggle all line numbers’.

Below, we see five new extension icons that have been added to the menu bar of 04_notebook.ipynb. You can also observe the extensions have been applied to the notebook, including the table of contents, code-folding, execution time, and line numbering. The spellchecking and pretty code extensions were both also applied.

Conclusion

In this brief post, we have seen how easy it is to get started learning and performing data analytics using Jupyter notebooks, Python, Spark, and PySpark, all thanks to the Jupyter Docker Stacks. We could use this same stack to learn and perform machine learning using Scala and R. Extending the stack’s capabilities is as simple as swapping out this Jupyter image for another, with a different set of tools, as well as adding additional containers to the stack, such as MySQL, MongoDB, RabbitMQ, Apache Kafka, and Apache Cassandra.

All opinions expressed in this post are my own and not necessarily the views of my current or past employers or their clients.

Getting Started with PySpark for Big Data Analytics using Jupyter Notebooks and Jupyter Docker Stacks

Posted by Gary A. Stafford in Big Data, Python, Software Development on November 19, 2018

There is little question that big data analytics, data science, artificial intelligence (AI), and machine learning (ML), a subcategory of AI, have all experienced a tremendous surge in popularity over the last few years. Behind the hype curves and marketing buzz, these technologies are having a significant influence on all aspects of our modern lives. Due to their popularity and potential benefits, academic institutions and commercial enterprises are rushing to train large numbers of Data Scientists and ML and AI Engineers.

Learning popular programming languages and frameworks, such as Python, Scala, R, Apache Hadoop, Apache Spark, and Apache Kafka, requires the use of multiple complex technologies. Installing, configuring, and managing these technologies often demands an advanced level of familiarity with Linux, distributed systems, cloud- and container-based platforms, databases, and data-streaming applications. These barriers may prove a deterrent to Students, Mathematicians, Statisticians, and Data Scientists.

Driven by the explosive growth of these technologies and the need to train individuals, many commercial enterprises are lowering the barriers to entry, making it easier to get started. The three major cloud providers, AWS, Azure, and Google Cloud, all have multiple Big Data-, AI- and ML-as-a-Service offerings.

Similarly, many open-source projects are also lowering the barriers to entry into these technologies. An excellent example of an open-source project working on this challenge is Project Jupyter. Similar to the Spark Notebook and Apache Zeppelin projects, Jupyter Notebooks enables data-driven, interactive, and collaborative data analytics with Julia, Scala, Python, R, and SQL.

This post will demonstrate the creation of a containerized development environment, using Jupyter Docker Stacks. The environment will be suited for learning and developing applications for Apache Spark, using the Python, Scala, and R programming languages. This post is not intended to be a tutorial on Spark, PySpark, or Jupyter Notebooks.

Featured Technologies

The following technologies are featured prominently in this post.

Jupyter Notebooks

According to Project Jupyter, the Jupyter Notebook, formerly known as the IPython Notebook, is an open-source web application that allows users to create and share documents that contain live code, equations, visualizations, and narrative text. Uses include data cleaning and transformation, numerical simulation, statistical modeling, data visualization, machine learning, and much more. The word Jupyter is a loose acronym for Julia, Python, and R, but today, Jupyter supports many programming languages. Interest in Jupyter Notebooks has grown dramatically.

Jupyter Docker Stacks

To enable quick and easy access to Jupyter Notebooks, Project Jupyter has created Jupyter Docker Stacks. The stacks are ready-to-run Docker images containing Jupyter applications, along with accompanying technologies. Currently, eight different Jupyter Docker Stacks focus on a particular area of practice. They include SciPy (Python-based mathematics, science, and engineering), TensorFlow, R Project for statistical computing, Data Science with Julia, and the main subject of this post, PySpark. The stacks also include a rich variety of well-known packages to extend their functionality, such as scikit-learn, pandas, Matplotlib, Bokeh, ipywidgets (interactive HTML widgets), and Facets.

Apache Spark

According to Apache, Spark is a unified analytics engine for large-scale data processing, used by well-known, modern enterprises, such as Netflix, Yahoo, and eBay. With speeds up to 100x faster than Hadoop, Apache Spark achieves high performance for static, batch, and streaming data, using a state-of-the-art DAG (Directed Acyclic Graph) scheduler, a query optimizer, and a physical execution engine.

Spark’s polyglot programming model allows users to write applications quickly in Scala, Java, Python, R, and SQL. Spark includes libraries for Spark SQL (DataFrames and Datasets), MLlib (Machine Learning), GraphX (Graph Processing), and DStreams (Spark Streaming). You can run Spark using its standalone cluster mode, on Amazon EC2, Apache Hadoop YARN, Mesos, or Kubernetes.

PySpark

The Spark Python API, PySpark, exposes the Spark programming model to Python. PySpark is built on top of Spark’s Java API. Data is processed in Python and cached and shuffled in the JVM. According to Apache, Py4J enables Python programs running in a Python interpreter to dynamically access Java objects in a JVM.

Docker

According to Docker, their technology gives developers and IT the freedom to build, manage, and secure business-critical applications without the fear of technology or infrastructure lock-in. Although Kubernetes is now the leading open-source container orchestration platform, Docker is still the predominant underlying container engine technology. For this post, I am using Docker Desktop Community version for macOS.

Docker Swarm

Current versions of Docker include both a Kubernetes and Swarm orchestrator for deploying and managing containers. We will choose Swarm for this demonstration. According to Docker, the cluster management and orchestration features embedded in the Docker Engine are built using swarmkit. Swarmkit is a separate project which implements Docker’s orchestration layer and is used directly within Docker.

PostgreSQL

PostgreSQL is a powerful, open-source object-relational database system. According to their website, PostgreSQL comes with many features aimed to help developers build applications, administrators to protect data integrity and build fault-tolerant environments, and help manage data no matter how big or small the dataset.

Demonstration

To show the capabilities of the Jupyter development environment, I will demonstrate a few typical use cases, such as executing Python scripts, submitting PySpark jobs, working with Jupyter Notebooks, and reading and writing data to and from different format files and to a database. We will be using the jupyter/all-spark-notebook:latest Docker Image. This image includes Python, R, and Scala support for Apache Spark, using Apache Toree.

Architecture

As shown below, we will stand up a Docker stack consisting of Jupyter All-Spark-Notebook, PostgreSQL 11.3, and Adminer containers. The Docker stack will have local directories bind-mounted into the containers. Files from our GitHub project will be shared with the Jupyter application container through a bind-mounted directory. Our PostgreSQL data will also be persisted through a bind-mounted directory. This allows us to persist data external to the ephemeral containers.

Source Code

All open-sourced code for this post can be found on GitHub. Use the following command to clone the project. The post and project code was last updated on 9/28/2019.

git clone \
  --branch master --single-branch --depth 1 --no-tags \
  https://github.com/garystafford/pyspark-setup-demo.git

Source code samples are displayed as GitHub Gists, which may not display correctly on some mobile and social media browsers.

Deploy Docker Stack

To start, create the $HOME/data/postgres directory to store PostgreSQL data files. This directory will be bind-mounted into the PostgreSQL container on line 36 of the stack.yml file, $HOME/data/postgres:/var/lib/postgresql/data. The HOME environment variable assumes you are working on Linux or macOS and is equivalent to HOMEPATH on Windows.
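If you prefer to create the directory from Python rather than from the shell, a small sketch along the following lines would work on Linux, macOS, and Windows alike. The function name ensure_postgres_data_dir is hypothetical. The demonstration uses a temporary directory in place of your real home directory so that it leaves nothing behind; in practice you would pass Path.home().

```python
import tempfile
from pathlib import Path


def ensure_postgres_data_dir(home):
    """Create <home>/data/postgres, including any missing parents, and return its path."""
    data_dir = Path(home) / "data" / "postgres"
    # exist_ok=True makes the call safe to repeat on later deployments
    data_dir.mkdir(parents=True, exist_ok=True)
    return data_dir


# In practice: ensure_postgres_data_dir(Path.home())
with tempfile.TemporaryDirectory() as fake_home:
    created = ensure_postgres_data_dir(fake_home)
    created_exists = created.is_dir()
    relative_parts = created.relative_to(fake_home).parts

print(relative_parts)
```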

The Jupyter container’s working directory is set on line 10 of the stack.yml file, working_dir: /home/$USER/work. The local bind-mounted working directory is $PWD/work. This path is bind-mounted to the working directory in the Jupyter container, on line 24 of the stack.yml file, $PWD/work:/home/$USER/work. The PWD environment variable assumes you are working on Linux or macOS (CD on Windows).

By default, the user within the Jupyter container is jovyan. Optionally, I have chosen to override that user with my own local host’s user account, as shown on line 16 of the stack.yml file, NB_USER: $USER. I have used the macOS host’s USER environment variable value (equivalent to USERNAME on Windows). There are many options for configuring the Jupyter container, detailed here. Several of those options are shown on lines 12-18 of the stack.yml file (gist).

version: "3.7"
services:
  pyspark:
    image: jupyter/all-spark-notebook:latest
    ports:
      - "8888:8888/tcp"
      - "4040:4040/tcp"
    networks:
      - pyspark-net
    working_dir: /home/$USER/work
    environment:
      CHOWN_HOME: "yes"
      GRANT_SUDO: "yes"
      NB_UID: 1000
      NB_GID: 100
      NB_USER: $USER
      NB_GROUP: staff
    user: root
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
    volumes:
      - $PWD/work:/home/$USER/work
  postgres:
    image: postgres:11.3
    environment:
      POSTGRES_USERNAME: postgres
      POSTGRES_PASSWORD: postgres1234
      POSTGRES_DB: demo
    ports:
      - "5432:5432/tcp"
    networks:
      - pyspark-net
    volumes:
      - $HOME/data/postgres:/var/lib/postgresql/data
    deploy:
      restart_policy:
        condition: on-failure
  adminer:
    image: adminer:latest
    ports:
      - "8080:8080/tcp"
    networks:
      - pyspark-net
    deploy:
      restart_policy:
        condition: on-failure
networks:
  pyspark-net:

Assuming you have a recent version of Docker installed on your local development machine, and running in swarm mode, standing up the stack is as easy as running the following command from the root directory of the project:

docker stack deploy -c stack.yml pyspark

The Docker stack consists of a new overlay network, pyspark-net, and three containers. To confirm the stack deployed, you can run the following command:

docker stack ps pyspark --no-trunc

Note the jupyter/all-spark-notebook container is quite large. Depending on your Internet connection, if this is the first time you have pulled this Docker image, the stack may take several minutes to enter a running state.

To access the Jupyter Notebook application, you need to obtain the Jupyter URL and access token (read more here). This information is output in the Jupyter container log, which can be accessed with the following command:

docker logs $(docker ps | grep pyspark_pyspark | awk '{print $NF}')

Using the URL and token shown in the log output, you will be able to access the Jupyter web-based user interface on localhost port 8888. Once there, from the Jupyter dashboard landing page, you should see all the files in the project’s work/ directory.
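Picking the tokenized URL out of a long log by eye can be tedious. As a convenience, the following sketch extracts it with a regular expression. The sample log lines and the token value are made up, and the pattern assumes the URL appears in the form http://host:port/?token=hexadecimal-string, so adjust it if your log output differs. The function name find_token_urls is hypothetical.

```python
import re

# Assumes the log prints URLs of the form http://host:port/?token=<hex string>
TOKEN_URL_PATTERN = re.compile(r"https?://[^\s?]+\?token=([0-9a-f]+)")


def find_token_urls(log_text):
    """Return (url, token) pairs for every tokenized Jupyter URL found in the log text."""
    return [(match.group(0), match.group(1))
            for match in TOKEN_URL_PATTERN.finditer(log_text)]


# Made-up log excerpt with a placeholder token
SAMPLE_LOG = """\
[I 12:00:00.000 NotebookApp] The Jupyter Notebook is running at:
[I 12:00:00.000 NotebookApp] http://127.0.0.1:8888/?token=0123456789abcdef
"""

token_urls = find_token_urls(SAMPLE_LOG)
for url, token in token_urls:
    print(url)
```

You could feed the function the text captured from the docker logs command above instead of the sample string.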

Also shown below, note the types of files you are able to create from the dashboard, including Python 3, R, Scala (using Apache Toree or spylon-kernel), and text. You can also open a Jupyter Terminal or create a new Folder.

Running Python Scripts

Instead of worrying about installing and maintaining the latest version of Python and packages on your own development machine, we can run our Python scripts from the Jupyter container. At the time of this post update, the latest jupyter/all-spark-notebook Docker Image runs Python 3.7.3 and Conda 4.6.14. Let’s start with a simple example of the Jupyter container’s capabilities by running a Python script. I have included a sample Python script, 01_simple_script.py.

#!/usr/bin/python

import random

technologies = ['PySpark', 'Python', 'Spark', 'Scala', 'JVM',
                'Project Jupyter', 'PostgreSQL']
print("Technologies: %s" % technologies)

technologies.sort()
print("Sorted: %s" % technologies)

print("I'm interested in learning %s." % random.choice(technologies))

Run the script from within the Jupyter container, from a Jupyter Terminal window:

python ./01_simple_script.py

You should observe the following output.

Kaggle Datasets

To explore the features of the Jupyter Notebook container and PySpark, we will use a publicly available dataset from Kaggle. Kaggle is a fantastic open-source resource for datasets used for big-data and ML applications. Their tagline is ‘Kaggle is the place to do data science projects’.

For this demonstration, I chose the ‘Transactions from a Bakery’ dataset from Kaggle.

The dataset contains 21,294 rows, each with four columns of data. Although certainly nowhere near ‘big data’, the dataset is large enough to test out the Jupyter container functionality (gist).

| Date | Time | Transaction | Item |
|---|---|---|---|
| 2016-10-30 | 09:58:11 | 1 | Bread |
| 2016-10-30 | 10:05:34 | 2 | Scandinavian |
| 2016-10-30 | 10:05:34 | 2 | Scandinavian |
| 2016-10-30 | 10:07:57 | 3 | Hot chocolate |
| 2016-10-30 | 10:07:57 | 3 | Jam |
| 2016-10-30 | 10:07:57 | 3 | Cookies |
| 2016-10-30 | 10:08:41 | 4 | Muffin |
| 2016-10-30 | 10:13:03 | 5 | Coffee |
| 2016-10-30 | 10:13:03 | 5 | Pastry |
| 2016-10-30 | 10:13:03 | 5 | Bread |
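Before involving Spark, it can help to get a feel for the shape of the data with nothing but the Python standard library. The sketch below loads the ten sample rows shown above from an inline string and counts items and distinct transactions. It assumes the file is comma-separated with the header row shown; with the real file, you would open the CSV from the work/ directory instead of using the inline sample.

```python
import csv
import io
from collections import Counter

# The ten sample rows shown above, assumed to be comma-separated with a header row
SAMPLE_CSV = """\
Date,Time,Transaction,Item
2016-10-30,09:58:11,1,Bread
2016-10-30,10:05:34,2,Scandinavian
2016-10-30,10:05:34,2,Scandinavian
2016-10-30,10:07:57,3,Hot chocolate
2016-10-30,10:07:57,3,Jam
2016-10-30,10:07:57,3,Cookies
2016-10-30,10:08:41,4,Muffin
2016-10-30,10:13:03,5,Coffee
2016-10-30,10:13:03,5,Pastry
2016-10-30,10:13:03,5,Bread
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
for row in rows:
    # csv returns strings; convert the transaction number to an integer
    row["Transaction"] = int(row["Transaction"])

item_counts = Counter(row["Item"] for row in rows)
transaction_count = len({row["Transaction"] for row in rows})

print(len(rows), transaction_count)
print(item_counts.most_common(2))
```

Each row is one item sold, so several rows can share the same transaction number.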

Submitting Spark Jobs

We are not limited to Jupyter Notebooks to interact with Spark; we can also submit scripts directly to Spark from a Jupyter Terminal, or from our IDE. I have included a simple Python script, 02_bakery_dataframes.py. The script loads the Kaggle Bakery dataset from the CSV file into a Spark DataFrame. The script then prints out the top ten rows of data, along with a count of the total number of rows in the DataFrame.

#!/usr/bin/python

from pyspark.sql import SparkSession
from pyspark.sql.types import StructType, StructField, StringType, IntegerType

spark = SparkSession \
    .builder \
    .getOrCreate()

sc = spark.sparkContext

# Explicit schema for the four columns of the bakery CSV file
bakery_schema = StructType([
    StructField('date', StringType(), True),
    StructField('time', StringType(), True),
    StructField('transaction', IntegerType(), True),
    StructField('item', StringType(), True)
])

df3 = spark.read \
    .format('csv') \
    .option('header', 'true') \
    .load('BreadBasket_DMS.csv', schema=bakery_schema)

df3.show(10)

# Print the row count; a bare count() call displays nothing when run as a script
print(df3.count())

Run the script directly from a Jupyter Terminal window:

python ./02_bakery_dataframes.py

An example of the output of the Spark job is shown below. At the time of this post update (6/7/2019), the latest jupyter/all-spark-notebook Docker Image runs Spark 2.4.3, Scala 2.11.12, and Java 1.8.0_191 using the OpenJDK.

More typically, you would submit the Spark job, using the spark-submit command. Use a Jupyter Terminal window to run the following command:

$SPARK_HOME/bin/spark-submit 02_bakery_dataframes.py

Below, we see the beginning of the output from Spark, using the spark-submit command.

Below, we see the scheduled tasks executing and the output of the print statement, displaying the top 10 rows of bakery data.

Interacting with Databases

Often with Spark, you are loading data from one or more data sources (input). After performing operations and transformations on the data, the data is persisted or conveyed to another system for further processing (output).

To demonstrate the flexibility of the Jupyter Docker Stacks to work with databases, I have added PostgreSQL to the Docker Stack. We can read and write data from the Jupyter container to the PostgreSQL instance, running in a separate container.

To begin, we will run a Python script that executes a set of SQL statements to create our database schema and some test data in a new database table. To do so, we will need to install the psycopg2 package into our Jupyter container. You can use the docker exec command from your terminal. Alternatively, because your user has superuser access within the Jupyter container, you can install Python packages from a Jupyter Terminal window. Both pip and conda are available to install packages; see details here.

Run the following command to install psycopg2:

# using pip
docker exec -it \
  $(docker ps | grep pyspark_pyspark | awk '{print $NF}') \
  pip install psycopg2-binary

This package gives Python the ability to interact with PostgreSQL. The included Python script, 03_load_sql.py, will execute a set of SQL statements, contained in a SQL file, bakery_sample.sql, against the PostgreSQL container instance.

#!/usr/bin/python

import psycopg2

# source: https://stackoverflow.com/questions/45805871/python3-psycopg2-execute-sql-file
connect_str = 'host=postgres port=5432 dbname=demo user=postgres password=postgres1234'
conn = psycopg2.connect(connect_str)
conn.autocommit = True
cursor = conn.cursor()

sql_file = open('bakery_sample.sql', 'r')
sqlFile = sql_file.read()
sql_file.close()

sqlCommands = sqlFile.split(';')
for command in sqlCommands:
    print(command)
    if command.strip() != '':
        cursor.execute(command)

To execute the script, run the following command:

python ./03_load_sql.py

This should result in the following output, if successful.

To confirm the SQL script’s success, I have included Adminer. Adminer (formerly phpMinAdmin) is a full-featured database management tool written in PHP. Adminer natively recognizes PostgreSQL, MySQL, SQLite, and MongoDB, among other database engines.

Adminer should be available on localhost port 8080. The password credentials, shown below, are available in the stack.yml file. The server name, postgres, is the name of the PostgreSQL container. This is the domain name the Jupyter container will use to communicate with the PostgreSQL container.

Connecting to the demo database with Adminer, we should see the bakery_basket table. The table should contain three rows of data, as shown below.
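The statement-splitting approach used by 03_load_sql.py can also be tried without a database server. The sketch below applies the same split-on-semicolon loop to an in-memory SQLite database, using Python's built-in sqlite3 module in place of psycopg2 and PostgreSQL. The table definition and rows are made up for illustration and are not the contents of bakery_sample.sql.

```python
import sqlite3

# Made-up stand-in for the contents of a SQL file; not the project's bakery_sample.sql
SQL_SCRIPT = """
CREATE TABLE bakery_basket (date TEXT, time TEXT, transaction_id INTEGER, item TEXT);
INSERT INTO bakery_basket VALUES ('2016-10-30', '09:58:11', 1, 'Bread');
INSERT INTO bakery_basket VALUES ('2016-10-30', '10:05:34', 2, 'Scandinavian');
INSERT INTO bakery_basket VALUES ('2016-10-30', '10:07:57', 3, 'Hot chocolate');
"""

# SQLite in memory is swapped in here for the PostgreSQL container
conn = sqlite3.connect(":memory:")
cursor = conn.cursor()

# Same idea as the script above: split on ';' and skip empty fragments
for command in SQL_SCRIPT.split(";"):
    if command.strip():
        cursor.execute(command)
conn.commit()

row_count = cursor.execute("SELECT COUNT(*) FROM bakery_basket").fetchone()[0]
conn.close()
print(row_count)
```

Keep in mind that splitting on semicolons is a simple heuristic; it would break a statement that contains a semicolon inside a string literal.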

Developing Jupyter Notebooks

The true power of the Jupyter Docker Stacks containers is Jupyter Notebooks. According to the Jupyter Project, the notebook extends the console-based approach to interactive computing in a qualitatively new direction, providing a web-based application suitable for capturing the whole computation process: developing, documenting, and executing code, as well as communicating the results. Notebook documents contain the inputs and outputs of an interactive session as well as additional text that accompanies the code but is not meant for execution.

To see the power of Jupyter Notebooks, I have written a basic notebook document, 04_pyspark_demo_notebook.ipynb. The document performs some typical PySpark functions, such as loading data from a CSV file and from the PostgreSQL database, performing some basic data analytics with Spark SQL, graphing the data using BokehJS, and finally, saving data back to the database, as well as to the popular Apache Parquet file format. Below we see the notebook document, using the Jupyter Notebook user interface.

PostgreSQL Driver

The only notebook document dependency, not natively part of the Jupyter Image, is the PostgreSQL JDBC driver. The driver, postgresql-42.2.8.jar, is included in the project and referenced in the configuration of the notebook’s Spark Session. The JAR is added to the spark.driver.extraClassPath runtime environment property. This ensures the JAR is available to Spark (written in Scala) when the job is run.

PyCharm

Since the working directory for the project is shared with the container, you can also edit files, including notebook documents, in your favorite IDE, such as JetBrains PyCharm. PyCharm has built-in language support for Jupyter Notebooks, as shown below.

As mentioned earlier, a key feature of Jupyter Notebooks is their ability to save the output from each Cell as part of the notebook document. Below, we see the notebook document on GitHub. The output is saved, as part of the notebook document. Not only can you distribute the notebook document, but you can also preserve and share the output from each cell.

Using Additional Packages

As mentioned in the Introduction, the Jupyter Docker Stacks come ready-to-run, with a rich variety of Python packages to extend their functionality. To demonstrate the use of these packages, I have created a second Jupyter notebook document, 05_pyspark_demo_notebook.ipynb. This notebook document uses SciPy (Python-based mathematics, science, and engineering), NumPy (Python-based scientific computing), and the Plotly Python Graphing Library. While NumPy and SciPy are included on the Jupyter Docker Image, the Notebook used pip to install Plotly. Similar to Bokeh, shown previously, we can combine these libraries to create richly interactive data visualizations. To use Plotly, you will need to sign up for a free account and obtain a username and API key.

Shown below, we use Plotly to construct a bar chart of daily bakery items sold for the year 2017 based on the Kaggle dataset. The chart uses SciPy and NumPy to construct a linear fit (regression) and plot a line of best fit for the bakery data. The chart also uses SciPy’s Savitzky-Golay Filter to plot the second line, illustrating a smoothing of our bakery data.

Plotly also provides Chart Studio Online Chart Maker. Plotly describes Chart Studio as the world’s most sophisticated editor for creating d3.js and WebGL charts. Shown below, we have the ability to enhance, stylize, and share our bakery data visualization using the free version of Chart Studio Cloud.

nbviewer

Notebooks can also be viewed using Jupyter nbviewer, hosted on Rackspace. Below, we see the output of a cell from this project’s notebook document, showing a BokehJS chart, using nbviewer. You can view this project’s actual notebook document, using nbviewer, here.

Monitoring Spark Jobs

The Jupyter Docker container exposes Spark’s monitoring and instrumentation web user interface. We can observe each Spark Job in great detail.

We can review details of each stage of the Spark job, including a visualization of the DAG, which Spark constructs as part of the job execution plan, using the DAG Scheduler.

We can also review the timing of each event, occurring as part of the stages of the Spark job.

We can also use the Spark interface to review and confirm the runtime environment, including versions of Java, Scala, and Spark, as well as packages available on the Java classpath.

Spark Performance

Spark, running on a single node within the Jupyter container, on your development system, is not a substitute for a full Spark cluster, running on bare metal or robust virtualized hardware, with YARN, Mesos, or Kubernetes. In my opinion, you should adjust Docker to support an acceptable performance profile for the stack, running only a modest workload. You are not trying to replace the need to run real jobs on a Production Spark cluster.

We can use the docker stats command to examine the container’s CPU and memory metrics:

docker stats --format "table {{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}\t{{.MemPerc}}"

Below, we see the stats from the stack’s three containers immediately after being deployed, showing little or no activity. Here, Docker has been allocated 2 CPUs, 3 GB of RAM, and 2 GB of swap space from the host machine.

Compare the stats above with the same three containers, while the example notebook document is running on Spark. The CPU shows a spike, but memory usage appears to be within acceptable ranges.
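If you would rather compare the before and after numbers programmatically than by eye, one option is to ask Docker for JSON output and parse it. The sketch below assumes the command is run as docker stats --no-stream --format "{{json .}}", which emits one JSON object per container. The sample lines, the container names, and the parse_stats helper are hypothetical, and the key names should be checked against the output of your own Docker version.

```python
import json
from typing import Dict, List


def parse_stats(output: str) -> List[Dict[str, object]]:
    """Parse one JSON object per line into simplified per-container records."""
    rows = []
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        rows.append({
            "name": record["Name"],
            "cpu_pct": float(record["CPUPerc"].rstrip("%")),
            "mem_usage": record["MemUsage"],
            "mem_pct": float(record["MemPerc"].rstrip("%")),
        })
    return rows


# Hypothetical sample output; the names and values are illustrative only.
sample = "\n".join([
    '{"Name": "pyspark_pyspark.1.example", "CPUPerc": "98.50%", '
    '"MemUsage": "1.2GiB / 2.9GiB", "MemPerc": "41.38%"}',
    '{"Name": "pyspark_other.1.example", "CPUPerc": "0.10%", '
    '"MemUsage": "20MiB / 2.9GiB", "MemPerc": "0.67%"}',
])

rows = parse_stats(sample)
busiest = max(rows, key=lambda row: row["cpu_pct"])
```

In real use, the sample string would be replaced by the captured output of the docker stats command, for example via subprocess.run.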

Linux top and htop

Another option to examine container performance metrics is with top. We can use the docker exec command to execute the top command within the Jupyter container, sorting processes by CPU usage:

docker exec -it \
  $(docker ps | grep pyspark_pyspark | awk '{print $NF}') \
  top -o %CPU

With top, we can observe the individual performance of each process running in the Jupyter container.

Lastly, htop, an interactive process viewer for Unix, can be installed into the container and run with the following set of bash commands, from a Jupyter Terminal window or using docker exec:

docker exec -it \
  $(docker ps | grep pyspark_pyspark | awk '{print $NF}') \
  sh -c "apt-get update && apt-get install htop && htop --sort-key PERCENT_CPU"

With htop, we can observe individual CPU activity. The two CPUs at the top left of the htop window are the two CPUs assigned to Docker. We get insight into the way Docker is using each CPU, as well as other basic performance metrics, like memory and swap.

Assuming your development machine host has them available, it is easy to allocate more compute resources to Docker if required. However, in my opinion, this stack is optimized for development and learning, using reasonably sized datasets for data analysis and ML. It should not be necessary to allocate excessive resources to Docker, possibly starving your host machine’s own compute capabilities.

Conclusion

In this brief post, we have seen how easy it is to get started learning and developing applications for big data analytics, using Python, Spark, and PySpark, thanks to the Jupyter Docker Stacks. We could use the same stack to learn and develop for machine learning, using Python, Scala, and R. Extending the stack’s capabilities is as simple as swapping out this Jupyter image for another, with a different set of tools, as well as adding additional containers to the stack, such as Apache Kafka or Apache Cassandra.

Search results courtesy GoogleTrends (https://trends.google.com)

All opinions expressed in this post are my own and not necessarily the views of my current or past employers, or their clients.

Integrating Search Capabilities with Actions for Google Assistant, using GKE and Elasticsearch: Part 2

Posted by Gary A. Stafford in Cloud, GCP, Java Development, JavaScript, Serverless, Software Development on September 24, 2018

Voice and text-based conversational interfaces, such as chatbots, have recently seen tremendous growth in popularity. Much of this growth can be attributed to leading Cloud providers, such as Google, Amazon, and Microsoft, who now provide affordable, end-to-end development, machine learning-based training, and hosting platforms for conversational interfaces.

Cloud-based machine learning services greatly improve a conversational interface’s ability to interpret user intent with greater accuracy. However, returning relevant responses to user inquiries also requires that interfaces have access to rich informational datastores, and the ability to quickly and efficiently query and analyze that data.

In this two-part post, we will enhance the capabilities of a voice and text-based conversational interface by integrating it with a search and analytics engine. By interfacing an Action for Google Assistant conversational interface with Elasticsearch, we will improve the Action’s ability to provide relevant results to the end-user. Instead of querying a traditional database for static responses to user intent, our Action will access a Near Real-time (NRT) Elasticsearch index of searchable documents. The Action will leverage Elasticsearch’s advanced search and analytics capabilities to optimize and shape user responses, based on their intent.

Action Preview

Here is a brief YouTube video preview of the final Action for Google Assistant, integrated with Elasticsearch, running on an Apple iPhone.

Architecture

If you recall from part one of this post, the high-level architecture of our search engine-enhanced Action for Google Assistant resembles the following. Most of the components are running on Google Cloud.

Source Code

All open-sourced code for this post can be found on GitHub in two repositories, one for the Spring Boot Service and one for the Action for Google Assistant. Code samples in this post are displayed as GitHub Gists, which may not display correctly on some mobile and social media browsers. Links to gists are also provided.

Development Process

In part two of this post, we will tie everything together by creating and integrating our Action for Google Assistant:

- Create the new Actions for Google Assistant project using the Actions on Google console;

- Develop the Action’s Intents and Entities using the Dialogflow console;

- Develop and test the Cloud Function, and deploy it to GCP.

Let’s explore each step in more detail.

New ‘Actions on Google’ Project

With Elasticsearch running and the Spring Boot Service deployed to our GKE cluster, we can start building our Actions for Google Assistant. Using the Actions on Google web console, we first create a new Actions project.

The Directory Information tab is where we define metadata about the project. This information determines how it will look in the Actions directory and is required to publish your project. The Actions directory is where users discover published Actions on the web and mobile devices.

The Directory Information tab also includes sample invocations, which may be used to invoke our Actions.

Actions and Intents

Our project will contain a series of related Actions. According to Google, an Action is ‘an interaction you build for the Assistant that supports a specific intent and has a corresponding fulfillment that processes the intent.’ To build our Actions, we first want to create our Intents. To do so, we will want to switch from the Actions on Google console to the Dialogflow console. Actions on Google provides a link for switching to Dialogflow in the Actions tab.

We will build our Action’s Intents in Dialogflow. The term Intent, used by Dialogflow, is standard terminology across other voice-assistant platforms, such as Amazon’s Alexa and Microsoft’s Azure Bot Service and LUIS. In Dialogflow, we will be building several Intents, including the Find Multiple Posts Intent, the Find Post Intent, and the Find By ID Intent.

Below, we see the Find Post Intent. The Find Post Intent is responsible for handling our user’s requests for a single post about a topic, for example, ‘Find a post about Docker.’ The Intent shown below contains a fair number, but indeed not an exhaustive list, of training phrases. These represent possible ways a user might express intent when invoking the Action.

Below, we see the Find Multiple Posts Intent. The Find Multiple Posts Intent is responsible for handling our user’s requests for a list of posts about a topic, for example, ‘I’m interested in Docker.’ Similar to the Find Post Intent above, the Find Multiple Posts Intent contains a list of training phrases.

Dialog Model Training

According to Google, the greater the number of natural language examples in the Training Phrases section of Intents, the better the classification accuracy. Every time a user interacts with our Action, the user’s utterances are logged. Using the Training tab in the Dialogflow console, we can train our model by reviewing and approving or correcting how the Action handled the user’s utterances.

Below, we see the user’s utterances, part of an interaction with the Action. We have the option to review and approve the Intent that was called to handle the utterance, re-assign it, or delete it. This helps improve the accuracy of our dialog model.

Dialogflow Entities

Each of the highlighted words in the training phrases maps to the facts parameter, which maps to a collection of @topic Entities. Entities represent the list of values, here topics, that the Action is trained to recognize in a user’s request. According to Google, there are three types of entities: ‘system’ (defined by Dialogflow), ‘developer’ (defined by a developer), and ‘user’ (built for each individual end-user in every request). We will be creating ‘developer’ type entities for our Action’s Intents.

Automated Expansion

We do not have to define all possible topics a user might search for, as an entity. By enabling the Allow Automated Expansion option, an Agent will recognize values that have not been explicitly listed in the entity list. Google describes Agents as NLU (Natural Language Understanding) modules.

Entity Synonyms

An entity may contain synonyms. Multiple synonyms are mapped to a single reference value. The reference value is the value passed to the Cloud Function by the Action. For example, take the reference value of ‘GCP’. The user might ask Google about ‘GCP’. However, the user might also substitute the phrases ‘Google Cloud’ or ‘Google Cloud Platform’. Using synonyms, if the user utters any of these three synonymous words or phrases in their intent, the reference value, ‘GCP’, is passed in the request.

But, what if the post contains the phrase, ‘Google Cloud Platform’ more frequently than, or instead of, ‘GCP’? If the acronym, ‘GCP’, is defined as the entity reference value, then it is the value passed to the function, even if you ask for ‘Google Cloud Platform’. In the use case of searching blog posts by topic, entity synonyms are not an effective search strategy.
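To make the limitation concrete, the following minimal sketch models an entity as a plain lookup table. The TOPIC_SYNONYMS table, the resolve_topic helper, and the sample post text are hypothetical stand-ins for illustration; they are not Dialogflow’s API or data from the blog.

```python
from typing import Dict, Optional

# Hypothetical entity: every synonym maps to one reference value.
TOPIC_SYNONYMS: Dict[str, str] = {
    "gcp": "GCP",
    "google cloud": "GCP",
    "google cloud platform": "GCP",
}


def resolve_topic(spoken_value: str) -> Optional[str]:
    """Return the reference value for a spoken topic, or None if unknown."""
    return TOPIC_SYNONYMS.get(spoken_value.strip().lower())


# The user says the long form, but only the reference value is passed on.
reference = resolve_topic("Google Cloud Platform")

# A naive text search for the reference value misses a post that only
# spells the name out in full.
post_text = "Deploying to Google Cloud Platform with Kubernetes"
found = reference is not None and reference.lower() in post_text.lower()
```

Here the search term has been narrowed to the acronym before the search ever runs, so the post that matches the user’s actual words is not found.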

Elasticsearch Synonyms

A better way to solve for synonyms is by using the synonyms feature of Elasticsearch. Take, for example, the topic of ‘Istio’. Istio is also considered a Service Mesh. If I ask for posts about ‘Service Mesh’, I would like to get back posts that contain the phrase ‘Service Mesh’, but also the word ‘Istio’. To accomplish this, you would define an association between ‘Istio’ and ‘Service Mesh’, as part of the Elasticsearch WordPress posts index.

Searches for ‘Istio’ against that index would return results that contain ‘Istio’ and/or contain ‘Service Mesh’; the reverse is also true. Having created and applied a custom synonyms filter to the index, we see how Elasticsearch responds to an analysis of the natural language style phrase, ‘What is a Service Mesh?’. As shown by the tokens output in Kibana’s Dev Tools Console, Elasticsearch understands that ‘service mesh’ is synonymous with ‘istio’.

If we query the same five fields as our Action, for the topic of ‘service mesh’, we get four hits for posts (indexed documents) that contain ‘service mesh’ and/or ‘istio’.
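A synonym association of this kind is typically declared in the index settings as a token filter and then referenced from an analyzer. The sketch below builds such a settings body and a multi-field query as Python dictionaries. The filter name, analyzer name, and field names are hypothetical, since the index definition and the five fields are not listed here, and a multi_match query is only one plausible way to search several fields at once.

```python
import json
from typing import Any, Dict, Iterable

# Hypothetical index settings with a custom synonym token filter.
index_settings: Dict[str, Any] = {
    "settings": {
        "analysis": {
            "filter": {
                "topic_synonyms": {
                    "type": "synonym",
                    # Comma-separated terms are treated as equivalent.
                    "synonyms": ["istio, service mesh"],
                }
            },
            "analyzer": {
                "post_analyzer": {
                    "tokenizer": "standard",
                    "filter": ["lowercase", "topic_synonyms"],
                }
            },
        }
    }
}


def build_topic_query(topic: str, fields: Iterable[str]) -> Dict[str, Any]:
    """Build a multi_match query body for the given topic and fields."""
    return {"query": {"multi_match": {"query": topic, "fields": list(fields)}}}


# Field names below are placeholders, not the Action's actual five fields.
query = build_topic_query("service mesh", ["title", "content"])
settings_json = json.dumps(index_settings, indent=2)
```

For the synonyms to take effect, the analyzer also has to be assigned to the relevant text fields in the index mappings, or named explicitly at search time.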

Actions on Google Integration

Another configuration item in Dialogflow that needs to be completed is Dialogflow’s Actions on Google integration. This will integrate our Action with Google Assistant. Google currently provides more than fifteen different integrations, including Google Assistant, Slack, Facebook Messenger, Twitter, and Twilio, as shown below.

To configure the Google Assistant integration, we choose the Welcome Intent as our Action’s Explicit Invocation intent. Then we designate our other Intents as Implicit Invocation intents. According to Google, this Google Assistant Integration allows our Action to reach users on every device where the Google Assistant is available.

Action Fulfillment

When a user’s intent is received, it is fulfilled by the Action. In the Dialogflow Fulfillment console, we see the Action has two fulfillment options: a Webhook or a Cloud Function edited inline. A Webhook allows us to pass information from a matched intent into a web service and get a result back from the service. Our Action’s Webhook will call our Cloud Function on GCP, using the Cloud Function’s URL endpoint (we’ll get this URL in the next section).
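To illustrate the webhook contract, here is a minimal sketch of a handler that reads the matched intent and its parameters from a Dialogflow-style request and returns a text response. It is written in Python for brevity and is not the Node.js Cloud Function used in this post. The request and response field names (queryResult, intent.displayName, parameters, fulfillmentText) follow the Dialogflow v2 webhook format and should be verified against the current Dialogflow documentation; the handle_webhook function and the reply text are hypothetical.

```python
from typing import Any, Dict


def handle_webhook(request_body: Dict[str, Any]) -> Dict[str, str]:
    """Map a matched intent and its topic parameter to a text response."""
    query_result = request_body.get("queryResult", {})
    intent_name = query_result.get("intent", {}).get("displayName", "")
    # 'facts' is the parameter that the training phrases map to.
    topic = query_result.get("parameters", {}).get("facts", "")

    if intent_name == "Find Post Intent" and topic:
        text = f"Searching for a post about {topic}."
    else:
        text = "Sorry, I did not understand that request."
    return {"fulfillmentText": text}


# Hypothetical request body, trimmed to the fields the handler reads.
sample_request = {
    "queryResult": {
        "intent": {"displayName": "Find Post Intent"},
        "parameters": {"facts": "Docker"},
    }
}

response = handle_webhook(sample_request)
```

A real fulfillment would replace the canned reply with a call to the search service and shape the result into a spoken or visual response.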

Google Cloud Functions

Our Cloud Function, called by our Action, is written in Node.js. Our function, index.js, is divided into four sections, which are: constants and environment variables, intent handlers, helper functions, and the function’s entry point. The helper functions are part of the Helper module, contained in the helper.js file.

Constants and Environment Variables