Posts Tagged SQL

Executing Amazon Athena Queries from JetBrains PyCharm

Posted by Gary A. Stafford in AWS, Big Data, Cloud, Python, Software Development, SQL on January 8, 2020

Amazon Athena

According to Amazon, Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. Amazon Athena supports and works with a variety of popular data file formats, including CSV, JSON, Apache ORC, Apache Avro, and Apache Parquet.

The underlying technology behind Amazon Athena is Presto, the popular, open-source distributed SQL query engine for big data, created by Facebook. According to AWS, the Athena query engine is based on Presto 0.172. Athena is ideal for quick, ad-hoc querying, but it can also handle complex analysis, including large joins, window functions, and arrays. In addition to Presto, Athena also uses Apache Hive to define tables.

Athena Query Editor

In the previous post, Getting Started with Data Analysis on AWS using AWS Glue, Amazon Athena, and QuickSight, we used the Athena Query Editor to construct and test SQL queries against semi-structured data in an S3-based Data Lake. The Athena Query Editor has many of the basic features Data Engineers and Analysts expect, including SQL syntax highlighting, code auto-completion, and query formatting. Queries can be run directly from the Editor, saved for future reference, and query results downloaded. The Editor can convert SELECT queries to CREATE TABLE AS (CTAS) and CREATE VIEW AS statements. Access to AWS Glue data sources is also available from within the Editor.

Full-Featured IDE

Although the Athena Query Editor is fairly functional, many Engineers perform a majority of their software development work in a fuller-featured IDE. The choice of IDE may depend on one’s predominant programming language. According to the PYPL Index, the ten most popular, current IDEs are:

- Microsoft Visual Studio

- Android Studio

- Eclipse

- Visual Studio Code

- Apache NetBeans

- JetBrains PyCharm

- JetBrains IntelliJ

- Apple Xcode

- Sublime Text

- Atom

Within the domains of data science, big data analytics, and data analysis, languages such as SQL, Python, Java, Scala, and R are common. Although I work in a variety of IDEs, my go-to choices are JetBrains PyCharm for Python (including for PySpark and Jupyter Notebook development) and JetBrains IntelliJ for Java and Scala (including Apache Spark development). Both these IDEs also support many common SQL-based technologies, out-of-the-box, and are easily extendable to add new technologies.

Athena Integration with PyCharm

Utilizing the extensibility of the JetBrains suite of professional development IDEs, it is simple to add Amazon Athena to the list of available database drivers and make JDBC (Java Database Connectivity) connections to Athena instances on AWS.

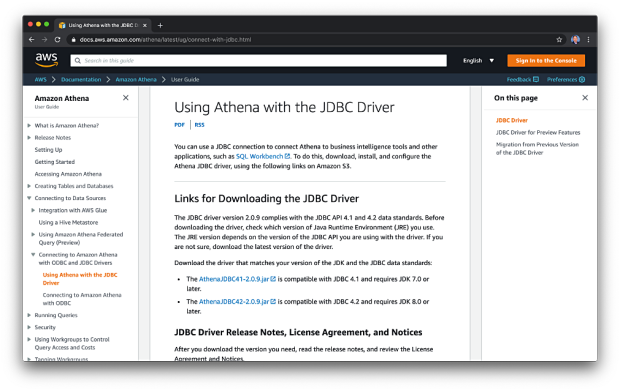

Downloading the Athena JDBC Driver

To start, download the Athena JDBC Driver from Amazon. There are two versions, based on your choice of Java JDKs. Considering Java 8 was released six years ago (March 2014), most users will likely want the AthenaJDBC42-2.0.9.jar is compatible with JDBC 4.2 and JDK 8.0 or later.

Installation Guide

AWS also supplies a JDBC Driver Installation and Configuration Guide. The guide, as well as the Athena JDBC and ODBC Drivers, are produced by Simba Technologies (acquired by Magnitude Software). Instructions for creating an Athena Driver starts on page 23.

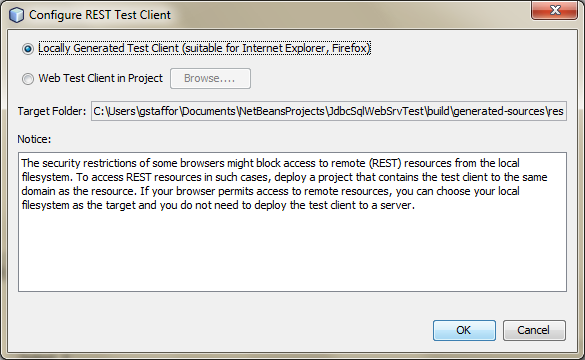

Creating a New Athena Driver

From PyCharm’s Database Tool Window, select the Drivers dialog box, select the downloaded Athena JDBC Driver JAR. Select com.simba.athena.jdbc.Driver in the Class dropdown. Name the Driver, ‘Amazon Athena.’

You can configure the Athena Driver further, using the Options and Advanced tabs.

Creating a New Athena Data Source

From PyCharm’s Database Tool Window, select the Data Source dialog box to create a new connection to your Athena instance. Choose ‘Amazon Athena’ from the list of available Database Drivers.

You will need four items to create an Athena Data Source:

- Your IAM User Access Key ID

- Your IAM User Secret Access Key

- The AWS Region of your Athena instance (e.g., us-east-1)

- An existing S3 bucket location to store query results

The Athena connection URL is a combination of the AWS Region and the S3 bucket, items 3 and 4, above. The format of the Athena connection URL is as follows.

jdbc:awsathena://AwsRegion=your-region;S3OutputLocation=s3://your-bucket-name/query-results-path

Give the new Athena Data Source a logical Name, input the User (Access Key ID), Password (Secret Access Key), and the Athena URL. To test the Athena Data Source, use the ‘Test Connection’ button.

You can create multiple Athena Data Sources using the Athena Driver. For example, you may have separate Development, Test, and Production instances of Athena, each in a different AWS Account.

Data Access

Once a successful connection has been made, switching to the Schemas tab, you should see a list of available AWS Glue Data Catalog databases. Below, we see the AWS Glue Catalog, which we created in the prior post. This Glue Data Catalog database contains ten metadata tables, each corresponding to a semi-structured, file-based data source in an S3-based data lake.

In the example below, I have chosen to limit the new Athena Data Source to a single Data Catalog database, to which the Data Source’s IAM User has access. Applying the core AWS security principle of granting least privilege, IAM Users should only have the permissions required to perform a specific set of approved tasks. This principle applies to the Glue Data Catalog databases, metadata tables, and the underlying S3 data sources.

Querying Athena from PyCharm

From within the PyCharm’s Database Tool Window, you should now see a list of the metadata tables defined in your AWS Glue Data Catalog database(s), as well as the individual columns within each table.

Similar to the Athena Query Editor, you can write SQL queries against the database tables in PyCharm. Like the Athena Query Editor, PyCharm has standard features SQL syntax highlighting, code auto-completion, and query formatting. Right-click on the Athena Data Source and choose New, then Console, to start.

Be mindful when writing queries and searching the Internet for SQL references, the Athena query engine is based on Presto 0.172. The current version of Presto, 0.234, is more than 50 releases ahead of the current Athena version. Both Athena and Presto functionality continue to change and diverge. There are also additional considerations and limitations for SQL queries in Athena to be aware of.

Whereas the Athena Query Editor is limited to only one query per query tab, in PyCharm, we can write and run multiple SQL queries in the same console window and have multiple console sessions opened to Athena at the same time.

By default, PyCharm’s query results are limited to the first ten rows of data. The number of rows displayed, as well as many other preferences, can be changed in the PyCharm’s Database Preferences dialog box.

Saving Queries and Exporting Results

In PyCharm, Athena queries can be saved as part of your PyCharm projects, as .sql files. Whereas the Athena Query Editor is limited to CSV, in PyCharm, query results can be exported in a variety of standard data file formats.

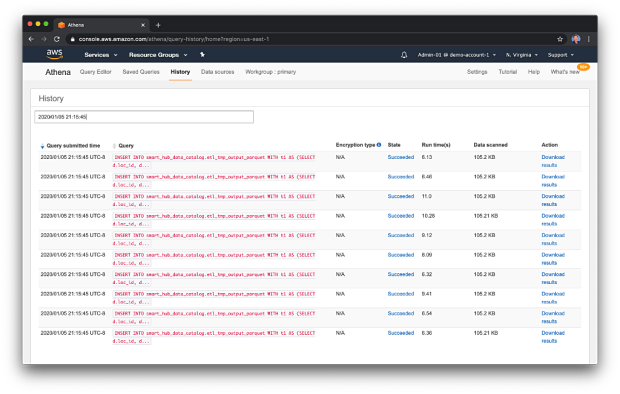

Athena Query History

All Athena queries ran from PyCharm are recorded in the History tab of the Athena Console. Although PyCharm shows query run times, the Athena History tab also displays the amount of data scanned. Knowing the query run time and volume of data scanned is useful when performance tuning queries.

Other IDEs

The technique shown for JetBrains PyCharm can also be applied to other JetBrains products, including GoLand, DataGrip, PhpStorm, and IntelliJ (shown below).

This blog represents my own view points and not of my employer, Amazon Web Services.

Getting Started with Data Analysis on AWS using AWS Glue, Amazon Athena, and QuickSight: Part 1

Posted by Gary A. Stafford in AWS, Bash Scripting, Big Data, Cloud, Python, Serverless, Software Development on January 5, 2020

Introduction

According to Wikipedia, data analysis is “a process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusion, and supporting decision-making.” In this two-part post, we will explore how to get started with data analysis on AWS, using the serverless capabilities of Amazon Athena, AWS Glue, Amazon QuickSight, Amazon S3, and AWS Lambda. We will learn how to use these complementary services to transform, enrich, analyze, and visualize semi-structured data.

Data Analysis—discovering useful information, informing conclusion, and supporting decision-making. –Wikipedia

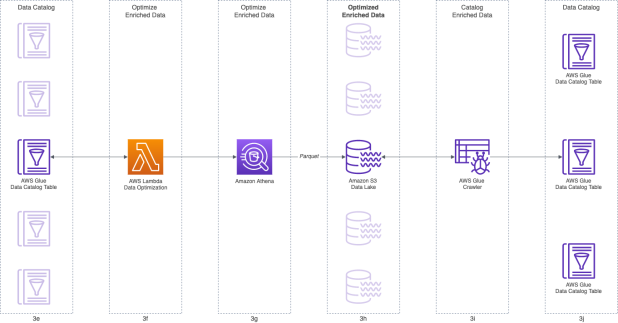

In part one, we will begin with raw, semi-structured data in multiple formats. We will discover how to ingest, transform, and enrich that data using Amazon S3, AWS Glue, Amazon Athena, and AWS Lambda. We will build an S3-based data lake, and learn how AWS leverages open-source technologies, such as Presto, Apache Hive, and Apache Parquet. In part two, we will learn how to further analyze and visualize the data using Amazon QuickSight. Here’s a quick preview of what we will build in part one of the post.

Demonstration

In this demonstration, we will adopt the persona of a large, US-based electric energy provider. The energy provider has developed its next-generation Smart Electrical Monitoring Hub (Smart Hub). They have sold the Smart Hub to a large number of residential customers throughout the United States. The hypothetical Smart Hub wirelessly collects detailed electrical usage data from individual, smart electrical receptacles and electrical circuit meters, spread throughout the residence. Electrical usage data is encrypted and securely transmitted from the customer’s Smart Hub to the electric provider, who is running their business on AWS.

Customers are able to analyze their electrical usage with fine granularity, per device, and over time. The goal of the Smart Hub is to enable the customers, using data, to reduce their electrical costs. The provider benefits from a reduction in load on the existing electrical grid and a better distribution of daily electrical load as customers shift usage to off-peak times to save money.

Preview of post’s data in Amazon QuickSight.

Preview of post’s data in Amazon QuickSight.

The original concept for the Smart Hub was developed as part of a multi-day training and hackathon, I recently attended with an AWSome group of AWS Solutions Architects in San Francisco. As a team, we developed the concept of the Smart Hub integrated with a real-time, serverless, streaming data architecture, leveraging AWS IoT Core, Amazon Kinesis, AWS Lambda, and Amazon DynamoDB.

From left: Bruno Giorgini, Mahalingam (‘Mahali’) Sivaprakasam, Gary Stafford, Amit Kumar Agrawal, and Manish Agarwal.

From left: Bruno Giorgini, Mahalingam (‘Mahali’) Sivaprakasam, Gary Stafford, Amit Kumar Agrawal, and Manish Agarwal.

This post will focus on data analysis, as opposed to the real-time streaming aspect of data capture or how the data is persisted on AWS.

High-level AWS architecture diagram of the demonstration.

High-level AWS architecture diagram of the demonstration.

Featured Technologies

The following AWS services and open-source technologies are featured prominently in this post.

Amazon S3-based Data Lake

An Amazon S3-based Data Lake uses Amazon S3 as its primary storage platform. Amazon S3 provides an optimal foundation for a data lake because of its virtually unlimited scalability, from gigabytes to petabytes of content. Amazon S3 provides ‘11 nines’ (99.999999999%) durability. It has scalable performance, ease-of-use features, and native encryption and access control capabilities.

An Amazon S3-based Data Lake uses Amazon S3 as its primary storage platform. Amazon S3 provides an optimal foundation for a data lake because of its virtually unlimited scalability, from gigabytes to petabytes of content. Amazon S3 provides ‘11 nines’ (99.999999999%) durability. It has scalable performance, ease-of-use features, and native encryption and access control capabilities.

AWS Glue

AWS Glue is a fully managed extract, transform, and load (ETL) service to prepare and load data for analytics. AWS Glue discovers your data and stores the associated metadata (e.g., table definition and schema) in the AWS Glue Data Catalog. Once cataloged, your data is immediately searchable, queryable, and available for ETL.

AWS Glue is a fully managed extract, transform, and load (ETL) service to prepare and load data for analytics. AWS Glue discovers your data and stores the associated metadata (e.g., table definition and schema) in the AWS Glue Data Catalog. Once cataloged, your data is immediately searchable, queryable, and available for ETL.

AWS Glue Data Catalog

The AWS Glue Data Catalog is an Apache Hive Metastore compatible, central repository to store structural and operational metadata for data assets. For a given data set, store table definition, physical location, add business-relevant attributes, as well as track how the data has changed over time.

The AWS Glue Data Catalog is an Apache Hive Metastore compatible, central repository to store structural and operational metadata for data assets. For a given data set, store table definition, physical location, add business-relevant attributes, as well as track how the data has changed over time.

AWS Glue Crawler

An AWS Glue Crawler connects to a data store, progresses through a prioritized list of classifiers to extract the schema of your data and other statistics, and then populates the Glue Data Catalog with this metadata. Crawlers can run periodically to detect the availability of new data as well as changes to existing data, including table definition changes. Crawlers automatically add new tables, new partitions to an existing table, and new versions of table definitions. You can even customize Glue Crawlers to classify your own file types.

An AWS Glue Crawler connects to a data store, progresses through a prioritized list of classifiers to extract the schema of your data and other statistics, and then populates the Glue Data Catalog with this metadata. Crawlers can run periodically to detect the availability of new data as well as changes to existing data, including table definition changes. Crawlers automatically add new tables, new partitions to an existing table, and new versions of table definitions. You can even customize Glue Crawlers to classify your own file types.

AWS Glue ETL Job

An AWS Glue ETL Job is the business logic that performs extract, transform, and load (ETL) work in AWS Glue. When you start a job, AWS Glue runs a script that extracts data from sources, transforms the data, and loads it into targets. AWS Glue generates a PySpark or Scala script, which runs on Apache Spark.

An AWS Glue ETL Job is the business logic that performs extract, transform, and load (ETL) work in AWS Glue. When you start a job, AWS Glue runs a script that extracts data from sources, transforms the data, and loads it into targets. AWS Glue generates a PySpark or Scala script, which runs on Apache Spark.

Amazon Athena

Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. Athena supports and works with a variety of standard data formats, including CSV, JSON, Apache ORC, Apache Avro, and Apache Parquet. Athena is integrated, out-of-the-box, with AWS Glue Data Catalog. Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries that you run.

Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. Athena supports and works with a variety of standard data formats, including CSV, JSON, Apache ORC, Apache Avro, and Apache Parquet. Athena is integrated, out-of-the-box, with AWS Glue Data Catalog. Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries that you run.

The underlying technology behind Amazon Athena is Presto, the open-source distributed SQL query engine for big data, created by Facebook. According to the AWS, the Athena query engine is based on Presto 0.172 (released April 9, 2017). In addition to Presto, Athena uses Apache Hive to define tables.

Amazon QuickSight

Amazon QuickSight is a fully managed business intelligence (BI) service. QuickSight lets you create and publish interactive dashboards that can then be accessed from any device, and embedded into your applications, portals, and websites.

Amazon QuickSight is a fully managed business intelligence (BI) service. QuickSight lets you create and publish interactive dashboards that can then be accessed from any device, and embedded into your applications, portals, and websites.

AWS Lambda

AWS Lambda automatically runs code without requiring the provisioning or management servers. AWS Lambda automatically scales applications by running code in response to triggers. Lambda code runs in parallel. With AWS Lambda, you are charged for every 100ms your code executes and the number of times your code is triggered. You pay only for the compute time you consume.

AWS Lambda automatically runs code without requiring the provisioning or management servers. AWS Lambda automatically scales applications by running code in response to triggers. Lambda code runs in parallel. With AWS Lambda, you are charged for every 100ms your code executes and the number of times your code is triggered. You pay only for the compute time you consume.

Smart Hub Data

Everything in this post revolves around data. For the post’s demonstration, we will start with four categories of raw, synthetic data. Those data categories include Smart Hub electrical usage data, Smart Hub sensor mapping data, Smart Hub residential locations data, and electrical rate data. To demonstrate the capabilities of AWS Glue to handle multiple data formats, the four categories of raw data consist of three distinct file formats: XML, JSON, and CSV. I have attempted to incorporate as many ‘real-world’ complexities into the data without losing focus on the main subject of the post. The sample datasets are intentionally small to keep your AWS costs to a minimum for the demonstration.

To further reduce costs, we will use a variety of data partitioning schemes. According to AWS, by partitioning your data, you can restrict the amount of data scanned by each query, thus improving performance and reducing cost. We have very little data for the demonstration, in which case partitioning may negatively impact query performance. However, in a ‘real-world’ scenario, there would be millions of potential residential customers generating terabytes of data. In that case, data partitioning would be essential for both cost and performance.

Smart Hub Electrical Usage Data

The Smart Hub’s time-series electrical usage data is collected from the customer’s Smart Hub. In the demonstration’s sample electrical usage data, each row represents a completely arbitrary five-minute time interval. There are a total of ten electrical sensors whose electrical usage in kilowatt-hours (kW) is recorded and transmitted. Each Smart Hub records and transmits electrical usage for 10 device sensors, 288 times per day (24 hr / 5 min intervals), for a total of 2,880 data points per day, per Smart Hub. There are two days worth of usage data for the demonstration, for a total of 5,760 data points. The data is stored in JSON Lines format. The usage data will be partitioned in the Amazon S3-based data lake by date (e.g., ‘dt=2019-12-21’).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| {"loc_id":"b6a8d42425fde548","ts":1576915200,"data":{"s_01":0,"s_02":0.00502,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04167}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576915500,"data":{"s_01":0,"s_02":0.00552,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04147}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576915800,"data":{"s_01":0.29267,"s_02":0.00642,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04207}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576916100,"data":{"s_01":0.29207,"s_02":0.00592,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04137}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576916400,"data":{"s_01":0.29217,"s_02":0.00622,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04157}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576916700,"data":{"s_01":0,"s_02":0.00562,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04197}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576917000,"data":{"s_01":0,"s_02":0.00512,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04257}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576917300,"data":{"s_01":0,"s_02":0.00522,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04177}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576917600,"data":{"s_01":0,"s_02":0.00502,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04267}} | |

| {"loc_id":"b6a8d42425fde548","ts":1576917900,"data":{"s_01":0,"s_02":0.00612,"s_03":0,"s_04":0,"s_05":0,"s_06":0,"s_07":0,"s_08":0,"s_09":0,"s_10":0.04237}} |

Note the electrical usage data contains nested data. The electrical usage for each of the ten sensors is contained in a JSON array, within each time series entry. The array contains ten numeric values of type, double.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| { | |

| "loc_id": "b6a8d42425fde548", | |

| "ts": 1576916400, | |

| "data": { | |

| "s_01": 0.29217, | |

| "s_02": 0.00622, | |

| "s_03": 0, | |

| "s_04": 0, | |

| "s_05": 0, | |

| "s_06": 0, | |

| "s_07": 0, | |

| "s_08": 0, | |

| "s_09": 0, | |

| "s_10": 0.04157 | |

| } | |

| } |

Real data is often complex and deeply nested. Later in the post, we will see that AWS Glue can map many common data types, including nested data objects, as illustrated below.

Smart Hub Sensor Mappings

The Smart Hub sensor mappings data maps a sensor column in the usage data (e.g., ‘s_01’ to the corresponding actual device (e.g., ‘Central Air Conditioner’). The data contains the device location, wattage, and the last time the record was modified. The data is also stored in JSON Lines format. The sensor mappings data will be partitioned in the Amazon S3-based data lake by the state of the residence (e.g., ‘state=or’ for Oregon).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| {"loc_id":"b6a8d42425fde548","id":"s_01","description":"Central Air Conditioner","location":"N/A","watts":3500,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_02","description":"Ceiling Fan","location":"Master Bedroom","watts":65,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_03","description":"Clothes Dryer","location":"Basement","watts":5000,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_04","description":"Clothes Washer","location":"Basement","watts":1800,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_05","description":"Dishwasher","location":"Kitchen","watts":900,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_06","description":"Flat Screen TV","location":"Living Room","watts":120,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_07","description":"Microwave Oven","location":"Kitchen","watts":1000,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_08","description":"Coffee Maker","location":"Kitchen","watts":900,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_09","description":"Hair Dryer","location":"Master Bathroom","watts":2000,"last_modified":1559347200} | |

| {"loc_id":"b6a8d42425fde548","id":"s_10","description":"Refrigerator","location":"Kitchen","watts":500,"last_modified":1559347200} |

Smart Hub Locations

The Smart Hub locations data contains the geospatial coordinates, home address, and timezone for each residential Smart Hub. The data is stored in CSV format. The data for the four cities included in this demonstration originated from OpenAddresses, ‘the free and open global address collection.’ There are approximately 4k location records. The location data will be partitioned in the Amazon S3-based data lake by the state of the residence where the Smart Hub is installed (e.g., ‘state=or’ for Oregon).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| lon | lat | number | street | unit | city | district | region | postcode | id | hash | tz | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| -122.8077278 | 45.4715614 | 6635 | SW JUNIPER TER | 97008 | b6a8d42425fde548 | America/Los_Angeles | ||||||

| -122.8356634 | 45.4385864 | 11225 | SW PINTAIL LOOP | 97007 | 08ae3df798df8b90 | America/Los_Angeles | ||||||

| -122.8252379 | 45.4481709 | 9930 | SW WRANGLER PL | 97008 | 1c7e1f7df752663e | America/Los_Angeles | ||||||

| -122.8354211 | 45.4535977 | 9174 | SW PLATINUM PL | 97007 | b364854408ee431e | America/Los_Angeles | ||||||

| -122.8315771 | 45.4949449 | 15040 | SW MILLIKAN WAY | # 233 | 97003 | 0e97796ba31ba3b4 | America/Los_Angeles | |||||

| -122.7950339 | 45.4470259 | 10006 | SW CONESTOGA DR | # 113 | 97008 | 2b5307be5bfeb026 | America/Los_Angeles | |||||

| -122.8072836 | 45.4908594 | 12600 | SW CRESCENT ST | # 126 | 97005 | 4d74167f00f63f50 | America/Los_Angeles | |||||

| -122.8211801 | 45.4689303 | 7100 | SW 140TH PL | 97008 | c5568631f0b9de9c | America/Los_Angeles | ||||||

| -122.831154 | 45.4317057 | 15050 | SW MALLARD DR | # 101 | 97007 | dbd1321080ce9682 | America/Los_Angeles | |||||

| -122.8162856 | 45.4442878 | 10460 | SW 136TH PL | 97008 | 008faab8a9a3e519 | America/Los_Angeles |

Electrical Rates

Lastly, the electrical rate data contains the cost of electricity. In this demonstration, the assumption is that the rate varies by state, by month, and by the hour of the day. The data is stored in XML, a data export format still common to older, legacy systems. The electrical rate data will not be partitioned in the Amazon S3-based data lake.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| <?xml version="1.0" encoding="UTF-8"?> | |

| <root> | |

| <row> | |

| <state>or</state> | |

| <year>2019</year> | |

| <month>12</month> | |

| <from>19:00:00</from> | |

| <to>19:59:59</to> | |

| <type>peak</type> | |

| <rate>12.623</rate> | |

| </row> | |

| <row> | |

| <state>or</state> | |

| <year>2019</year> | |

| <month>12</month> | |

| <from>20:00:00</from> | |

| <to>20:59:59</to> | |

| <type>partial-peak</type> | |

| <rate>7.232</rate> | |

| </row> | |

| <row> | |

| <state>or</state> | |

| <year>2019</year> | |

| <month>12</month> | |

| <from>21:00:00</from> | |

| <to>21:59:59</to> | |

| <type>partial-peak</type> | |

| <rate>7.232</rate> | |

| </row> | |

| <row> | |

| <state>or</state> | |

| <year>2019</year> | |

| <month>12</month> | |

| <from>22:00:00</from> | |

| <to>22:59:59</to> | |

| <type>off-peak</type> | |

| <rate>4.209</rate> | |

| </row> | |

| </root> |

Data Analysis Process

Due to the number of steps involved in the data analysis process in the demonstration, I have divided the process into four logical stages: 1) Raw Data Ingestion, 2) Data Transformation, 3) Data Enrichment, and 4) Data Visualization and Business Intelligence (BI).

Full data analysis workflow diagram (click to enlarge…)

Full data analysis workflow diagram (click to enlarge…)

Raw Data Ingestion

In the Raw Data Ingestion stage, semi-structured CSV-, XML-, and JSON-format data files are copied to a secure Amazon Simple Storage Service (S3) bucket. Within the bucket, data files are organized into folders based on their physical data structure (schema). Due to the potentially unlimited number of data files, files are further organized (partitioned) into subfolders. Organizational strategies for data files are based on date, time, geographic location, customer id, or other common data characteristics.

This collection of semi-structured data files, S3 buckets, and partitions form what is referred to as a Data Lake. According to AWS, a data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale.

A series of AWS Glue Crawlers process the raw CSV-, XML-, and JSON-format files, extracting metadata, and creating table definitions in the AWS Glue Data Catalog. According to AWS, an AWS Glue Data Catalog contains metadata tables, where each table specifies a single data store.

Data Transformation

In the Data Transformation stage, the raw data in the previous stage is transformed. Data transformation may include both modifying the data and changing the data format. Data modifications include data cleansing, re-casting data types, changing date formats, field-level computations, and field concatenation.

The data is then converted from CSV-, XML-, and JSON-format to Apache Parquet format and written back to the Amazon S3-based data lake. Apache Parquet is a compressed, efficient columnar storage format. Amazon Athena, like many Cloud-based services, charges you by the amount of data scanned per query. Hence, using data partitioning, bucketing, compression, and columnar storage formats, like Parquet, will reduce query cost.

Lastly, the transformed Parquet-format data is cataloged to new tables, alongside the raw CSV, XML, and JSON data, in the Glue Data Catalog.

Data Enrichment

According to ScienceDirect, data enrichment or augmentation is the process of enhancing existing information by supplementing missing or incomplete data. Typically, data enrichment is achieved by using external data sources, but that is not always the case.

Data Enrichment—the process of enhancing existing information by supplementing missing or incomplete data. –ScienceDirect

In the Data Enrichment stage, the Parquet-format Smart Hub usage data is augmented with related data from the three other data sources: sensor mappings, locations, and electrical rates. The customer’s Smart Hub usage data is enriched with the customer’s device types, the customer’s timezone, and customer’s electricity cost per monitored period based on the customer’s geographic location and time of day.

Once the data is enriched, it is converted to Parquet and optimized for query performance, stored in the data lake, and cataloged. At this point, the original CSV-, XML-, and JSON-format raw data files, the transformed Parquet-format data files, and the Parquet-format enriched data files are all stored in the Amazon S3-based data lake and cataloged in the Glue Data Catalog.

Data Visualization

In the final Data Visualization and Business Intelligence (BI) stage, the enriched data is presented and analyzed. There are many enterprise-grade services available for visualization and Business Intelligence, which integrate with Athena. Amazon services include Amazon QuickSight, Amazon EMR, and Amazon SageMaker. Third-party solutions from AWS Partners, available on the AWS Marketplace, include Tableau, Looker, Sisense, and Domo. In this demonstration, we will focus on Amazon QuickSight.

Getting Started

Requirements

To follow along with the demonstration, you will need an AWS Account and a current version of the AWS CLI. To get the most from the demonstration, you should also have Python 3 and jq installed in your work environment.

Source Code

All source code for this post can be found on GitHub. Use the following command to clone a copy of the project.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| git clone \ | |

| –branch master –single-branch –depth 1 –no-tags \ | |

| https://github.com/garystafford/athena-glue-quicksight-demo.git |

Source code samples in this post are displayed as GitHub Gists, which will not display correctly on some mobile and social media browsers.

TL;DR?

Just want the jump in without reading the instructions? All the AWS CLI commands, found within the post, are consolidated in the GitHub project’s README file.

CloudFormation Stack

To start, create the ‘smart-hub-athena-glue-stack’ CloudFormation stack using the smart-hub-athena-glue.yml template. The template will create (3) Amazon S3 buckets, (1) AWS Glue Data Catalog Database, (5) Data Catalog Database Tables, (6) AWS Glue Crawlers, (1) AWS Glue ETL Job, and (1) IAM Service Role for AWS Glue.

Make sure to change the DATA_BUCKET, SCRIPT_BUCKET, and LOG_BUCKET variables, first, to your own unique S3 bucket names. I always suggest using the standard AWS 3-part convention of 1) descriptive name, 2) AWS Account ID or Account Alias, and 3) AWS Region, to name your bucket (e.g. ‘smart-hub-data-123456789012-us-east-1’).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| # *** CHANGE ME *** | |

| BUCKET_SUFFIX="123456789012-us-east-1" | |

| DATA_BUCKET="smart-hub-data-${BUCKET_SUFFIX}" | |

| SCRIPT_BUCKET="smart-hub-scripts-${BUCKET_SUFFIX}" | |

| LOG_BUCKET="smart-hub-logs-${BUCKET_SUFFIX}" | |

| aws cloudformation create-stack \ | |

| –stack-name smart-hub-athena-glue-stack \ | |

| –template-body file://cloudformation/smart-hub-athena-glue.yml \ | |

| –parameters ParameterKey=DataBucketName,ParameterValue=${DATA_BUCKET} \ | |

| ParameterKey=ScriptBucketName,ParameterValue=${SCRIPT_BUCKET} \ | |

| ParameterKey=LogBucketName,ParameterValue=${LOG_BUCKET} \ | |

| –capabilities CAPABILITY_NAMED_IAM |

Raw Data Files

Next, copy the raw CSV-, XML-, and JSON-format data files from the local project to the DATA_BUCKET S3 bucket (steps 1a-1b in workflow diagram). These files represent the beginnings of the S3-based data lake. Each category of data uses a different strategy for organizing and separating the files. Note the use of the Apache Hive-style partitions (e.g., /smart_hub_data_json/dt=2019-12-21). As discussed earlier, the assumption is that the actual, large volume of data in the data lake would necessitate using partitioning to improve query performance.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| # location data | |

| aws s3 cp data/locations/denver_co_1576656000.csv \ | |

| s3://${DATA_BUCKET}/smart_hub_locations_csv/state=co/ | |

| aws s3 cp data/locations/palo_alto_ca_1576742400.csv \ | |

| s3://${DATA_BUCKET}/smart_hub_locations_csv/state=ca/ | |

| aws s3 cp data/locations/portland_metro_or_1576742400.csv \ | |

| s3://${DATA_BUCKET}/smart_hub_locations_csv/state=or/ | |

| aws s3 cp data/locations/stamford_ct_1576569600.csv \ | |

| s3://${DATA_BUCKET}/smart_hub_locations_csv/state=ct/ | |

| # sensor mapping data | |

| aws s3 cp data/mappings/ \ | |

| s3://${DATA_BUCKET}/sensor_mappings_json/state=or/ \ | |

| –recursive | |

| # electrical usage data | |

| aws s3 cp data/usage/2019-12-21/ \ | |

| s3://${DATA_BUCKET}/smart_hub_data_json/dt=2019-12-21/ \ | |

| –recursive | |

| aws s3 cp data/usage/2019-12-22/ \ | |

| s3://${DATA_BUCKET}/smart_hub_data_json/dt=2019-12-22/ \ | |

| –recursive | |

| # electricity rates data | |

| aws s3 cp data/rates/ \ | |

| s3://${DATA_BUCKET}/electricity_rates_xml/ \ | |

| –recursive |

Confirm the contents of the DATA_BUCKET S3 bucket with the following command.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws s3 ls s3://${DATA_BUCKET}/ \ | |

| –recursive –human-readable –summarize |

There should be a total of (14) raw data files in the DATA_BUCKET S3 bucket.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| 2020-01-04 14:39:51 20.0 KiB electricity_rates_xml/2019_12_1575270000.xml | |

| 2020-01-04 14:39:46 1.3 KiB sensor_mappings_json/state=or/08ae3df798df8b90_1550908800.json | |

| 2020-01-04 14:39:46 1.3 KiB sensor_mappings_json/state=or/1c7e1f7df752663e_1559347200.json | |

| 2020-01-04 14:39:46 1.3 KiB sensor_mappings_json/state=or/b6a8d42425fde548_1568314800.json | |

| 2020-01-04 14:39:47 44.9 KiB smart_hub_data_json/dt=2019-12-21/08ae3df798df8b90_1576915200.json | |

| 2020-01-04 14:39:47 44.9 KiB smart_hub_data_json/dt=2019-12-21/1c7e1f7df752663e_1576915200.json | |

| 2020-01-04 14:39:47 44.9 KiB smart_hub_data_json/dt=2019-12-21/b6a8d42425fde548_1576915200.json | |

| 2020-01-04 14:39:49 44.6 KiB smart_hub_data_json/dt=2019-12-22/08ae3df798df8b90_15770016000.json | |

| 2020-01-04 14:39:49 44.6 KiB smart_hub_data_json/dt=2019-12-22/1c7e1f7df752663e_1577001600.json | |

| 2020-01-04 14:39:49 44.6 KiB smart_hub_data_json/dt=2019-12-22/b6a8d42425fde548_15770016001.json | |

| 2020-01-04 14:39:39 89.7 KiB smart_hub_locations_csv/state=ca/palo_alto_ca_1576742400.csv | |

| 2020-01-04 14:39:37 84.2 KiB smart_hub_locations_csv/state=co/denver_co_1576656000.csv | |

| 2020-01-04 14:39:44 78.6 KiB smart_hub_locations_csv/state=ct/stamford_ct_1576569600.csv | |

| 2020-01-04 14:39:42 91.6 KiB smart_hub_locations_csv/state=or/portland_metro_or_1576742400.csv | |

| Total Objects: 14 | |

| Total Size: 636.7 KiB |

Lambda Functions

Next, package the (5) Python3.8-based AWS Lambda functions for deployment.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| pushd lambdas/athena-json-to-parquet-data || exit | |

| zip -r package.zip index.py | |

| popd || exit | |

| pushd lambdas/athena-csv-to-parquet-locations || exit | |

| zip -r package.zip index.py | |

| popd || exit | |

| pushd lambdas/athena-json-to-parquet-mappings || exit | |

| zip -r package.zip index.py | |

| popd || exit | |

| pushd lambdas/athena-complex-etl-query || exit | |

| zip -r package.zip index.py | |

| popd || exit | |

| pushd lambdas/athena-parquet-to-parquet-elt-data || exit | |

| zip -r package.zip index.py | |

| popd || exit |

Copy the five Lambda packages to the SCRIPT_BUCKET S3 bucket. The ZIP archive Lambda packages are accessed by the second CloudFormation stack, smart-hub-serverless. This CloudFormation stack, which creates the Lambda functions, will fail to deploy if the packages are not found in the SCRIPT_BUCKET S3 bucket.

I have chosen to place the packages in a different S3 bucket then the raw data files. In a real production environment, these two types of files would be separated, minimally, into separate buckets for security. Remember, only data should go into the data lake.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws s3 cp lambdas/athena-json-to-parquet-data/package.zip \ | |

| s3://${SCRIPT_BUCKET}/lambdas/athena_json_to_parquet_data/ | |

| aws s3 cp lambdas/athena-csv-to-parquet-locations/package.zip \ | |

| s3://${SCRIPT_BUCKET}/lambdas/athena_csv_to_parquet_locations/ | |

| aws s3 cp lambdas/athena-json-to-parquet-mappings/package.zip \ | |

| s3://${SCRIPT_BUCKET}/lambdas/athena_json_to_parquet_mappings/ | |

| aws s3 cp lambdas/athena-complex-etl-query/package.zip \ | |

| s3://${SCRIPT_BUCKET}/lambdas/athena_complex_etl_query/ | |

| aws s3 cp lambdas/athena-parquet-to-parquet-elt-data/package.zip \ | |

| s3://${SCRIPT_BUCKET}/lambdas/athena_parquet_to_parquet_elt_data/ |

Create the second ‘smart-hub-lambda-stack’ CloudFormation stack using the smart-hub-lambda.yml CloudFormation template. The template will create (5) AWS Lambda functions and (1) Lambda execution IAM Service Role.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws cloudformation create-stack \ | |

| –stack-name smart-hub-lambda-stack \ | |

| –template-body file://cloudformation/smart-hub-lambda.yml \ | |

| –capabilities CAPABILITY_NAMED_IAM |

At this point, we have deployed all of the AWS resources required for the demonstration using CloudFormation. We have also copied all of the raw CSV-, XML-, and JSON-format data files in the Amazon S3-based data lake.

AWS Glue Crawlers

If you recall, we created five tables in the Glue Data Catalog database as part of the CloudFormation stack. One table for each of the four raw data types and one table to hold temporary ELT data later in the demonstration. To confirm the five tables were created in the Glue Data Catalog database, use the Glue Data Catalog Console, or run the following AWS CLI / jq command.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue get-tables \ | |

| –database-name smart_hub_data_catalog \ | |

| | jq -r '.TableList[].Name' |

The five data catalog tables should be as follows.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| electricity_rates_xml | |

| etl_tmp_output_parquet | |

| sensor_mappings_json | |

| smart_hub_data_json | |

| smart_hub_locations_csv |

We also created six Glue Crawlers as part of the CloudFormation template. Four of these Crawlers are responsible for cataloging the raw CSV-, XML-, and JSON-format data from S3 into the corresponding, existing Glue Data Catalog database tables. The Crawlers will detect any new partitions and add those to the tables as well. Each Crawler corresponds to one of the four raw data types. Crawlers can be scheduled to run periodically, cataloging new data and updating data partitions. Crawlers will also create a Data Catalog database tables. We use Crawlers to create new tables, later in the post.

Run the four Glue Crawlers using the AWS CLI (step 1c in workflow diagram).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue start-crawler –name smart-hub-locations-csv | |

| aws glue start-crawler –name smart-hub-sensor-mappings-json | |

| aws glue start-crawler –name smart-hub-data-json | |

| aws glue start-crawler –name smart-hub-rates-xml |

You can check the Glue Crawler Console to ensure the four Crawlers finished successfully.

Alternately, use another AWS CLI / jq command.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue get-crawler-metrics \ | |

| | jq -r '.CrawlerMetricsList[] | "\(.CrawlerName): \(.StillEstimating), \(.TimeLeftSeconds)"' \ | |

| | grep "^smart-hub-[A-Za-z-]*" |

When complete, all Crawlers should all be in a state of ‘Still Estimating = false’ and ‘TimeLeftSeconds = 0’. In my experience, the Crawlers can take up one minute to start, after the estimation stage, and one minute to stop when complete.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| smart-hub-data-json: true, 0 | |

| smart-hub-etl-tmp-output-parquet: false, 0 | |

| smart-hub-locations-csv: false, 15 | |

| smart-hub-rates-parquet: false, 0 | |

| smart-hub-rates-xml: false, 15 | |

| smart-hub-sensor-mappings-json: false, 15 |

Successfully running the four Crawlers completes the Raw Data Ingestion stage of the demonstration.

Converting to Parquet with CTAS

With the Raw Data Ingestion stage completed, we will now transform the raw Smart Hub usage data, sensor mapping data, and locations data into Parquet-format using three AWS Lambda functions. Each Lambda subsequently calls Athena, which executes a CREATE TABLE AS SELECT SQL statement (aka CTAS) . Each Lambda executes a similar command, varying only by data source, data destination, and partitioning scheme. Below, is an example of the command used for the Smart Hub electrical usage data, taken from the Python-based Lambda, athena-json-to-parquet-data/index.py.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| query = \ | |

| "CREATE TABLE IF NOT EXISTS " + data_catalog + "." + output_directory + " " \ | |

| "WITH ( " \ | |

| " format = 'PARQUET', " \ | |

| " parquet_compression = 'SNAPPY', " \ | |

| " partitioned_by = ARRAY['dt'], " \ | |

| " external_location = 's3://" + data_bucket + "/" + output_directory + "' " \ | |

| ") AS " \ | |

| "SELECT * " \ | |

| "FROM " + data_catalog + "." + input_directory + ";" |

This compact, yet powerful CTAS statement converts a copy of the raw JSON- and CSV-format data files into Parquet-format, and partitions and stores the resulting files back into the S3-based data lake. Additionally, the CTAS SQL statement catalogs the Parquet-format data files into the Glue Data Catalog database, into new tables. Unfortunately, this method will not work for the XML-format raw data files, which we will tackle next.

The five deployed Lambda functions should be visible from the Lambda Console’s Functions tab.

Invoke the three Lambda functions using the AWS CLI. (part of step 2a in workflow diagram).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws lambda invoke \ | |

| –function-name athena-json-to-parquet-data \ | |

| response.json | |

| aws lambda invoke \ | |

| –function-name athena-csv-to-parquet-locations \ | |

| response.json | |

| aws lambda invoke \ | |

| –function-name athena-json-to-parquet-mappings \ | |

| response.json |

Here is an example of the same CTAS command, shown above for the Smart Hub electrical usage data, as it is was executed successfully by Athena.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| CREATE TABLE IF NOT EXISTS smart_hub_data_catalog.smart_hub_data_parquet | |

| WITH (format = 'PARQUET', | |

| parquet_compression = 'SNAPPY', | |

| partitioned_by = ARRAY['dt'], | |

| external_location = 's3://smart-hub-data-demo-account-1-us-east-1/smart_hub_data_parquet') | |

| AS | |

| SELECT * | |

| FROM smart_hub_data_catalog.smart_hub_data_json |

We can view any Athena SQL query from the Athena Console’s History tab. Clicking on a query (in pink) will copy it to the Query Editor tab and execute it. Below, we see the three SQL statements executed by the Lamba functions.

AWS Glue ETL Job for XML

If you recall, the electrical rate data is in XML format. The Lambda functions we just executed, converted the CSV and JSON data to Parquet using Athena. Currently, unlike CSV, JSON, ORC, Parquet, and Avro, Athena does not support the older XML data format. For the XML data files, we will use an AWS Glue ETL Job to convert the XML data to Parquet. The Glue ETL Job is written in Python and uses Apache Spark, along with several AWS Glue PySpark extensions. For this job, I used an existing script created in the Glue ETL Jobs Console as a base, then modified the script to meet my needs.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| import sys | |

| from awsglue.transforms import * | |

| from awsglue.utils import getResolvedOptions | |

| from pyspark.context import SparkContext | |

| from awsglue.context import GlueContext | |

| from awsglue.job import Job | |

| args = getResolvedOptions(sys.argv, [ | |

| 'JOB_NAME', | |

| 's3_output_path', | |

| 'source_glue_database', | |

| 'source_glue_table' | |

| ]) | |

| s3_output_path = args['s3_output_path'] | |

| source_glue_database = args['source_glue_database'] | |

| source_glue_table = args['source_glue_table'] | |

| sc = SparkContext() | |

| glueContext = GlueContext(sc) | |

| spark = glueContext.spark_session | |

| job = Job(glueContext) | |

| job.init(args['JOB_NAME'], args) | |

| datasource0 = glueContext. \ | |

| create_dynamic_frame. \ | |

| from_catalog(database=source_glue_database, | |

| table_name=source_glue_table, | |

| transformation_ctx="datasource0") | |

| applymapping1 = ApplyMapping.apply( | |

| frame=datasource0, | |

| mappings=[("from", "string", "from", "string"), | |

| ("to", "string", "to", "string"), | |

| ("type", "string", "type", "string"), | |

| ("rate", "double", "rate", "double"), | |

| ("year", "int", "year", "int"), | |

| ("month", "int", "month", "int"), | |

| ("state", "string", "state", "string")], | |

| transformation_ctx="applymapping1") | |

| resolvechoice2 = ResolveChoice.apply( | |

| frame=applymapping1, | |

| choice="make_struct", | |

| transformation_ctx="resolvechoice2") | |

| dropnullfields3 = DropNullFields.apply( | |

| frame=resolvechoice2, | |

| transformation_ctx="dropnullfields3") | |

| datasink4 = glueContext.write_dynamic_frame.from_options( | |

| frame=dropnullfields3, | |

| connection_type="s3", | |

| connection_options={ | |

| "path": s3_output_path, | |

| "partitionKeys": ["state"] | |

| }, | |

| format="parquet", | |

| transformation_ctx="datasink4") | |

| job.commit() |

The three Python command-line arguments the script expects (lines 10–12, above) are defined in the CloudFormation template, smart-hub-athena-glue.yml. Below, we see them on lines 10–12 of the CloudFormation snippet. They are injected automatically when the job is run and can be overridden from the command line when starting the job.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| GlueJobRatesToParquet: | |

| Type: AWS::Glue::Job | |

| Properties: | |

| GlueVersion: 1.0 | |

| Command: | |

| Name: glueetl | |

| PythonVersion: 3 | |

| ScriptLocation: !Sub "s3://${ScriptBucketName}/glue_scripts/rates_xml_to_parquet.py" | |

| DefaultArguments: { | |

| "–s3_output_path": !Sub "s3://${DataBucketName}/electricity_rates_parquet", | |

| "–source_glue_database": !Ref GlueDatabase, | |

| "–source_glue_table": "electricity_rates_xml", | |

| "–job-bookmark-option": "job-bookmark-enable", | |

| "–enable-spark-ui": "true", | |

| "–spark-event-logs-path": !Sub "s3://${LogBucketName}/glue-etl-jobs/" | |

| } | |

| Description: "Convert electrical rates XML data to Parquet" | |

| ExecutionProperty: | |

| MaxConcurrentRuns: 2 | |

| MaxRetries: 0 | |

| Name: rates-xml-to-parquet | |

| Role: !GetAtt "CrawlerRole.Arn" | |

| DependsOn: | |

| – CrawlerRole | |

| – GlueDatabase | |

| – DataBucket | |

| – ScriptBucket | |

| – LogBucket |

First, copy the Glue ETL Job Python script to the SCRIPT_BUCKET S3 bucket.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws s3 cp glue-scripts/rates_xml_to_parquet.py \ | |

| s3://${SCRIPT_BUCKET}/glue_scripts/ |

Next, start the Glue ETL Job (part of step 2a in workflow diagram). Although the conversion is a relatively simple set of tasks, the creation of the Apache Spark environment, to execute the tasks, will take several minutes. Whereas the Glue Crawlers took about 2 minutes on average, the Glue ETL Job could take 10–15 minutes in my experience. The actual execution time only takes about 1–2 minutes of the 10–15 minutes to complete. In my opinion, waiting up to 15 minutes is too long to be viable for ad-hoc jobs against smaller datasets; Glue ETL Jobs are definitely targeted for big data.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue start-job-run –job-name rates-xml-to-parquet |

To check on the status of the job, use the Glue ETL Jobs Console, or use the AWS CLI.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| # get status of most recent job (the one that is running) | |

| aws glue get-job-run \ | |

| –job-name rates-xml-to-parquet \ | |

| –run-id "$(aws glue get-job-runs \ | |

| –job-name rates-xml-to-parquet \ | |

| | jq -r '.JobRuns[0].Id')" |

When complete, you should see results similar to the following. Note the ‘JobRunState’ is ‘SUCCEEDED.’ This particular job ran for a total of 14.92 minutes, while the actual execution time was 2.25 minutes.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| { | |

| "JobRun": { | |

| "Id": "jr_f7186b26bf042ea7773ad08704d012d05299f080e7ac9b696ca8dd575f79506b", | |

| "Attempt": 0, | |

| "JobName": "rates-xml-to-parquet", | |

| "StartedOn": 1578022390.301, | |

| "LastModifiedOn": 1578023285.632, | |

| "CompletedOn": 1578023285.632, | |

| "JobRunState": "SUCCEEDED", | |

| "PredecessorRuns": [], | |

| "AllocatedCapacity": 10, | |

| "ExecutionTime": 135, | |

| "Timeout": 2880, | |

| "MaxCapacity": 10.0, | |

| "LogGroupName": "/aws-glue/jobs", | |

| "GlueVersion": "1.0" | |

| } | |

| } |

The job’s progress and the results are also visible in the AWS Glue Console’s ETL Jobs tab.

Detailed Apache Spark logs are also available in CloudWatch Management Console, which is accessible directly from the Logs link in the AWS Glue Console’s ETL Jobs tab.

The last step in the Data Transformation stage is to convert catalog the Parquet-format electrical rates data, created with the previous Glue ETL Job, using yet another Glue Crawler (part of step 2b in workflow diagram). Start the following Glue Crawler to catalog the Parquet-format electrical rates data.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue start-crawler –name smart-hub-rates-parquet |

This concludes the Data Transformation stage. The raw and transformed data is in the data lake, and the following nine tables should exist in the Glue Data Catalog.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| electricity_rates_parquet | |

| electricity_rates_xml | |

| etl_tmp_output_parquet | |

| sensor_mappings_json | |

| sensor_mappings_parquet | |

| smart_hub_data_json | |

| smart_hub_data_parquet | |

| smart_hub_locations_csv | |

| smart_hub_locations_parquet |

If we examine the tables, we should observe the data partitions we used to organize the data files in the Amazon S3-based data lake are contained in the table metadata. Below, we see the four partitions, based on state, of the Parquet-format locations data.

Data Enrichment

To begin the Data Enrichment stage, we will invoke the AWS Lambda, athena-complex-etl-query/index.py. This Lambda accepts input parameters (lines 28–30, below), passed in the Lambda handler’s event parameter. The arguments include the Smart Hub ID, the start date for the data requested, and the end date for the data requested. The scenario for the demonstration is that a customer with the location id value, using the electrical provider’s application, has requested data for a particular range of days (start date and end date), to visualize and analyze.

The Lambda executes a series of Athena INSERT INTO SQL statements, one statement for each of the possible Smart Hub connected electrical sensors, s_01 through s_10, for which there are values in the Smart Hub electrical usage data. Amazon just released the Amazon Athena INSERT INTO a table using the results of a SELECT query capability in September 2019, an essential addition to Athena. New Athena features are listed in the release notes.

Here, the SELECT query is actually a series of chained subqueries, using Presto SQL’s WITH clause capability. The queries join the Parquet-format Smart Hub electrical usage data sources in the S3-based data lake, with the other three Parquet-format, S3-based data sources: sensor mappings, locations, and electrical rates. The Parquet-format data is written as individual files to S3 and inserted into the existing ‘etl_tmp_output_parquet’ Glue Data Catalog database table. Compared to traditional relational database-based queries, the capabilities of Glue and Athena to enable complex SQL queries across multiple semi-structured data files, stored in S3, is truly amazing!

The capabilities of Glue and Athena to enable complex SQL queries across multiple semi-structured data files, stored in S3, is truly amazing!

Below, we see the SQL statement starting on line 43.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| import boto3 | |

| import os | |

| import logging | |

| import json | |

| from typing import Dict | |

| # environment variables | |

| data_catalog = os.getenv('DATA_CATALOG') | |

| data_bucket = os.getenv('DATA_BUCKET') | |

| # variables | |

| output_directory = 'etl_tmp_output_parquet' | |

| # uses list comprehension to generate the equivalent of: | |

| # ['s_01', 's_02', …, 's_09', 's_10'] | |

| sensors = [f's_{i:02d}' for i in range(1, 11)] | |

| # logging | |

| logger = logging.getLogger() | |

| logger.setLevel(logging.INFO) | |

| # athena client | |

| athena_client = boto3.client('athena') | |

| def handler(event, context): | |

| args = { | |

| "loc_id": event['loc_id'], | |

| "date_from": event['date_from'], | |

| "date_to": event['date_to'] | |

| } | |

| athena_query(args) | |

| return { | |

| 'statusCode': 200, | |

| 'body': json.dumps("function 'athena-complex-etl-query' complete") | |

| } | |

| def athena_query(args: Dict[str, str]): | |

| for sensor in sensors: | |

| query = \ | |

| "INSERT INTO " + data_catalog + "." + output_directory + " " \ | |

| "WITH " \ | |

| " t1 AS " \ | |

| " (SELECT d.loc_id, d.ts, d.data." + sensor + " AS kwh, l.state, l.tz " \ | |

| " FROM smart_hub_data_catalog.smart_hub_data_parquet d " \ | |

| " LEFT OUTER JOIN smart_hub_data_catalog.smart_hub_locations_parquet l " \ | |

| " ON d.loc_id = l.hash " \ | |

| " WHERE d.loc_id = '" + args['loc_id'] + "' " \ | |

| " AND d.dt BETWEEN cast('" + args['date_from'] + \ | |

| "' AS date) AND cast('" + args['date_to'] + "' AS date)), " \ | |

| " t2 AS " \ | |

| " (SELECT at_timezone(from_unixtime(t1.ts, 'UTC'), t1.tz) AS ts, " \ | |

| " date_format(at_timezone(from_unixtime(t1.ts, 'UTC'), t1.tz), '%H') AS rate_period, " \ | |

| " m.description AS device, m.location, t1.loc_id, t1.state, t1.tz, t1.kwh " \ | |

| " FROM t1 LEFT OUTER JOIN smart_hub_data_catalog.sensor_mappings_parquet m " \ | |

| " ON t1.loc_id = m.loc_id " \ | |

| " WHERE t1.loc_id = '" + args['loc_id'] + "' " \ | |

| " AND m.state = t1.state " \ | |

| " AND m.description = (SELECT m2.description " \ | |

| " FROM smart_hub_data_catalog.sensor_mappings_parquet m2 " \ | |

| " WHERE m2.loc_id = '" + args['loc_id'] + "' AND m2.id = '" + sensor + "')), " \ | |

| " t3 AS " \ | |

| " (SELECT substr(r.to, 1, 2) AS rate_period, r.type, r.rate, r.year, r.month, r.state " \ | |

| " FROM smart_hub_data_catalog.electricity_rates_parquet r " \ | |

| " WHERE r.year BETWEEN cast(date_format(cast('" + args['date_from'] + \ | |

| "' AS date), '%Y') AS integer) AND cast(date_format(cast('" + args['date_to'] + \ | |

| "' AS date), '%Y') AS integer)) " \ | |

| "SELECT replace(cast(t2.ts AS VARCHAR), concat(' ', t2.tz), '') AS ts, " \ | |

| " t2.device, t2.location, t3.type, t2.kwh, t3.rate AS cents_per_kwh, " \ | |

| " round(t2.kwh * t3.rate, 4) AS cost, t2.state, t2.loc_id " \ | |

| "FROM t2 LEFT OUTER JOIN t3 " \ | |

| " ON t2.rate_period = t3.rate_period " \ | |

| "WHERE t3.state = t2.state " \ | |

| "ORDER BY t2.ts, t2.device;" | |

| logger.info(query) | |

| response = athena_client.start_query_execution( | |

| QueryString=query, | |

| QueryExecutionContext={ | |

| 'Database': data_catalog | |

| }, | |

| ResultConfiguration={ | |

| 'OutputLocation': 's3://' + data_bucket + '/tmp/' + output_directory | |

| }, | |

| WorkGroup='primary' | |

| ) | |

| logger.info(response) |

Below, is an example of one of the final queries, for the s_10 sensor, as executed by Athena. All the input parameter values, Python variables, and environment variables have been resolved into the query.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| INSERT INTO smart_hub_data_catalog.etl_tmp_output_parquet | |

| WITH t1 AS (SELECT d.loc_id, d.ts, d.data.s_10 AS kwh, l.state, l.tz | |

| FROM smart_hub_data_catalog.smart_hub_data_parquet d | |

| LEFT OUTER JOIN smart_hub_data_catalog.smart_hub_locations_parquet l ON d.loc_id = l.hash | |

| WHERE d.loc_id = 'b6a8d42425fde548' | |

| AND d.dt BETWEEN cast('2019-12-21' AS date) AND cast('2019-12-22' AS date)), | |

| t2 AS (SELECT at_timezone(from_unixtime(t1.ts, 'UTC'), t1.tz) AS ts, | |

| date_format(at_timezone(from_unixtime(t1.ts, 'UTC'), t1.tz), '%H') AS rate_period, | |

| m.description AS device, | |

| m.location, | |

| t1.loc_id, | |

| t1.state, | |

| t1.tz, | |

| t1.kwh | |

| FROM t1 | |

| LEFT OUTER JOIN smart_hub_data_catalog.sensor_mappings_parquet m ON t1.loc_id = m.loc_id | |

| WHERE t1.loc_id = 'b6a8d42425fde548' | |

| AND m.state = t1.state | |

| AND m.description = (SELECT m2.description | |

| FROM smart_hub_data_catalog.sensor_mappings_parquet m2 | |

| WHERE m2.loc_id = 'b6a8d42425fde548' | |

| AND m2.id = 's_10')), | |

| t3 AS (SELECT substr(r.to, 1, 2) AS rate_period, r.type, r.rate, r.year, r.month, r.state | |

| FROM smart_hub_data_catalog.electricity_rates_parquet r | |

| WHERE r.year BETWEEN cast(date_format(cast('2019-12-21' AS date), '%Y') AS integer) | |

| AND cast(date_format(cast('2019-12-22' AS date), '%Y') AS integer)) | |

| SELECT replace(cast(t2.ts AS VARCHAR), concat(' ', t2.tz), '') AS ts, | |

| t2.device, | |

| t2.location, | |

| t3.type, | |

| t2.kwh, | |

| t3.rate AS cents_per_kwh, | |

| round(t2.kwh * t3.rate, 4) AS cost, | |

| t2.state, | |

| t2.loc_id | |

| FROM t2 | |

| LEFT OUTER JOIN t3 ON t2.rate_period = t3.rate_period | |

| WHERE t3.state = t2.state | |

| ORDER BY t2.ts, t2.device; |

Along with enriching the data, the query performs additional data transformation using the other data sources. For example, the Unix timestamp is converted to a localized timestamp containing the date and time, according to the customer’s location (line 7, above). Transforming dates and times is a frequent, often painful, data analysis task. Another example of data enrichment is the augmentation of the data with a new, computed column. The column’s values are calculated using the values of two other columns (line 33, above).

Invoke the Lambda with the following three parameters in the payload (step 3a in workflow diagram).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws lambda invoke \ | |

| –function-name athena-complex-etl-query \ | |

| –payload "{ \"loc_id\": \"b6a8d42425fde548\", | |

| \"date_from\": \"2019-12-21\", \"date_to\": \"2019-12-22\"}" \ | |

| response.json |

The ten INSERT INTO SQL statement’s result statuses (one per device sensor) are visible from the Athena Console’s History tab.

Each Athena query execution saves that query’s results to the S3-based data lake as individual, uncompressed Parquet-format data files. The data is partitioned in the Amazon S3-based data lake by the Smart Meter location ID (e.g., ‘loc_id=b6a8d42425fde548’).

Below is a snippet of the enriched data for a customer’s clothes washer (sensor ‘s_04’). Note the timestamp is now an actual date and time in the local timezone of the customer (e.g., ‘2019-12-21 20:10:00.000’). The sensor ID (‘s_04’) is replaced with the actual device name (‘Clothes Washer’). The location of the device (‘Basement’) and the type of electrical usage period (e.g. ‘peak’ or ‘partial-peak’) has been added. Finally, the cost column has been computed.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| ts | device | location | type | kwh | cents_per_kwh | cost | state | loc_id | |

|---|---|---|---|---|---|---|---|---|---|

| 2019-12-21 19:40:00.000 | Clothes Washer | Basement | peak | 0.0 | 12.623 | 0.0 | or | b6a8d42425fde548 | |

| 2019-12-21 19:45:00.000 | Clothes Washer | Basement | peak | 0.0 | 12.623 | 0.0 | or | b6a8d42425fde548 | |

| 2019-12-21 19:50:00.000 | Clothes Washer | Basement | peak | 0.1501 | 12.623 | 1.8947 | or | b6a8d42425fde548 | |

| 2019-12-21 19:55:00.000 | Clothes Washer | Basement | peak | 0.1497 | 12.623 | 1.8897 | or | b6a8d42425fde548 | |

| 2019-12-21 20:00:00.000 | Clothes Washer | Basement | partial-peak | 0.1501 | 7.232 | 1.0855 | or | b6a8d42425fde548 | |

| 2019-12-21 20:05:00.000 | Clothes Washer | Basement | partial-peak | 0.2248 | 7.232 | 1.6258 | or | b6a8d42425fde548 | |

| 2019-12-21 20:10:00.000 | Clothes Washer | Basement | partial-peak | 0.2247 | 7.232 | 1.625 | or | b6a8d42425fde548 | |

| 2019-12-21 20:15:00.000 | Clothes Washer | Basement | partial-peak | 0.2248 | 7.232 | 1.6258 | or | b6a8d42425fde548 | |

| 2019-12-21 20:20:00.000 | Clothes Washer | Basement | partial-peak | 0.2253 | 7.232 | 1.6294 | or | b6a8d42425fde548 | |

| 2019-12-21 20:25:00.000 | Clothes Washer | Basement | partial-peak | 0.151 | 7.232 | 1.092 | or | b6a8d42425fde548 |

To transform the enriched CSV-format data to Parquet-format, we need to catalog the CSV-format results using another Crawler, first (step 3d in workflow diagram).

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue start-crawler –name smart-hub-etl-tmp-output-parquet |

Optimizing Enriched Data

The previous step created enriched Parquet-format data. However, this data is not as optimized for query efficiency as it should be. Using the Athena INSERT INTO WITH SQL statement, allowed the data to be partitioned. However, the method does not allow the Parquet data to be easily combined into larger files and compressed. To perform both these optimizations, we will use one last Lambda, athena-parquet-to-parquet-elt-data/index.py. The Lambda will create a new location in the Amazon S3-based data lake, containing all the enriched data, in a single file and compressed using Snappy compression.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws lambda invoke \ | |

| –function-name athena-parquet-to-parquet-elt-data \ | |

| response.json |

The resulting Parquet file is visible in the S3 Management Console.

The final step in the Data Enrichment stage is to catalog the optimized Parquet-format enriched ETL data. To catalog the data, run the following Glue Crawler (step 3i in workflow diagram

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue start-crawler –name smart-hub-etl-output-parquet |

Final Data Lake and Data Catalog

We should now have the following ten top-level folders of partitioned data in the S3-based data lake. The ‘tmp’ folder may be ignored.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws s3 ls s3://${DATA_BUCKET}/ |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| PRE electricity_rates_parquet/ | |

| PRE electricity_rates_xml/ | |

| PRE etl_output_parquet/ | |

| PRE etl_tmp_output_parquet/ | |

| PRE sensor_mappings_json/ | |

| PRE sensor_mappings_parquet/ | |

| PRE smart_hub_data_json/ | |

| PRE smart_hub_data_parquet/ | |

| PRE smart_hub_locations_csv/ | |

| PRE smart_hub_locations_parquet/ |

Similarly, we should now have the following ten corresponding tables in the Glue Data Catalog. Use the AWS Glue Console to confirm the tables exist.

Alternately, use the following AWS CLI / jq command to list the table names.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| aws glue get-tables \ | |

| –database-name smart_hub_data_catalog \ | |

| | jq -r '.TableList[].Name' |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| electricity_rates_parquet | |

| electricity_rates_xml | |

| etl_output_parquet | |

| etl_tmp_output_parquet | |

| sensor_mappings_json | |

| sensor_mappings_parquet | |

| smart_hub_data_json | |

| smart_hub_data_parquet | |

| smart_hub_locations_csv | |

| smart_hub_locations_parquet |

‘Unknown’ Bug

You may have noticed the four tables created with the AWS Lambda functions, using the CTAS SQL statement, erroneously have the ‘Classification’ of ‘Unknown’ as opposed to ‘parquet’. I am not sure why, I believe it is a possible bug with the CTAS feature. It seems to have no adverse impact on the table’s functionality. However, to fix the issue, run the following set of commands. This aws glue update-table hack will switch the table’s ‘Classification’ to ‘parquet’.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| database=smart_hub_data_catalog | |

| tables=(smart_hub_locations_parquet sensor_mappings_parquet smart_hub_data_parquet etl_output_parquet) | |

| for table in ${tables}; do | |

| fixed_table=$(aws glue get-table \ | |

| –database-name "${database}" \ | |

| –name "${table}" \ | |

| | jq '.Table.Parameters.classification = "parquet" | del(.Table.DatabaseName) | del(.Table.CreateTime) | del(.Table.UpdateTime) | del(.Table.CreatedBy) | del(.Table.IsRegisteredWithLakeFormation)') | |

| fixed_table=$(echo ${fixed_table} | jq .Table) | |

| aws glue update-table \ | |

| –database-name "${database}" \ | |

| –table-input "${fixed_table}" | |

| echo "table '${table}' classification changed to 'parquet'" | |

| done |

The results of the fix may be seen from the AWS Glue Console. All ten tables are now classified correctly.

Explore the Data