Ten Ways to Leverage Generative AI for Development on AWS

Posted by Gary A. Stafford in AI/ML, AWS, Bash Scripting, Big Data, Build Automation, Client-Side Development, Cloud, DevOps, Enterprise Software Development, Kubernetes, Python, Serverless, Software Development, SQL on April 3, 2023

Explore ten ways you can use Generative AI coding tools to accelerate development and increase your productivity on AWS

Generative AI coding tools are a new class of software development tools that leverage machine learning algorithms to assist developers in writing code. These tools use AI models trained on vast amounts of code to offer suggestions for completing code snippets, writing functions, and even entire blocks of code.

Quote generated by OpenAI ChatGPT

Introduction

Combining the latest Generative AI coding tools with a feature-rich and extensible IDE and your coding skills will accelerate development and increase your productivity. In this post, we will look at ten examples of how you can use Generative AI coding tools on AWS:

- Application Development: Code, unit tests, and documentation

- Infrastructure as Code (IaC): AWS CloudFormation, AWS CDK, Terraform, and Ansible

- AWS Lambda: Serverless, event-driven functions

- IAM Policies: AWS IAM policies and Amazon S3 bucket policies

- Structured Query Language (SQL): Amazon RDS, Amazon Redshift, Amazon Athena, and Amazon EMR

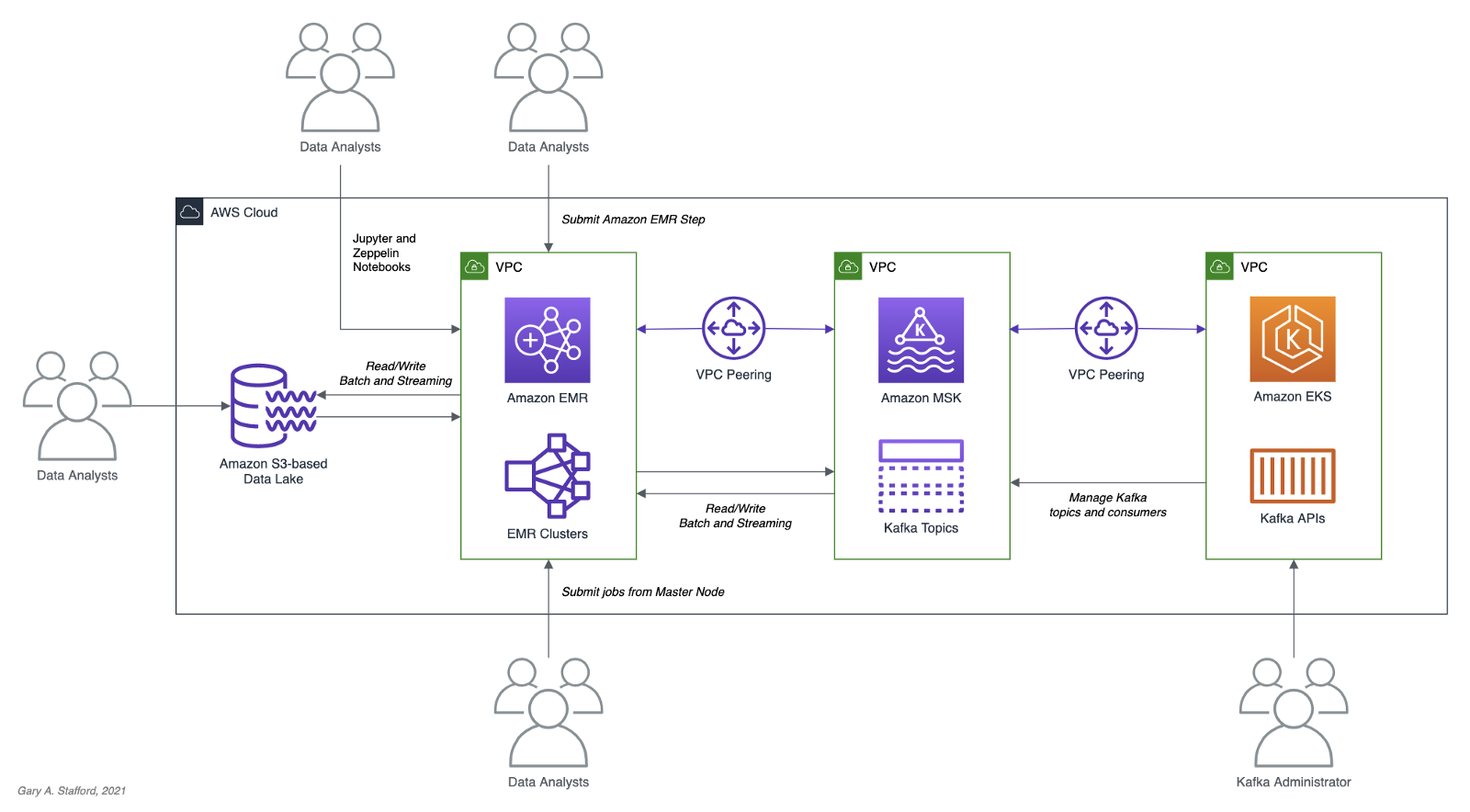

- Big Data: Apache Spark and Flink on Amazon EMR, AWS Glue, and Kinesis Data Analytics

- Configuration and Properties files: Amazon MSK, Amazon EMR, and Amazon OpenSearch

- Apache Airflow DAGs: Amazon MWAA

- Containerization: Kubernetes resources, Helm Charts, Dockerfiles for Amazon EKS

- Utility Scripts: PowerShell, Bash, Shell, and Python

Choosing a Generative AI Coding Tool

In my recent post, Accelerating Development with Generative AI-Powered Coding Tools, I reviewed six popular tools: ChatGPT, Copilot, CodeWhisperer, Tabnine, Bing, and ChatSonic.

For this post, we will use GitHub Copilot, powered by OpenAI Codex, a new AI system created by OpenAI. Copilot suggests code and entire functions in real time, right from your IDE. Copilot is trained on all languages that appear in GitHub’s public repositories. GitHub points out that the quality of suggestions you receive may depend on the volume and diversity of training data for that language. Several similar tools in this category support fewer languages than Copilot.

Copilot is currently available as an extension for Visual Studio Code, Visual Studio, Neovim, and the JetBrains suite of IDEs. The GitHub Copilot extension for Visual Studio Code (VS Code) already has 4.8 million downloads, and the GitHub Copilot Nightly extension, used for this post, has almost 280,000 downloads. I am also using the GitHub Copilot Labs extension in this post.

Ten Ways to Leverage Generative AI

Take a look at ten examples of how you can use Generative AI coding tools to increase your development productivity on AWS. All the code samples in this post can be found on GitHub.

1. Application Development

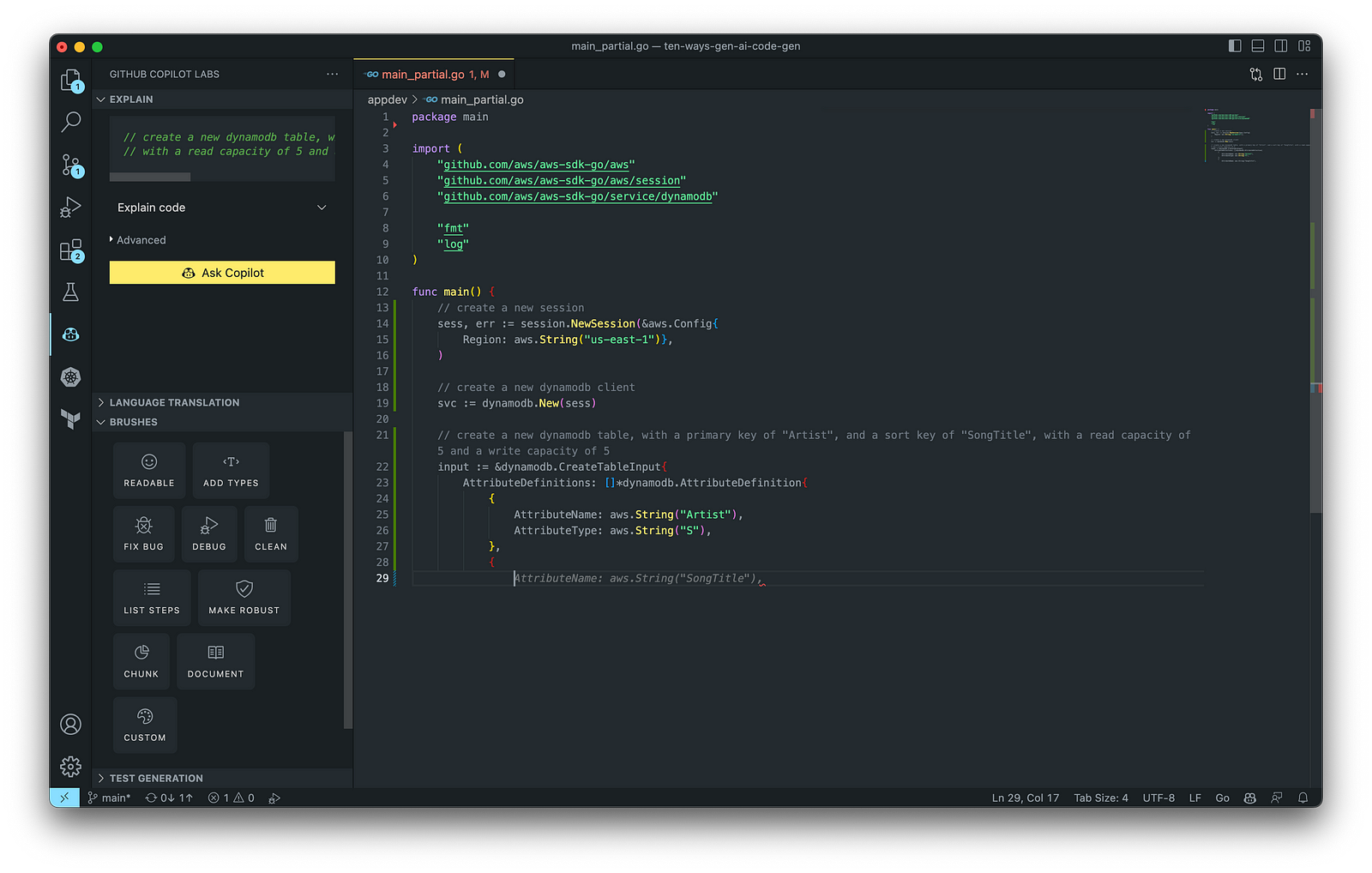

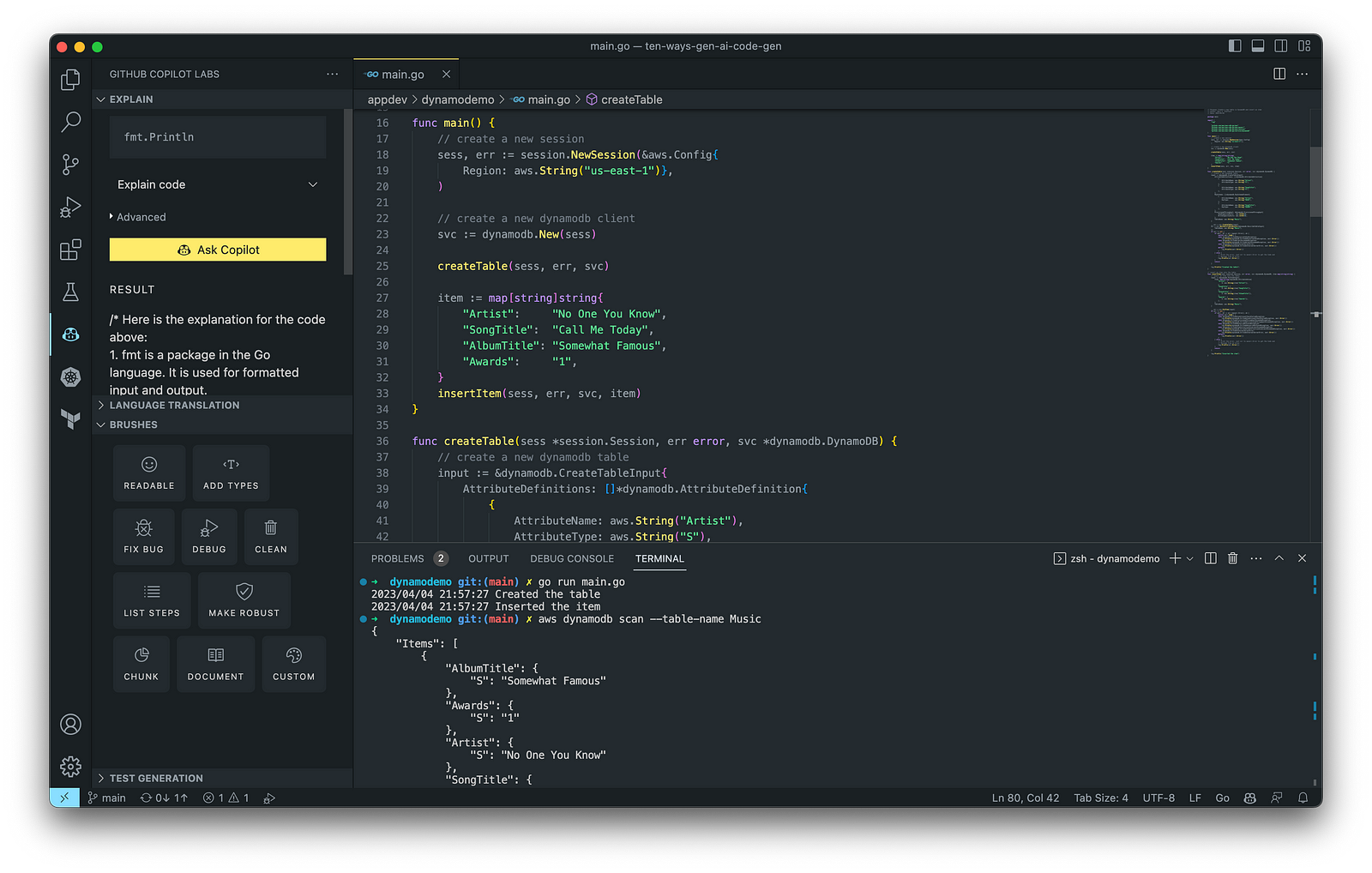

According to GitHub, Copilot is trained on billions of lines of code and turns natural language prompts into coding suggestions across dozens of languages. These features make Copilot ideal for developing applications, writing unit tests, and authoring documentation. You can use GitHub Copilot to assist with writing software applications in nearly any popular language, including Go.

The final application, which uses the AWS SDK for Go to create an Amazon DynamoDB table, shown below, was formatted using the Go extension by Google and optimized using the ‘Readable,’ ‘Make Robust,’ and ‘Fix Bug’ GitHub Code Brushes.

Generating Unit Tests

Using JavaScript and TypeScript, you can take advantage of TestPilot to generate unit tests based on your existing code and documentation. TestPilot, part of GitHub Copilot Labs, uses GitHub Copilot’s AI technology.

2. Infrastructure as Code (IaC)

Widely used Infrastructure as Code (IaC) tools include Pulumi, AWS CloudFormation, Azure ARM Templates, Google Deployment Manager, AWS Cloud Development Kit (AWS CDK), Microsoft Bicep, and Ansible. Many IaC tools use JSON- or YAML-based domain-specific languages (DSLs); AWS CDK and Pulumi are notable exceptions that use general-purpose programming languages.

AWS CloudFormation

AWS CloudFormation is an Infrastructure as Code (IaC) service that allows you to easily model, provision, and manage AWS and third-party resources. The CloudFormation template is a JSON or YAML formatted text file. You can use GitHub Copilot to assist with writing IaC, including AWS CloudFormation in either JSON or YAML.

You can use the YAML Language Support by Red Hat extension to write YAML in VS Code.

VS Code has native JSON support with JSON Schema Store, which includes AWS CloudFormation. VS Code uses the CloudFormation schema to provide IntelliSense and to flag schema errors in templates.
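
To make the template format concrete, here is a minimal sketch that builds a small CloudFormation template as a Python dictionary and serializes it to JSON. It is not the sample from this post: the `build_template` helper, the `DataBucket` logical ID, and the choice of a versioned S3 bucket are assumptions made purely for illustration.

```python
import json


def build_template(description: str) -> dict:
    """Return a minimal CloudFormation template as a Python dict.

    The logical ID "DataBucket" and the output name "BucketName" are
    hypothetical names chosen for this illustration.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": description,
        "Resources": {
            "DataBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep prior object versions when objects are overwritten.
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
        "Outputs": {
            "BucketName": {
                "Description": "Name of the created bucket",
                # Ref on an S3 bucket resolves to the bucket name.
                "Value": {"Ref": "DataBucket"},
            }
        },
    }


def to_json(template: dict) -> str:
    """Serialize the template to indented JSON, ready to save to a file."""
    return json.dumps(template, indent=2)
```

Calling `to_json(build_template("Example bucket stack"))` yields a JSON document that could be saved as a template file and checked against the CloudFormation schema in VS Code.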

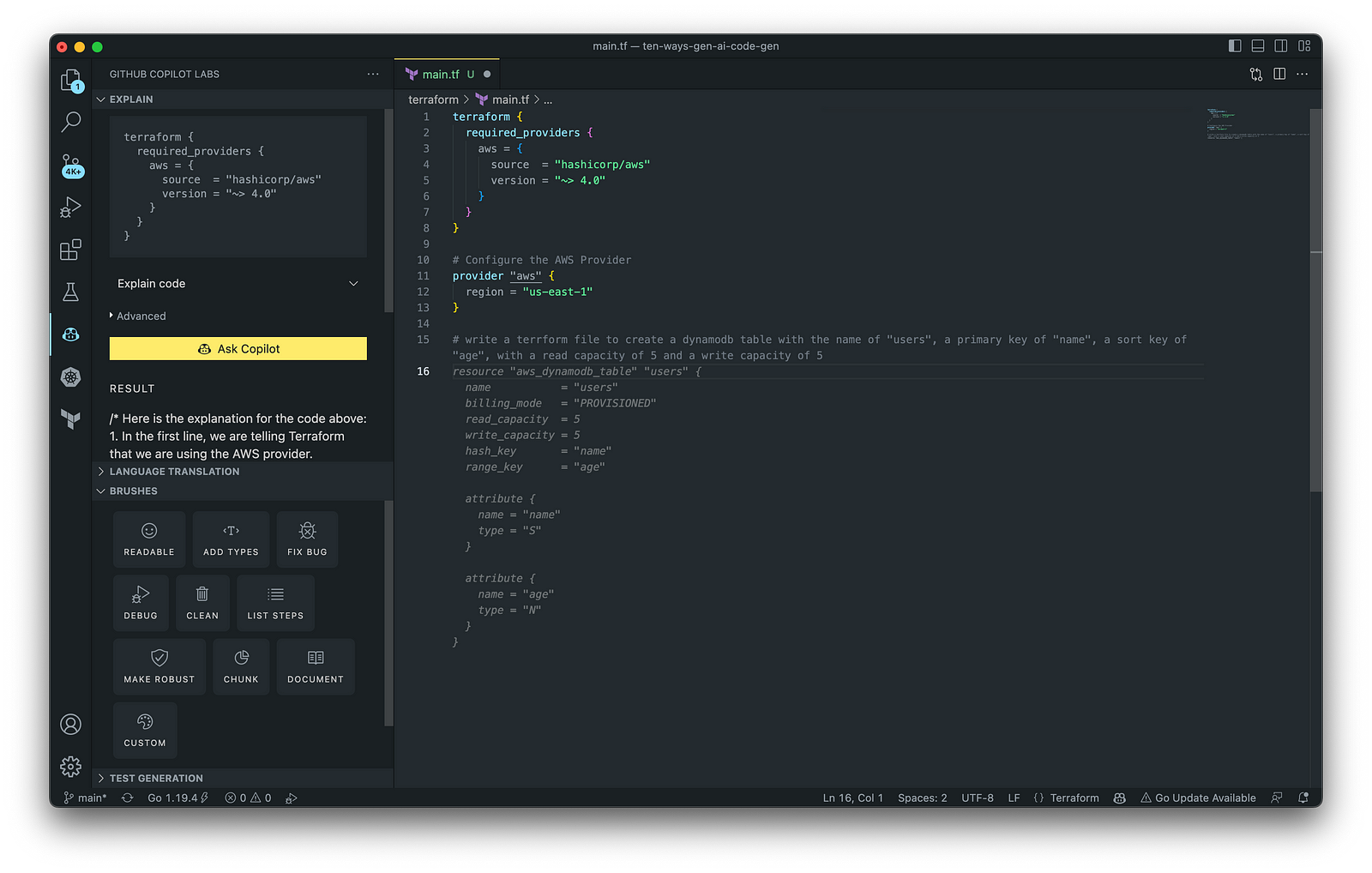

HashiCorp Terraform

In addition to AWS CloudFormation, HashiCorp Terraform is an extremely popular IaC tool. According to HashiCorp, Terraform lets you define resources and infrastructure in human-readable, declarative configuration files and manages your infrastructure’s lifecycle. Using Terraform has several advantages over manually managing your infrastructure.

Terraform plugins called providers let Terraform interact with cloud platforms and other services via their application programming interfaces (APIs). You can use the AWS Provider to interact with the many resources supported by AWS.

3. AWS Lambda

Lambda, according to AWS, is a serverless, event-driven compute service that lets you run code for virtually any application or backend service without provisioning or managing servers. You can trigger Lambda from over 200 AWS services and software as a service (SaaS) applications and only pay for what you use. AWS Lambda natively supports Java, Go, PowerShell, Node.js, C#, Python, and Ruby. AWS Lambda also provides a Runtime API allowing you to use additional programming languages to author your functions.

You can use GitHub Copilot to assist with writing AWS Lambda functions in any of the natively supported languages. You can further optimize the resulting Lambda code with GitHub’s Code Brushes.

The final Python-based AWS Lambda, below, was formatted using the Black Formatter and Flake8 extensions and optimized using the ‘Readable,’ ‘Debug,’ ‘Make Robust,’ and ‘Fix Bug’ GitHub Code Brushes.
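
As a rough illustration of the kind of function Copilot can help draft (this is not the post's original listing), here is a minimal sketch of a Python Lambda handler. It assumes the function is triggered by an Amazon S3 event notification and simply reports which objects were affected; the response shape is an assumption chosen for readability.

```python
import json
import urllib.parse


def lambda_handler(event, context):
    """Collect the bucket and key of each object in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        key = s3.get("object", {}).get("key")
        if bucket is None or key is None:
            # Skip records that are not S3 object notifications.
            continue
        # Object keys arrive URL-encoded in S3 event notifications.
        objects.append({"bucket": bucket, "key": urllib.parse.unquote_plus(key)})

    return {"statusCode": 200, "body": json.dumps({"objects": objects})}
```

Because the handler is a plain function, it can be exercised locally by passing a hand-built event dictionary and `None` for the context.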

You can easily convert the Python-based AWS Lambda to Java using GitHub Copilot Labs’ ability to translate code between languages. Install the GitHub Copilot Labs extension for VS Code to try out language translation.

4. IAM Policies

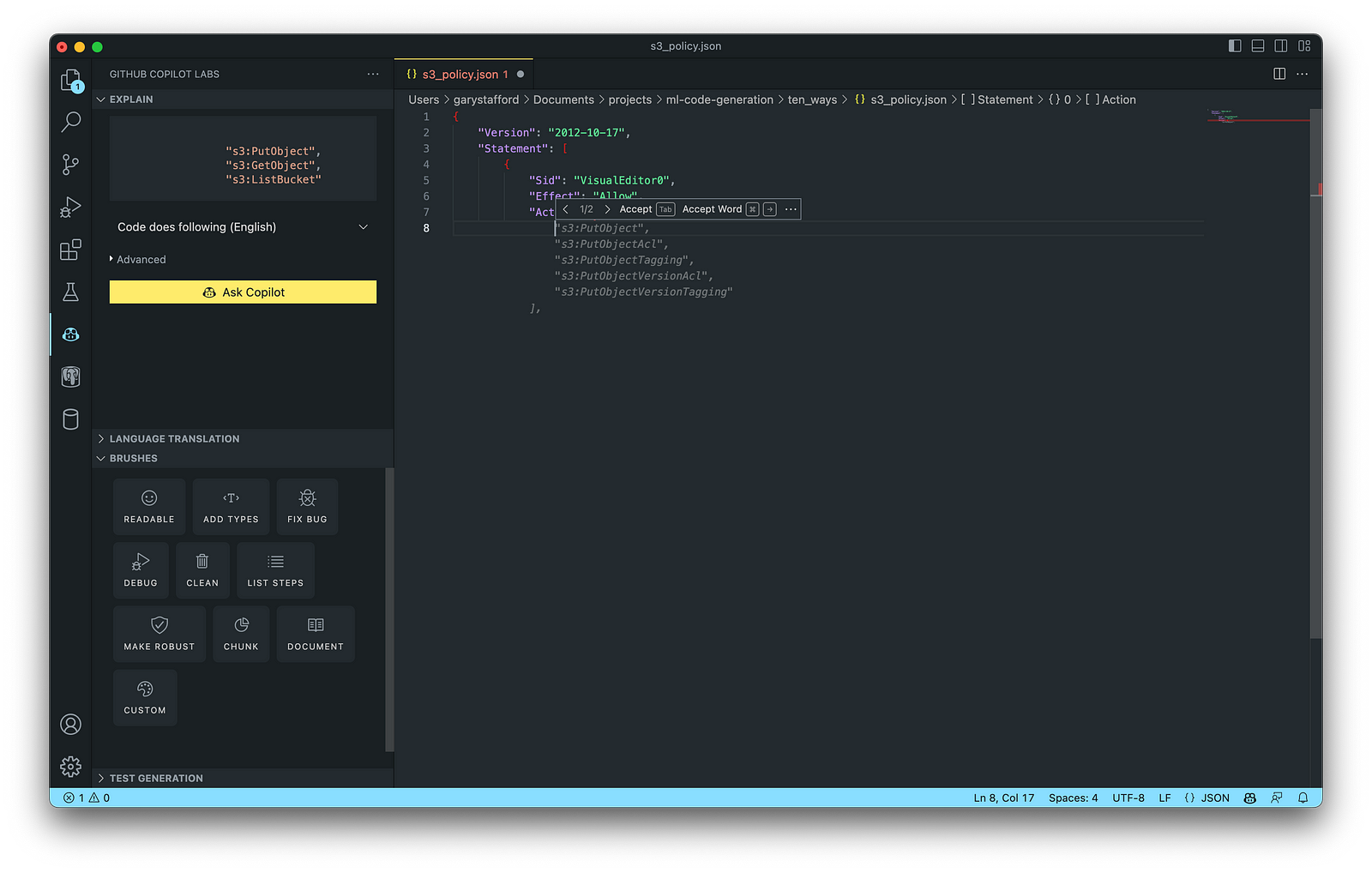

AWS Identity and Access Management (AWS IAM) is a web service that helps you securely control access to AWS resources. According to AWS, you manage access in AWS by creating policies and attaching them to IAM identities (users, groups of users, or roles) or AWS resources. A policy is an object in AWS that defines its permissions when associated with an identity or resource. IAM policies are stored on AWS as JSON documents. You can use GitHub Copilot to assist in writing IAM Policies.

The final AWS IAM Policy, below, was formatted using VS Code’s built-in JSON support.
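
For readers who want to see the JSON structure described above, here is a minimal sketch (not the post's original policy) that assembles an identity-based policy in Python. The `read_only_bucket_policy` helper, the `Sid` values, and the read-only S3 use case are assumptions for illustration.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Build an IAM policy document granting read-only access to one S3 bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reading applies to the objects inside the bucket.
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


def to_json(policy: dict) -> str:
    """Serialize the policy to the JSON form that IAM stores."""
    return json.dumps(policy, indent=2)
```

Splitting the statements by resource type keeps each action scoped to the ARN it actually applies to, which is one common way to stay close to least privilege.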

5. Structured Query Language (SQL)

SQL has many use cases on AWS, including Amazon Relational Database Service (RDS) for MySQL, PostgreSQL, MariaDB, Oracle, and SQL Server databases. SQL is also used with Amazon Aurora, Amazon Redshift, Amazon Athena, and with Presto, Trino (formerly PrestoSQL), and Apache Hive on Amazon EMR.

You can use IDEs like VS Code with its SQL dialect-specific language support and formatting extensions. You can further optimize the resulting SQL statements with GitHub’s Code Brushes.

The final PostgreSQL script, below, was formatted using the Sql Formatter extension and optimized using the ‘Readable’ and ‘Fix Bug’ GitHub Code Brushes.

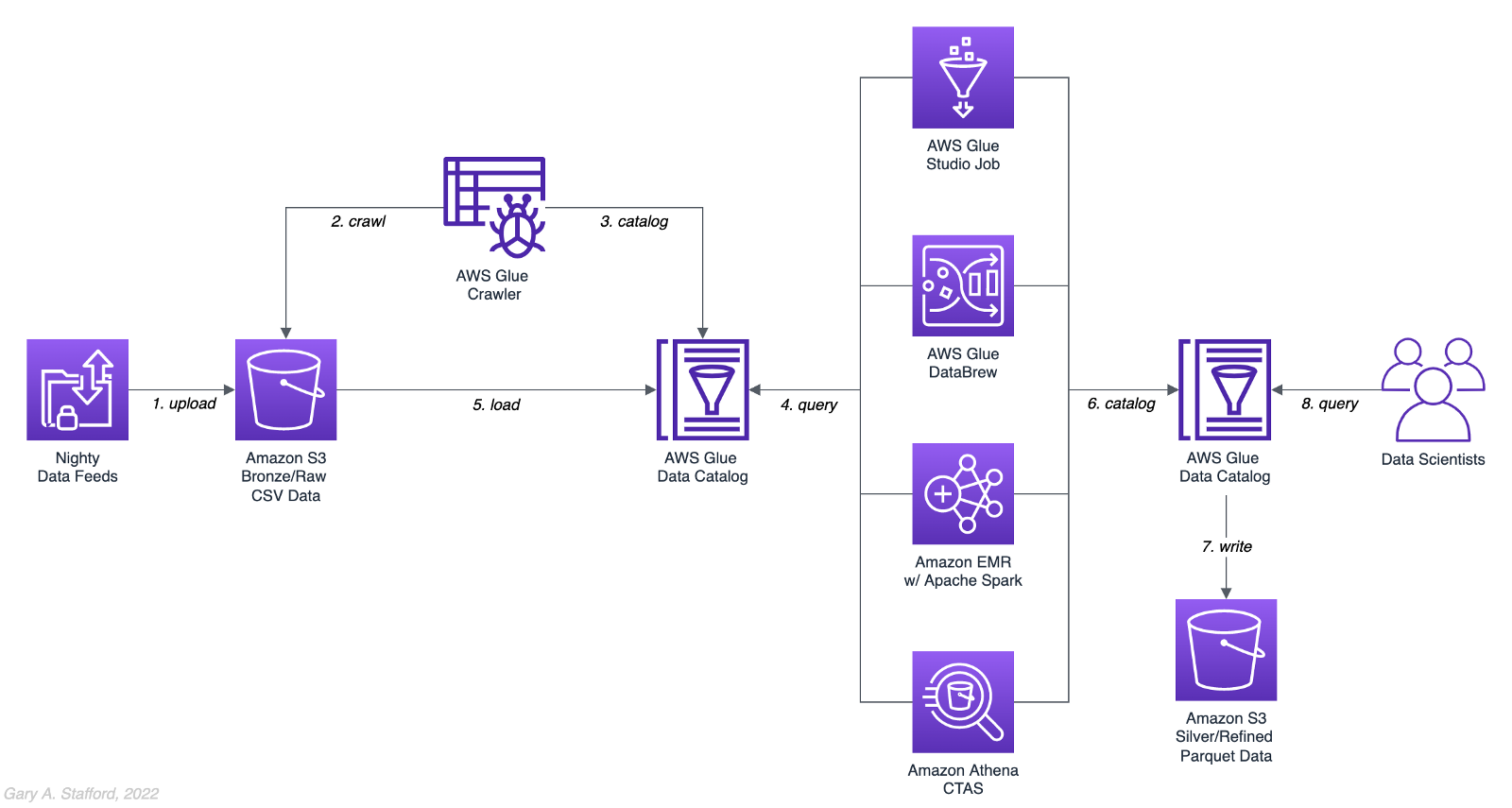

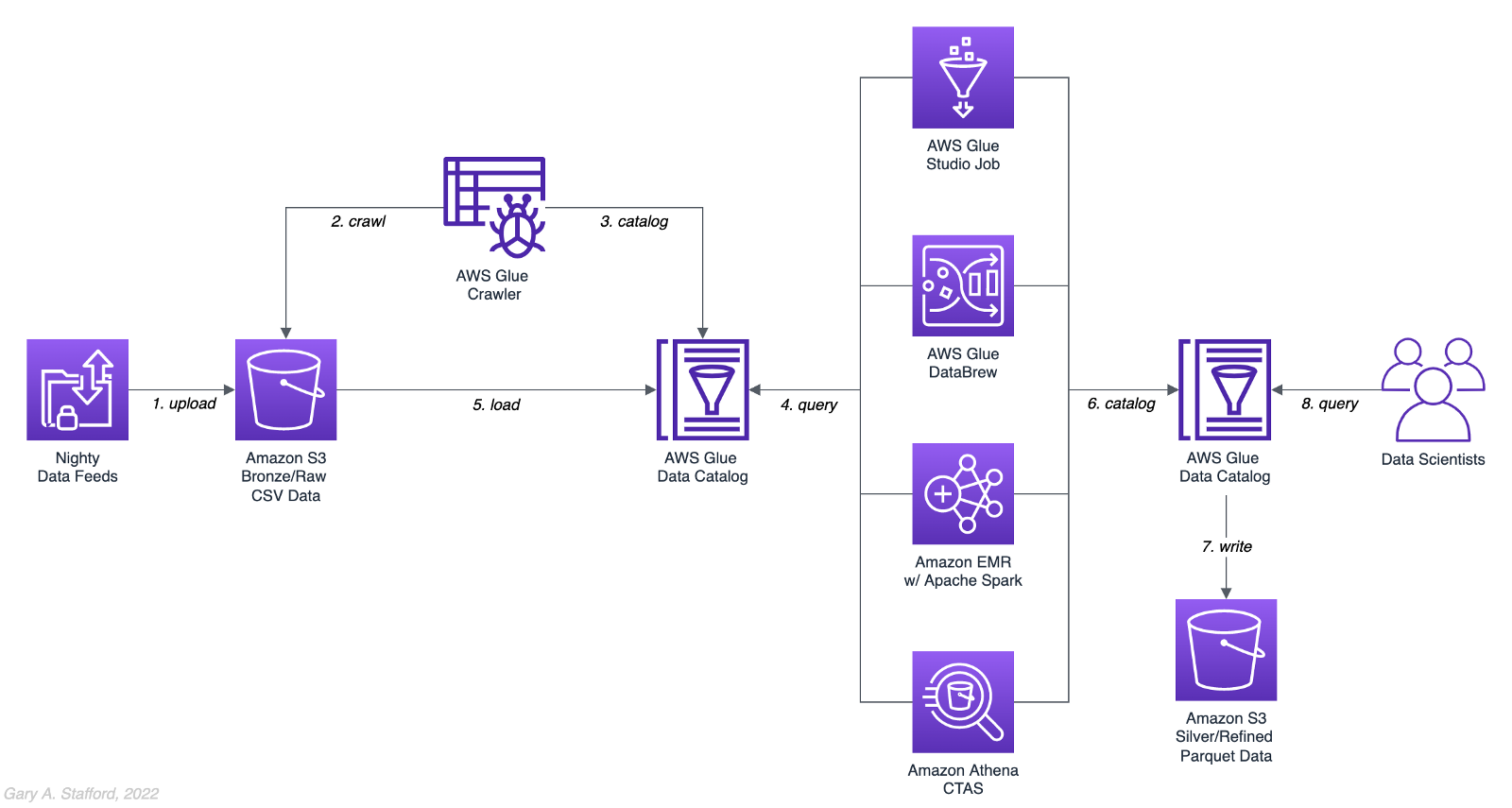

6. Big Data

Big Data, according to AWS, can be described in terms of data management challenges that, due to the increasing volume, velocity, and variety of data, cannot be solved with traditional databases. AWS offers managed versions of Apache Spark, Apache Flink, Apache Zeppelin, and Jupyter Notebooks on Amazon EMR, AWS Glue, and Amazon Kinesis Data Analytics (KDA).

Apache Spark

According to their website, Apache Spark is a multi-language engine for executing data engineering, data science, and machine learning on single-node machines or clusters. Spark jobs can be written in various languages, including Python (PySpark), SQL, Scala, Java, and R. Apache Spark is available on a growing number of AWS services, including Amazon EMR and AWS Glue.

The final Python-based Apache Spark job, below, was formatted using the Black Formatter extension and optimized using the ‘Readable,’ ‘Document,’ ‘Make Robust,’ and ‘Fix Bug’ GitHub Code Brushes.

7. Configuration and Properties Files

According to TechTarget, a configuration file (aka config) defines the parameters, options, settings, and preferences applied to operating systems, infrastructure devices, and applications. There are many examples of configuration and properties files on AWS, including Amazon MSK Connect (Kafka Connect Source/Sink Connectors), Amazon OpenSearch (Filebeat, Logstash), and Amazon EMR (Apache Log4j, Hive, and Spark).

Kafka Connect

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems. It makes it simple to quickly define connectors that move large collections of data into and out of Kafka. AWS offers a fully-managed version of Kafka Connect: Amazon MSK Connect. You can use GitHub Copilot to write Kafka Connect Source and Sink Connectors with Kafka Connect and Amazon MSK Connect.

The final Kafka Connect Source Connector, below, was formatted using VS Code’s built-in JSON support. It incorporates the Debezium connector for MySQL, Avro file format, schema registry, and message transformation. Debezium is a popular open source distributed platform for performing change data capture (CDC) with Kafka Connect.
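
The following is a minimal sketch of what such a source connector definition might look like, generated from Python so the pieces are easy to see. It is not the post's original configuration. It assumes Debezium 2.x property names (`topic.prefix`, `schema.history.internal.*`), Confluent's Avro converter with a Confluent-compatible schema registry, and placeholder values for the host names, credentials, and the `inventory` database; all of these would need to be adapted to your environment.

```python
import json


def debezium_mysql_source(name, db_host, bootstrap_servers, schema_registry_url):
    """Build a Kafka Connect source connector definition for Debezium MySQL.

    Every value below is a placeholder for illustration. Property names
    assume Debezium 2.x; earlier versions use different names for some keys.
    """
    config = {
        "connector.class": "io.debezium.connector.mysql.MySqlConnector",
        "tasks.max": "1",
        "database.hostname": db_host,
        "database.port": "3306",
        "database.user": "debezium",        # placeholder user
        "database.password": "<password>",  # placeholder, never hard-code secrets
        "database.server.id": "184054",     # any ID unique within the MySQL cluster
        "database.include.list": "inventory",
        "topic.prefix": name,
        "schema.history.internal.kafka.bootstrap.servers": bootstrap_servers,
        "schema.history.internal.kafka.topic": f"{name}.schema-history",
        # Avro serialization backed by a schema registry.
        "key.converter": "io.confluent.connect.avro.AvroConverter",
        "key.converter.schema.registry.url": schema_registry_url,
        "value.converter": "io.confluent.connect.avro.AvroConverter",
        "value.converter.schema.registry.url": schema_registry_url,
        # Message transformation: flatten the change event to the new row state.
        "transforms": "unwrap",
        "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
    }
    # The name/config wrapper is the shape expected by the Kafka Connect REST API.
    return {"name": name, "config": config}


def to_json(connector: dict) -> str:
    """Serialize the connector definition to indented JSON."""
    return json.dumps(connector, indent=2)
```

With Amazon MSK Connect, the key-value pairs under `config` are what you would supply as the connector configuration; the outer `name`/`config` wrapper applies when posting to a self-managed Kafka Connect REST endpoint.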

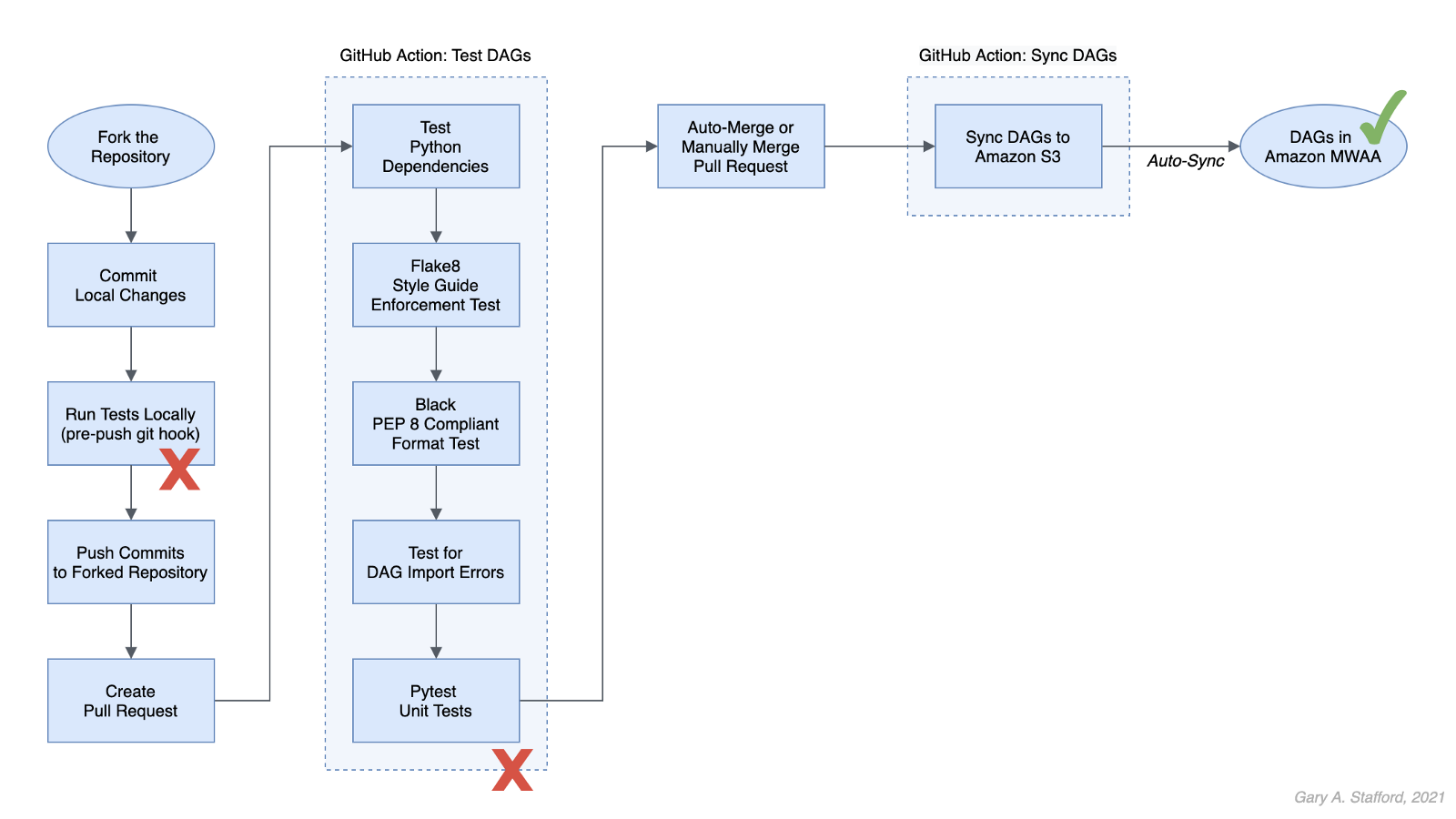

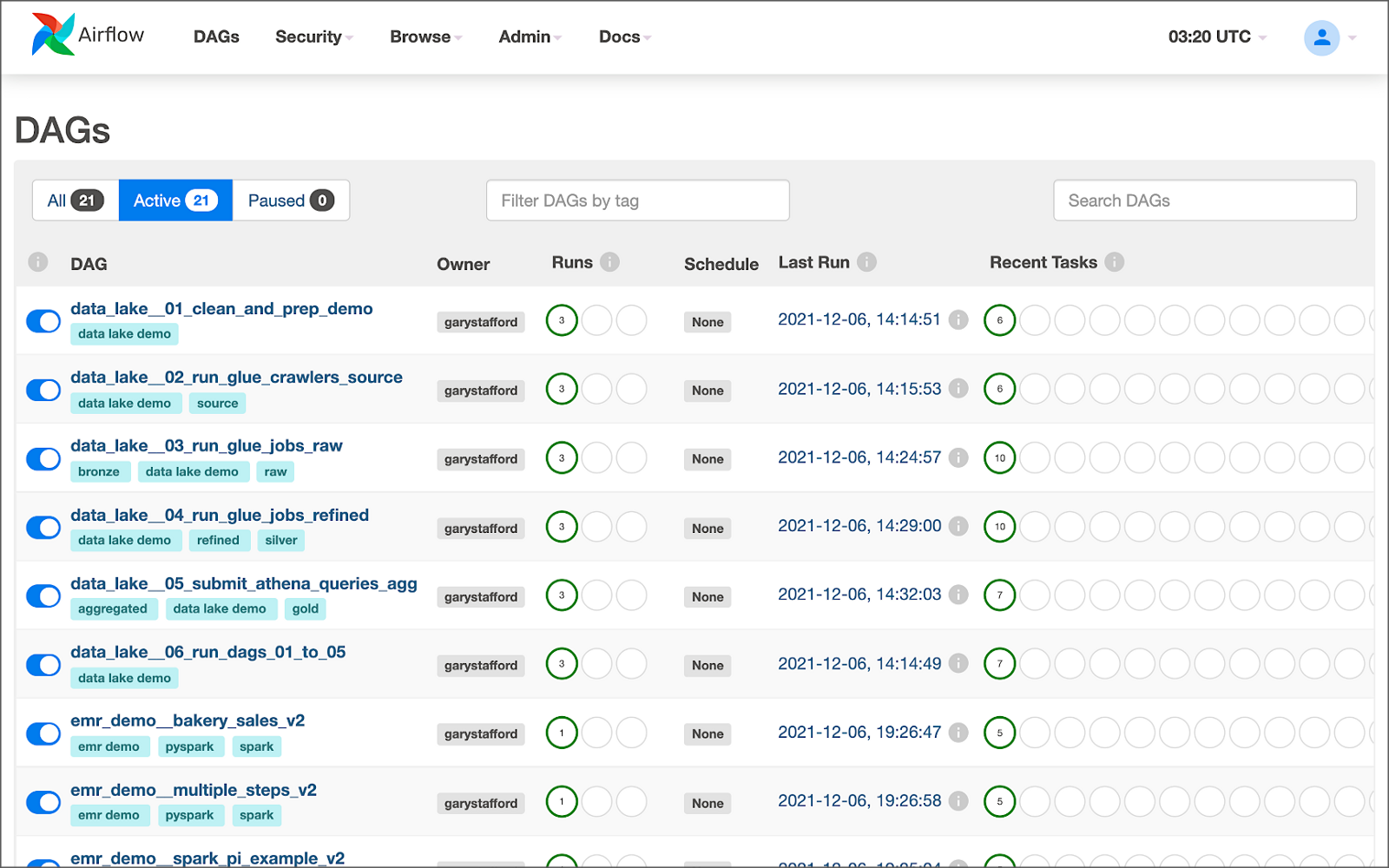

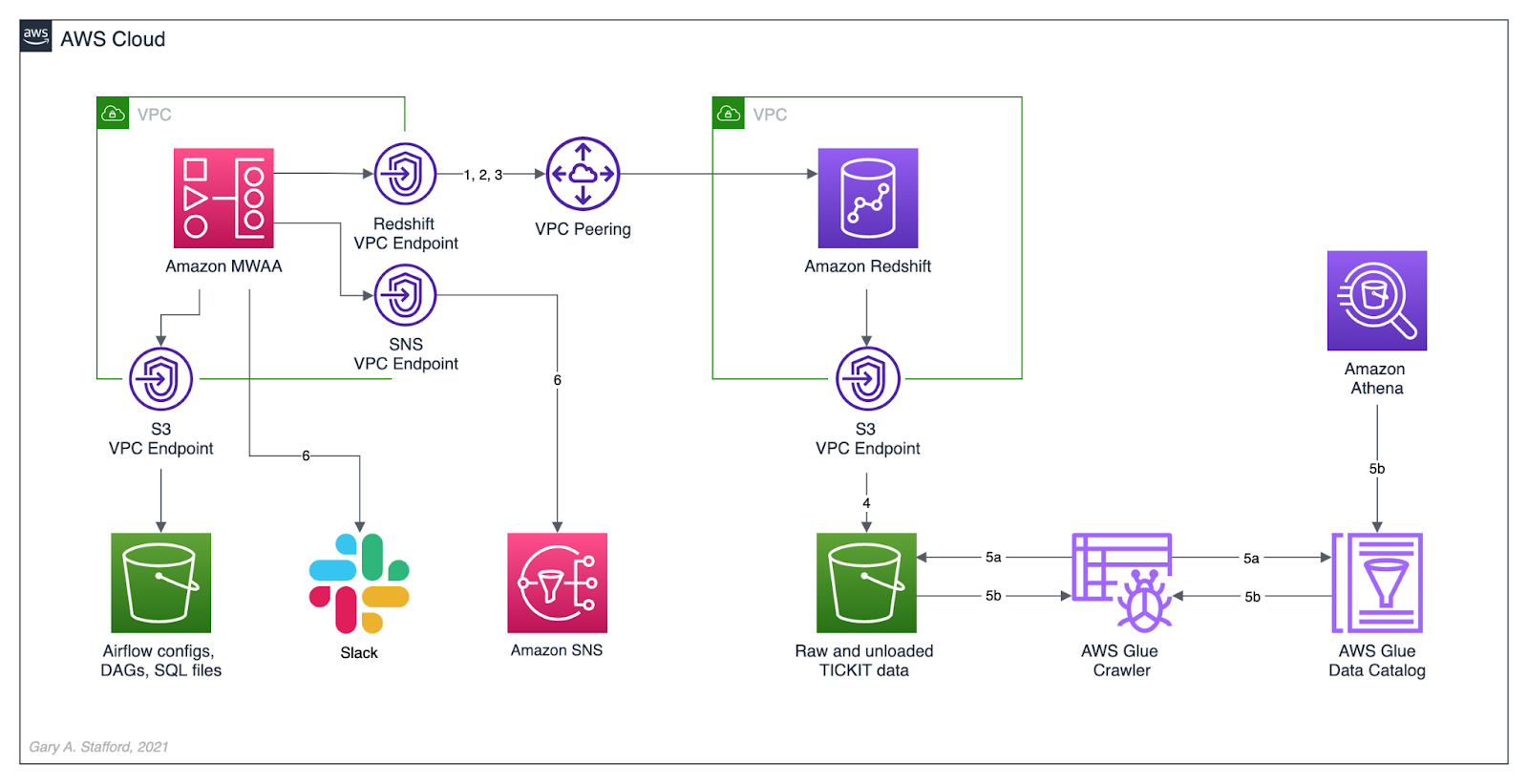

8. Apache Airflow DAGs

Apache Airflow is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. Airflow’s extensible Python framework enables you to build workflows connecting with virtually any technology. DAG (Directed Acyclic Graph) is the core concept of Airflow, collecting Tasks together, organized with dependencies and relationships to say how they should run.

Amazon Managed Workflows for Apache Airflow (Amazon MWAA) is a managed orchestration service for Apache Airflow. You can use GitHub Copilot to assist in writing DAGs for Apache Airflow, to be used with Amazon MWAA.

The final Python-based Apache Airflow DAG, below, was formatted using the Black Formatter extension. Unfortunately, based on my testing, code optimization with GitHub’s Code Brushes does not work with Airflow DAGs.

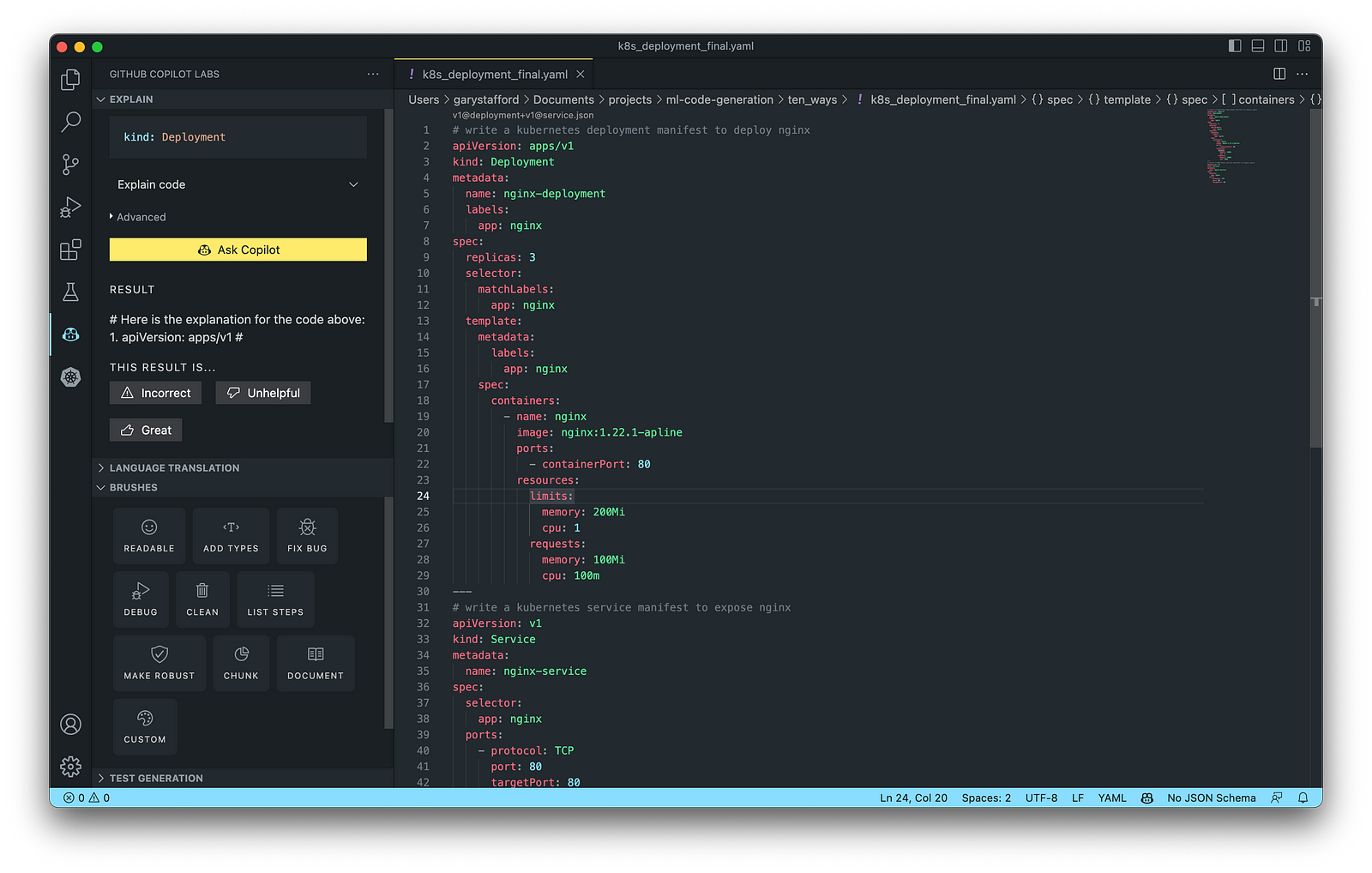

9. Containerization

According to Check Point Software, Containerization is a type of virtualization in which all the components of an application are bundled into a single container image and can be run in isolated user space on the same shared operating system. Containers are lightweight, portable, and highly conducive to automation. AWS describes containerization as a software deployment process that bundles an application’s code with all the files and libraries it needs to run on any infrastructure.

AWS has several container services, including Amazon Elastic Container Service (Amazon ECS), Amazon Elastic Kubernetes Service (Amazon EKS), Amazon Elastic Container Registry (Amazon ECR), and AWS Fargate. Several code-based resources can benefit from a Generative AI coding tool like GitHub Copilot, including Dockerfiles, Kubernetes resources, Helm Charts, Weaveworks Flux, and ArgoCD configuration.

Kubernetes

Kubernetes objects are represented in the Kubernetes API and expressed in YAML format. Below is a Kubernetes Deployment resource file, which creates a ReplicaSet to bring up multiple replicas of nginx Pods.

The final Kubernetes resource file below contains Deployment and Service resources. In addition to GitHub Copilot, you can use Microsoft’s Kubernetes extension for VS Code to use IntelliSense and flag schema errors in the file.
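
As a stand-in illustration (not the post's original file), here is a minimal sketch that generates a Deployment and a Service for nginx from Python. It emits JSON rather than YAML only to stay within the Python standard library; `kubectl apply -f` accepts either format. The resource names, the `app: nginx` label, and the image tag are placeholder assumptions.

```python
import json


def nginx_manifests(replicas: int = 3, image: str = "nginx:1.25") -> dict:
    """Return a Kubernetes List containing an nginx Deployment and Service.

    Names, labels, and the image tag are placeholders for illustration.
    """
    labels = {"app": "nginx"}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx-deployment", "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "nginx",
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "nginx-service"},
        "spec": {
            # Route traffic to Pods created by the Deployment above.
            "selector": labels,
            "ports": [{"protocol": "TCP", "port": 80, "targetPort": 80}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


def to_json(manifests: dict) -> str:
    """Serialize the manifests to indented JSON."""
    return json.dumps(manifests, indent=2)
```

Sharing one `labels` dictionary between the Deployment selector, the Pod template, and the Service selector avoids the common mistake of a Service that matches no Pods.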

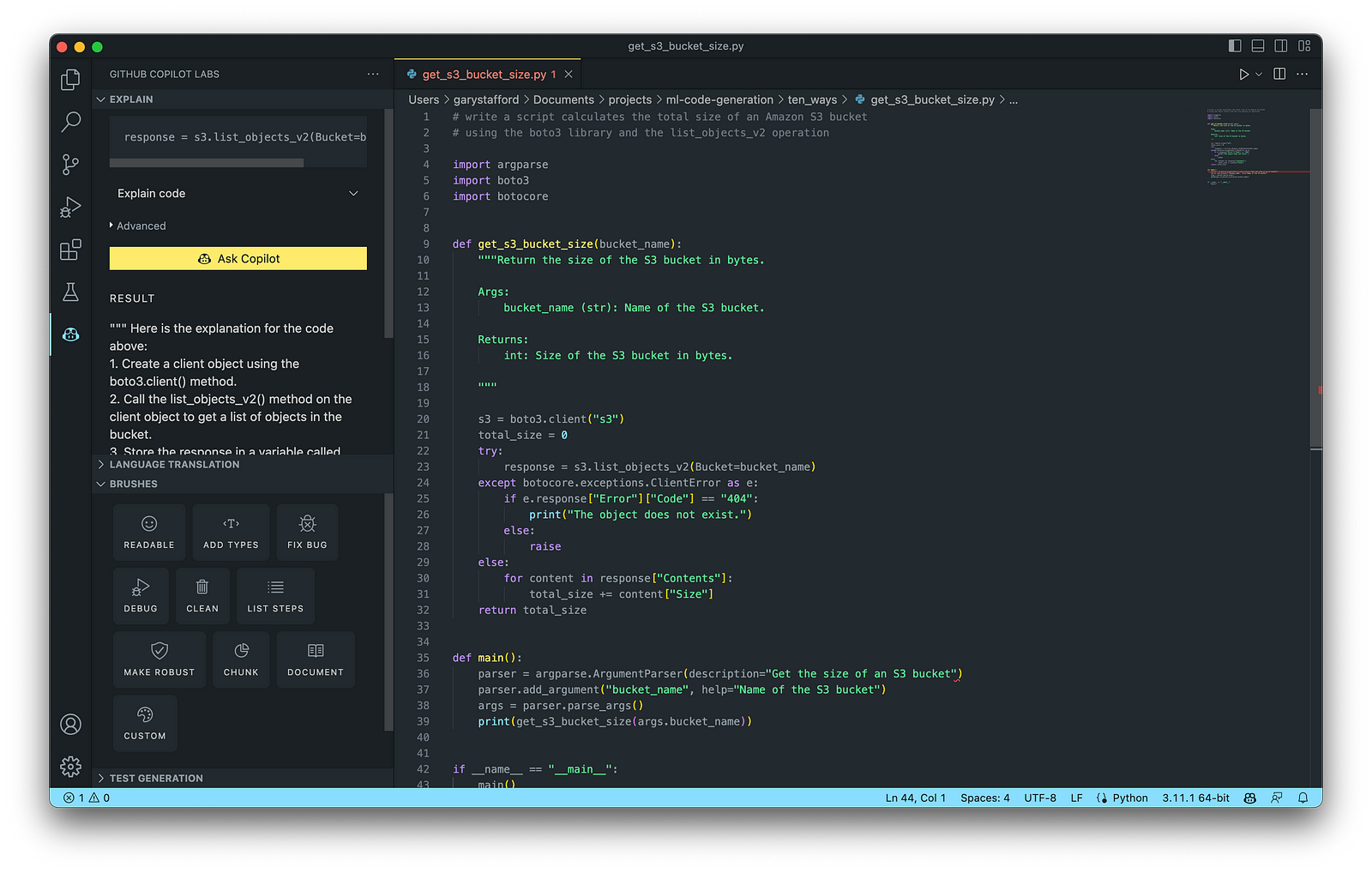

10. Utility Scripts

According to Bing AI — Search, utility scripts are small, simple snippets of code written as independent code files designed to perform a particular task. Utility scripts are commonly written in Bash, Shell, Python, Ruby, PowerShell, and PHP.

AWS utility scripts leverage the AWS Command Line Interface (AWS CLI) for Bash and Shell and AWS SDK for other programming languages. SDKs take the complexity out of coding by providing language-specific APIs for AWS services. For example, Boto3, AWS’s Python SDK, easily integrates your Python application, library, or script with AWS services, including Amazon S3, Amazon EC2, Amazon DynamoDB, and more.

An example of a Python script to calculate the total size of an Amazon S3 bucket, below, was inspired by 100daysofdevops/N-days-of-automation, a fantastic set of open source AWS-oriented automation scripts.
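
A minimal sketch of such a script follows; it is an illustration under stated assumptions rather than the post's original listing. The `bucket_size_bytes` and `human_readable` helpers are hypothetical names. The function takes an S3 client as a parameter, which is expected to be a Boto3 client (`boto3.client("s3")`), and relies on Boto3's `list_objects_v2` paginator so that buckets with more than 1,000 objects are counted fully.

```python
def bucket_size_bytes(s3_client, bucket_name: str) -> int:
    """Sum the sizes, in bytes, of all objects in the given S3 bucket.

    s3_client is expected to be a Boto3 S3 client; passing it in keeps this
    function easy to test with a stub.
    """
    total = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name):
        # Pages for empty buckets or prefixes have no "Contents" key.
        for obj in page.get("Contents", []):
            total += obj["Size"]
    return total


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units, for example '1.5 KiB'."""
    size = float(num_bytes)
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if size < 1024 or unit == "TiB":
            return f"{size:.1f} {unit}"
        size /= 1024


# Example usage (requires the boto3 package and AWS credentials):
#   import boto3
#   size = bucket_size_bytes(boto3.client("s3"), "my-example-bucket")
#   print(human_readable(size))
```

Note that this counts only current object versions; noncurrent versions in a versioned bucket would need a separate listing call.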

Conclusion

In this post, you learned ten ways to leverage Generative AI coding tools like GitHub Copilot for development on AWS. You saw how combining the latest generation of Generative AI coding tools, a mature and extensible IDE, and your coding experience will accelerate development, increase productivity, and reduce cost.

This blog represents my viewpoints and not those of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners.

Developing a Multi-Account AWS Environment Strategy

Posted by Gary A. Stafford in AWS, Cloud, DevOps, Enterprise Software Development, Technology Consulting on March 11, 2023

Explore twelve common patterns for developing an effective and efficient multi-account AWS environment strategy

Introduction

Every company is different: its organizational structure, the length of time it has existed, how fast it has grown, the industries it serves, its product and service diversity, public or private sector, and its geographic footprint. This uniqueness is reflected in how it organizes and manages its Cloud resources. Just as no two organizations are exactly alike, the structure of their AWS environments is rarely identical.

Some organizations successfully operate from a single AWS account, while others manage workloads spread across dozens or even hundreds of accounts. The volume and purpose of an organization’s AWS accounts are a result of multiple factors, including length of time spent on AWS, Cloud maturity, organizational structure and complexity, sectors, industries, and geographies served, product and service mix, compliance and regulatory requirements, and merger and acquisition activity.

“By design, all resources provisioned within an AWS account are logically isolated from resources provisioned in other AWS accounts, even within your own AWS Organizations.” (AWS)

Working with industry peers, the AWS community, and a wide variety of customers, one will observe common patterns for how organizations separate environments and workloads using AWS accounts. These patterns form an AWS multi-account strategy for operating securely and reliably in the Cloud at scale. The more planning an organization does in advance to develop a sound multi-account strategy, the lighter the burden of managing change as the organization grows over time.

The following post will explore twelve common patterns for effectively and efficiently organizing multiple AWS accounts. These patterns do not represent an either-or choice; they are designed to be purposefully combined to form a multi-account AWS environment strategy for your organization.

Patterns

- Pattern 1: Single “Uber” Account

- Pattern 2: Non-Prod/Prod Environments

- Pattern 3: Upper/Lower Environments

- Pattern 4: SDLC Environments

- Pattern 5: Major Workload Separation

- Pattern 6: Backup

- Pattern 7: Sandboxes

- Pattern 8: Centralized Management and Governance

- Pattern 9: Internal/External Environments

- Pattern 10: PCI DSS Workloads

- Pattern 11: Vendors and Contractors

- Pattern 12: Mergers and Acquisitions

Patterns 1–8 are progressively more mature multi-account strategies, while Patterns 9–12 represent special use cases for supplemental accounts.

Multi-Account Advantages

According to AWS’s whitepaper, Organizing Your AWS Environment Using Multiple Accounts, the benefits of using multiple AWS accounts include the following:

- Group workloads based on business purpose and ownership

- Apply distinct security controls by environment

- Constrain access to sensitive data (including compliance and regulation)

- Promote innovation and agility

- Limit the scope of impact from adverse events

- Support multiple IT operating models

- Manage costs (budgeting and cost attribution)

- Distribute AWS Service Quotas (fka limits) and API request rate limits

As we explore the patterns for organizing your AWS accounts, we will see how and to what degree each of these benefits is demonstrated by that particular pattern.

AWS Control Tower

Discussions about AWS multi-account environment strategies would not be complete without mentioning AWS Control Tower. According to the documentation, “AWS Control Tower offers a straightforward way to set up and govern an AWS multi-account environment, following prescriptive best practices.” AWS Control Tower includes Landing zone, described as “a well-architected, multi-account environment based on security and compliance best practices.”

AWS Control Tower is prescriptive in the Shared accounts it automatically creates within its AWS Organizations’ organizational units (OUs). Shared accounts created by AWS Control Tower include the Management, Log Archive, and Audit accounts. The previous standalone AWS service, AWS Landing Zone, maintained slightly different required accounts, including Shared Services, Log Archive, Security, and optional Network accounts. Although prescriptive, AWS Control Tower is also flexible and relatively unopinionated regarding the structure of Member accounts. Member accounts can be enrolled or unenrolled in AWS Control Tower.

You can decide whether or not to implement AWS Organizations or AWS Control Tower to set up and govern your AWS multi-account environment. Regardless, you will still need to determine how to reflect your organization’s unique structure and requirements in the purpose and quantity of the accounts you create within your AWS environment.

Common Multi-Account Patterns

While working with peers, community members, and a wide variety of customers, I regularly encounter the following twelve patterns for organizing AWS accounts. As noted earlier, these patterns do not represent an either-or choice; they are designed to be purposefully combined to form a multi-account AWS environment strategy for your organization.

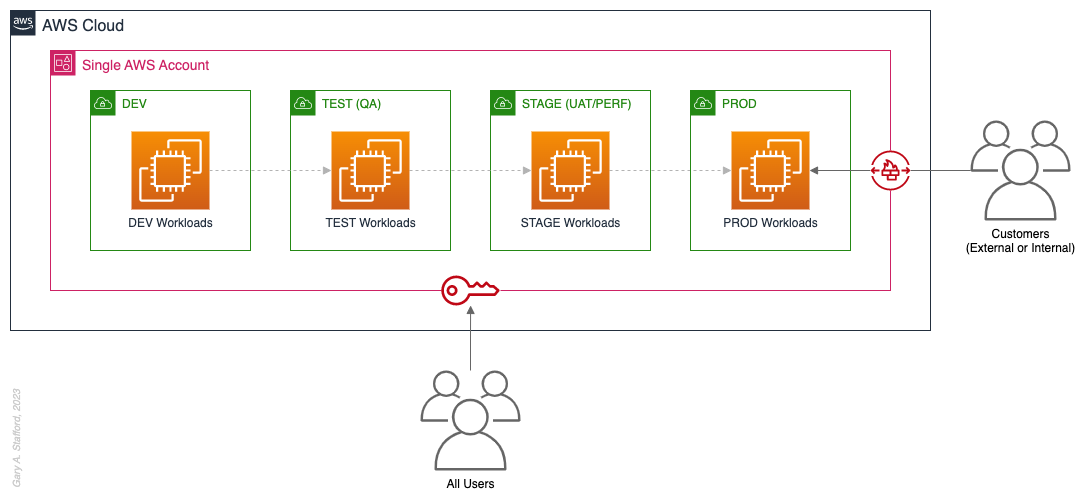

Pattern 1: Single “Uber” Account

Organizations that effectively implement Pattern 1: Single “Uber” Account organize and separate environments and workloads at the sub-account level. They often use Amazon Virtual Private Cloud (Amazon VPC), an AWS account-level construct, to organize and separate environments and workloads. They may also use Subnets (VPC-level construct) or AWS Regions and Availability Zones to further organize and separate environments and workloads.

Pros

- Few, if any, significant advantages, especially for customer-facing workloads

Cons

- Decreased ability to limit the scope of impact from adverse events (widest blast radius)

- If the account is compromised, then all the organization’s workloads and data, possibly the entire organization, are potentially compromised (e.g., Ransomware attacks such as Encryptors, Lockers, and Doxware)

- Increased risk that networking or security misconfiguration could lead to unintended access to sensitive workloads and data

- Increased risk that networking, security, or resource management misconfiguration could lead to broad or unintended impairment of all workloads

- Decreased ability to perform team-, environment-, and workload-level budgeting and cost attribution

- Increased risk of resource depletion (soft and hard service quotas)

- Reduced ability to conduct audits and demonstrate compliance

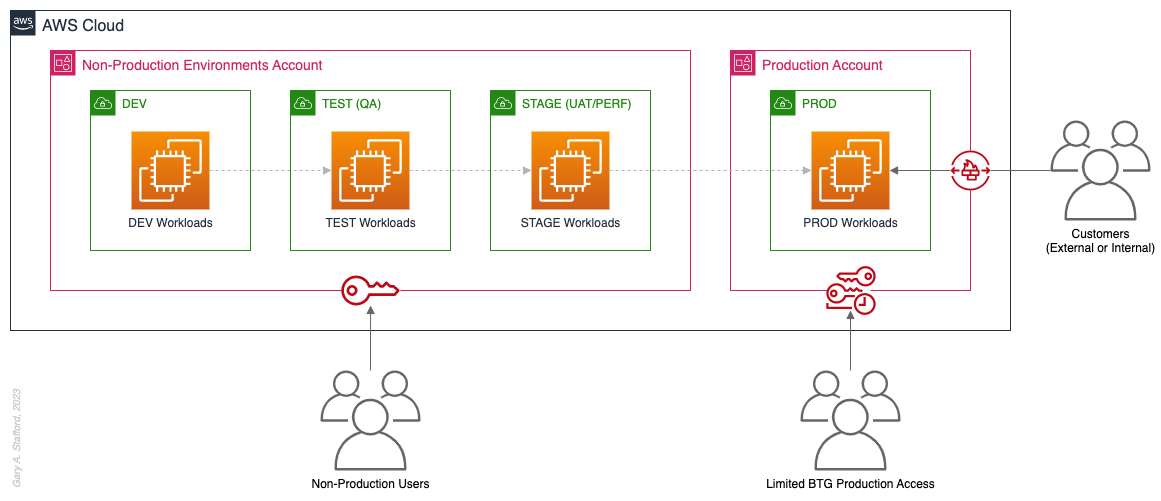

Pattern 2: Non-Prod/Prod Environments

Organizations that effectively implement Pattern 2: Non-Prod/Prod Environments organize and separate non-Production workloads from Production (PROD) workloads using separate AWS accounts. Most often, they use Amazon VPCs within the non-Production account to separate workloads or Software Development Lifecycle (SDLC) environments, most often Development (DEV), Testing (TEST) or Quality Assurance (QA), and Staging (STAGE). Alternatively, these environments might also be designated as “N-1” (previous release), “N” (current release), “N+1” (next release), “N+2” (in development), and so forth, based on the currency of that version of that workload.

In some organizations, the Staging environment is used for User Acceptance Testing (UAT), performance (PERF) testing, and load testing before releasing workloads to Production. In other organizations, STAGE, UAT, and PERF are each treated as separate environments at the account or VPC level.

Isolating Production workloads into their own account(s) and strictly limiting access to those workloads represents a significant first step in improving the overall maturity of your multi-account AWS environment strategy.

Pros

- Limits the scope of impact on Production as a result of adverse non-Production events (narrower blast radius)

- Logical separation and security of Production workloads and data

- Tightly control and limit access to Production, including the use of Break-the-Glass procedures (aka Break-glass or BTG), a term that draws its name from “breaking the glass to pull a fire alarm”

- Eliminate the risk that non-Production networking or security misconfiguration could lead to unintended access to sensitive Production workloads and data

- Eliminate the chance that non-Production networking, security, or resource management misconfiguration could lead to broad or unintended impairment of Production workloads

- Conduct audits and demonstrate compliance with Production workloads

Cons

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised

- Decreased ability to perform team-, environment-, and workload-level budgeting and cost attribution in the shared non-Production environment

- Increased risk of resource depletion (soft and hard service quotas) in the non-Production environment account

Pattern 3: Upper/Lower Environments

The next pattern, Pattern 3: Upper/Lower Environments, is a finer-grain variation of Pattern 2. With Pattern 3, we split all “Lower” environments into a single account and each “Upper” environment into its own account. In the software development process, initial environments, such as CI/CD for automated testing of code and infrastructure, Development, Test, UAT, and Performance, are called “Lower” environments. Conversely, later environments, such as Staging, Production, and even Disaster Recovery (DR), are called “Upper” environments. Upper environments typically require isolation for stability during testing or security for Production workloads and sensitive data.

Often, coarser-grain patterns like Patterns 1–3 are carryovers from more traditional on-premises data centers, where compute, storage, network, and security resources were more constrained. Although these patterns can be successfully reproduced in the Cloud, they may not be optimal compared to more “cloud native” patterns, which provide improved separation of concerns.

Pros

- Limits the scope of impact on individual Upper environments as a result of adverse Lower environment events (narrower blast radius)

- Increased stability of Staging environment for critical UAT, performance, and load testing

- Logical separation and security of Production workloads and data

- Tightly control and limit access to Production, including the use of BTG

- Eliminate the risk that non-Production networking or security misconfiguration could lead to unintended access to sensitive Production workloads and data

- Eliminate the chance that non-Production networking, security, or resource management misconfiguration could lead to broad or unintended impairment of Production workloads

- Conduct audits and demonstrate compliance with Production workloads

Cons

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised (e.g., Ransomware attack)

- Decreased ability to perform team-, environment-, and workload-level budgeting and cost attribution in the shared Lower environment

- Increased risk of resource depletion (soft and hard service quotas) in the Lower environment account

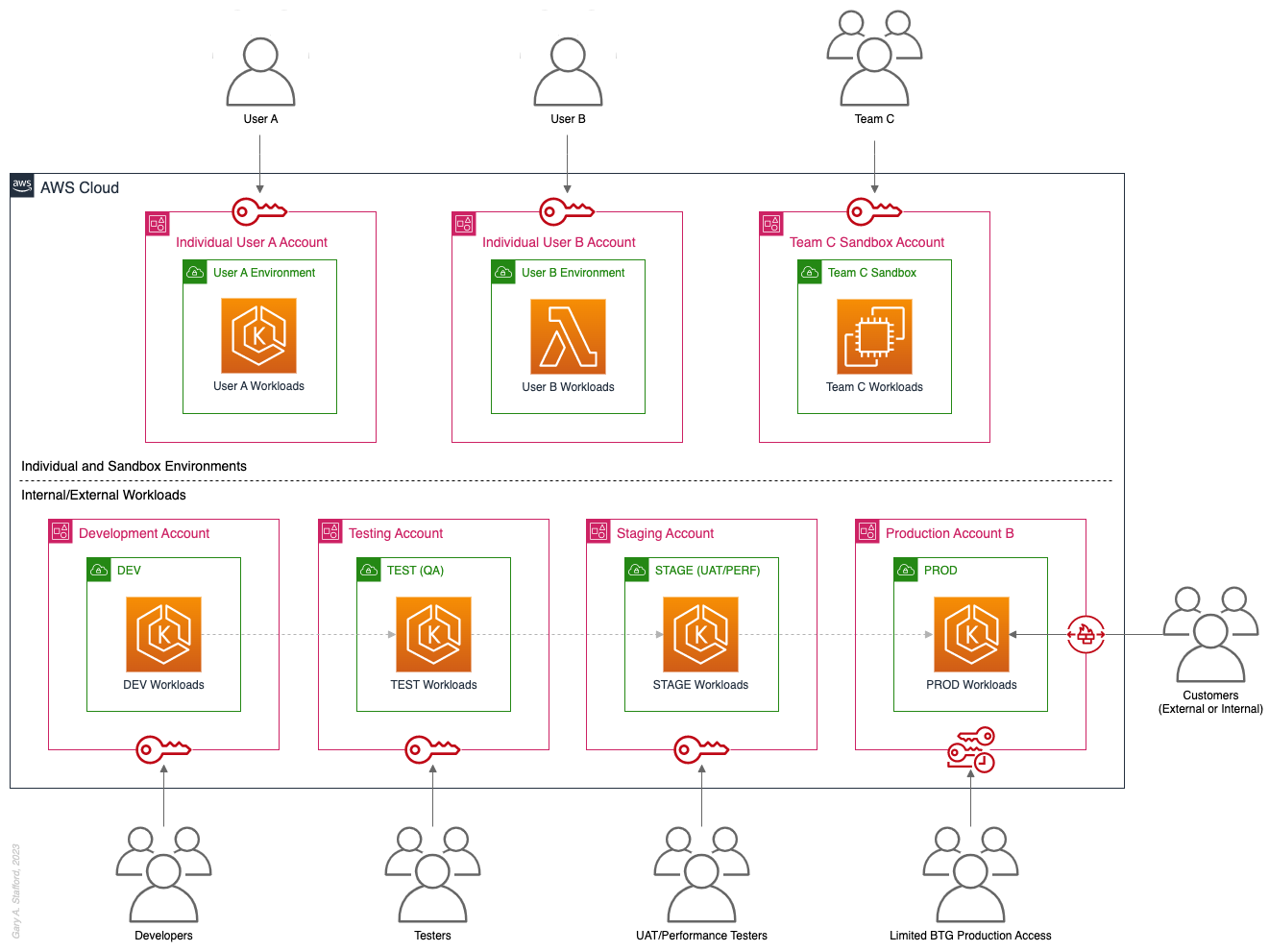

Pattern 4: SDLC Environments

The next pattern, Pattern 4: SDLC Environments, is a finer-grain variation of Pattern 3. With Pattern 4, we gain complete separation of each SDLC environment into its own AWS account. Using AWS services like AWS IAM Identity Center (fka AWS SSO), the Security team can enforce least-privilege permissions at an AWS Account level to individual groups of users, such as Developers, Testers, UAT, and Performance testers.

Based on my experience, Pattern 4 represents the minimal level of workload separation an organization should consider when developing its multi-account AWS environment strategy. Although Pattern 4 has a number of disadvantages, when combined with subsequent patterns and AWS best practices, this pattern begins to provide a scalable foundation for an organization’s growing workload portfolio.

Patterns such as Pattern 4 apply not only to traditional software applications and services but also to data analytics, AI/ML, IoT, media services, and similar workloads where separation of environments is required.

Pros

- Limits the scope of impact on one SDLC environment as a result of adverse events in another environment (narrower blast radius)

- Logical separation and security of Production workloads and data

- Increased stability of each SDLC environment

- Tightly control and limit access to Production, including the use of BTG

- Reduced risk that non-Production networking or security misconfiguration could lead to unintended access to sensitive Production workloads and data

- Reduced risk that non-Production networking, security, or resource management misconfiguration could lead to broad or unintended impairment of Production workloads

- Conduct audits and demonstrate compliance with Production workloads

- Increased ability to perform budgeting and cost attribution for each SDLC environment

- Reduced risk of resource depletion (soft and hard service quotas) and IP conflicts and exhaustion within any single SDLC environment

Cons

- All workloads for each SDLC environment run within a single account, including Production, increasing the potential scope of impact from adverse events within that environment’s account

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised

- Decreased ability to perform workload-level budgeting and cost attribution

Pattern 5: Major Workload Separation

The next pattern, Pattern 5: Major Workload Separation, is a finer-grain variation of Pattern 4. With Pattern 5, we separate each significant workload into its own separate SDLC environment account. The security team can enforce fine-grain least-privilege permissions at an AWS Account level to individual groups of users, such as Developers, Testers, UAT, and Performance testers, by their designated workload(s).

Pattern 5 has several advantages over the previous patterns. In addition to the increased workload-level security and reliability benefits, Pattern 5 can be particularly useful for organizations that operate significantly different technology stacks and specialized workloads, particularly at scale. Different technology stacks and specialized workloads often each have their own unique development, testing, deployment, and support processes. Isolating these types of workloads will help facilitate the support of multiple IT operating models.
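
To illustrate how the account count grows between Pattern 4 and Pattern 5, here is a minimal sketch that enumerates the accounts each pattern implies. The workload names, environment names, and the `workload-environment` naming convention are hypothetical and chosen only for this example.

```python
from itertools import product


def pattern_4_accounts(environments):
    """Pattern 4: one account per SDLC environment, shared by all workloads."""
    return list(environments)


def pattern_5_accounts(workloads, environments):
    """Pattern 5: one account per major workload per SDLC environment."""
    return [
        f"{workload}-{environment}"
        for workload, environment in product(workloads, environments)
    ]


# Hypothetical inputs for illustration only.
ENVIRONMENTS = ["dev", "test", "stage", "prod"]
WORKLOADS = ["ecommerce", "analytics", "iot"]

p4 = pattern_4_accounts(ENVIRONMENTS)             # 4 accounts
p5 = pattern_5_accounts(WORKLOADS, ENVIRONMENTS)  # 3 x 4 = 12 accounts
```

The multiplication of workloads by environments is why Pattern 5 benefits from the account automation and centralized governance discussed in later patterns.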

Pros

- Limits the scope of impact on an individual workload as a result of adverse events from another workload or SDLC environment (narrowest blast radius)

- Increased ability to perform team-, environment-, and workload-level budgeting and cost attribution

Cons

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised (e.g., Ransomware attack)

Pattern 6: Backup

In the earlier patterns, we mentioned that if the Production account were compromised, all the organization’s customer-facing workloads and data could be compromised. According to TechTarget, 2022 was a breakout year for Ransomware attacks. According to the US government’s CISA.gov website, “Ransomware is a form of malware designed to encrypt files on a device, rendering any files and the systems that rely on them unusable. Malicious actors then demand ransom in exchange for decryption.”

According to AWS best practices, one of the recommended preparatory actions to protect and recover from Ransomware attacks is backing up data to an alternate account using tools such as AWS Backup and an AWS Backup vault. Solutions such as AWS Backup protect and restore data regardless of how it was made inaccessible.

In Pattern 6: Backup, we create one or more Backup accounts to protect against unintended data loss or account compromise. In the example below, we have two Backup accounts, one for Production data and one for all non-Production data.

Pros

- If the Production account is compromised (e.g., Ransomware attacks such as Encryptors, Lockers, and Doxware), there are secure backups of data stored in a separate account, which can be used to restore or recreate the Production environment

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 7: Sandboxes

The following pattern, Pattern 7: Sandboxes, supplements the previous patterns and is designed to let individual users and teams learn, build, experiment, and innovate on AWS without impacting the larger organization’s AWS environment. To quote the AWS blog, Best practices for creating and managing sandbox accounts in AWS, “Many organizations need another type of environment, one where users can build and innovate with AWS services that might not be permitted in production or development/test environments because controls have not yet been implemented.” Further, according to TechTarget, “a Sandbox is an isolated testing environment that enables users to run programs or open files without affecting the application, system, or platform on which they run.”

Due to the potential volume of individual user and team accounts, sometimes referred to as Sandbox accounts, mature infrastructure automation practices, cost controls, and self-service provisioning and de-provisioning of Sandbox accounts are critical capabilities for the organization.

Pros

- Allow individual users and teams to learn, build, experiment, and innovate on AWS without impacting the rest of the organization’s AWS environment

Cons

- Without mature automation practices, cost controls, and self-service capabilities, managing multiple individual and team Sandbox accounts can become unwieldy and costly

Pattern 8: Centralized Management and Governance

We discussed AWS Control Tower at the beginning of this post. AWS Control Tower is prescriptive in creating Shared accounts within its AWS Organizations’ organizational units (OUs), including the Management, Log Archive, and Audit accounts. AWS encourages using AWS Control Tower to orchestrate multiple AWS accounts and services on your behalf while maintaining your organization’s security and compliance needs.

As exemplified in Pattern 8: Centralized Management and Governance, many organizations will implement centralized management whether or not they decide to implement AWS Control Tower. In addition to the Management account (payer account, fka master account), organizations often create centralized logging accounts, and centralized tooling (aka Shared services) accounts for functions such as CI/CD, IaC provisioning, and deployment. Another common centralized management account is a Security account. Organizations use this account to centralize the monitoring, analysis, notification, and automated mitigation of potential security issues within their AWS environment. The Security accounts will include services such as Amazon Detective, Amazon Inspector, Amazon GuardDuty, and AWS Security Hub.

Pros

- Increased ability to manage and maintain multiple AWS accounts with fewer resources

- Reduced duplication of management resources across accounts

- Increased ability to use automation and improve the consistency of processes and procedures across multiple accounts

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 9: Internal/External Environments

The next pattern, Pattern 9: Internal/External Environments, focuses on organizations with internal operational systems (aka Enterprise systems) in the Cloud and customer-facing workloads. Pattern 9 separates internal operational systems, platforms, and workloads from external customer-facing workloads. For example, an organization’s divisions and departments, such as Sales and Marketing, Finance, Human Resources, and Manufacturing, are assigned their own AWS account(s). Pattern 9 allows the Security team to ensure that internal departmental or divisional users are isolated from users who are responsible for developing, testing, deploying, and managing customer-facing workloads.

Note that the diagram for Pattern 9 shows remote users who access AWS End User Computing (EUC) services or Virtual Desktop Infrastructure (VDI), such as Amazon WorkSpaces and Amazon AppStream 2.0. In this example, remote workers have secure access to EUC services provisioned in a separate AWS account, and indirectly, internal systems, platforms, and workloads.

Pros

- Separation of internal operational systems, platforms, and workloads from external customer-facing workloads

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 10: PCI DSS Workloads

The next pattern, Pattern 10: PCI DSS Workloads, is a variation of previous patterns, which assumes the existence of Payment Card Industry Data Security Standard (PCI DSS) workloads and data. According to AWS, “PCI DSS applies to entities that store, process, or transmit cardholder data (CHD) or sensitive authentication data (SAD), including merchants, processors, acquirers, issuers, and service providers. The PCI DSS is mandated by the card brands and administered by the Payment Card Industry Security Standards Council.”

According to AWS’s whitepaper, Architecting for PCI DSS Scoping and Segmentation on AWS, “By design, all resources provisioned within an AWS account are logically isolated from resources provisioned in other AWS accounts, even within your own AWS Organizations. Using an isolated account for PCI workloads is a core best practice when designing your PCI application to run on AWS.” With Pattern 10, we separate non-PCI DSS and PCI DSS Production workloads and data. The assumption is that only Production contains PCI DSS data. Data in lower environments is synthetically generated or sufficiently encrypted, masked, obfuscated, or tokenized.

Note that the diagram for Pattern 10 shows Administrators. Administrators with different spans of responsibility and access are present in every pattern, whether specifically shown or not.

Pros

- Increased ability to meet compliance requirements by separating non-PCI DSS and PCI DSS Production workloads

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 11: Vendors and Contractors

The next pattern, Pattern 11: Vendors and Contractors, is focused on organizations that employ contractors or use third-party vendors who provide products and services that interact with their AWS-based environment. Like Pattern 10, Pattern 11 allows the Security team to ensure that contractor and vendor-based systems’ access to internal systems and customer-facing workloads is tightly controlled and auditable.

Vendor-based products and services are often deployed within an organization’s AWS environment without external means of ingress or egress. Alternatively, a vendor’s product or service may have a secure means of ingress from or egress to external endpoints. Such is the case with some SaaS products, which ship an organization’s data to an external aggregator for analytics or a security vendor’s product that pre-filters incoming data, external to the organization’s AWS environment. Using separate AWS accounts can improve an organization’s security posture and mitigate the risk of adverse events on the organization’s overall AWS environment.

Pros

- Ensure access to internal systems and customer-facing workloads by contractors and vendor-based systems is tightly controlled and auditable

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 12: Mergers and Acquisitions

The next pattern, Pattern 12: Mergers and Acquisitions, is focused on managing the integration of external AWS accounts as a result of a merger or acquisition. This is a common occurrence, but the exact details of how best to handle the integration of two or more organizations depend on several factors. Factors include the required level of integration, for example, maintaining separate AWS Organizations, maintaining different AWS accounts, or merging resources from multiple accounts. Other factors that might impact account structure include changes in ownership or payer of acquired accounts, existing acquired cost-savings agreements (e.g., EDPs, PPAs, RIs, and Savings Plans), and AWS Marketplace vendor agreements. Even the existing authentication and authorization methods of the acquiree versus the acquirer (e.g., AWS IAM Identity Center, Microsoft Active Directory (AD), Azure AD, and external identity providers (IdP) like Okta or Auth0) can influence the account structure.

The diagram for Pattern 12 attempts to show a few different M&A account scenarios, including maintaining separate AWS accounts for the acquirer and acquiree (e.g., acquiree’s Manufacturing Division account). If desired, the accounts can be kept independent but managed within the acquirer’s AWS Organizations’ organization. The diagram also exhibits merging resources from the acquiree’s accounts into the acquirer’s accounts (e.g., Sales and Marketing accounts). Resources will be migrated or decommissioned, and the account will be closed.

Pros

- Maintain separation between an acquirer and an acquiree’s AWS accounts within a single organization’s AWS environment

- Potentially consolidate and maximize cost-saving advantages of volume-related financial agreements and vendor licensing

Cons

- Migrating workloads between different organizations, depending on their complexity, requires careful planning and testing

- Consolidating multiple authentication and authorization methods requires careful planning and testing to avoid improper privileges

- Consolidating and optimizing separate licensing and cost-saving agreements between multiple organizations requires careful planning and an in-depth understanding of those agreements

Multi-Account AWS Environment Example

You can form an efficient and effective multi-account strategy for your organization by purposefully combining multiple patterns. Below is an example of combining the features of several patterns: Major Workload Separation, Backup, Sandboxes, Centralized Management and Governance, Internal/External Environments, and Vendors and Contractors.

According to AWS, “You can use AWS Organizations’ organizational units (OUs) to group accounts together to administer as a single unit. This greatly simplifies the management of your accounts.” If you decide to use AWS Organizations, each set of accounts associated with a pattern could correspond to an OU: Major Workload A, Major Workload B, Sandboxes, Backups, Centralized Management, Internal Environments, Vendors, and Contractors.
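To make the OU grouping idea more concrete, here is a minimal plain-Java sketch that models OUs as named groups of accounts. The OU names loosely follow the example above, but every account name is a hypothetical placeholder, and the code does not call AWS Organizations; it only illustrates the grouping.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: account names are illustrative placeholders only.
final class OuLayoutSketch {

    // Maps each organizational unit (OU) to the accounts grouped under it.
    static Map<String, List<String>> exampleLayout() {
        Map<String, List<String>> ous = new LinkedHashMap<>();
        ous.put("Major Workload A", List.of("workload-a-dev", "workload-a-test", "workload-a-prod"));
        ous.put("Sandboxes", List.of("sandbox-team-1", "sandbox-user-1"));
        ous.put("Backups", List.of("backup-prod", "backup-non-prod"));
        ous.put("Centralized Management", List.of("logging", "shared-services", "security"));
        return ous;
    }

    // Returns the OU that contains the given account, or null if no OU does.
    static String findOu(Map<String, List<String>> ous, String account) {
        for (Map.Entry<String, List<String>> entry : ous.entrySet()) {
            if (entry.getValue().contains(account)) {
                return entry.getKey();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        Map<String, List<String>> ous = exampleLayout();
        ous.forEach((ou, accounts) -> System.out.println(ou + " -> " + accounts));
        System.out.println("security is in: " + findOu(ous, "security"));
    }
}
```

Because each account belongs to exactly one group in this sketch, a policy or guardrail attached to a group applies to all of its accounts, which is the administrative benefit the quote describes.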

Conclusion

In this post, we explored twelve common patterns for effectively and efficiently organizing your AWS accounts. Instead of an either-or choice, these patterns are designed to be purposefully combined to form a multi-account strategy for your organization. Having a sound multi-account strategy will improve your security posture, maintain compliance, decrease the impact of adverse events on your AWS environment, and improve your organization’s ability to safely and confidently innovate and experiment on AWS.

Recommended References

- Establishing Your Cloud Foundation on AWS (AWS Whitepaper)

- Organizing Your AWS Environment Using Multiple Accounts (AWS Whitepaper)

- Building a Cloud Operating Model (AWS Whitepaper)

- AWS Security Reference Architecture (AWS Prescriptive Guidance)

- AWS Security Maturity Model (AWS Whitepaper)

- AWS re:Invent 2022 — Best practices for organizing and operating on AWS (YouTube Video)

- AWS Break Glass Role (GitHub)

🔔 To keep up with future content, follow Gary Stafford on LinkedIn.

This blog represents my viewpoints and not those of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners.

Brief Introduction to Observability on AWS

Posted by Gary A. Stafford in AWS, Cloud, DevOps on March 9, 2023

Explore the wide variety of Application Performance Monitoring (APM) and Observability options on AWS

APM and Observability

Observability is “the extent to which the internal states of a system can be inferred from externally available data” (Gartner). The three pillars of observability data are metrics, logs, and traces. Application Performance Monitoring (APM), a term commonly associated with observability, is “software that enables the observation and analysis of application health, performance, and user experience” (Gartner).

Additional features often associated with APM and observability products and services include the following (in alphabetical order):

- Advanced Threat Protection (ATP)

- Endpoint Detection and Response (EDR)

- Incident Detection and Response (IDR)

- Infrastructure Performance Monitoring (IPM)

- Network Device Monitoring (NDM)

- Network Performance Monitoring (NPM)

- OpenTelemetry (OTel)

- Operational (or Operations) Intelligence Platform

- Predictive Monitoring (predictive analytics / predictive modeling)

- Real User Monitoring (RUM)

- Security Information and Event Management (SIEM)

- Security Orchestration, Automation, and Response (SOAR)

- Synthetic Monitoring (directed monitoring / synthetic testing)

- Threat Visibility and Risk Scoring

- Unified Security and Observability Platform

- User Behavior Analytics (UBA)

Not all features are offered by all vendors. Most vendors tend to specialize in one or more areas. Determining which features are essential to your organization before choosing a solution is vital.

AI/ML

Given the growing volume and real-time nature of observability telemetry, many vendors have started incorporating AI and ML into their products and services to improve correlation, anomaly detection, and mitigation capabilities. Understand how these features can reduce operational burden, enhance insights, and simplify complexity.

Decision Factors

APM and observability tooling choices often come down to a “Build vs. Buy” decision for organizations. In the Cloud, this usually means integrating several individual purpose-built products and services, self-managed open-source projects, or investing in an end-to-end APM or unified observability platform. Other decision factors include the need for solutions to support:

- Hybrid cloud environments (on-premises/Cloud)

- Multi-cloud environments (Public Cloud, SaaS, Supercloud)

- Specialized workloads (e.g., Mainframes, HPC, VMware, SAP, SAS)

- Compliant workloads (e.g., PCI DSS, PII, GDPR, FedRAMP)

- Edge Computing and IoT/IIoT

- AI, ML, and Data Analytics monitoring (AIOps, MLOps, DataOps)

- SaaS observability (SaaS providers who offer monitoring to their end-users as part of their service offering)

- Custom log formats and protocols

Finally, the 5 V’s of big data (Velocity, Volume, Value, Variety, and Veracity) also influence the choice of APM and observability tooling. The real-time nature of the observability data, the sheer volume of the data, the source and type of data, and the sensitivity of the data will all guide tooling choices based on features and cost.

Organizations can choose fully-managed native AWS services, AWS Partner products and services, often SaaS, self-managed open-source observability tooling, or a combination of options. Many AWS and Partner products and services are commercial versions of popular open-source software (COSS).

AWS Options

- Amazon CloudWatch: Observe and monitor resources and applications you run on AWS in real time. CloudWatch features include Amazon CloudWatch Container, Lambda, Contributor, and Application Insights.

- Amazon Managed Service for Prometheus (AMP): Prometheus-compatible service that monitors and provides alerts on containerized applications and infrastructure at scale. Prometheus is “an open-source systems monitoring and alerting toolkit originally built at SoundCloud.”

- Amazon Managed Grafana (AMG): Commonly paired with AMP, AMG is a fully managed service for Grafana, an open-source analytics platform that “enables you to query, visualize, alert on, and explore your metrics, logs, and traces wherever they are stored.”

- Amazon OpenSearch Service: Based on open-source OpenSearch, this service provides interactive log analytics and real-time application monitoring and includes OpenSearch Dashboards (comparable to Kibana). Recently announced new security analytics features provide threat monitoring, detection, and alerting capabilities.

- AWS X-Ray: Trace user requests through your application, viewed using the X-Ray service map console or integrated with CloudWatch using the CloudWatch console’s X-Ray Traces Service map.

Data Collection, Processing, and Forwarding

- AWS Distro for OpenTelemetry (ADOT): Open-source APIs, libraries, and agents to collect distributed traces and metrics for application monitoring. ADOT is an open-source distribution of OpenTelemetry, a “high-quality, ubiquitous, and portable telemetry [solution] to enable effective observability.”

- AWS for Fluent Bit: Fluent Bit image with plugins for both CloudWatch Logs and Kinesis Data Firehose. Fluent Bit is an open-source, “super fast, lightweight, and highly scalable logging and metrics processor and forwarder.”

Security-focused Monitoring

- AWS CloudTrail: Helps enable operational and risk auditing, governance, and compliance in your AWS environment. CloudTrail records events, including actions taken in the AWS Management Console, AWS Command Line Interface (CLI), AWS SDKs, and APIs.

Partner Options

According to the 2022 Gartner® Magic Quadrant™ — APM & Observability Report, leading vendors commonly used by AWS customers include the following (in alphabetical order):

- AppDynamics (Cisco)

- Datadog

- Dynatrace

- Elastic

- Honeycomb

- Instana (IBM)

- Logz.io

- New Relic

- Splunk

- Sumo Logic

Open Source Options

There are countless open-source observability projects to choose from, including the following (in alphabetical order):

- Elastic: Elastic Stack: Elasticsearch, Kibana, Beats, and Logstash

- Fluentd: Data collector for unified logging layer

- Grafana: Platform for monitoring and observability

- Jaeger: End-to-end distributed tracing

- Kiali: Configure, visualize, validate, and troubleshoot Istio Service Mesh

- Loki: Like Prometheus, but for logs

- OpenSearch: Scalable, flexible, and extensible software suite for search, analytics, and observability applications

- OpenTelemetry (OTel): Collection of tools, APIs, and SDKs used to instrument, generate, collect, and export telemetry data

- Prometheus: Monitoring system and time series database

- Zabbix: Single pane of glass view of your whole IT infrastructure stack


Building and Deploying Cloud-Native Quarkus-based Java Applications to Kubernetes

Posted by Gary A. Stafford in AWS, Cloud, DevOps, Java Development, Kubernetes, Software Development on June 1, 2022

Developing, testing, building, and deploying Native Quarkus-based Java microservices to Kubernetes on AWS, using GitOps

Introduction

Although it may no longer be the undisputed programming language leader, according to many developer surveys, Java still ranks right up there with Go, Python, C/C++, and JavaScript. Given Java’s continued popularity, especially amongst enterprises, and the simultaneous rise of cloud-native software development, vendors have focused on creating purpose-built, modern JVM-based frameworks, tooling, and standards for developing applications — specifically, microservices.

Leading JVM-based microservice application frameworks typically provide features such as native support for a Reactive programming model, MicroProfile, GraalVM Native Image, OpenAPI and Swagger definition generation, GraphQL, CORS (Cross-Origin Resource Sharing), gRPC (gRPC Remote Procedure Calls), CDI (Contexts and Dependency Injection), service discovery, and distributed tracing.

Leading JVM-based Microservices Frameworks

Review lists of the most popular cloud-native microservices frameworks for Java, and you are sure to find Spring Boot with Spring Cloud, Micronaut, Helidon, and Quarkus at or near the top.

Spring Boot with Spring Cloud

According to their website, Spring makes programming Java quicker, easier, and safer for everybody. Spring’s focus on speed, simplicity, and productivity has made it the world’s most popular Java framework. Spring Boot makes it easy to create stand-alone, production-grade Spring based Applications that you can just run. Spring Boot’s many purpose-built features make it easy to build and run your microservices in production at scale. However, the distributed nature of microservices brings challenges. Spring Cloud can help with service discovery, load-balancing, circuit-breaking, distributed tracing, and monitoring with several ready-to-run cloud patterns. It can even act as an API gateway.

Helidon

Oracle’s Helidon is a cloud-native, open‑source set of Java libraries for writing microservices that run on a fast web core powered by Netty. Helidon supports MicroProfile, a reactive programming model, and, similar to Micronaut, Spring, and Quarkus, it supports GraalVM Native Image.

Micronaut

According to their website, the Micronaut framework is a modern, open-source, JVM-based, full-stack toolkit for building modular, easily testable microservice and serverless applications. Micronaut supports a polyglot programming model, discovery services, distributed tracing, and aspect-oriented programming (AOP). In addition, Micronaut offers quick startup time, blazing-fast throughput, and a minimal memory footprint.

Quarkus

Quarkus, developed and sponsored by Red Hat, is self-described as ‘Supersonic Subatomic Java.’ Quarkus is a cloud-native, Kubernetes-native, [Linux] container first, microservices first framework for writing Java applications. Quarkus is a Kubernetes Native Java stack tailored for OpenJDK HotSpot and GraalVM, crafted from over fifty best-of-breed Java libraries and standards.

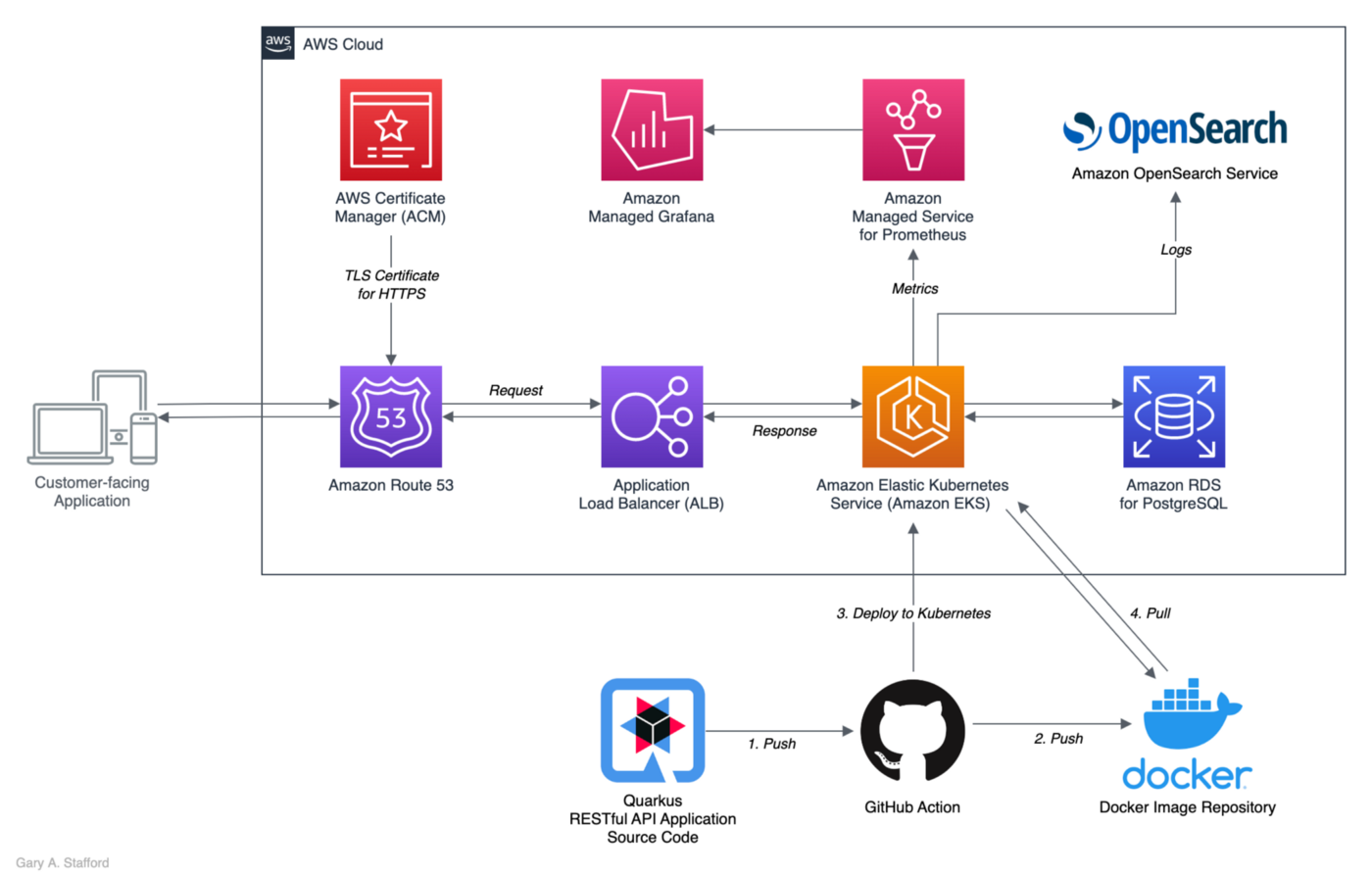

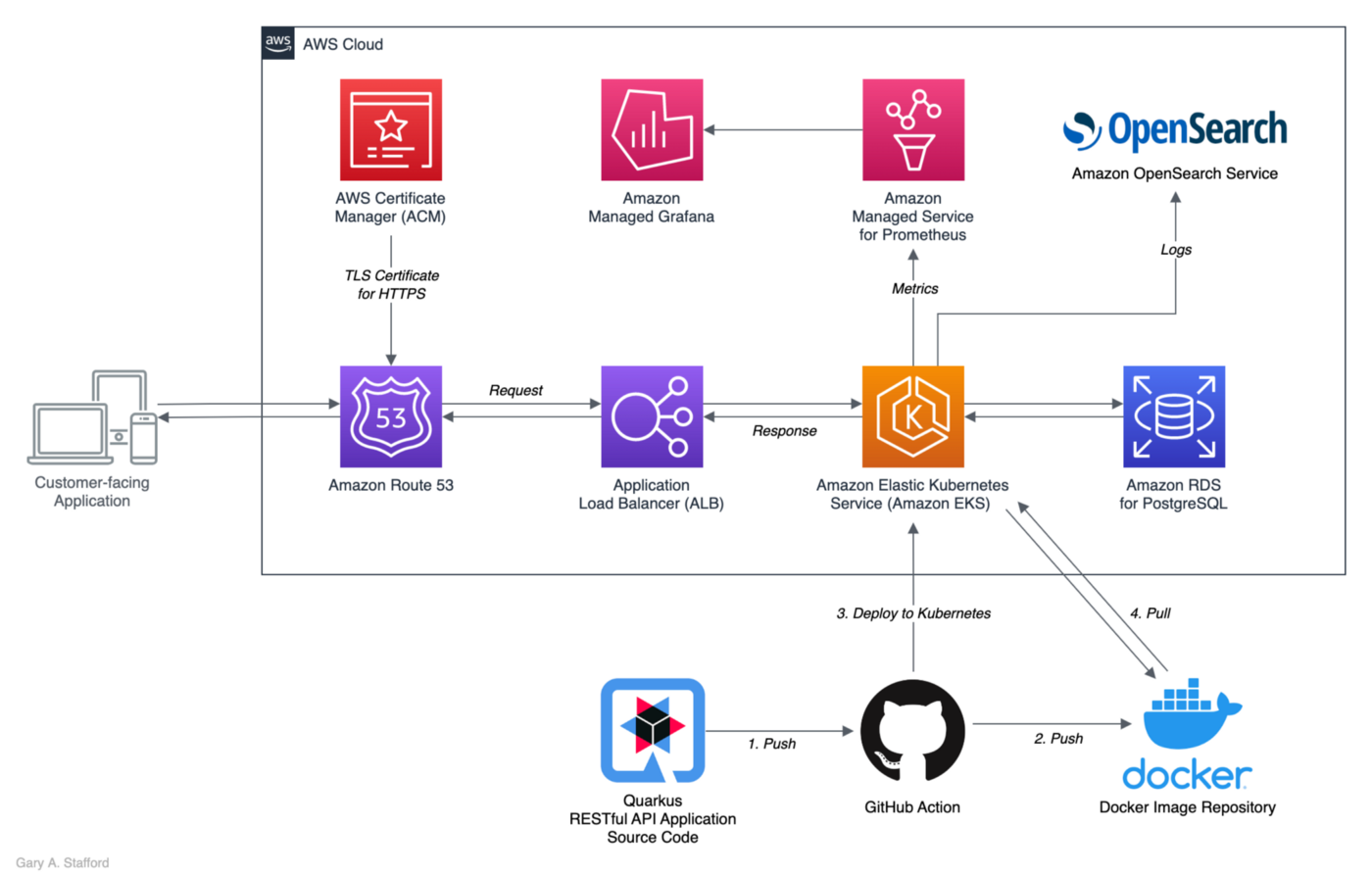

Developing Native Quarkus Microservices

In the following post, we will develop, build, test, deploy, and monitor a native Quarkus microservice application to Kubernetes. The RESTful service will expose a rich Application Programming Interface (API) and interact with a PostgreSQL database on the backend.

Some of the features of the Quarkus application in this post include:

- Hibernate Object Relational Mapper (ORM), the de facto Jakarta Persistence API (formerly Java Persistence API) implementation

- Hibernate Reactive with Panache, a Quarkus-specific library that simplifies the development of Hibernate Reactive entities

- RESTEasy Reactive, a new JAX-RS implementation, works with the common Vert.x layer and is thus fully reactive

- Advanced RESTEasy Reactive Jackson support for JSON serialization

- Reactive PostgreSQL client

- Built on Mandrel, a downstream distribution of the GraalVM community edition

- Built with Gradle, the modern, open-source build automation tool focused on flexibility and performance

TL;DR

Do you want to explore the source code for this post’s Quarkus microservice application or deploy it to Kubernetes before reading the full article? All the source code and Kubernetes resources are open-source and available on GitHub:

git clone --depth 1 -b main \
    https://github.com/garystafford/tickit-srv.git

The latest Docker Image is available on docker.io:

docker pull garystafford/tickit-srv:<latest-tag>

Quarkus Projects with IntelliJ IDE

Although not a requirement, I used JetBrains IntelliJ IDEA 2022 (Ultimate Edition) to develop and test the post’s Quarkus application. Bootstrapping Quarkus projects with IntelliJ is easy. Using the Quarkus plugin bundled with the Ultimate edition, developers can quickly create a Quarkus project.

The Quarkus plugin’s project creation wizard is based on code.quarkus.io. If you have bootstrapped a Spring Initializr project, code.quarkus.io works very similarly to start.spring.io.

Visual Studio Code

Red Hat also provides a Quarkus extension for the popular Visual Studio Code IDE.

Gradle

This post uses Gradle instead of Maven to develop, test, build, package, and deploy the Quarkus application to Kubernetes. Based on the packages selected in the new project setup shown above, the Quarkus plugin’s project creation wizard creates the following build.gradle file (Lombok added separately).

The wizard also created the following gradle.properties file, which has been updated to the latest release of Quarkus available at the time of this post, 2.9.2.

Gradle and Quarkus

You can use the Quarkus CLI or the Quarkus Maven plugin to scaffold a Gradle project. Taking a dependency on the Quarkus plugin adds several additional Quarkus tasks to Gradle. We will use Gradle to develop, test, build, containerize, and deploy the Quarkus microservice application to Kubernetes. The quarkusDev, quarkusTest, and quarkusBuild tasks will be particularly useful in this post.

Java Compilation

The Quarkus application in this post is compiled as a native image with the most recent Java 17 version of Mandrel, a downstream distribution of the GraalVM community edition.

GraalVM and Native Image

According to the documentation, GraalVM is a high-performance JDK distribution. It is designed to accelerate the execution of applications written in Java and other JVM languages while also providing runtimes for JavaScript, Ruby, Python, and other popular languages.

Further, according to GraalVM, Native Image is a technology to ahead-of-time compile Java code to a stand-alone executable, called a native image. This executable includes the application classes, classes from its dependencies, runtime library classes, and statically linked native code from the JDK. The Native Image builder (native-image) is a utility that processes all classes of an application and their dependencies, including those from the JDK. It statically analyzes data to determine which classes and methods are reachable during the application execution.
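The idea of determining which methods are reachable can be illustrated with a small graph traversal. The following is only a conceptual sketch in plain Java: the call graph, the method names, and the `reachable` helper are hypothetical, and the analysis the Native Image builder actually performs is far more sophisticated than this breadth-first walk.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Conceptual sketch only; not how native-image is implemented.
final class ReachabilitySketch {

    // Breadth-first traversal of a call graph, starting from the entry point.
    static Set<String> reachable(Map<String, List<String>> callGraph, String entryPoint) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        seen.add(entryPoint);
        queue.add(entryPoint);
        while (!queue.isEmpty()) {
            String method = queue.remove();
            for (String callee : callGraph.getOrDefault(method, List.of())) {
                // Set.add returns true only the first time a method is seen.
                if (seen.add(callee)) {
                    queue.add(callee);
                }
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        // Hypothetical call graph: LegacyReport.print is never called from Main.main.
        Map<String, List<String>> callGraph = Map.of(
                "Main.main", List.of("SaleService.list"),
                "SaleService.list", List.of("SaleRepository.findAll"),
                "LegacyReport.print", List.of("SaleRepository.findAll"));
        System.out.println(reachable(callGraph, "Main.main"));
    }
}
```

In this sketch, anything not visited from the entry point, such as the hypothetical `LegacyReport.print`, could be left out of the final executable, which is one reason native images can be smaller than a full JVM deployment.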

Mandrel

Mandrel is a downstream distribution of the GraalVM community edition. Mandrel’s main goal is to provide a native-image release specifically to support Quarkus. The aim is to align the native-image capabilities from GraalVM with OpenJDK and Red Hat Enterprise Linux libraries to improve maintainability for native Quarkus applications. Mandrel can best be described as a distribution of a regular OpenJDK with a specially packaged GraalVM Native Image builder (native-image).

Docker Image

Once compiled, the native Quarkus executable will run within the quarkus-micro-image:1.0 base runtime image deployed to Kubernetes. Quarkus provides this base image to ease the containerization of native executables. It has a minimal footprint (10.9 MB compressed / 29.5 MB uncompressed) compared to other images. For example, the latest UBI (Universal Base Image) Quarkus Mandrel image (ubi-quarkus-mandrel:22.1.0.0-Final-java17) is 714 MB uncompressed, while the OpenJDK 17 image (openjdk:17-jdk) is 471 MB uncompressed. Even Red Hat’s Universal Base Image Minimal image (ubi-minimal:8.6) is 93.4 MB uncompressed.

An even smaller option from Quarkus, a distroless base image (quarkus-distroless-image:1.0), is only 9.2 MB compressed / 22.7 MB uncompressed. Quarkus is careful to note that distroless image support is experimental and should not be used in production without rigorous testing.

PostgreSQL Database

For the backend data persistence tier of the Quarkus application, we will use PostgreSQL. All DDL (Data Definition Language) and DML (Data Manipulation Language) statements used in the post were tested with the most current version of PostgreSQL 14.

There are many PostgreSQL-compatible sample databases available that could be used for this post. I am using the TICKIT sample database provided by AWS and designed for Amazon Redshift, AWS’s cloud data warehousing service. The database consists of seven tables (two fact tables and five dimension tables) in a traditional data warehouse star schema.

For this post, I have remodeled the TICKIT database’s star schema into a normalized relational data model optimized for the Quarkus application. The most significant change to the database is splitting the original Users dimension table into two separate tables: buyer and seller. This change will allow for better separation of concerns (SoC), scalability, and increased protection of Personally Identifiable Information (PII).

Source Code

Each of the six tables in the PostgreSQL TICKIT database is represented by an Entity, Repository, and Resource Java class.

Entity Class

Java Persistence is the API for managing persistence and object/relational mapping. The Java Persistence API (JPA) provides Java developers with an object/relational mapping facility for managing relational data in Java applications. Each table in the PostgreSQL TICKIT database is represented by a Java Persistence Entity, as indicated by the Entity annotation on the class declaration. The annotation specifies that the class is an entity.

Each entity class extends the PanacheEntityBase class, part of the io.quarkus.hibernate.orm.panache package. According to the Quarkus documentation, you can specify your own custom ID strategy, which is done in this post’s example, by extending PanacheEntityBase instead of PanacheEntity.

If you do not want to bother defining getters/setters for your entities, which we did not in the post’s example, extend PanacheEntityBase, and Quarkus will generate them for you. Alternately, extend PanacheEntity and take advantage of the default ID it provides if you are not using a custom ID strategy.

The example SaleEntity class shown below is typical of the Quarkus application’s entities. The entity class contains several JPA annotations in addition to Entity, including Table, NamedQueries, Id, SequenceGenerator, GeneratedValue, and Column. The entity class also leverages Project Lombok annotations. Lombok generates two boilerplate constructors, one that takes no arguments (NoArgsConstructor) and one that takes one argument for every field (AllArgsConstructor).

The SaleEntity class also defines two many-to-one relationships, with the ListingEntity and BuyerEntity entity classes. This relationship mirrors the database’s data model, as reflected in the schema diagram above. The relationships are defined using the ManyToOne and JoinColumn JPA annotations.

Given the relationships between the entities, a saleEntity object, represented as a nested JSON object, would look as follows:

Repository Class

Each table in the PostgreSQL TICKIT database also has a corresponding repository class, an approach often referred to as the ‘repository pattern.’ The repository class implements the PanacheRepositoryBase interface, part of the io.quarkus.hibernate.orm.panache package. The PanacheRepositoryBase Java interface represents a Repository for a specific type of Entity. According to the documentation, if you are using repositories and have a custom ID strategy, then you will want to extend PanacheRepositoryBase instead of PanacheRepository and specify your ID type as an extra type parameter. Implementing PanacheRepositoryBase gives you the same methods that are available on PanacheEntityBase.

The repository class allows us to leverage the methods already available through PanacheEntityBase and add additional custom methods. For example, the repository class contains a custom method listWithPaging. This method retrieves (GET) a list of SaleEntity objects with the added benefit of being able to indicate the page number, page size, sort by field, and sort direction.
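To illustrate what paging and sorting parameters do, here is a minimal, self-contained sketch over an in-memory list. It is not the post’s repository code and does not use Panache: the `Sale` class, its fields, the zero-based page index, and the method signature are all assumptions made for illustration.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// In-memory illustration of paging and sorting; not the Panache API.
final class PagingSketch {

    // Hypothetical stand-in for an entity; not the post's SaleEntity.
    static final class Sale {
        final int id;
        final double pricePaid;

        Sale(int id, double pricePaid) {
            this.id = id;
            this.pricePaid = pricePaid;
        }
    }

    // Sorts by the requested field and direction, then returns one zero-based page.
    static List<Sale> listWithPaging(List<Sale> sales, String sortBy, boolean ascending,
                                     int pageIndex, int pageSize) {
        Comparator<Sale> comparator;
        if ("pricePaid".equals(sortBy)) {
            comparator = Comparator.comparingDouble(s -> s.pricePaid);
        } else {
            comparator = Comparator.comparingInt(s -> s.id); // default sort field
        }
        if (!ascending) {
            comparator = comparator.reversed();
        }
        return sales.stream()
                .sorted(comparator)
                .skip((long) pageIndex * pageSize) // rows on earlier pages
                .limit(pageSize)                   // rows on the requested page
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Sale> sales = List.of(new Sale(1, 30.0), new Sale(2, 10.0), new Sale(3, 50.0),
                new Sale(4, 20.0), new Sale(5, 40.0));
        // First page of two sales, cheapest first.
        for (Sale sale : listWithPaging(sales, "pricePaid", true, 0, 2)) {
            System.out.println(sale.id + " " + sale.pricePaid);
        }
    }
}
```

In a real repository, the sorting and the offset/limit would normally be pushed down to the database query rather than applied in memory; the sketch only shows how the four parameters interact.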

Since there is a many-to-one relationship between the SaleEntity class and the ListingEntity and BuyerEntity entity classes, we also have two custom methods that retrieve all SaleEntity objects by either the BuyerEntity ID or the EventEntity ID. These two methods call the SQL queries in the SaleEntity, annotated with the JPA NamedQueries/NamedQuery annotations on the class declaration.

SmallRye Mutiny

Each method defined in the repository class returns a SmallRye Mutiny Uni<T>. According to the website, Mutiny is an intuitive, event-driven Reactive programming library for Java. Mutiny provides a simple but powerful asynchronous development model that lets you build reactive applications. Mutiny can be used in any Java application exhibiting asynchrony, including reactive microservices, data streaming, event processing, API gateways, and network utilities.

Uni

Again, according to Mutiny’s documentation, a Uni represents a stream that can only emit either an item or a failure event. A Uni<T> is a specialized stream that emits only an item or a failure. Typically, Uni<T> are great for representing asynchronous actions such as a remote procedure call, an HTTP request, or an operation producing a single result. A Uni represents a lazy asynchronous action. It follows the subscription pattern, meaning that the action is only triggered once a UniSubscriber subscribes to the Uni.
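The lazy, single-result behavior described above can be sketched in a few lines of plain Java. To be clear, this is not the Mutiny API: `LazyActionSketch`, `of`, and this `subscribe` signature are hypothetical names, and the sketch runs synchronously, leaving out the asynchronous scheduling a real Uni provides. It only shows that nothing happens until a subscriber arrives, and that exactly one event (an item or a failure) is delivered.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Plain-Java illustration of a lazy, single-result action; this is not the Mutiny API.
final class LazyActionSketch<T> {

    private final Supplier<T> action;

    private LazyActionSketch(Supplier<T> action) {
        this.action = action;
    }

    // Describes the work without running it.
    static <T> LazyActionSketch<T> of(Supplier<T> action) {
        return new LazyActionSketch<>(action);
    }

    // Runs the action now and delivers exactly one event: an item or a failure.
    void subscribe(Consumer<T> onItem, Consumer<Throwable> onFailure) {
        T item;
        try {
            item = action.get();
        } catch (RuntimeException e) {
            onFailure.accept(e);
            return;
        }
        onItem.accept(item);
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        LazyActionSketch<String> lazy = of(() -> "result #" + calls.incrementAndGet());
        System.out.println("calls before subscribe: " + calls.get());
        lazy.subscribe(
                item -> System.out.println("item: " + item),
                failure -> System.out.println("failure: " + failure));
        System.out.println("calls after subscribe: " + calls.get());
    }
}
```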

Resource Class

Lastly, each table in the PostgreSQL TICKIT database has a corresponding resource class. According to the Quarkus documentation, all the operations defined within PanacheEntityBase are available on your repository, so using it is exactly the same as using the active record pattern, except you need to inject it. We inject the corresponding repository class into the resource class, exposing all the available methods of the repository and PanacheRepositoryBase. For example, note the custom listWithPaging method below, which was declared in the SaleRepository class.

Similar to the repository class, each method defined in the resource class also returns a SmallRye Mutiny (io.smallrye.mutiny) Uni<T>.

The resource class defines HTTP methods (POST, GET, PUT, and DELETE) corresponding to CRUD operations on the database (Create, Read, Update, and Delete). The methods are annotated with the corresponding javax.ws.rs annotation, indicating the type of HTTP request they respond to. The javax.ws.rs package contains high-level interfaces and annotations used to create RESTful service resources, such as our Quarkus application.

The POST, PUT, and DELETE annotated methods all have the io.quarkus.hibernate.reactive.panache.common.runtime package’s ReactiveTransactional annotation associated with them. We use this annotation on methods to run them in a reactive Mutiny.Session.Transaction. If the annotated method returns a Uni, which they all do, this has precisely the same behavior as if the method were enclosed in a call to Mutiny.Session.withTransaction(java.util.function.Function). If the method call fails, the complete transaction is rolled back.

Developer Experience

Quarkus has several features to enhance the developer experience. Features include Dev Services, Dev UI, live reload of code without requiring a rebuild and restart of the application, continuous testing where tests run immediately after code changes have been saved, configuration profiles, Hibernate ORM, JUnit, and REST Assured integrations. Using these Quarkus features, it’s easy to develop and test Quarkus applications.

Configuration Profiles

Similar to Spring, Quarkus works with configuration profiles. According to Red Hat, you can use different configuration profiles depending on your environment. Configuration profiles enable you to have multiple configurations in the same application.properties file and select between them using a profile name. Quarkus recognizes three default profiles:

- dev: Activated in development mode

- test: Activated when running tests

- prod: The default profile when not running in development or test mode

In the application.properties file, a profile-specific property is prefixed using the %environment. format. For example, when defining Quarkus’ log level as INFO, you add the common quarkus.log.level=INFO property. However, to change only the test environment’s log level to DEBUG, corresponding to the test profile, you would add a property with the %test. prefix, such as %test.quarkus.log.level=DEBUG.
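The prefix rule can be modeled in a few lines. The sketch below is a simplified stand-in for the lookup, not Quarkus’ actual configuration code; ProfileLookup, parse, and resolve are hypothetical names, and it relies only on java.util.Properties. It prefers a profile-prefixed key when one exists and otherwise falls back to the common key.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical model of profile-aware lookup; not Quarkus' real implementation.
final class ProfileLookup {

    private ProfileLookup() {
    }

    // Parses application.properties-style content from a string.
    static Properties parse(String content) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(content));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Returns the value of "%<profile>.<key>" when present, otherwise the value of "<key>".
    static String resolve(Properties props, String profile, String key) {
        String profiled = props.getProperty("%" + profile + "." + key);
        return profiled != null ? profiled : props.getProperty(key);
    }
}
```

Given the two properties from the example above, resolving quarkus.log.level under the test profile yields DEBUG, while the dev and prod profiles fall back to INFO.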

Dev Services

Quarkus supports the automatic provisioning of unconfigured services in development and test mode, referred to as Dev Services. If you include an extension and do not configure it, then Quarkus will automatically start the relevant service using Testcontainers behind the scenes and wire up your application to use this service.

When developing your Quarkus application, you could create your own local PostgreSQL database, for example, with Docker:

And the corresponding application configuration properties:

Zero-Config Database

Alternately, we can rely on Dev Services, using a feature referred to as zero config setup. Quarkus provides you with a zero-config database out of the box; no database configuration is required. Quarkus takes care of provisioning the database, running your DDL and DML statements to create database objects and populate the database with test data, and finally, de-provisioning the database container when the development or test session is completed. The database Dev Services will be enabled when a reactive or JDBC datasource extension is present in the application and the database URL has not been configured.

Using the quarkusDev Gradle task, we can start the application running, as shown in the video below. Note the two new Docker containers that are created. Also, note the project’s import.sql SQL script is run automatically, executing all DDL and DML statements to prepare and populate the database.

Bootstrapping the TICKIT Database

When using Hibernate ORM with Quarkus, we have several options regarding how the database is handled when the Quarkus application starts. These are defined in the application.properties file. The quarkus.hibernate-orm.database.generation property determines whether the database schema is generated. Accepted values are none, create, drop-and-create, drop, update, and validate. The property defaults to none; however, if Dev Services is in use and no other extensions that manage the schema are present, it defaults to drop-and-create, which is ideal in development mode, as shown above. For development and testing modes, we are using Dev Services with the default value of drop-and-create. For this post, we assume the database and schema already exist in production.

A second property, quarkus.hibernate-orm.sql-load-script, provides the path to a file containing the SQL statements to execute when Hibernate ORM starts. In dev and test modes, it defaults to import.sql. Simply add an import.sql file in the root of your resources directory, and Hibernate will pick it up without you having to set this property. The project contains an import.sql script to create all database objects and a small amount of test data. You can also explicitly set different files for different profiles by prefixing the property with the profile (e.g., %dev. or %test.).

%dev.quarkus.hibernate-orm.database.generation=drop-and-create

%dev.quarkus.hibernate-orm.sql-load-script=import.sql

Another option is Flyway, the popular database migration tool commonly used in JVM environments. Quarkus provides first-class support for using Flyway.

Dev UI

According to the documentation, Quarkus now ships with a new experimental Dev UI, which is available in dev mode (when you start Quarkus with Gradle’s quarkusDev task) at /q/dev by default. It allows you to quickly visualize all the extensions currently loaded, see their status and go directly to their documentation. In addition to access to loaded extensions, you can review logs and run tests in the Dev UI.

Configuration

From the Dev UI, you can access and modify the Quarkus application’s configuration.

You can also view the configuration of Dev Services, including the running containers and the zero-config database configuration.

Quarkus REST Score Console

With the RESTEasy Reactive extension loaded, you can access the Quarkus REST Score Console from the Dev UI. The REST Score Console shows endpoint performance through scores and color-coding: green, yellow, or red. Red Hat recently published a blog post that discusses the scoring process and how to optimize endpoint performance. Three measurements show whether a REST reactive application can be optimized further.

Application Testing

Quarkus enables robust JVM-based and Native continuous testing by providing integrations with common test frameworks, including JUnit, Mockito, and REST Assured. Many of Quarkus’ testing features are enabled through annotations, such as QuarkusTestResource, QuarkusTest, QuarkusIntegrationTest, and TransactionalQuarkusTest.

Quarkus supports the use of mock objects using two different approaches. You can either use CDI alternatives to mock out a bean for all test classes or use QuarkusMock to mock out beans on a per-test basis. This includes integration with Mockito.

The REST Assured integration is particularly useful for testing the Quarkus microservice API application. According to their website, REST Assured is a Java DSL for simplifying testing of REST-based services. It supports the most common HTTP request methods and can be used to validate and verify the response of these requests. REST Assured uses the given(), when(), then() methods of testing made popular as part of Behavior-Driven Development (BDD).

The tests can be run using the quarkusTest Gradle task. The application contains a small number of integration tests to demonstrate this feature.

Swagger and OpenAPI

Quarkus provides the SmallRye OpenAPI extension, compliant with the MicroProfile OpenAPI specification, which allows you to generate an OpenAPI v3 specification for the API and expose the Swagger UI. The /q/swagger-ui resource exposes the Swagger UI, allowing you to visualize and interact with the Quarkus API’s resources without having any implementation logic in place.

Resources can be tested using the Swagger UI without writing any code.

OpenAPI Specification (formerly Swagger Specification) is an API description format for REST APIs. The /q/openapi resource allows you to generate an OpenAPI v3 specification file. An OpenAPI file allows you to describe your entire API.

The OpenAPI v3 specification can be saved as a file and imported into applications like Postman, the API platform for building and using APIs.

GitOps with GitHub Actions

For this post, GitOps is used to continuously test, build, package, and deploy the Quarkus microservice application to Kubernetes. Specifically, the post uses GitHub Actions. GitHub Actions is a continuous integration and continuous delivery (CI/CD) platform that allows you to automate your build, test, and deployment pipelines. Workflows are defined in the .github/workflows directory in a repository, and a repository can have multiple workflows, each of which can perform a different set of tasks.

Two GitHub Actions are associated with this post’s GitHub repository. The first action, build-test.yml, natively builds and tests the source code in a native Mandrel container on each push to GitHub. The second action (shown below), docker-build-push.yml, builds and containerizes the natively-built executable, pushes it to Docker’s Container Registry (docker.io), and finally deploys the application to Kubernetes. This action is triggered by pushing a new Git Tag to GitHub.

There are several Quarkus configuration properties included in the action’s build step. Alternately, these properties could be defined in the application.properties file. However, I have decided to include them as part of the Gradle build task since they are specific to the type of build, the container registry, and the Kubernetes platform I am pushing artifacts to.

Kubernetes Resources

The Kubernetes resources YAML file, created by the Quarkus build, is also uploaded and saved as an artifact in GitHub by the final step in the GitHub Action.

Quarkus automatically generates ServiceAccount, Role, RoleBinding, Service, and Deployment resources.

Choosing a Kubernetes Platform

The only cloud provider-specific code is in the second GitHub action.

In this case, the application is being deployed to an existing Amazon Elastic Kubernetes Service (Amazon EKS), a fully managed, certified Kubernetes conformant service from AWS. These steps can be easily replaced with steps to deploy to other Cloud platforms, such as Microsoft’s Azure Kubernetes Service (AKS) or Google Cloud’s Google Kubernetes Engine (GKE).

GitHub Secrets

Some of the properties use GitHub environment variables, and others use secure GitHub repository encrypted secrets. Secrets are used to secure Docker credentials used to push the Quarkus application image to Docker’s image repository, AWS IAM credentials, and the base64 encoded contents of the kubeconfig file required to deploy to Kubernetes on AWS when using the kodermax/kubectl-aws-eks@master GitHub action.

Docker

Reviewing the configuration properties included in the action’s build step, note the Mandrel container used to build the native Quarkus application, quay.io/quarkus/ubi-quarkus-mandrel:22.1.0.0-Final-java17. Also, note the project’s Dockerfile, src/main/docker/Dockerfile.native-micro, which is used to build the final Docker image. That image is pushed to the image repository and then used to provision containers on Kubernetes. This Dockerfile uses the quay.io/quarkus/quarkus-micro-image:1.0 base image to containerize the native Quarkus application.

The properties also define the image’s repository name and tag (e.g., garystafford/tickit-srv:1.1.0).

Kubernetes

In addition to creating the tickit Namespace in advance, a Kubernetes secret is pre-deployed to the tickit Namespace. The GitHub Action also requires a Role and RoleBinding to deploy the workload to the Kubernetes cluster. Lastly, a HorizontalPodAutoscaler (HPA) is used to automatically scale the workload.

export NAMESPACE=tickit

# Namespace
kubectl create namespace ${NAMESPACE}

# Role and RoleBinding for GitHub Actions to deploy to Amazon EKS
kubectl apply -f kubernetes/github_actions_role.yml -n ${NAMESPACE}

# Secret
kubectl apply -f kubernetes/secret.yml -n ${NAMESPACE}

# HorizontalPodAutoscaler (HPA)
kubectl apply -f kubernetes/tickit-srv-hpa.yml -n ${NAMESPACE}

As part of the configuration properties included in the action’s build step, note the use of Kubernetes secrets.

-Dquarkus.kubernetes-config.secrets=tickit

-Dquarkus.kubernetes-config.secrets.enabled=true

This secret contains base64-encoded sensitive credentials and connection values used to connect to the production PostgreSQL database. For this post, I have pre-built an Amazon RDS for PostgreSQL database instance, created the tickit database and required database objects, and lastly, imported the sample data included in the GitHub repository, garystafford/tickit-srv-data.
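Since the values in a Kubernetes Secret’s data section are base64-encoded, it can help to see what that encoding does and does not provide. The following is a minimal sketch using the JDK’s java.util.Base64; the SecretValues class is hypothetical, and the sample value postgres is made up rather than taken from the project’s secret. Note that base64 is a reversible encoding, not encryption, so anyone who can read the Secret can recover the original value.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper showing how a Secret value is base64-encoded and decoded.
final class SecretValues {

    private SecretValues() {
    }

    // Encodes a plain-text value the way it would appear in a Secret's data section.
    static String encode(String plain) {
        return Base64.getEncoder().encodeToString(plain.getBytes(StandardCharsets.UTF_8));
    }

    // Decodes a base64 value back to plain text; no key or password is required.
    static String decode(String encoded) {
        return new String(Base64.getDecoder().decode(encoded), StandardCharsets.UTF_8);
    }
}
```

For example, SecretValues.encode("postgres") produces cG9zdGdyZXM=, and SecretValues.decode("cG9zdGdyZXM=") returns postgres again.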

The five keys seen in the Secret are used in the application.properties file to provide access to the production PostgreSQL database from the Quarkus application.

An even better alternative to using Kubernetes secrets on Amazon EKS is the AWS Secrets and Configuration Provider (ASCP) for the Kubernetes Secrets Store CSI Driver. With ASCP, secrets stored in AWS Secrets Manager are made available as files mounted in Amazon EKS pods.

AWS Architecture