This two-part post explores a set of popular open-source observability tools that are easily integrated with the Istio service mesh. While these tools are not a part of Istio, they are essential to making the most of Istio’s observability features. The tools include Jaeger and Zipkin for distributed transaction monitoring, Prometheus for metrics collection and alerting, Grafana for metrics querying, visualization, and alerting, and Kiali for overall observability and management of Istio. We will round out the toolset with the addition of Fluent Bit for log processing and aggregation. We will observe a distributed, microservices-based reference application platform deployed to an Amazon Elastic Kubernetes Service (Amazon EKS) cluster using these tools. The platform, running on EKS, will use Amazon DocumentDB as a persistent data store and Amazon MQ to exchange messages.

Observability

Similar to quantum computing, big data, artificial intelligence, machine learning, and 5G, observability is currently a hot buzzword in the IT industry. According to Wikipedia, observability is a measure of how well the internal states of a system can be inferred from its external outputs. The O’Reilly book, Distributed Systems Observability, by Cindy Sridharan, describes The Three Pillars of Observability in Chapter 4: “Logs, metrics, and traces are often known as the three pillars of observability. While plainly having access to logs, metrics, and traces doesn’t necessarily make systems more observable, these are powerful tools that, if understood well, can unlock the ability to build better systems.”

Honeycomb is a developer of observability tools for production systems. The honeycomb.io site includes articles, blog posts, whitepapers, and podcasts on observability. According to Honeycomb, “Observability is achieved when a system is understandable — which is difficult with complex systems, where most problems are the convergence of many things failing at once.”

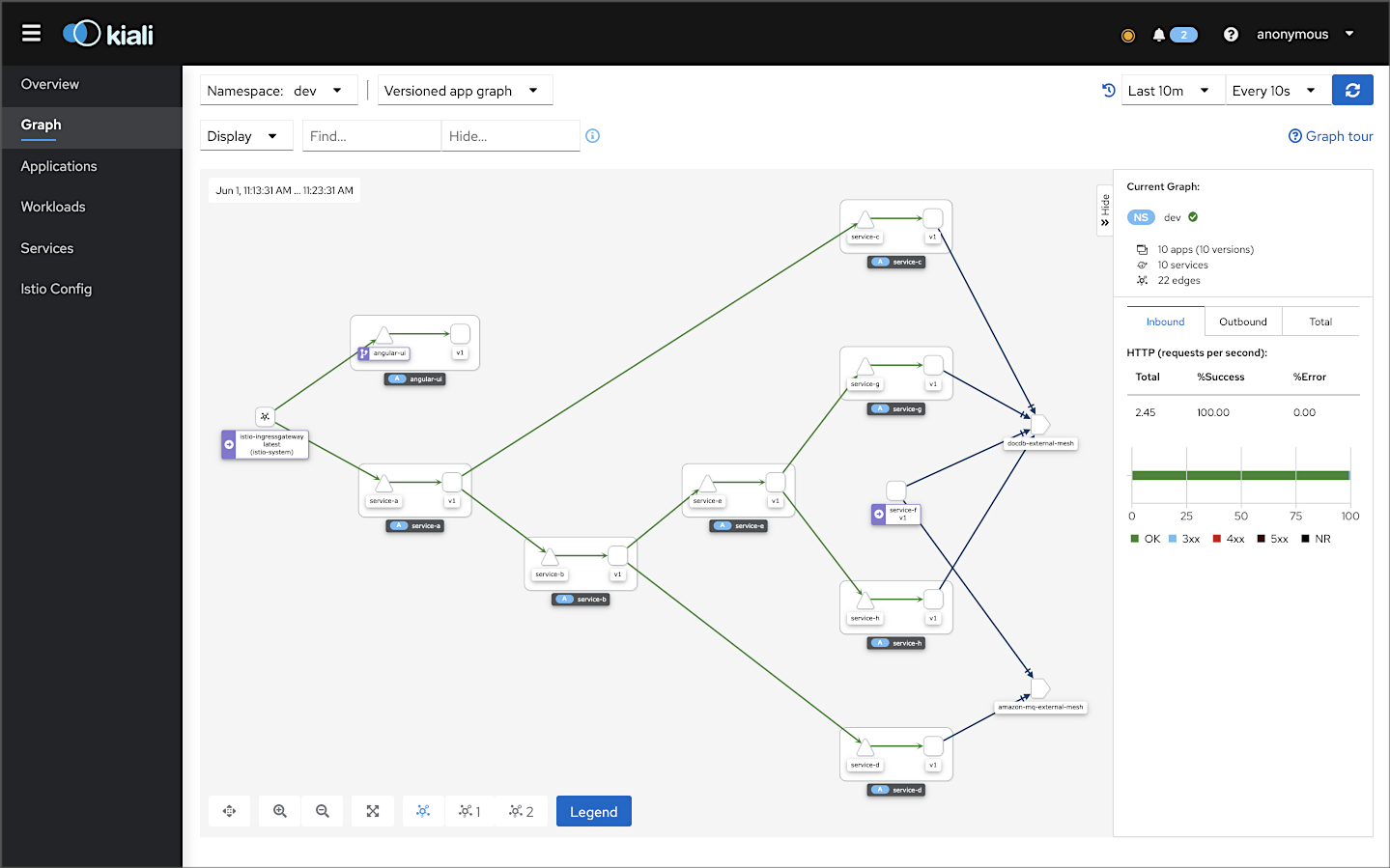

As modern distributed systems grow ever more complex, the ability to observe those systems demands equally modern tooling designed with this level of complexity in mind. Traditional logging and monitoring tools struggle with today’s polyglot, distributed, event-driven, ephemeral, containerized and serverless application environments. Tools like the Istio service mesh attempt to solve the observability challenge by offering easy integration with several popular open-source telemetry tools. Istio’s integrations include Jaeger for distributed tracing, Kiali for Istio service mesh-based microservice visualization, and Prometheus and Grafana for metric collection, monitoring, and alerting. Combined with cloud-native monitoring and logging tools such as Fluent Bit and Amazon CloudWatch Container Insights, we have a complete observability platform for modern distributed applications running on Amazon Elastic Kubernetes Service (Amazon EKS).

Traditional logging and monitoring tools struggle with today’s polyglot, distributed, event-driven, ephemeral, containerized and serverless application environments.

Gary Stafford

Reference Application Platform

To demonstrate Istio’s observability tools, we will deploy a reference application platform, written in Go and TypeScript with Angular, to EKS on AWS. The reference application platform was developed to demonstrate different Kubernetes platforms, such as EKS, GKE, and AKS, and concepts such as service mesh, API management, observability, DevOps, and Chaos Engineering. The platform currently comprises a backend containing eight Go-based microservices, labeled generically as Service A through Service H, one Angular 12 TypeScript-based frontend UI, four MongoDB databases, and one RabbitMQ message queue for event-based communications. The platform and all its source code are open-sourced on GitHub.

The reference application platform is designed to generate HTTP-based service-to-service, TCP-based service-to-database, and TCP-based service-to-queue-to-service IPC (inter-process communication). Service A calls Service B and Service C; Service B calls Service D and Service E; Service D produces a message on a RabbitMQ queue, which Service F consumes and writes to MongoDB, and so on. Distributed service communications can be observed using Istio’s observability tools when the system is deployed to a Kubernetes cluster running the Istio service mesh.

Service Responses

Each Go microservice contains a /greeting, /health, and /metrics endpoint. The service’s /health endpoint is used to configure Kubernetes Liveness, Readiness, and Startup Probes. The /metrics endpoint exposes metrics that Prometheus scrapes. Lastly, upstream services respond to requests from downstream services when calling their /greeting endpoint by returning a small informational JSON payload, a greeting.

{
  "id": "1f077127-2f9f-4a90-ad88-da52327c2620",
  "service": "Service C",
  "message": "Konnichiwa (こんにちは), from Service C!",
  "created": "2021-06-04T04:34:02.901726709Z",
  "hostname": "service-c-6d5cc8fdfd-stsq9"
}

The responses are aggregated across the service call chain, resulting in an array of service responses being returned to the edge service, Service A, and subsequently, the platform’s UI running in the end user’s web browser.

[
  {
    "id": "a9afab6a-3e2a-41a6-aec7-7257d2904076",
    "service": "Service D",
    "message": "Shalom (שָׁלוֹם), from Service D!",
    "created": "2021-06-04T14:28:32.695151047Z",
    "hostname": "service-d-565c775894-vdsjx"
  },
  {
    "id": "6d4cc38a-b069-482c-ace5-65f0c2d82713",
    "service": "Service G",
    "message": "Ahlan (أهلا), from Service G!",
    "created": "2021-06-04T14:28:32.814550521Z",
    "hostname": "service-g-5b846ff479-znpcb"
  },
  {
    "id": "988757e3-29d2-4f53-87bf-e4ff6fbbb105",
    "service": "Service H",
    "message": "Nǐ hǎo (你好), from Service H!",
    "created": "2021-06-04T14:28:32.947406463Z",
    "hostname": "service-h-76cb7c8d66-lkr26"
  },
  {
    "id": "966b0bfa-0b63-4e21-96a1-22a76e78f9cd",
    "service": "Service E",
    "message": "Bonjour, from Service E!",
    "created": "2021-06-04T14:28:33.007881464Z",
    "hostname": "service-e-594d4754fc-pr7tc"
  },
  {
    "id": "c612a228-704f-4562-90c5-33357b12ff8d",
    "service": "Service B",
    "message": "Namasté (नमस्ते), from Service B!",
    "created": "2021-06-04T14:28:33.015985983Z",
    "hostname": "service-b-697b78cf54-4lk8s"
  },
  {
    "id": "b621bd8a-02ee-4f9b-ac1a-7d91ddad85f5",
    "service": "Service C",
    "message": "Konnichiwa (こんにちは), from Service C!",
    "created": "2021-06-04T14:28:33.042001406Z",
    "hostname": "service-c-7fd4dd5947-5wcgs"
  },
  {
    "id": "52eac1fa-4d0c-42b4-984b-b65e70afd98a",
    "service": "Service A",
    "message": "Hello, from Service A!",
    "created": "2021-06-04T14:28:33.093380628Z",
    "hostname": "service-a-6f776d798f-5l5dz"
  }
]
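The aggregation described above can be sketched in a few lines of Go. This is an illustration only: it assumes each service appends its own greeting after the greetings returned by the services it called, which is consistent with the ordering in the sample (Service A appears last) but is not confirmed against the project's source. The `Greeting` type is trimmed to two fields and `aggregate` is a hypothetical helper.

```go
package main

import "fmt"

// Greeting is a trimmed-down version of the response payload,
// reduced to two fields for this sketch.
type Greeting struct {
	Service string `json:"service"`
	Message string `json:"message"`
}

// aggregate concatenates the greetings returned by upstream calls and
// then appends the calling service's own greeting at the end.
func aggregate(own Greeting, upstream ...[]Greeting) []Greeting {
	var all []Greeting
	for _, u := range upstream {
		all = append(all, u...)
	}
	return append(all, own)
}

func main() {
	// Responses Service A might receive from Service B and Service C.
	fromB := []Greeting{
		{Service: "Service D", Message: "Shalom, from Service D!"},
		{Service: "Service E", Message: "Bonjour, from Service E!"},
		{Service: "Service B", Message: "Namasté, from Service B!"},
	}
	fromC := []Greeting{
		{Service: "Service C", Message: "Konnichiwa, from Service C!"},
	}
	own := Greeting{Service: "Service A", Message: "Hello, from Service A!"}

	for _, g := range aggregate(own, fromB, fromC) {
		fmt.Println(g.Service)
	}
}
```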

CORS

The platform’s backend edge service, Service A, is configured for Cross-Origin Resource Sharing (CORS) using the access-control-allow-origin response header. The CORS configuration allows the Angular UI, running in the end user’s web browser, to call Service A’s /greeting endpoint, which potentially resides in a different host from the UI. Shown below is the Go source code for Service A. Note the use of the ALLOWED_ORIGINS environment variable on lines 32 and 195, which allows you to configure the origins that are allowed from the service’s Deployment resource.
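As a rough illustration of the idea (this is not the project's actual Service A source), the sketch below reads ALLOWED_ORIGINS and sets the access-control-allow-origin response header only for permitted origins. It assumes the variable holds a comma-delimited list; the function names `parseOrigins`, `allowOrigin`, and `withCORS` are hypothetical.

```go
package main

import (
	"fmt"
	"net/http"
	"os"
	"strings"
)

// parseOrigins splits a comma-delimited ALLOWED_ORIGINS value into a
// list. The comma-delimited format is an assumption of this sketch.
func parseOrigins(raw string) []string {
	var origins []string
	for _, o := range strings.Split(raw, ",") {
		if o = strings.TrimSpace(o); o != "" {
			origins = append(origins, o)
		}
	}
	return origins
}

// allowOrigin returns the header value to send for a request origin,
// or an empty string when the origin is not permitted.
func allowOrigin(origin string, allowed []string) string {
	for _, a := range allowed {
		if a == "*" || a == origin {
			return a
		}
	}
	return ""
}

// withCORS wraps a handler and adds the CORS response header when the
// request's Origin header matches the allowed list.
func withCORS(allowed []string, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if v := allowOrigin(r.Header.Get("Origin"), allowed); v != "" {
			w.Header().Set("Access-Control-Allow-Origin", v)
			w.Header().Add("Vary", "Origin")
		}
		next.ServeHTTP(w, r)
	})
}

func main() {
	raw := os.Getenv("ALLOWED_ORIGINS")
	if raw == "" {
		raw = "https://observe-ui.example-api.com" // UI hostname used in this post
	}
	allowed := parseOrigins(raw)

	handler := withCORS(allowed, http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, `{"message":"Hello, from Service A!"}`)
	}))
	_ = handler // a real service would pass this to http.ListenAndServe

	fmt.Println("allowed origins:", allowed)
}
```

Keeping the origin list in an environment variable means the same container image can be reused across environments, with only the Deployment resource changing.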

MongoDB- and RabbitMQ-as-a-Service

Using external services will help us understand how Istio and its observability tools collect telemetry for communications between the reference application platform on Kubernetes and external systems.

Amazon DocumentDB

For this demonstration, the reference application platform’s MongoDB databases will be hosted, external to EKS, on Amazon DocumentDB (with MongoDB compatibility). According to AWS, Amazon DocumentDB is a purpose-built database service for JSON data management at scale, fully managed and integrated with AWS, and enterprise-ready with high durability.

Amazon MQ

Similarly, the reference application platform’s RabbitMQ queue will be hosted, external to EKS, on Amazon MQ. Amazon MQ is a managed message broker service for Apache ActiveMQ and RabbitMQ, making it easy to set up and operate message brokers on AWS. Amazon MQ reduces your operational responsibilities by managing the provisioning, setup, and maintenance of message brokers for you. For RabbitMQ, Amazon MQ provides access to the RabbitMQ web console. The console allows us to monitor and manage RabbitMQ.

Shown below is the Go source code for Service F. This service consumes messages from the RabbitMQ queue, placed there by Service D, and writes the messages to MongoDB. Services use Sean Treadway’s Go RabbitMQ Client Library and MongoDB’s MongoDB Go Driver for connectivity.

Source Code

All source code for this post is available on GitHub within two projects. Go-based microservices source code and Kubernetes resources are located in the k8s-istio-observe-backend project repository. The Angular UI TypeScript-based source code is located in the k8s-istio-observe-frontend project repository. You do not need to clone the Angular UI project for this demonstration. The demonstration uses the 2021-istio branch for both projects.

git clone --branch 2021-istio --single-branch \
  https://github.com/garystafford/k8s-istio-observe-backend.git

# optional - not needed for demonstration
git clone --branch 2021-istio --single-branch \
  https://github.com/garystafford/k8s-istio-observe-frontend.git

Docker images referenced in the Kubernetes Deployment resource files for the Go services and UI are all available on Docker Hub. The Go microservice Docker images were built using the official Golang Alpine image on Docker Hub, containing Go version 1.16.4. Using the Alpine image to compile the Go source code helps keep the containers small and minimizes their potential attack surface.

Prerequisites

This post assumes a basic level of knowledge of Amazon EKS, Kubernetes, and Istio. Furthermore, the post assumes you have already installed recent versions of the AWS CLI v2, kubectl, Weaveworks’ eksctl, Docker, and Istio, meaning that the aws, kubectl, eksctl, istioctl, and docker command-line tools are all available from the terminal.

CLI for Amazon EKS

Weaveworks’ eksctl is a simple CLI tool for creating and managing clusters on EKS — Amazon’s managed Kubernetes service for EC2. It is written in Go and uses CloudFormation.

CLI for Istio

The Istio configuration command-line utility, istioctl, is designed to help debug and diagnose the Istio mesh.

Set-up and Installation

To deploy the microservices platform to EKS, we will proceed in roughly the following order:

- Create a TLS certificate and Route53 hosted zone records for ALB;

- Create an Amazon DocumentDB database cluster;

- Create an Amazon MQ RabbitMQ message broker;

- Create an EKS cluster;

- Modify Kubernetes resources for your own environment;

- Deploy AWS Application Load Balancer (ALB) and associated resources;

- Deploy Istio to the EKS cluster;

- Deploy Fluent Bit to the EKS cluster;

- Deploy the reference platform to EKS;

- Test and troubleshoot the platform;

- Observe the results in part two.

Amazon DocumentDB

As previously mentioned, the MongoDB databases will be hosted, external to EKS, on Amazon DocumentDB, with MongoDB compatibility. Create a DocumentDB cluster. For the sake of simplicity and affordability of the demo, I recommend creating a single db.r5.large node cluster. We will connect from the microservices to Amazon DocumentDB using the supplied mongodb:// connection string.

Amazon DocumentDB clusters are deployed within an Amazon Virtual Private Cloud (Amazon VPC). If you are installing DocumentDB in a different VPC from EKS, you will need to ensure that the EKS VPC can access the DocumentDB VPC. Per the DocumentDB documentation, DocumentDB clusters can be accessed directly by Amazon EC2 instances or other AWS services that are deployed in the same Amazon VPC. Additionally, Amazon DocumentDB can be accessed via EC2 instances or other AWS services from different VPCs in the same AWS Region or other Regions via VPC peering.

Amazon MQ

Similarly, the RabbitMQ queues will be hosted, external to EKS, on Amazon MQ. Create an Amazon MQ RabbitMQ broker. To keep the demo simple and affordable, I recommend a single mq.m5.large instance broker. The broker runs the RabbitMQ engine and has TLS enabled. We will connect from the microservices to Amazon MQ using AMQP (Advanced Message Queuing Protocol). Amazon MQ provides an amqps:// endpoint; the amqps URI scheme instructs a client to make a secure (TLS) connection to the server. You can manage and observe RabbitMQ from the RabbitMQ web console provided by Amazon MQ.
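To make the connection string format concrete, here is a small Go sketch that pulls the scheme, host, and port out of an AMQP URI using only the standard library. The helper name `brokerEndpoint` and the placeholder hostname are illustrative assumptions; 5671 and 5672 are the conventional default ports for amqps and amqp respectively.

```go
package main

import (
	"fmt"
	"net/url"
)

// brokerEndpoint extracts the scheme, hostname, and port from an AMQP
// connection string, falling back to the conventional default port
// when the URI does not specify one.
func brokerEndpoint(conn string) (scheme, host, port string, err error) {
	u, err := url.Parse(conn)
	if err != nil {
		return "", "", "", err
	}
	if u.Scheme != "amqps" && u.Scheme != "amqp" {
		return "", "", "", fmt.Errorf("unexpected scheme %q", u.Scheme)
	}
	port = u.Port()
	if port == "" {
		if u.Scheme == "amqps" {
			port = "5671" // AMQP over TLS
		} else {
			port = "5672" // plain AMQP
		}
	}
	return u.Scheme, u.Hostname(), port, nil
}

func main() {
	// Placeholder credentials and hostname; substitute your broker's values.
	conn := "amqps://username:password@hostname.mq.us-east-1.amazonaws.com:5671/"
	scheme, host, port, err := brokerEndpoint(conn)
	if err != nil {
		panic(err)
	}
	fmt.Printf("scheme=%s host=%s port=%s\n", scheme, host, port)
}
```

The host and port recovered this way are the values that matter when allowing egress from the mesh to the broker.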

Modify Kubernetes Resources

You will need to change several configuration settings in the GitHub project’s Kubernetes resource files to match your environment.

Istio ServiceEntry for DocumentDB

Modify the Istio ServiceEntry resource, external-mesh-document-db.yaml, adding your DocumentDB host address. This file allows egress traffic from the microservices on EKS to the DocumentDB cluster.

apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: docdb-external-mesh
spec:
  hosts:
  - {{ your_document_db_hostname }}
  ports:
  - name: mongo
    number: 27017
    protocol: MONGO
  location: MESH_EXTERNAL
  resolution: NONE

Istio ServiceEntry for Amazon MQ

Modify the Istio ServiceEntry resource, external-mesh-amazon-mq.yaml, adding your Amazon MQ host address. This file allows egress traffic from the microservices on EKS to the Amazon MQ RabbitMQ broker.

apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: amazon-mq-external-mesh
spec:
  hosts:
  - {{ your_amazon_mq_hostname }}
  ports:
  - name: rabbitmq
    number: 5671
    protocol: TCP
  location: MESH_EXTERNAL
  resolution: NONE

Istio Gateway

There are numerous strategies you can use to route traffic into the EKS cluster via Istio. For this demonstration, I am using an AWS Application Load Balancer (ALB). I have mapped one hostname, observe-ui.example-api.com, to the Angular UI application running on EKS. The backend microservice-based API, specifically the edge service, Service A, is mapped to a second hostname, observe-api.example-api.com.

According to Istio, the Gateway describes a load balancer operating at the edge of the mesh, receiving incoming or outgoing HTTP/TCP connections. Modify the Istio Ingress Gateway resource, gateway.yaml. Insert your own DNS entries into the hosts section. These are the only hosts that will be allowed into the mesh on port 80.

apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: istio-gateway
spec:
  selector:
    istio: ingressgateway # use istio default controller
  servers:
  - port:
      number: 80
      name: ui
      protocol: HTTP
    hosts:
    - {{ your_ui_hostname }}
    - {{ your_api_hostname }}

Istio VirtualService

According to Istio, a VirtualService defines a set of traffic routing rules to apply when a host is addressed. A VirtualService is bound to a Gateway to control the forwarding of traffic arriving at a particular host and port. Modify the project’s two Istio VirtualService resources in virtualservices.yaml. Insert the corresponding DNS entries from the Istio Gateway.

---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: angular-ui
spec:
  hosts:
  - {{ your_ui_hostname }}
  gateways:
  - istio-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: angular-ui.dev.svc.cluster.local
        subset: v1
        port:
          number: 80
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: service-a
spec:
  hosts:
  - {{ your_api_hostname }}
  gateways:
  - istio-gateway
  http:
  - match:
    - uri:
        prefix: /api
    route:
    - destination:
        host: service-a.dev.svc.cluster.local
        subset: v1
        port:
          number: 8080

Kubernetes Secret

According to the Kubernetes project, Kubernetes Secrets let you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. Storing confidential information in a Secret is safer and more flexible than putting it verbatim in a Pod definition or in a container image.

The project contains a Kubernetes Opaque type Secret resource file, secrets.yaml. The Secrets contain several pieces of arbitrary user-defined data we want to secure. Data includes the full DocumentDB mongodb:// connection string and the Amazon MQ amqps:// connection string used by the microservices. We will use the Secret to secure the entire connection string, including the hostname, port, username, and password. The data also includes the DocumentDB host, username, and password, and an arbitrary username and password to log in to Mongo Express using Basic Authentication.

You must encode your secret’s values using base64. On Linux and Mac, you can use the base64 program to encode the connection strings.

echo -n '{{ your_secret_to_encode }}' | base64

# e.g., echo -n 'amqps://username:password@hostname.mq.us-east-1.amazonaws.com:5671/' | base64

Add the base64 encoded values to the Secret resource.

apiVersion: v1
kind: Secret
metadata:
  name: go-srv-config
  namespace: dev
type: Opaque
data:
  mongodb.conn: {{ your_base64_encoded_secret }}
  rabbitmq.conn: {{ your_base64_encoded_secret }}
---
apiVersion: v1
kind: Secret
metadata:
  name: mongo-express-config
  namespace: mongo-express
type: Opaque
data:
  me.basicauth.username: {{ your_base64_encoded_secret }}
  me.basicauth.password: {{ your_base64_encoded_secret }}
  mongodb.host: {{ your_base64_encoded_secret }}
  mongodb.username: {{ your_base64_encoded_secret }}
  mongodb.password: {{ your_base64_encoded_secret }}

AWS Load Balancer Controller

The project contains a Custom Resource Definition (CRD) and associated resources, aws-load-balancer-controller-v220-all.yaml. These resources configure the AWS Application Load Balancer (ALB) using the AWS Load Balancer Controller v2.2.0, aws-load-balancer-controller. The AWS Load Balancer Controller manages AWS Elastic Load Balancers (ELB) for a Kubernetes cluster. The controller provisions an AWS ALB when you create a Kubernetes Ingress.

Modify line 797 to include the name of your own cluster. I am using the cluster name istio-observe-demo throughout the demo.

spec:
  containers:
  - args:
    - --cluster-name=istio-observe-demo
    - --ingress-class=alb
    image: amazon/aws-alb-ingress-controller:v2.2.0
    livenessProbe:
      failureThreshold: 2
      httpGet:
        path: /healthz
        port: 61779
        scheme: HTTP

EKS Cluster Config

The project contains an eksctl ClusterConfig resource, cluster.yaml. The ClusterConfig defines the configuration of the Amazon EKS cluster along with networking, security, and other associated resources. Instead of a pre-existing Amazon Virtual Private Cloud (Amazon VPC) for this demo, eksctl will create a VPC and associated AWS resources as part of cluster creation. Modify the file to match your AWS Region, desired EKS cluster name, and Kubernetes release. For the demo, I am using the latest Kubernetes 1.20 release.

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: istio-observe-demo
  region: us-east-1
  version: "1.20"
iam:
  withOIDC: true

Set Environment Variables

Modify and set the following environment variables in your terminal. I will be using us-east-1 for all of the demonstration’s AWS resources. The values should match the eksctl ClusterConfig resource above.

export AWS_ACCOUNT=$(aws sts get-caller-identity --output text --query 'Account')

export EKS_REGION="us-east-1"

export CLUSTER_NAME="istio-observe-demo"

Istio Home

Set your ISTIO_HOME directory. I have the latest Istio 1.10.0 installed and have the ISTIO_HOME environment variable set in my Oh My Zsh .zshrc file. I have also added Istio’s bin/ subdirectory to my PATH environment variable. The bin/ subdirectory contains the istioctl executable.

echo $ISTIO_HOME

/Applications/Istio/istio-1.10.0

where istioctl

/Applications/Istio/istio-1.10.0/bin/istioctl

istioctl version

client version: 1.10.0

control plane version: 1.10.0

data plane version: 1.10.0 (4 proxies)

Create EKS Cluster

With the cluster.yaml file modified previously, deploy the EKS cluster to a new VPC on AWS.

eksctl create cluster -f ./resources/other/cluster.yaml

This step deploys a large number of resources using CloudFormation. The complete EKS provisioning process can take 15–20 minutes.

For the complete demonstration, eksctl will deploy a total of four CloudFormation stacks to your AWS environment.

Once complete, configure kubectl so that you can connect to an Amazon EKS cluster.

aws eks --region ${EKS_REGION} update-kubeconfig \
  --name ${CLUSTER_NAME}

Confirm that your cluster creation was successful with the following commands:

kubectl cluster-info

eksctl utils describe-stacks \
  --region ${EKS_REGION} --cluster ${CLUSTER_NAME}

Use the EKS Management Console to review the new cluster’s details.

The EKS cluster in this demonstration was created with a single Amazon EKS managed node group, managed-ng-1. The managed node group contains three m5.large EC2 instances. The composition of the EKS cluster can be modified in the eksctl ClusterConfig resource, cluster.yaml.

Deploy AWS Load Balancer Controller

Using the aws-load-balancer-controller-v220-all.yaml file you previously modified, deploy the AWS Load Balancer Controller v2.2.0. Please carefully review the AWS Load Balancer Controller instructions to understand how this resource is configured and integrated with EKS.

curl -o resources/aws/iam-policy.json \
  https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.2.0/docs/install/iam_policy.json

aws iam create-policy \
  --policy-name AWSLoadBalancerControllerIAMPolicy220 \
  --policy-document file://resources/aws/iam-policy.json

eksctl create iamserviceaccount \
  --region ${EKS_REGION} \
  --cluster ${CLUSTER_NAME} \
  --namespace=kube-system \
  --name=aws-load-balancer-controller \
  --attach-policy-arn=arn:aws:iam::${AWS_ACCOUNT}:policy/AWSLoadBalancerControllerIAMPolicy220 \
  --override-existing-serviceaccounts \
  --approve

kubectl apply --validate=false \
  -f https://github.com/jetstack/cert-manager/releases/download/v1.3.1/cert-manager.yaml

kubectl apply -f resources/other/aws-load-balancer-controller-v220-all.yaml

To confirm the aws-load-balancer-controller is deployed and ready, run the following command:

kubectl get deployment -n kube-system aws-load-balancer-controller

NAME READY UP-TO-DATE AVAILABLE AGE

aws-load-balancer-controller 1/1 1 1 55s

AWS Load Balancer Controller Policy

There is an OpenID Connect provider URL associated with the EKS cluster. To use IAM roles for service accounts, an IAM OIDC provider must exist for your cluster. Obtain the URL from the EKS Management Console’s Details tab.

You can also obtain the URL using the following AWS CLI commands:

aws eks describe-cluster --name ${CLUSTER_NAME}

aws iam list-open-id-connect-providers

The project contains a policy document, trust-eks-policy.json. Modify the policy document by adding the OpenID Connect information found above. Instructions are also included in the AWS Create an IAM OIDC provider for your cluster documentation.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "{{ your_openid_connect_arn }}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "oidc.eks.us-east-1.amazonaws.com/id/{{ your_open_id_connect_id }}:sub": "system:serviceaccount:kube-system:alb-ingress-controller"
        }
      }
    }
  ]
}
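The condition in this trust policy pairs two strings: a key derived from the cluster's OIDC issuer URL (the URL without its https:// prefix, followed by :sub) and a value identifying the Kubernetes service account. The Go sketch below shows how the two strings are assembled; the helper names and the issuer ID `EXAMPLE0123456789` are hypothetical placeholders.

```go
package main

import (
	"fmt"
	"strings"
)

// subConditionKey turns an OIDC issuer URL into the "<provider>:sub"
// condition key used in the trust policy.
func subConditionKey(issuerURL string) string {
	return strings.TrimPrefix(issuerURL, "https://") + ":sub"
}

// serviceAccountSubject builds the subject string for a Kubernetes
// service account in the given namespace.
func serviceAccountSubject(namespace, name string) string {
	return fmt.Sprintf("system:serviceaccount:%s:%s", namespace, name)
}

func main() {
	// Placeholder issuer URL; use the value reported for your cluster.
	issuer := "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE0123456789"

	fmt.Println(subConditionKey(issuer))
	fmt.Println(serviceAccountSubject("kube-system", "alb-ingress-controller"))
}
```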

Create and attach the AWS Load Balancer Controller IAM policies and roles.

aws iam create-role \
  --role-name eks-alb-ingress-controller-eks-istio-observe-demo \
  --assume-role-policy-document file://resources/aws/trust-eks-policy.json

aws iam attach-role-policy \
  --role-name eks-alb-ingress-controller-eks-istio-observe-demo \
  --policy-arn="arn:aws:iam::${AWS_ACCOUNT}:policy/AWSLoadBalancerControllerIAMPolicy220"

aws iam attach-role-policy \
  --role-name eks-alb-ingress-controller-eks-istio-observe-demo \
  --policy-arn arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy

aws iam attach-role-policy \
  --role-name eks-alb-ingress-controller-eks-istio-observe-demo \
  --policy-arn arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy

Create Namespaces

Kubernetes supports multiple virtual clusters backed by the same physical cluster. These virtual clusters are called namespaces. The dev namespace will house the reference application platform for this demonstration — the Angular UI frontend and Go microservices backend. This namespace represents a development environment on EKS for our reference application platform. A second namespace, mongo-express, will be used to deploy Mongo Express later in the post.

kubectl apply -f ./minikube/resources/namespaces.yaml

Enable Automatic Sidecar Injection

To take advantage of Istio’s features, pods in the mesh must be running an Istio sidecar proxy. When the istio-injection=enabled label is set on a namespace and the injection webhook is enabled, any new pods created in that namespace will automatically have an Istio sidecar proxy added to them. Labeling the dev namespace for automatic sidecar injection ensures that our reference application platform (the UI and the microservices) will have an Istio sidecar proxy automatically injected into its pods.

kubectl label namespace dev istio-injection=enabled

Deploy Secret Resources

Create the DocumentDB and Amazon MQ Secrets in the appropriate dev and mongo-express namespaces.

kubectl apply -f ./resources/secrets/secrets.yaml

Install Istio Configuration Profile

Istio comes with several built-in configuration profiles. The profiles provide customization of the Istio control plane and the sidecars for the Istio data plane.

istioctl profile list

Istio configuration profiles:

default

demo

empty

external

minimal

openshift

preview

remote

For this demonstration, use the demo profile, which installs Istio core, istiod, istio-ingressgateway, and istio-egressgateway.

istioctl install --set profile=demo -y

✔ Istio core installed

✔ Istiod installed

✔ Ingress gateways installed

✔ Egress gateways installed

✔ Installation complete

Deploy Istio Gateway, VirtualService, and DestinationRule Resources

An Istio Gateway describes a load balancer operating at the edge of the mesh receiving incoming or outgoing HTTP/TCP connections. An Istio VirtualService defines a set of traffic routing rules to apply when a host is addressed. Lastly, an Istio DestinationRule defines policies that apply to traffic intended for a Service after routing has occurred. You need to deploy an Istio Gateway, a set of VirtualService resources, and a set of DestinationRule resources. Create them from the project’s resource files, including the Gateway and VirtualService files you modified earlier.

kubectl apply -f resources/istio/gateway.yaml -n dev

kubectl apply -f resources/istio/virtualservices.yaml -n dev

kubectl apply -f resources/istio/destination-rules.yaml -n dev

Deploy Istio Telemetry Add-ons

The Istio project includes sample deployments of various telemetry add-ons that integrate with Istio. The add-ons include Jaeger, Zipkin, Kiali, Prometheus, and Grafana. While these applications are not a part of Istio, they are essential to making the most of Istio’s observability features. According to the Istio project, the deployments are meant to quickly get up and running and are optimized for this case. As a result, they may not be suitable for production. See the GitHub project for more info on integrating a production-grade version of each add-on.

Install the add-ons using the default configurations and then replace Prometheus with a modified version included in the project. The modified Kubernetes ConfigMap in the prometheus.yaml file has added configuration to scrape our reference platform’s /api/metrics endpoint.

kubectl apply -f $ISTIO_HOME/samples/addons

kubectl apply -f resources/istio/prometheus.yaml -n istio-system

You should see seven workloads in the istio-system namespace from the EKS Management Console’s Workloads tab, each with one pod up and running. The workloads include Grafana, Jaeger, Kiali, and Prometheus. Also included are the istiod, istio-ingressgateway, and istio-egressgateway workloads from the Istio demo configuration profile, installed previously.

Deploy Kubernetes Web UI (Dashboard)

Kubernetes Web UI (Dashboard) is a web-based Kubernetes user interface. You can use the Dashboard to deploy containerized applications to a Kubernetes cluster, troubleshoot your containerized application, and manage cluster resources. You can use the Dashboard to get an overview of applications running on your cluster, as well as for creating or modifying individual Kubernetes resources.

To deploy the dashboard, follow the steps outlined in the Tutorial: Deploy the Kubernetes Dashboard (web UI).

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.5/aio/deploy/recommended.yaml

kubectl apply -f resources/aws/eks-admin-service-account.yaml

Each Service Account has a Secret with a valid Bearer Token that can be used to log in to the Dashboard. Use the following command to retrieve the token associated with the eks-admin Account.

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep eks-admin | awk '{print $1}')

Start the kubectl proxy in a separate terminal window.

kubectl proxy

Use the eks-admin Account’s token to log in to the Kubernetes Dashboard at the following URL:

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#!/login

Deploy Mongo Express

Mongo Express is a web-based MongoDB administrative interface written with Node.js, Express, and Bootstrap3. Install Mongo Express into the mongo-express namespace on the EKS cluster to manage the DocumentDB cluster.

kubectl apply -f ./resources/services/mongo-express.yaml -n mongo-express

Obtain the external IP address of any of the Kubernetes worker nodes and the NodePort of Mongo Express with the following two commands:

kubectl get nodes -o wide | awk {'print $1" " $2 " " $7'} | column -t

kubectl get service/mongo-express -n mongo-express

To ensure secure access to Mongo Express, create an Inbound Rule in your VPC’s Security Group that allows only your IP address (the ‘My IP’ option) access to Mongo Express running on the NodePort obtained above.

Start the kubectl proxy in a separate terminal window.

kubectl proxy

Use the external IP address of any of the Kubernetes worker nodes and current NodePort to access Mongo Express. Mongo Express will require you to enter the username and password you encoded in the Kubernetes Secret created earlier using basic authentication. Once you have deployed the reference application platform, later in the post, you will observe four databases: service-c, service-f, service-g, and service-h. The typical operational databases you would normally see with your own MongoDB installation are unavailable in the UI since DocumentDB is a managed service.

Modify and Deploy the ALB Ingress

The project contains an ALB Ingress resource, alb-ingress.yaml. The AWS Load Balancer Controller installed earlier is configured to limit which Ingress resources it manages. Setting the --ingress-class=alb argument constrains the controller’s scope to Ingresses with a matching kubernetes.io/ingress.class: alb annotation. This is especially helpful when running multiple ingress controllers in the same cluster.

The ALB Ingress resource, alb-ingress.yaml, needs to be modified before deployment. First, update the alb.ingress.kubernetes.io/healthcheck-port annotation. The port value is derived from the status-port of the istio-ingressgateway, which was installed as part of the Istio demo configuration profile. To obtain the status-port from the istio-ingressgateway, run the following command:

kubectl -n istio-system get svc istio-ingressgateway \
  -o jsonpath='{.spec.ports[?(@.name=="status-port")].nodePort}'
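Writing the port into the manifest can also be scripted. The sketch below assumes the manifest still contains the literal placeholder '{{ your_status_port }}' that appears in the complete resource later in this post; the function name is hypothetical.

```shell
# Sketch: write the status-port NodePort into the healthcheck-port annotation.
# Assumption: the manifest still contains the literal placeholder
# '{{ your_status_port }}' in the healthcheck-port annotation.

set_healthcheck_port() {
  local manifest="$1" status_port="$2"
  # -i.bak edits in place on both GNU and BSD sed, leaving a .bak backup.
  sed -i.bak "s/{{ your_status_port }}/${status_port}/" "$manifest"
}

# Example, combined with the kubectl command above:
# STATUS_PORT=$(kubectl -n istio-system get svc istio-ingressgateway \
#   -o jsonpath='{.spec.ports[?(@.name=="status-port")].nodePort}')
# set_healthcheck_port resources/other/alb-ingress.yaml "$STATUS_PORT"
```

The backup file makes it easy to diff the change before deploying the resource.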

Next, insert the ARN of your SSL/TLS (Transport Layer Security) certificate that is associated with the domain listed in the external-dns.alpha.kubernetes.io/hostname annotation into the ALB Ingress resource, alb-ingress.yaml. Run the following command to insert the TLS certificate’s ARN into the alb.ingress.kubernetes.io/certificate-arn annotation. This command assumes that your SSL/TLS certificate is registered with AWS Certificate Manager (ACM).

export ALB_CERT=$(aws acm list-certificates --certificate-statuses ISSUED \
  | jq -r '.CertificateSummaryList[] | select(.DomainName=="*.example-api.com") | .CertificateArn')

yq e '.metadata.annotations."alb.ingress.kubernetes.io/certificate-arn" = env(ALB_CERT)' -i resources/other/alb-ingress.yaml

The alb.ingress.kubernetes.io/actions.ssl-redirect annotation will redirect all HTTP traffic to HTTPS. The TLS certificate is used for HTTPS traffic. The ALB terminates the HTTPS traffic and forwards the unencrypted traffic to the EKS cluster on port 80.

Finally, update the external-dns.alpha.kubernetes.io/hostname annotation with a comma-delimited list of your platform’s UI and API hostnames. Below is the complete ALB Ingress resource, alb-ingress.yaml.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress
  namespace: istio-system
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/tags: Environment=dev
    alb.ingress.kubernetes.io/healthcheck-port: '{{ your_status_port }}'
    alb.ingress.kubernetes.io/healthcheck-path: /healthz/ready
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
    alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
    external-dns.alpha.kubernetes.io/hostname: "{{ your_ui_hostname, your_api_hostname }}"
    alb.ingress.kubernetes.io/certificate-arn: "{{ your_ssl_tls_cert_arn }}"
    alb.ingress.kubernetes.io/load-balancer-attributes: routing.http2.enabled=true,idle_timeout.timeout_seconds=30
  labels:
    app: reference-app
spec:
  rules:
    - http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: ssl-redirect
                port:
                  name: use-annotation
          - pathType: Prefix
            path: /
            backend:
              service:
                name: istio-ingressgateway
                port:
                  number: 80
          - pathType: Prefix
            path: /api
            backend:
              service:
                name: istio-ingressgateway
                port:
                  number: 80

To deploy the ALB Ingress resource, alb-ingress.yaml, run the following command:

kubectl apply -f resources/other/alb-ingress.yaml

To confirm the configuration of the AWS Load Balancer Controller and the ingresses it controls, run the following command:

kubectl describe ingress.networking.k8s.io --all-namespaces

Any misconfigurations should show up as errors in the Events section.

Running the following command should display the public DNS address of the ALB associated with port 80.

kubectl -n istio-system get ingress

NAME           CLASS    HOSTS   ADDRESS                                                PORTS   AGE
demo-ingress   <none>   *       k8s-istiosys-demoingr-...us-east-1.elb.amazonaws.com   80      23m
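If you need the ALB’s address in a script, for example to test it with curl, the ADDRESS column can be extracted from that output. This is a minimal sketch that assumes the column layout shown above (NAME, CLASS, HOSTS, ADDRESS, PORTS, AGE); the function name is hypothetical.

```shell
# Sketch: pull the ADDRESS column for one ingress out of the tabular output.
# Assumption: the columns are NAME CLASS HOSTS ADDRESS PORTS AGE, as above.

ingress_address() {
  local ingress_name="$1"
  # Skip the header row, match on the NAME column, print the fourth column.
  awk -v name="$ingress_name" 'NR > 1 && $1 == name { print $4 }'
}

# Example:
# kubectl -n istio-system get ingress | ingress_address demo-ingress
```

Parsing columns is fragile if the output format changes. Reading the field directly with kubectl -n istio-system get ingress demo-ingress -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' is likely the more robust option.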

Use the EC2 Load Balancer Management Console to review the new ALB’s details.

Deploy Fluent Bit

According to a recent AWS Blog post, Fluent Bit Integration in CloudWatch Container Insights for EKS, Fluent Bit is an open-source, multi-platform log processor and forwarder that allows you to collect data and logs from different sources and unify and send them to different destinations, including CloudWatch Logs. Fluent Bit is also fully compatible with Docker and Kubernetes environments. Using the newly launched Fluent Bit DaemonSet, you can send container logs from your EKS clusters to CloudWatch Logs for log storage and analytics.

We will use Fluent Bit to send the reference platform’s logs to Amazon CloudWatch Container Insights. To install Fluent Bit, I have used the procedure outlined in the AWS documentation: Quick Start Setup for Container Insights on Amazon EKS and Kubernetes. I recommend reviewing this documentation for detailed installation instructions.

# Create the amazon-cloudwatch namespace
kubectl apply -f https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/daemonset/container-insights-monitoring/cloudwatch-namespace.yaml

# Create the ConfigMap that holds the cluster name, Region, and Fluent Bit settings
ClusterName=${CLUSTER_NAME}
RegionName=${EKS_REGION}
FluentBitHttpPort='2020'
FluentBitReadFromHead='Off'
[[ ${FluentBitReadFromHead} = 'On' ]] && FluentBitReadFromTail='Off' || FluentBitReadFromTail='On'
[[ -z ${FluentBitHttpPort} ]] && FluentBitHttpServer='Off' || FluentBitHttpServer='On'

kubectl create configmap fluent-bit-cluster-info \
  --from-literal=cluster.name=${ClusterName} \
  --from-literal=http.server=${FluentBitHttpServer} \
  --from-literal=http.port=${FluentBitHttpPort} \
  --from-literal=read.head=${FluentBitReadFromHead} \
  --from-literal=read.tail=${FluentBitReadFromTail} \
  --from-literal=logs.region=${RegionName} -n amazon-cloudwatch

# Deploy the Fluent Bit DaemonSet and confirm its pods are running
kubectl apply -f https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/daemonset/container-insights-monitoring/fluent-bit/fluent-bit.yaml

kubectl get pods -n amazon-cloudwatch

# Create a CloudWatch dashboard for Fluent Bit
DASHBOARD_NAME=istio_observe_demo
REGION_NAME=${EKS_REGION}
CLUSTER_NAME=${CLUSTER_NAME}

curl https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/service/cwagent-prometheus/sample_cloudwatch_dashboards/fluent-bit/cw_dashboard_fluent_bit.json \
  | sed "s/{{YOUR_AWS_REGION}}/${REGION_NAME}/g" \
  | sed "s/{{YOUR_CLUSTER_NAME}}/${CLUSTER_NAME}/g" \
  | xargs -0 aws cloudwatch put-dashboard --dashboard-name ${DASHBOARD_NAME} --dashboard-body

# Remove Fluentd and its ConfigMap, if they were previously installed
curl https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/daemonset/container-insights-monitoring/fluentd/fluentd.yaml | kubectl delete -f -

kubectl delete configmap cluster-info -n amazon-cloudwatch
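The two one-line conditionals in the commands above are easy to misread. As a sketch, here is the same logic written as explicit if/else statements. The variable names match the commands above; the two function names are hypothetical.

```shell
# Sketch: the two one-line conditionals above, written as explicit if/else.
# Variable names match the commands above; function names are hypothetical.

derive_read_from_tail() {
  # Reading from the head and reading from the tail are mutually exclusive.
  if [ "$1" = 'On' ]; then echo 'Off'; else echo 'On'; fi
}

derive_http_server() {
  # The HTTP server is enabled only when a port has been set.
  if [ -z "$1" ]; then echo 'Off'; else echo 'On'; fi
}

FluentBitHttpPort='2020'
FluentBitReadFromHead='Off'
FluentBitReadFromTail="$(derive_read_from_tail "$FluentBitReadFromHead")"
FluentBitHttpServer="$(derive_http_server "$FluentBitHttpPort")"

echo "read.tail=${FluentBitReadFromTail} http.server=${FluentBitHttpServer}"
# prints: read.tail=On http.server=On
```

With the values used in this post, Fluent Bit reads from the tail of each log file and exposes its HTTP server on port 2020.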

From the EKS Management Console’s Workloads tab, you should see three fluent-bit pods up and running in the amazon-cloudwatch namespace. There is one fluent-bit pod per EKS worker node.

Once the reference application platform is deployed and running, you should be able to visualize the application in the Amazon CloudWatch Container Insights console’s Map view.

The reference platform’s cluster logs will also be available in Amazon CloudWatch. You should have access to individual log groups for each of the application’s components.

Lastly, individual pod logs can also be viewed through the Kubernetes Dashboard. Each microservice’s log verbosity level is set to info by default. This level can be changed using the LOG_LEVEL environment variable in the service’s Kubernetes Deployment resource.

Deploy ServiceEntry Resources

Using Istio ServiceEntry configurations, you can reach any publicly accessible service from within your Istio cluster. The Istio proxy can be configured to block any host without an HTTP service or service entry defined within the mesh. We will not go to this extreme in the demonstration. However, we will create ServiceEntry resources to monitor egress traffic to the reference platform’s two external services, DocumentDB and Amazon MQ.

Confirm the istio-egressgateway is running, then deploy the two ServiceEntry resources you modified earlier.

kubectl get pod -l istio=egressgateway -n istio-system

NAME READY STATUS RESTARTS AGE

istio-egressgateway-585f7668fc-74qtf 1/1 Running 0 14h

kubectl apply -f resources/istio/external-mesh-document-db-internal.yaml

kubectl apply -f resources/istio/external-mesh-amazon-mq-internal.yaml

Deploy the Reference Application Platform

Each of the platform’s components has a file in the project containing both the Kubernetes Service and corresponding Deployment resources.

Deploy the reference application platform’s frontend UI and eight backend microservices to the EKS cluster using the following commands:

kubectl apply -f ./resources/services/angular-ui.yaml -n dev

for service in a b c d e f g h; do
  kubectl apply -f "./resources/services/service-$service.yaml" -n dev
done
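To block until the pods are ready rather than checking the console, you could wait on each Deployment’s rollout. This is a minimal sketch that assumes the Deployment names mirror the manifest file names (angular-ui, service-a through service-h), which you can confirm with kubectl get deployments -n dev. The function name and the KUBECTL override variable are hypothetical.

```shell
# Sketch: wait for each Deployment in a namespace to finish rolling out.
# Assumption: Deployment names mirror the manifest file names; confirm
# them with `kubectl get deployments -n dev` before relying on this.

# Set KUBECTL=echo to print the commands instead of running them (dry run).
KUBECTL="${KUBECTL:-kubectl}"

wait_for_rollouts() {
  local namespace="$1"
  shift
  local name
  for name in "$@"; do
    # Stop at the first Deployment that fails to become ready in time.
    $KUBECTL rollout status "deployment/${name}" -n "$namespace" --timeout=180s || return 1
  done
}

# Example:
# wait_for_rollouts dev angular-ui service-a service-b service-c service-d \
#   service-e service-f service-g service-h
```

The 180-second timeout is an arbitrary choice; adjust it to how long your images take to pull and start.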

From the EKS Management Console’s Workloads tab, you should observe that the three pods for each reference application platform component are up and running in the dev namespace.

You can also use the Kubernetes Dashboard to confirm that the deployments were successful to the dev namespace.

Test the Platform

You want to ensure the platform’s web-based UI is reachable from the AWS Application Load Balancer, through Istio, at the UI’s FQDN (fully qualified domain name) within the cluster, angular-ui.dev.svc.cluster.local. You also want to ensure the platform’s eight microservices are communicating with each other and with the external DocumentDB cluster and Amazon MQ RabbitMQ broker. The easiest way to test the platform is by viewing the Angular UI in a web browser. For example, in my case, https://observe-ui.example-api.com.

The UI requires you to input the hostname of the backend, which is the edge service, Service A. For example, in my case, https://observe-api.example-api.com. Because the UI’s JavaScript code runs locally in your web browser, this option lets you supply your own hostname. This is the same hostname you inserted into the Istio VirtualService for Service A. This hostname routes the API calls to the FQDN of Service A running in the dev namespace, service-a.dev.svc.cluster.local. You should observe seven greeting responses displayed in the UI, all but Service F.

You can also use tools like Postman to test the backend directly, using the same hostname of the backend, as above.

Load Testing with Hey

You can also use performance testing tools to load-test the platform. Many issues will not show up until the platform is placed under elevated load. I recently tried hey, a modern Go-based load generator tool, as a replacement for Apache Bench (ab). Unlike ab, hey supports HTTP/2 endpoints, which is required to test the platform on EKS with Istio. You can install hey with Homebrew.

brew install hey

Using hey, you can test the reference application platform by hitting the API hostname and /api/greeting endpoint. The command below generates 1,000 requests, simulates 25 concurrent users, and uses HTTP/2. Traffic will be generated across all the services, the RabbitMQ broker, and the DocumentDB databases.

hey -n 1000 -c 25 -h2 {{ your_api_hostname }}/api/greeting

The results show 1,000 successful HTTP 200 responses from the reference platform’s API in about 43 seconds with an average response time of 1.0430 seconds.

To generate a consistent level of traffic over a longer period of time, try this variation of the command:

hey -n 25000 -c 25 -q 1 -h2 {{ your_api_hostname }}/api/greeting

This command generates a steady stream of traffic for about 18 minutes, making it more convenient when exploring and troubleshooting your observability tools.
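The run times of both hey commands can be sanity-checked with some quick arithmetic. The sketch below uses only numbers from this post; the function names are hypothetical, and the formulas are rough estimates that ignore connection setup and latency variance. It relies on hey’s -q flag being a rate limit per worker.

```shell
# Sketch: rough run-time estimates for the two hey commands above.
# Function names are hypothetical; the input numbers come from the post.

# Lower bound when hey is rate limited per worker:
# requests / (workers * queries_per_second_per_worker)
min_seconds_rate_limited() {
  awk -v n="$1" -v c="$2" -v q="$3" 'BEGIN { printf "%.0f\n", n / (c * q) }'
}

# Estimate when each worker sends its requests back to back:
# requests * average_latency_seconds / workers
seconds_from_latency() {
  awk -v n="$1" -v c="$2" -v t="$3" 'BEGIN { printf "%.1f\n", n * t / c }'
}

seconds_from_latency 1000 25 1.0430    # first command, prints 41.7
min_seconds_rate_limited 25000 25 1    # second command, prints 1000
```

The first estimate, 41.7 seconds, is close to the roughly 43 seconds reported above. The second, 1,000 seconds or about 16.7 minutes, is a floor: with responses averaging slightly more than one second, each worker falls a little short of one request per second, which is consistent with the observed run time of about 18 minutes.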

Part Two

In part two of this post, we will explore each observability tool and see how they can help us manage the reference application platform running on the EKS cluster.

To tear down the EKS cluster, DocumentDB cluster, and Amazon MQ broker, use the commands below.

# EKS cluster

eksctl delete cluster --name $CLUSTER_NAME

# Amazon MQ

aws mq list-brokers | jq -r '.BrokerSummaries[] | .BrokerId'

aws mq delete-broker --broker-id {{ your_broker_id }}

# DocumentDB

# List cluster identifiers (delete-db-cluster expects the identifier, not the resource ID)
aws docdb describe-db-clusters \
  | jq -r '.DBClusters[] | .DBClusterIdentifier'

aws docdb delete-db-cluster \
  --db-cluster-identifier {{ your_cluster_id }}

This blog represents my own viewpoints and not of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners.