Object Tracking on the Raspberry Pi with C++, OpenCV, and cvBlob

Posted by Gary A. Stafford in Bash Scripting, C++ Development, Raspberry Pi on February 9, 2013

Use C++ with OpenCV and cvBlob to perform image processing and object tracking on the Raspberry Pi, using a webcam.

Source code and compiled samples are now available on GitHub. The below post describes the original code on the ‘Master’ branch. As of May 2014, there is a revised and improved version of the project on the ‘rev05_2014’ branch, on GitHub. The README.md details the changes and also describes how to install OpenCV, cvBlob, and all dependencies!

Introduction

As part of a project with a local FIRST Robotics Competition (FRC) Team, I’ve been involved in developing a Computer Vision application for use on the Raspberry Pi. Our FRC team’s goal is to develop an object tracking and target acquisition application that could be run on the Raspberry Pi, as opposed to the robot’s primary embedded processor, a National Instruments NI cRIO-FRC II. We chose to work in C++ for its speed. We also decided to test two popular open-source Computer Vision (CV) libraries, OpenCV and cvBlob.

Because of its single 700 MHz ARM1176JZF-S processor, the Raspberry Pi has a limited ability to perform complex operations, such as image processing, in real time. In an earlier post, I discussed using Motion to detect motion with a webcam on the Raspberry Pi. Although the Raspberry Pi was capable of running Motion, it required a greatly reduced capture size and frame-rate. Even then, the Raspberry Pi was very slow to process the webcam’s feed. I had doubts it would be able to meet the processor-intensive requirements of this project.

Development for the Raspberry Pi

Using C++ in NetBeans 7.2.1 on Ubuntu 12.04.1 LTS and 12.10, I wrote several small pieces of code to demonstrate the Raspberry Pi’s ability to perform basic image processing and object tracking. Parts of the following code are based on several OpenCV and cvBlob code examples found in my research. Many of those examples are linked at the end of this article. Examples of cvBlob are especially hard to find.

The Code

There are five files: ‘main.cpp’, ‘testfps.cpp’ (with ‘testfps.hpp’), and ‘testcvblob.cpp’ (with ‘testcvblob.hpp’). The main.cpp file’s main method calls the test methods in the other two source files. The cvBlob library only works with the pre-OpenCV 2.0 API. Therefore, I wrote all the code using the older objects and methods, not the latest OpenCV 2.0 conventions. For example, cvBlob uses 1.0’s ‘IplImage’ image type instead of 2.0’s newer ‘cv::Mat’ image type. My next project is to rewrite the cvBlob code to use OpenCV 2.0 conventions and/or find a newer library. The cvBlob library offered so many advantages that I felt giving up the newer OpenCV 2.0 features was still worthwhile.

Main Program Method (main.cpp)

| /* | |

| * File: main.cpp | |

| * Author: Gary Stafford | |

| * Description: Program entry point | |

| * Created: February 3, 2013 | |

| */ | |

| #include <stdio.h> | |

| #include <sstream> | |

| #include <stdlib.h> | |

| #include <iostream> | |

| #include "testfps.hpp" | |

| #include "testcvblob.hpp" | |

| using namespace std; | |

| int main(int argc, char* argv[]) { | |

| int captureMethod = 0; | |

| int captureWidth = 0; | |

| int captureHeight = 0; | |

| if (argc == 4) { // user input parameters with call | |

| captureMethod = strtol(argv[1], NULL, 0); | |

| captureWidth = strtol(argv[2], NULL, 0); | |

| captureHeight = strtol(argv[3], NULL, 0); | |

| } else { // user did not input parameters with call | |

| cout << endl << "Demonstrations/Tests: " << endl; | |

| cout << endl << "(1) Test OpenCV - Show Webcam" << endl; | |

| cout << endl << "(2) Test OpenCV - No Webcam" << endl; | |

| cout << endl << "(3) Test cvBlob - Show Image" << endl; | |

| cout << endl << "(4) Test cvBlob - No Image" << endl; | |

| cout << endl << "(5) Test Blob Tracking - Show Webcam" << endl; | |

| cout << endl << "(6) Test Blob Tracking - No Webcam" << endl; | |

| cout << endl << "Input test # (1-6): "; | |

| cin >> captureMethod; | |

| // test 3 and 4 don't require width and height parameters | |

| if (captureMethod != 3 && captureMethod != 4) { | |

| cout << endl << "Input capture width (pixels): "; | |

| cin >> captureWidth; | |

| cout << endl << "Input capture height (pixels): "; | |

| cin >> captureHeight; | |

| cout << endl; | |

| if (captureWidth <= 0) { | |

| cout << endl << "Width value incorrect" << endl; | |

| return -1; | |

| } | |

| if (captureHeight <= 0) { | |

| cout << endl << "Height value incorrect" << endl; | |

| return -1; | |

| } | |

| } | |

| } | |

| switch (captureMethod) { | |

| case 1: | |

| TestFpsShowVideo(captureWidth, captureHeight); | |

| break; | |

| case 2: | |

| TestFpsNoVideo(captureWidth, captureHeight); | |

| break; | |

| case 3: | |

| DetectBlobsShowStillImage(); | |

| break; | |

| case 4: | |

| DetectBlobsNoStillImage(); | |

| break; | |

| case 5: | |

| DetectBlobsShowVideo(captureWidth, captureHeight); | |

| break; | |

| case 6: | |

| DetectBlobsNoVideo(captureWidth, captureHeight); | |

| break; | |

| default: | |

| break; | |

| } | |

| return 0; | |

| } |

Tests 3-6 (testcvblob.hpp)

| // -*- C++ -*- | |

| /* | |

| * File: testcvblob.hpp | |

| * Author: Gary Stafford | |

| * Created: February 3, 2013 | |

| */ | |

| #ifndef TESTCVBLOB_HPP | |

| #define TESTCVBLOB_HPP | |

| int DetectBlobsNoStillImage(); | |

| int DetectBlobsShowStillImage(); | |

| int DetectBlobsNoVideo(int captureWidth, int captureHeight); | |

| int DetectBlobsShowVideo(int captureWidth, int captureHeight); | |

| #endif /* TESTCVBLOB_HPP */ |

Tests 3-6 (testcvblob.cpp)

| /* | |

| * File: testcvblob.cpp | |

| * Author: Gary Stafford | |

| * Description: Track blobs using OpenCV and cvBlob | |

| * Created: February 3, 2013 | |

| */ | |

| #include <iostream> | |

| #include <cv.h> | |

| #include <highgui.h> | |

| #include <cvblob.h> | |

| #include "testcvblob.hpp" | |

| using namespace cvb; | |

| using namespace std; | |

| // Test 4: OpenCV and cvBlob (static image, w/o image display) | |

| int DetectBlobsNoStillImage() { | |

| /// Variables ///////////////////////////////////////////////////////// | |

| CvSize imgSize; | |

| IplImage *image, *segmentated, *labelImg; | |

| CvBlobs blobs; | |

| unsigned int result = 0; | |

| /////////////////////////////////////////////////////////////////////// | |

| image = cvLoadImage("colored_balls.jpg"); | |

| if (image == NULL) { | |

| return -1; | |

| } | |

| imgSize = cvGetSize(image); | |

| cout << endl << "Width (pixels): " << image->width; | |

| cout << endl << "Height (pixels): " << image->height; | |

| cout << endl << "Channels: " << image->nChannels; | |

| cout << endl << "Bit Depth: " << image->depth; | |

| cout << endl << "Image Data Size (kB): " | |

| << image->imageSize / 1024 << endl << endl; | |

| segmentated = cvCreateImage(imgSize, 8, 1); | |

| cvInRangeS(image, CV_RGB(155, 0, 0), CV_RGB(255, 130, 130), segmentated); | |

| labelImg = cvCreateImage(cvGetSize(image), IPL_DEPTH_LABEL, 1); | |

| result = cvLabel(segmentated, labelImg, blobs); | |

| cvFilterByArea(blobs, 500, 1000000); | |

| cout << endl << "Blob Count: " << blobs.size(); | |

| cout << endl << "Pixels Labeled: " << result << endl << endl; | |

| cvReleaseBlobs(blobs); | |

| cvReleaseImage(&labelImg); | |

| cvReleaseImage(&segmentated); | |

| cvReleaseImage(&image); | |

| return 0; | |

| } | |

| // Test 3: OpenCV and cvBlob (static image, w/ image display) | |

| int DetectBlobsShowStillImage() { | |

| /// Variables ///////////////////////////////////////////////////////// | |

| CvSize imgSize; | |

| IplImage *image, *frame, *segmentated, *labelImg; | |

| CvBlobs blobs; | |

| unsigned int result = 0; | |

| bool quit = false; | |

| /////////////////////////////////////////////////////////////////////// | |

| cvNamedWindow("Processed Image", CV_WINDOW_AUTOSIZE); | |

| cvMoveWindow("Processed Image", 750, 100); | |

| cvNamedWindow("Image", CV_WINDOW_AUTOSIZE); | |

| cvMoveWindow("Image", 100, 100); | |

| image = cvLoadImage("colored_balls.jpg"); | |

| if (image == NULL) { | |

| return -1; | |

| } | |

| imgSize = cvGetSize(image); | |

| cout << endl << "Width (pixels): " << image->width; | |

| cout << endl << "Height (pixels): " << image->height; | |

| cout << endl << "Channels: " << image->nChannels; | |

| cout << endl << "Bit Depth: " << image->depth; | |

| cout << endl << "Image Data Size (kB): " | |

| << image->imageSize / 1024 << endl << endl; | |

| frame = cvCreateImage(imgSize, image->depth, image->nChannels); | |

| cvConvertScale(image, frame, 1, 0); | |

| segmentated = cvCreateImage(imgSize, 8, 1); | |

| cvInRangeS(image, CV_RGB(155, 0, 0), CV_RGB(255, 130, 130), segmentated); | |

| cvSmooth(segmentated, segmentated, CV_MEDIAN, 7, 7); | |

| labelImg = cvCreateImage(cvGetSize(frame), IPL_DEPTH_LABEL, 1); | |

| result = cvLabel(segmentated, labelImg, blobs); | |

| cvFilterByArea(blobs, 500, 1000000); | |

| cvRenderBlobs(labelImg, blobs, frame, frame, | |

| CV_BLOB_RENDER_BOUNDING_BOX | CV_BLOB_RENDER_TO_STD, 1.); | |

| cvShowImage("Image", frame); | |

| cvShowImage("Processed Image", segmentated); | |

| while (!quit) { | |

| char k = cvWaitKey(10)&0xff; | |

| switch (k) { | |

| case 27: | |

| case 'q': | |

| case 'Q': | |

| quit = true; | |

| break; | |

| } | |

| } | |

| cvReleaseBlobs(blobs); | |

| cvReleaseImage(&labelImg); | |

| cvReleaseImage(&segmentated); | |

| cvReleaseImage(&frame); | |

| cvReleaseImage(&image); | |

| cvDestroyAllWindows(); | |

| return 0; | |

| } | |

| // Test 6: Blob Tracking (w/o webcam preview) | |

| int DetectBlobsNoVideo(int captureWidth, int captureHeight) { | |

| /// Variables ///////////////////////////////////////////////////////// | |

| CvCapture *capture; | |

| CvSize imgSize; | |

| IplImage *image, *frame, *segmentated, *labelImg; | |

| int picWidth, picHeight; | |

| CvTracks tracks; | |

| CvBlobs blobs; | |

| CvBlob* blob; | |

| unsigned int result = 0; | |

| bool quit = false; | |

| /////////////////////////////////////////////////////////////////////// | |

| capture = cvCaptureFromCAM(-1); | |

| cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_WIDTH, captureWidth); | |

| cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_HEIGHT, captureHeight); | |

| cvGrabFrame(capture); | |

| image = cvRetrieveFrame(capture); | |

| if (image == NULL) { | |

| return -1; | |

| } | |

| imgSize = cvGetSize(image); | |

| cout << endl << "Width (pixels): " << image->width; | |

| cout << endl << "Height (pixels): " << image->height << endl << endl; | |

| frame = cvCreateImage(imgSize, image->depth, image->nChannels); | |

| while (!quit && cvGrabFrame(capture)) { | |

| image = cvRetrieveFrame(capture); | |

| cvConvertScale(image, frame, 1, 0); | |

| segmentated = cvCreateImage(imgSize, 8, 1); | |

| cvInRangeS(image, CV_RGB(155, 0, 0), CV_RGB(255, 130, 130), segmentated); | |

| //Can experiment either or both | |

| cvSmooth(segmentated, segmentated, CV_MEDIAN, 7, 7); | |

| cvSmooth(segmentated, segmentated, CV_GAUSSIAN, 9, 9); | |

| labelImg = cvCreateImage(cvGetSize(frame), IPL_DEPTH_LABEL, 1); | |

| result = cvLabel(segmentated, labelImg, blobs); | |

| cvFilterByArea(blobs, 500, 1000000); | |

| cvRenderBlobs(labelImg, blobs, frame, frame, 0x000f, 1.); | |

| cvUpdateTracks(blobs, tracks, 200., 5); | |

| cvRenderTracks(tracks, frame, frame, 0x000f, NULL); | |

| picWidth = frame->width; | |

| picHeight = frame->height; | |

| if (cvGreaterBlob(blobs)) { | |

| blob = blobs[cvGreaterBlob(blobs)]; | |

| cout << "Blobs found: " << blobs.size() << endl; | |

| cout << "Pixels labeled: " << result << endl; | |

| cout << "center-x: " << blob->centroid.x | |

| << " center-y: " << blob->centroid.y | |

| << endl; | |

| cout << "offset-x: " << ((picWidth / 2)-(blob->centroid.x)) | |

| << " offset-y: " << (picHeight / 2)-(blob->centroid.y) | |

| << endl; | |

| cout << "\n"; | |

| } | |

| char k = cvWaitKey(10)&0xff; | |

| switch (k) { | |

| case 27: | |

| case 'q': | |

| case 'Q': | |

| quit = true; | |

| break; | |

| } | |

| // Release the per-frame images so they are not leaked on every pass | |

| cvReleaseImage(&labelImg); | |

| cvReleaseImage(&segmentated); | |

| } | |

| cvReleaseBlobs(blobs); | |

| cvReleaseImage(&labelImg); | |

| cvReleaseImage(&segmentated); | |

| cvReleaseImage(&frame); | |

| // image is owned by the capture and must not be released here | |

| cvDestroyAllWindows(); | |

| cvReleaseCapture(&capture); | |

| return 0; | |

| } | |

| // Test 5: Blob Tracking (w/ webcam preview) | |

| int DetectBlobsShowVideo(int captureWidth, int captureHeight) { | |

| /// Variables ///////////////////////////////////////////////////////// | |

| CvCapture *capture; | |

| CvSize imgSize; | |

| IplImage *image, *frame, *segmentated, *labelImg; | |

| CvPoint pt1, pt2, pt3, pt4, pt5, pt6; | |

| CvScalar red, green, blue; | |

| int picWidth, picHeight, thickness; | |

| CvTracks tracks; | |

| CvBlobs blobs; | |

| CvBlob* blob; | |

| unsigned int result = 0; | |

| bool quit = false; | |

| /////////////////////////////////////////////////////////////////////// | |

| cvNamedWindow("Processed Video Frames", CV_WINDOW_AUTOSIZE); | |

| cvMoveWindow("Processed Video Frames", 750, 400); | |

| cvNamedWindow("Webcam Preview", CV_WINDOW_AUTOSIZE); | |

| cvMoveWindow("Webcam Preview", 200, 100); | |

| capture = cvCaptureFromCAM(1); | |

| cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_WIDTH, captureWidth); | |

| cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_HEIGHT, captureHeight); | |

| cvGrabFrame(capture); | |

| image = cvRetrieveFrame(capture); | |

| if (image == NULL) { | |

| return -1; | |

| } | |

| imgSize = cvGetSize(image); | |

| cout << endl << "Width (pixels): " << image->width; | |

| cout << endl << "Height (pixels): " << image->height << endl << endl; | |

| frame = cvCreateImage(imgSize, image->depth, image->nChannels); | |

| while (!quit && cvGrabFrame(capture)) { | |

| image = cvRetrieveFrame(capture); | |

| cvFlip(image, image, 1); | |

| cvConvertScale(image, frame, 1, 0); | |

| segmentated = cvCreateImage(imgSize, 8, 1); | |

| //Blue paper | |

| cvInRangeS(image, CV_RGB(49, 69, 100), CV_RGB(134, 163, 216), segmentated); | |

| //Green paper | |

| //cvInRangeS(image, CV_RGB(45, 92, 76), CV_RGB(70, 155, 124), segmentated); | |

| //Can experiment either or both | |

| cvSmooth(segmentated, segmentated, CV_MEDIAN, 7, 7); | |

| cvSmooth(segmentated, segmentated, CV_GAUSSIAN, 9, 9); | |

| labelImg = cvCreateImage(cvGetSize(frame), IPL_DEPTH_LABEL, 1); | |

| result = cvLabel(segmentated, labelImg, blobs); | |

| cvFilterByArea(blobs, 500, 1000000); | |

| cvRenderBlobs(labelImg, blobs, frame, frame, CV_BLOB_RENDER_COLOR, 0.5); | |

| cvUpdateTracks(blobs, tracks, 200., 5); | |

| cvRenderTracks(tracks, frame, frame, CV_TRACK_RENDER_BOUNDING_BOX, NULL); | |

| red = CV_RGB(250, 0, 0); | |

| green = CV_RGB(0, 250, 0); | |

| blue = CV_RGB(0, 0, 250); | |

| thickness = 1; | |

| picWidth = frame->width; | |

| picHeight = frame->height; | |

| pt1 = cvPoint(picWidth / 2, 0); | |

| pt2 = cvPoint(picWidth / 2, picHeight); | |

| cvLine(frame, pt1, pt2, red, thickness); | |

| pt3 = cvPoint(0, picHeight / 2); | |

| pt4 = cvPoint(picWidth, picHeight / 2); | |

| cvLine(frame, pt3, pt4, red, thickness); | |

| cvShowImage("Webcam Preview", frame); | |

| cvShowImage("Processed Video Frames", segmentated); | |

| if (cvGreaterBlob(blobs)) { | |

| blob = blobs[cvGreaterBlob(blobs)]; | |

| pt5 = cvPoint(picWidth / 2, picHeight / 2); | |

| pt6 = cvPoint(blob->centroid.x, blob->centroid.y); | |

| cvLine(frame, pt5, pt6, green, thickness); | |

| cvCircle(frame, pt6, 3, green, CV_FILLED, 8, 0); | |

| cvShowImage("Webcam Preview", frame); | |

| cvShowImage("Processed Video Frames", segmentated); | |

| cout << "Blobs found: " << blobs.size() << endl; | |

| cout << "Pixels labeled: " << result << endl; | |

| cout << "center-x: " << blob->centroid.x | |

| << " center-y: " << blob->centroid.y | |

| << endl; | |

| cout << "offset-x: " << ((picWidth / 2)-(blob->centroid.x)) | |

| << " offset-y: " << (picHeight / 2)-(blob->centroid.y) | |

| << endl; | |

| cout << "\n"; | |

| } | |

| char k = cvWaitKey(10)&0xff; | |

| switch (k) { | |

| case 27: | |

| case 'q': | |

| case 'Q': | |

| quit = true; | |

| break; | |

| } | |

| // Release the per-frame images so they are not leaked on every pass | |

| cvReleaseImage(&labelImg); | |

| cvReleaseImage(&segmentated); | |

| } | |

| cvReleaseBlobs(blobs); | |

| cvReleaseImage(&labelImg); | |

| cvReleaseImage(&segmentated); | |

| cvReleaseImage(&frame); | |

| // image is owned by the capture and must not be released here | |

| cvDestroyAllWindows(); | |

| cvReleaseCapture(&capture); | |

| return 0; | |

| } |
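
The listing above relies on cvBlob’s ‘cvLabel’, ‘cvFilterByArea’, and ‘cvGreaterBlob’ to find blobs and pick the largest one. As a rough, library-free illustration of what those steps compute, here is a minimal sketch that labels connected regions in a binary mask with a flood fill, filters them by area, and reports each region’s centroid. It is not cvBlob’s actual algorithm. The names ‘Blob’, ‘findBlobs’, and ‘largestBlob’ are hypothetical, and 4-connectivity is a simplifying assumption.

```cpp
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-in for a cvBlob blob: pixel count and centroid.
struct Blob {
    std::size_t area;
    double cx;
    double cy;
};

// Label 4-connected regions of non-zero pixels in a row-major binary mask.
// Returns one Blob per region whose area lies within [minArea, maxArea].
std::vector<Blob> findBlobs(const std::vector<unsigned char>& mask,
                            int width, int height,
                            std::size_t minArea, std::size_t maxArea) {
    std::vector<Blob> blobs;
    std::vector<bool> visited(mask.size(), false);
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t start = static_cast<std::size_t>(y) * width + x;
            if (mask[start] == 0 || visited[start]) continue;

            // Breadth-first flood fill over this region.
            std::size_t area = 0;
            double sumX = 0.0, sumY = 0.0;
            std::queue<std::pair<int, int> > pending;
            pending.push(std::make_pair(x, y));
            visited[start] = true;
            while (!pending.empty()) {
                const std::pair<int, int> p = pending.front();
                pending.pop();
                ++area;
                sumX += p.first;
                sumY += p.second;
                for (int i = 0; i < 4; ++i) {
                    const int nx = p.first + dx[i];
                    const int ny = p.second + dy[i];
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    const std::size_t n = static_cast<std::size_t>(ny) * width + nx;
                    if (mask[n] != 0 && !visited[n]) {
                        visited[n] = true;
                        pending.push(std::make_pair(nx, ny));
                    }
                }
            }

            // Keep the region only if it passes the area filter.
            if (area >= minArea && area <= maxArea) {
                Blob blob;
                blob.area = area;
                blob.cx = sumX / area;
                blob.cy = sumY / area;
                blobs.push_back(blob);
            }
        }
    }
    return blobs;
}

// Index of the blob with the largest area, or -1 if there are none.
int largestBlob(const std::vector<Blob>& blobs) {
    int best = -1;
    for (std::size_t i = 0; i < blobs.size(); ++i) {
        if (best < 0 || blobs[i].area > blobs[best].area) {
            best = static_cast<int>(i);
        }
    }
    return best;
}
```

In the listing, the mask corresponds to the ‘segmentated’ image produced by ‘cvInRangeS’, and the area limits correspond to the 500 and 1000000 passed to ‘cvFilterByArea’.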

Tests 1-2 (testfps.hpp)

// -*- C++ -*-

/*

* File: testfps.hpp

* Author: Gary Stafford

* Created: February 3, 2013

*/

#ifndef TESTFPS_HPP

#define TESTFPS_HPP

int TestFpsNoVideo(int captureWidth, int captureHeight);

int TestFpsShowVideo(int captureWidth, int captureHeight);

#endif /* TESTFPS_HPP */

Tests 1-2 (testfps.cpp)

/*

* File: testfps.cpp

* Author: Gary Stafford

* Description: Test the fps of a webcam using OpenCV

* Created: February 3, 2013

*/

#include <cv.h>

#include <highgui.h>

#include <time.h>

#include <stdio.h>

#include "testfps.hpp"

using namespace std;

// Test 2: OpenCV (w/o webcam preview)

int TestFpsNoVideo(int captureWidth, int captureHeight) {

IplImage* frame;

CvCapture* capture = cvCreateCameraCapture(-1);

cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_WIDTH, captureWidth);

cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_HEIGHT, captureHeight);

time_t start, end;

double fps, sec;

int counter = 0;

char k;

time(&start);

while (1) {

frame = cvQueryFrame(capture);

time(&end);

++counter;

sec = difftime(end, start);

fps = (sec > 0) ? counter / sec : 0.0; // time() has 1-second resolution

printf("FPS = %.2f\n", fps);

if (!frame) {

printf("Error");

break;

}

k = cvWaitKey(10)&0xff;

// Leave the capture loop on Esc, 'q', or 'Q'

if (k == 27 || k == 'q' || k == 'Q') {

break;

}

}

cvReleaseCapture(&capture);

return 0;

}

// Test 1: OpenCV (w/ webcam preview)

int TestFpsShowVideo(int captureWidth, int captureHeight) {

IplImage* frame;

CvCapture* capture = cvCreateCameraCapture(-1);

cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_WIDTH, captureWidth);

cvSetCaptureProperty(capture, CV_CAP_PROP_FRAME_HEIGHT, captureHeight);

cvNamedWindow("Webcam Preview", CV_WINDOW_AUTOSIZE);

cvMoveWindow("Webcam Preview", 300, 200);

time_t start, end;

double fps, sec;

int counter = 0;

char k;

time(&start);

while (1) {

frame = cvQueryFrame(capture);

time(&end);

++counter;

sec = difftime(end, start);

fps = (sec > 0) ? counter / sec : 0.0; // time() has 1-second resolution

printf("FPS = %.2f\n", fps);

if (!frame) {

printf("Error");

break;

}

cvShowImage("Webcam Preview", frame);

k = cvWaitKey(10)&0xff;

// Leave the capture loop on Esc, 'q', or 'Q'

if (k == 27 || k == 'q' || k == 'Q') {

break;

}

}

cvDestroyWindow("Webcam Preview");

cvReleaseCapture(&capture);

return 0;

}
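
The frame-rate tests above time the capture loop with ‘time()’, which only resolves whole seconds. That makes readings coarse at low frame rates, and no time has elapsed at all during the first second. As a hedged alternative, the sketch below measures elapsed time with a monotonic clock from the C++11 ‘<chrono>’ header. The names ‘averageFps’ and ‘FpsCounter’ are hypothetical and are not part of the project.

```cpp
#include <chrono>

// Average frame rate; returns 0 when no time has elapsed yet.
double averageFps(long frames, double elapsedSeconds) {
    return elapsedSeconds > 0.0 ? frames / elapsedSeconds : 0.0;
}

// Hypothetical helper: counts frames against a monotonic clock.
class FpsCounter {
public:
    FpsCounter() : start_(std::chrono::steady_clock::now()), frames_(0) {}

    // Call once per captured frame; returns the running average fps.
    double tick() {
        ++frames_;
        const std::chrono::duration<double> elapsed =
            std::chrono::steady_clock::now() - start_;
        return averageFps(frames_, elapsed.count());
    }

    long frames() const { return frames_; }

private:
    std::chrono::steady_clock::time_point start_;
    long frames_;
};
```

In a capture loop, one ‘FpsCounter’ would be created before the loop and ‘tick()’ called after each successful frame grab, with the returned value printed in place of the ‘counter / sec’ calculation.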

Compiling Locally on the Raspberry Pi

After writing the code, the first big challenge was cross-compiling the native C++ code, written on Intel IA-32 and 64-bit x86-64 processor-based laptops, to run on the Raspberry Pi’s ARM architecture. After failing to cross-compile the C++ source code using crosstool-NG, mostly due to my lack of cross-compiling experience, I resorted to using g++ to compile the C++ source code directly on the Raspberry Pi.

First, I had to properly install the various CV libraries and the compiler on the Raspberry Pi, which itself is a bit daunting.

Compiling OpenCV 2.4.3 from source on the Raspberry Pi took an astounding 8 hours. Even though compiling the C++ source code takes longer on the Raspberry Pi, I could be assured the compiled code would run locally. Below are the commands that I used to transfer and compile the C++ source code on my Raspberry Pi.

Copy and Compile Commands

| scp *.jpg *.cpp *.hpp {your-pi-user}@{your.ip.address}:your/file/path/ | |

| ssh {your-pi-user}@{your.ip.address} | |

| cd ~/your/file/path/ | |

| g++ `pkg-config opencv cvblob --cflags --libs` testfps.cpp testcvblob.cpp main.cpp -o FpsTest -v | |

| ./FpsTest |

Special Note About cvBlob on ARM

At first, I had given up on cvBlob working on the Raspberry Pi. All the cvBlob tests I ran, no matter how simple, continued to hang on the Raspberry Pi after working perfectly on my laptop. I had narrowed the problem down to the ‘cvLabel’ method, but was unable to resolve it. However, I recently discovered a documented bug on the cvBlob website. It concerned cvBlob and the very same ‘cvLabel’ method on ARM-based devices (ARM = Raspberry Pi!). After making a minor modification to cvBlob’s ‘cvlabel.cpp’ source code, as directed in the bug post, and re-compiling on the Raspberry Pi, the tests worked perfectly.

Testing OpenCV and cvBlob

The code contains three pairs of tests (six total), as follows:

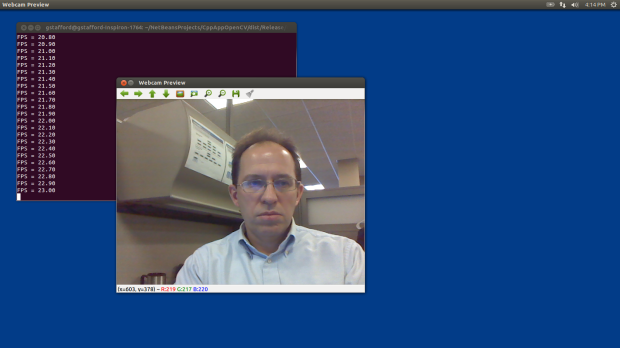

- OpenCV (w/ live webcam feed)

Determine if OpenCV is installed and functioning properly with the complied C++ code. Capture a webcam feed using OpenCV, and display the feed and frame rate (fps). - OpenCV (w/o live webcam feed)

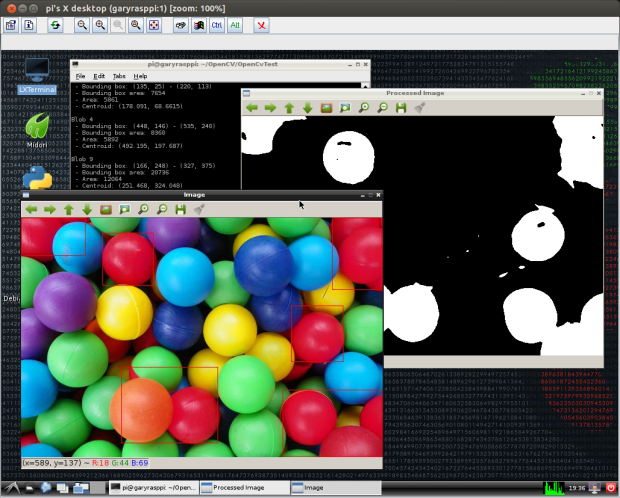

Same as Test #1, but only print the frame rate (fps). The computer doesn’t need display the video feed to process the data. More importantly, the webcam’s feed might unnecessarily tax the computer’s processor and GPU. - OpenCV and cvBlob (w/ live webcam feed)

Determine if OpenCV and cvBlob are installed and functioning properly with the complied C++ code. Detect and display all objects (blobs) in a specific red color range, contained in a static jpeg image. - OpenCV and cvBlob (w/o live webcam feed)

Same as Test #3, but only print some basic information about the static image and number of blobs detected. Again, the computer doesn’t need display the video feed to process the data. - Blob Tracking (w/ live webcam feed)

Detect, track, and display all objects (blobs) in a specific blue color range, along with the largest blob’s positional data. Captured with a webcam, using OpenCV and cvBlob. - Blob Tracking (w/o live webcam feed)

Same as Test #5, but only display the largest blob’s positional data. Again, the computer doesn’t need the display the webcam feed, to process the data. The feed taxes the computer’s processor unnecessarily, which is being consumed with detecting and tracking the blobs. The blob’s positional data it sent to the robot and used by its targeting system to position its shooting platform.
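
The positional data printed by Tests 5 and 6 is the largest blob’s centroid and its offset from the center of the frame. The following is a minimal sketch of that arithmetic, mirroring the ‘offset-x’ and ‘offset-y’ expressions in the listing. The ‘Offset’ struct and both function names are hypothetical. The normalized variant is an assumption about what a targeting system might prefer, not something the original code does, and it assumes a positive frame size.

```cpp
// Pixel offset of a point from the frame center (hypothetical helper type).
struct Offset {
    double x;
    double y;
};

// Mirrors the listing: integer half-size minus the centroid coordinate.
// A positive x means the blob lies left of center; a positive y means above.
Offset centroidOffset(int frameWidth, int frameHeight, double cx, double cy) {
    Offset o;
    o.x = (frameWidth / 2) - cx;
    o.y = (frameHeight / 2) - cy;
    return o;
}

// Assumption: scale the offset to roughly [-1, 1] so that the value
// does not depend on the capture size chosen for the test.
Offset normalizedOffset(int frameWidth, int frameHeight, double cx, double cy) {
    Offset o = centroidOffset(frameWidth, frameHeight, cx, cy);
    o.x /= frameWidth / 2.0;
    o.y /= frameHeight / 2.0;
    return o;
}
```

For a 320 x 240 frame and a centroid at (100, 50), ‘centroidOffset’ gives an offset of 60 pixels in x and 70 pixels in y.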

The Program

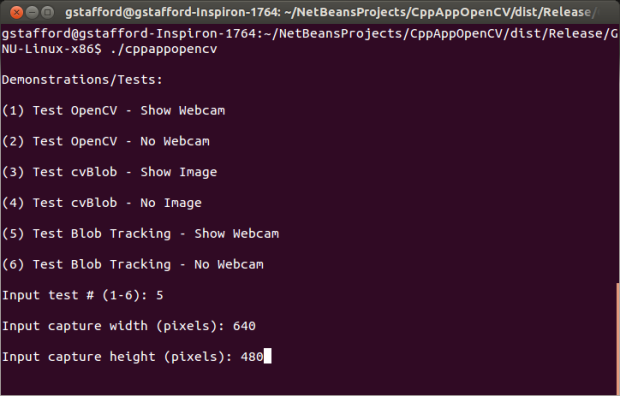

There are two ways to run this program. First, from the command line you can call the application and pass in three parameters. The parameters include:

- Test method you want to run (1-6)

- Width of the webcam capture window in pixels

- Height of the webcam capture window in pixels.

An example would be ‘./FpsTest 2 640 480’ or ‘./FpsTest 5 320 240’.

The second way is to run the program without passing in any parameters. In that case, the program will prompt you to input the test number and other parameters on-screen.
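
The main.cpp listing converts the three command-line parameters with ‘strtol’ and does not check the results. As a sketch of how that input could be validated, the code below rejects non-numeric text, out-of-range values, and test numbers outside 1-6. The names ‘Options’, ‘parsePositiveInt’, and ‘parseOptions’ are hypothetical. Unlike the original, which passes base 0 to ‘strtol’, this sketch assumes base 10.

```cpp
#include <cerrno>
#include <climits>
#include <cstdlib>

// Hypothetical container for the three command-line parameters.
struct Options {
    int test;
    int width;
    int height;
};

// Parse a base-10 positive int; returns true and stores the value on success.
bool parsePositiveInt(const char* text, int& out) {
    if (text == 0 || *text == '\0') return false;
    char* end = 0;
    errno = 0;
    const long value = std::strtol(text, &end, 10);
    if (errno == ERANGE || *end != '\0') return false;  // overflow or junk
    if (value <= 0 || value > INT_MAX) return false;
    out = static_cast<int>(value);
    return true;
}

// Expected layout, as described above: <test 1-6> <width> <height>.
bool parseOptions(int argc, const char* const argv[], Options& opts) {
    if (argc != 4) return false;
    if (!parsePositiveInt(argv[1], opts.test) || opts.test > 6) return false;
    return parsePositiveInt(argv[2], opts.width) &&
           parsePositiveInt(argv[3], opts.height);
}
```

When ‘parseOptions’ returns false, the program could fall back to the on-screen prompts instead of running a test with a width or height of zero.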

Test 1: Laptop versus Raspberry Pi

Test 3: Laptop versus Raspberry Pi

Test 5: Laptop versus Raspberry Pi

The Results

Each test was first run on two Linux-based laptops, with Intel 32-bit and 64-bit architectures, and with two different USB webcams. The laptops were used to develop and test the code, as well as provide a baseline for application performance. Many factors can dramatically affect the application’s ability to do image processing. They include the computer’s processor(s), RAM, HDD, GPU, USB, Operating System, and the webcam’s video capture size, compression ratio, and frame-rate. There are significant differences in all these elements when comparing an average laptop to the Raspberry Pi.

The Intel processor-based Ubuntu laptops easily performed at or beyond the webcams’ maximum rate of 30 fps, at 640 x 480 pixels. On a positive note, the Raspberry Pi was able to compile and execute the tests of OpenCV and cvBlob (see the cvBlob bug noted above). Unfortunately, at least in my tests, the Raspberry Pi could not achieve more than 1.5 to 2 fps, even in the most basic tests, and at a reduced capture size of 320 x 240 pixels. This can be seen in the first and second screen-grabs of Test #1, above. Although I’m sure there are ways to improve the code and optimize the image capture, the results were much too slow to provide accurate, real-time data to the robot’s targeting system.
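
To put those frame rates in perspective, the short sketch below works out the time between frames and the volume of uncompressed pixel data at a given capture size. Both function names are hypothetical, and the 3-channel, 8-bit frame layout used in the example figures is an assumption rather than a measured value.

```cpp
// Time between consecutive frames, in milliseconds, at a given frame rate.
double framePeriodMs(double fps) {
    return fps > 0.0 ? 1000.0 / fps : 0.0;
}

// Uncompressed bytes per second, assuming one byte per channel per pixel.
double rawBytesPerSecond(int width, int height, int channels, double fps) {
    return static_cast<double>(width) * height * channels * fps;
}
```

At 2 fps, ‘framePeriodMs’ gives 500 ms between position updates, compared with roughly 33 ms at 30 fps. Under the stated assumption, 320 x 240 at 2 fps is 460,800 bytes of pixel data per second, while 640 x 480 at 30 fps is 27,648,000 bytes per second.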

Links of Interest

Static Test Images Free from: http://www.rgbstock.com/

Great Website for OpenCV Samples: http://opencv-code.com/

Another Good Website for OpenCV Samples: http://opencv-srf.blogspot.com/2010/09/filtering-images.html

cvBlob Code Sample: https://code.google.com/p/cvblob/source/browse/samples/red_object_tracking.cpp

Detecting Blobs with cvBlob: http://8a52labs.wordpress.com/2011/05/24/detecting-blobs-using-cvblobs-library/

Best Post/Script to Install OpenCV on Ubuntu and Raspberry Pi: http://jayrambhia.wordpress.com/2012/05/02/install-opencv-2-3-1-and-simplecv-in-ubuntu-12-04-precise-pangolin-arch-linux/

Measuring Frame-rate with OpenCV: http://8a52labs.wordpress.com/2011/05/19/frames-per-second-in-opencv/

OpenCV and Raspberry Pi: http://mitchtech.net/raspberry-pi-opencv/