Unlocking the Potential of Generative AI for Synthetic Data Generation

Posted by Gary A. Stafford in AI/ML, Cloud, Enterprise Software Development, Machine Learning, Python, Software Development, Technology Consulting on April 18, 2023

Explore the capabilities and applications of generative AI to create realistic synthetic data for software development, analytics, and machine learning

Introduction

Generative AI refers to a class of artificial intelligence algorithms capable of generating new data similar to a given dataset. These algorithms learn the underlying patterns and relationships in the data and use this knowledge to create new data consistent with the original dataset. Generative AI is a rapidly evolving field that has the potential to revolutionize the way we generate and use data.

Generative AI can generate synthetic data based on patterns and relationships learned from actual data. This ability to generate synthetic data has numerous applications, from creating realistic virtual environments for training and simulation to generating new data for machine learning models. In this article, we will explore the capabilities of generative AI and its potential to generate synthetic data, both directly and indirectly, for software development, data analytics, and machine learning.

Common Forms of Synthetic Data

According to AltexSoft, in their article Synthetic Data for Machine Learning: its Nature, Types, and Ways of Generation, common forms of synthetic data include:

- Tabular data: This type of synthetic data is often used to generate datasets that resemble real-world data in terms of structure and statistical properties.

- Time series data: This type of synthetic data generates datasets that resemble real-world time series data. It is commonly used when real-world time series data is unavailable or too expensive.

- Image and video data: This synthetic data is used to generate realistic images and videos for training machine learning models or simulations.

- Text data: This synthetic data generates realistic text for natural language processing tasks or for generating training data for machine learning models.

- Sound data: This synthetic data generates realistic sound for training machine learning models or simulations.

Synthetic Tabular Data Types

Synthetic tabular data refers to artificially generated datasets that resemble real-world tabular data in terms of structure and statistical properties. Tabular data is organized into rows and columns, like tables or spreadsheets. Some specific types of synthetic tabular data include:

- Financial data: Synthetic datasets that resemble real-world financial data such as bank transactions, stock prices, or credit card information.

- Customer data: Synthetic datasets that resemble real-world customer data, such as purchase history, demographic information, or customer behavior.

- Medical data: Synthetic datasets that resemble real-world medical data, such as patient records, medical test results, or treatment history.

- Sensor data: Synthetic datasets that resemble real-world sensor data such as temperature readings, humidity levels, or air quality measurements.

- Sales data: Synthetic datasets that resemble real-world sales data, such as sales transactions, product information, or customer behavior.

- Inventory data: Synthetic datasets that resemble real-world inventory data, such as stock levels, product information, or supplier information.

- Marketing data: Synthetic datasets that resemble real-world marketing data, such as campaign performance, customer behavior, or market trends.

- Human resources data: Synthetic datasets that resemble real-world human resources data, such as employee records, performance evaluations, or salary information.

Challenges with Creating Synthetic Data

According to sources including Towards Data Science, enov8, and J.P. Morgan, there are several challenges in creating synthetic data, including:

- Technical difficulty: Properly modeling complex real-world behaviors as synthetic data is challenging, given available technologies.

- Biased behavior: The flexible nature of synthetic data makes it prone to potentially biased results.

- Privacy concerns: Care must be taken to ensure synthetic data does not reveal sensitive information.

- Quality of the data model: If the quality of the data model is not high, wrong conclusions can be reached.

- Time and effort: Synthetic data generation requires time and effort.

Difficult Patterns and Behaviors to Model

Many patterns and behaviors can be challenging to model in synthetic data, for example:

- Rare events: If certain events rarely occur in the real world, generating synthetic data that accurately reflects their distribution can be difficult.

- Complex relationships: Synthetic data generators may struggle to capture complex relationships between variables, such as non-linear interactions, feedback loops, or conditional dependencies.

- Contextual variability: Contextual factors, such as time, location, or individual differences, can have a significant impact on the distribution of data. Modeling this variability accurately can be challenging.

- Outliers and anomalies: Synthetic data generators may be unable to generate outliers or anomalies that are realistic and representative of the real world.

- Dynamic data: If the data is dynamic and changes over time, it can be challenging to generate synthetic data that captures these changes accurately.

- Unobserved variables: Sometimes, variables may be necessary for understanding the data distribution but are not directly observable. These variables can be challenging to model in synthetic data.

- Data bias: If the real-world data is biased in some way, such as over-representation of certain groups or under-representation of others, it can be challenging to generate synthetic data that is unbiased and representative of the population.

- Time-dependent patterns: If the data exhibits time-dependent patterns, such as seasonality or trends, it can be challenging to generate synthetic data that accurately reflects them.

- Spatial patterns: If the data has a spatial component, such as location data or images, it can be challenging to generate synthetic data that captures the spatial patterns realistically.

- Data sparsity: If the data is sparse or incomplete, it can be difficult to generate synthetic data that accurately reflects the distribution of the entire dataset.

- Human behavior: If the data involves human behavior, such as in social science or behavioral economics, it can be challenging to model the complex and nuanced behaviors of individuals and groups.

- Sensitive or confidential information: In some cases, the data may contain sensitive or confidential information that cannot be shared, making it challenging to generate synthetic data that preserves privacy while accurately reflecting the underlying distribution.

Overall, many patterns can be challenging to model accurately in synthetic data. Doing so often requires careful consideration of the specific data characteristics and the limitations of the synthetic data generation techniques.

Easily Modeled Patterns and Behaviors

There are many simple and well-understood patterns that can be easily modeled in synthetic data, for example:

- Randomness: If the data is purely random, it can be easily generated using a random number generator or other simple techniques.

- Gaussian distribution: If the data follows a Gaussian or normal distribution, it can be generated using a Gaussian random number generator.

- Uniform distribution: If the data follows a uniform distribution, it can be easily generated using a uniform random number generator.

- Linear relationships: If the data follows a linear relationship between variables, it can be modeled using simple linear regression techniques.

- Categorical variables: If the data consists of categorical variables, such as gender or occupation, it can be generated using a categorical distribution.

- Text data: If the data consists of text, it can be generated using natural language processing techniques, such as language models or text generation algorithms.

- Time series: If the data consists of time series data, such as stock prices or weather data, it can be generated using time series models, such as autoregressive integrated moving average (ARIMA) models or long short-term memory (LSTM) networks.

- Seasonality: If the data exhibits seasonal patterns, such as higher sales data during holiday periods, it can be generated using seasonal time series models.

- Proportions and percentages: If the data consists of proportions or percentages, such as product sales distribution across different regions, it can be generated using beta or Dirichlet distributions.

- Multivariate normal distribution: If the data follows a multivariate normal distribution, it can be generated using a multivariate Gaussian random number generator.

- Networks: If the data consists of network or graph data, such as social networks or transportation networks, it can be generated using network models, such as Erdos-Renyi or Barabasi-Albert models.

- Binary data: If the data consists of binary data, such as whether a customer churned, it can be generated using a Bernoulli distribution.

- Geospatial data: If the data involves geospatial data, such as the location of points of interest, it can be generated using geospatial models, such as point processes or spatial point patterns.

- Customer behaviors: If the data involves customer behaviors, such as browsing or purchase histories, it can be generated using customer journey models, such as Markov models.

In general, simple and well-understood patterns can be easily modeled using synthetic data techniques. In contrast, more complex and nuanced patterns may require more sophisticated modeling techniques and a deeper understanding of the underlying data characteristics.
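As a minimal sketch of several of the patterns listed above, the following uses only Python’s standard random module to draw from Gaussian, uniform, Bernoulli, and categorical distributions. The field names, parameters, and category weights are assumptions chosen purely for illustration; they do not come from any real dataset.

```python
import random

# Seeded generator so the sketch produces repeatable output.
rng = random.Random(42)


def sample_record(rng):
    """Return one synthetic record drawn from simple, well-understood distributions."""
    return {
        # Gaussian (normal) distribution: mean 21.0, standard deviation 2.5
        "temperature_c": rng.gauss(21.0, 2.5),
        # Uniform distribution between 0 and 30
        "discount_pct": rng.uniform(0.0, 30.0),
        # Bernoulli distribution with an assumed probability of 0.2
        "churned": rng.random() < 0.2,
        # Categorical distribution with assumed weights
        "occupation": rng.choices(
            ["engineer", "teacher", "nurse"], weights=[0.5, 0.3, 0.2]
        )[0],
    }


records = [sample_record(rng) for _ in range(1000)]
```

Each call to sample_record returns an independent record, so correlated fields or time-dependent behavior would need the more sophisticated techniques mentioned above.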

Creating Synthetic Data with Generative AI

We can use many popular generative AI-powered tools to create synthetic data for testing applications, constructing analytics pipelines, and building machine learning models. Tools include OpenAI ChatGPT, Microsoft’s all-new Bing Chat, ChatSonic, Tabnine, GitHub Copilot, and Amazon CodeWhisperer. For more information on these tools, check out my recent blog post:

Accelerating Development with Generative AI-Powered Coding Tools

Let’s start with a simple example of generating synthetic sales data. Suppose we have created a new sales forecasting application for coffee shops that we need to test using synthetic data. We might start by prompting a generative AI tool like OpenAI’s ChatGPT for some data:

Create a CSV file with 25 random sales records for a coffee shop.

Each record should include the following fields:

- id (incrementing integer starting at 1)

- date (random date between 1/1/2022 and 12/31/2022)

- time (random time between 6:00am and 9:00pm in 1-minute increments)

- product_id (incrementing integer starting at 1)

- product

- calories

- price in USD

- type (drink or food)

- quantity (random integer between 1 and 3)

- amount (price * quantity)

- payment type (cash, credit, debit, or gift card)

The content and structure of a prompt can vary, and this can strongly influence ChatGPT’s response. Based on the above prompt, the results were accurate but not very useful for testing our application in this format. ChatGPT cannot create a physical CSV file. Furthermore, ChatGPT’s response length is limited; only about twenty records were returned. According to ChatGPT, in general, ChatGPT can generate responses of up to 2,048 tokens, the maximum output length allowed by the GPT-3 model.

Instead of outputting the actual synthetic data, we could ask ChatGPT to write a program that can, in turn, generate the synthetic data. This option is certainly more scalable. Let’s prompt ChatGPT to write a Python program to generate synthetic sales data with the same characteristics as before:

Create a Python3 program to generate 100 sales of common items

sold in a coffee shop. The data should be written to a CSV file

and include a header row. Each record should include the following fields:

- id (incrementing integer starting at 1)

- date (random date between 1/1/2022 and 12/31/2022)

- time (random time between 6:00am and 9:00pm in 1-minute increments)

- product_id (incrementing integer starting at 1)

- product

- calories

- price in USD

- type (drink or food)

- quantity (random integer between 1 and 3)

- amount (price * quantity)

- payment type (cash, credit, debit, or gift card)

Using a single concise prompt, ChatGPT generated a complete Python program, including code comments, to generate synthetic sales data. Unfortunately, given ChatGPT’s response size limitation, the coffee shop menu was limited to just six items. Reprompting for more items would result in the truncation of the output and, thus, the program, making it unrunnable. Instead, we could use an additional prompt to generate a longer Python list of menu items and combine the two pieces of code in our IDE. Regardless, we will still need to copy and paste the code into our IDE to review, debug, test, and run.

ChatGPT’s Python program, copied and pasted into VS Code, ran without modifications, and wrote 100 synthetic sales records to a CSV file!

Using IDE-based Generative AI Tools

Although generating synthetic data or snippets of code directly in chat-based generative AI tools is helpful for limited use cases, writing code in an IDE gives us several advantages:

- Code does not need to be copied and pasted from external sources into an IDE

- Line-by-line, method-level, and block-level code completion overcomes the single-response size limits of chat-based tools like OpenAI ChatGPT

- Code can be reused and adapted to evolving use cases over time

- Python interpreter and debugger or equivalent for other languages

- Automatic code formatting, linting, and code style enforcement

- Unit, integration, and functional testing

- Static code analysis (SCA)

- Vulnerability scanning

- IntelliSense for code completion

- Source code management (SCM) / version control

Let’s use the same techniques we used with ChatGPT, but from within an IDE to generate three types of synthetic data. We will choose Microsoft’s VS Code with GitHub Copilot and Python as our programming language.

Source Code

All the code examples shown in this post can be found on GitHub.

Example #1: Coffee Shop Sales Data

First, we will start by outlining the program’s objective using code comments on the top of our Python file. This detailed context helps us to clearly express our goal and enables Copilot to generate an accurate response.

# Write a program that creates synthetic sales data for a coffee shop.

# The program should accept a command line argument that specifies the number of records to generate.

# The program should write the sales data to a file called 'coffee_shop_sales_data.csv'.

# The program should contain the following functions:

# - main() function that calls the other functions

# - function that returns one random product from a list of dictionaries

# - function that returns a dictionary containing one sales record

# - function that writes the sales records to a file

Following the import statements, which were also generated with Copilot’s assistance, we will write the first function to return a random product from a list of 25 products. Again, we will use code comments as a prompt to generate the code. Copilot was able to generate 100% of the function’s code from the comments.

# Write a function to create list of dictionaries.

# The list of dictionaries should contain 15 drink items and 10 food items sold in a coffee shop.

# Include the product id, product name, calories, price, and type (Food or Drink).

# Capitalize the first letter of each product name.

# Return a random item from the list of dictionaries.

Below is an example of Copilot’s ability to generate complete lines of code. Ultimately, it generated 100% of the function including choosing the items sold in a coffee shop, with a reasonable price and caloric count. Copilot is not limited to just understanding code.

Next, we will write a function to return a random sales record.

# Write a function to return a random sales record.

# The record should be a dictionary with the following fields:

# - id (an incrementing integer starting at 1)

# - date (a random date between 1/1/2022 and 12/31/2022)

# - time (a random time between 6:00am and 9:00pm in 1 minute increments)

# - product_id, product, calories, price, and type (from the get_product function)

# - quantity (a random integer between 1 and 3)

# - amount (price * quantity)

# - payment type (Cash, Credit, Debit, Gift Card, Apple Pay, Google Pay, or Venmo)

Again, Copilot generated 100% of the function’s code as a single block based on the code comments.
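For readers who want a sense of what a function satisfying these comments might look like, here is a minimal sketch. It is not Copilot’s actual output. The two-item PRODUCTS list, its prices and calorie values, the PAYMENT_TYPES constant, and the record_id parameter are all placeholders assumed for illustration.

```python
import random
from datetime import date, timedelta

# Hypothetical two-item menu standing in for the full 25-item product list.
# Prices and calories are illustrative placeholders, not real menu data.
PRODUCTS = [
    {"product_id": 1, "product": "Latte", "calories": 190, "price": 4.25, "type": "Drink"},
    {"product_id": 2, "product": "Blueberry Muffin", "calories": 420, "price": 3.50, "type": "Food"},
]
PAYMENT_TYPES = ["Cash", "Credit", "Debit", "Gift Card", "Apple Pay", "Google Pay", "Venmo"]


def get_product():
    """Return one random product dictionary."""
    return random.choice(PRODUCTS)


def get_sales_record(record_id):
    """Return one synthetic sales record as a dictionary."""
    product = get_product()
    # Random date in 2022: offset January 1 by 0 to 364 days.
    sale_date = date(2022, 1, 1) + timedelta(days=random.randint(0, 364))
    # Random time from 6:00am to 9:00pm inclusive, in 1-minute increments.
    minutes = random.randint(6 * 60, 21 * 60)
    sale_time = f"{minutes // 60:02d}:{minutes % 60:02d}"
    quantity = random.randint(1, 3)
    return {
        "id": record_id,
        "date": sale_date.isoformat(),
        "time": sale_time,
        **product,
        "quantity": quantity,
        "amount": round(product["price"] * quantity, 2),
        "payment_type": random.choice(PAYMENT_TYPES),
    }
```

Calling get_sales_record(1) returns a dictionary whose keys match the fields listed in the prompt, with the caller responsible for supplying the incrementing id.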

Lastly, we will create a function to write the sales data into a CSV file using Copilot’s help.

# Write a function to write the sales records to a CSV file called 'coffee_shop_sales.csv'.

# Use an input parameter to specify the number of records to write.

# The CSV file must have a header row and be comma delimited.

# All string values must be enclosed in double quotes.

Again below, we see an example of Copilot’s ability to generate an entire Python function. I needed to correct a few problems with the generated code. First, there was a lack of quotes for string values, which I added to the function (quotechar='"', quoting=csv.QUOTE_NONNUMERIC). Also, the function was missing a key line of code, sale = get_sales_record(), which would have caused the code to fail. Remember, just because the code was generated does not mean it is correct.
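The quoting fix described above can be sketched with Python’s standard csv module. This is an illustrative version rather than the program’s actual function: the write_sales_records name, the choice of csv.DictWriter, and the single sample record are assumptions. Only the quotechar and quoting arguments come from the text.

```python
import csv
import io


def write_sales_records(records, file_obj):
    """Write a list of record dictionaries as CSV with a header row."""
    fieldnames = list(records[0].keys())
    writer = csv.DictWriter(
        file_obj,
        fieldnames=fieldnames,
        quotechar='"',
        # Quote every non-numeric field, including the header names.
        quoting=csv.QUOTE_NONNUMERIC,
    )
    writer.writeheader()
    writer.writerows(records)


# Hypothetical sample record, written to an in-memory buffer instead of a file.
sample = [{"id": 1, "product": "Latte", "price": 4.25}]
buffer = io.StringIO()
write_sales_records(sample, buffer)
csv_text = buffer.getvalue()
```

With csv.QUOTE_NONNUMERIC, string values such as the product name are wrapped in double quotes while integers and floats are written bare. To write to disk instead, pass a file opened with open(path, "w", newline="").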

Here is the complete program that creates synthetic sales data for a coffee shop with Copilot assistance:

Copilot generated an astounding 80–85% of the program’s final code. The initial program took 10–15 minutes to write using code comments. I then added a few new features, including the ability to pass in the record count on the command line and the hash-based transaction id, which took another 5 minutes. Finally, I used GitHub Code Brushes to optimize the code and generate the Python docstrings, and Black Formatter and Flake8 extensions to format and lint, all of which took less than 5 minutes. With testing and debugging, the total time was about 25–30 minutes.

The most significant difference with Copilot was that I never had to leave the IDE to look up code references or find existing sales datasets or even a coffee shop menu to duplicate. The code, as well as the list of products, price, calories, and product type, were all generated by Copilot.

To make this example more realistic, you could use Copilot’s assistance to write algorithms capable of reflecting daily, weekly, and seasonal variations in product choice and sales volumes. This might include simulating increased sales during the busy morning rush hour or a preference for iced drinks in the summer months versus hot drinks during the winter months.
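One way to approximate the morning rush and seasonal preferences is to replace uniform random choices with weighted ones. The sketch below is only an assumption about how this could be done: the hour weights, the summer months, and the iced-drink probabilities are invented for illustration and are not derived from real sales data.

```python
import random

# Hypothetical relative weights per opening hour (6 means 6:00am, 20 means 8:00pm).
# Hours 7 through 9 are assumed to be three times busier than the rest of the day.
HOUR_WEIGHTS = {hour: (3.0 if 7 <= hour <= 9 else 1.0) for hour in range(6, 21)}


def get_sale_hour(rng=random):
    """Return an hour of the day, favoring the assumed morning rush."""
    hours = list(HOUR_WEIGHTS)
    weights = [HOUR_WEIGHTS[hour] for hour in hours]
    return rng.choices(hours, weights=weights)[0]


def iced_drink_probability(month):
    """Assumed seasonal preference: iced drinks are favored June through August."""
    return 0.7 if month in (6, 7, 8) else 0.2


def is_iced(month, rng=random):
    """Return True if a drink sold in the given month should be iced."""
    return rng.random() < iced_drink_probability(month)
```

The same weighting idea extends to days of the week or holidays by adding further lookup tables.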

Here is an example of the synthetic sales data output by the example application:

Example #2: Residential Address Data

We could use these same techniques to generate a list of residential addresses. To start, we can prompt Copilot for the values in a list of common street names and street types in the United States:

import random


# Write a function that creates a list of common street names
# in the United States, in alphabetical order.
# List should be in alphabetical order. Each name should be unique.
# Return a random street name.
def get_street_name():
    street_names = [
        "Ash", "Bend", "Bluff", "Branch", "Bridge", "Broadway", "Brook", "Burg",
        "Bury", "Canyon", "Cape", "Cedar", "Cove", "Creek", "Crest", "Crossing",
        "Dale", "Dam", "Divide", "Downs", "Elm", "Estates", "Falls", "Fifth",
        "First", "Fork", "Fourth", "Glen", "Green", "Grove", "Harbor", "Heights",
        "Hickory", "Hill", "Hollow", "Island", "Isle", "Knoll", "Lake", "Landing",
        ...
    ]
    return random.choice(street_names)


# Write a function that creates a list of common street types
# in the United States, in alphabetical order.
# List should be in alphabetical order. Each name should be unique.
# Return a random street type.
def get_street_type():
    street_types = [
        "Alley", "Avenue", "Bend", "Bluff", "Boulevard", "Branch", "Bridge", "Brook",
        "Burg", "Circle", "Commons", "Court", "Drive", "Highway", "Lane", "Parkway",
        "Place", "Road", "Square", "Street", "Terrace", "Trail", "Way"
    ]
    return random.choice(street_types)

Next, we can create a function that returns a property type based on a categorical distribution of common residential property types with the prompt:

# Write a function to return a random property type.

# Accept a random value between 0 and 1 as an input parameter.

# The function must return one of the following values based on the %:

# 63% Single-family, 26% Multi-family, 4% Condo,

# 3% Townhouse, 2% Mobile home, 1% Farm, 1% Other.

Again, Copilot generated 100% of the function’s code as a single block based on the code comments.
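A function satisfying this prompt could look something like the sketch below, which converts the listed percentages into cumulative upper bounds. It is an illustration, not Copilot’s actual output, and the get_property_type name is assumed.

```python
import random


def get_property_type(rnd_value):
    """Map a value in [0, 1) to a property type using cumulative thresholds."""
    # Cumulative upper bounds for 63%, 26%, 4%, 3%, 2%, and 1%;
    # the final 1% falls through to "Other".
    thresholds = [
        (0.63, "Single-family"),
        (0.89, "Multi-family"),
        (0.93, "Condo"),
        (0.96, "Townhouse"),
        (0.98, "Mobile home"),
        (0.99, "Farm"),
    ]
    for upper_bound, property_type in thresholds:
        if rnd_value < upper_bound:
            return property_type
    return "Other"


# Example usage with a random input value.
property_type = get_property_type(random.random())
```

Passing the random value in as a parameter, as the prompt requests, makes the function deterministic and therefore easy to unit test.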

Additionally, we could have Copilot help us generate a list of the 50 largest cities in the United States with state, zip code, and population, with the prompt:

# Write a function to return the 50 largest cities in the United States.

# List should be sorted in descending order by population.

# Include the city, state abbreviation, zip code, and population.

# Return a list of dictionaries.

Once again, Copilot generated 100% of the function’s code using a combination of single lines and code blocks based on the code comments.

When randomly choosing a city, we can use a categorical distribution based on each city’s share of the total population to control the distribution of cities in the final synthetic dataset. For example, there will be more addresses in larger cities like New York City or Los Angeles than in smaller cities like Buffalo or Virginia Beach. We can set this up with the prompt:

# Write a function that calculates the total population of the list of cities.

# Add a 'pcnt_of_total_population' and 'pcnt_running_total' columns to list.

# Returns a sorted list of cities by population.
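The running-total approach in this prompt can be sketched as follows. This is an assumed implementation for illustration only: the function names are hypothetical, and the two cities use round placeholder populations rather than census figures. Only the 'pcnt_of_total_population' and 'pcnt_running_total' keys come from the prompt.

```python
import random


def add_population_shares(cities):
    """Return cities sorted by population with share and running-total columns."""
    total = sum(city["population"] for city in cities)
    running = 0.0
    result = []
    for city in sorted(cities, key=lambda c: c["population"], reverse=True):
        share = city["population"] / total
        running += share
        result.append(
            {**city, "pcnt_of_total_population": share, "pcnt_running_total": running}
        )
    return result


def get_city(cities_with_shares, rnd_value):
    """Pick the first city whose running total exceeds the random value."""
    for city in cities_with_shares:
        if rnd_value < city["pcnt_running_total"]:
            return city
    # Guard against floating-point rounding in the final running total.
    return cities_with_shares[-1]


# Placeholder cities and populations, not real data.
cities = add_population_shares(
    [
        {"city": "Small City", "population": 2_000_000},
        {"city": "Large City", "population": 8_000_000},
    ]
)
chosen = get_city(cities, random.random())
```

With these placeholder values, roughly 80% of random draws land on the larger city and 20% on the smaller one.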

Here is the complete program that creates synthetic US-based address data with Copilot assistance:

To make this example more realistic, you could use Copilot’s assistance to write algorithms capable of accurately reflecting assessed property values based on the type of residence and the zip code.

Here is an example of the synthetic US-based residential address data output by the example application:

Example #3: Demographic Data

We could use the same techniques again to generate synthetic demographic data. With the assistance of Copilot, we can write functions that randomly return typical feminine or masculine first names (forenames) and common last names (surnames) found in the United States, for this use case.

import random


# Write a function that generates a list of common feminine first names in the United States.
# List should be in alphabetical order.
# Each name should be unique.
# Return random first name.
def get_first_name_feminine():
    first_name_feminine = [
        "Alice", "Amanda", "Amy", "Angela", "Ann", "Anna", "Barbara", "Betty",
        "Brenda", "Carol", "Carolyn", "Catherine", "Christine", "Cynthia", "Deborah", "Debra",
        "Diane", "Donna", "Doris", "Dorothy", "Elizabeth", "Frances", "Gloria", "Heather",
        "Helen", "Janet", "Jennifer", "Jessica", "Joyce", "Julie", "Karen", "Kathleen", "Kimberly",
    ]
    return random.choice(first_name_feminine)

With the assistance of Copilot, we can also write functions that return demographic information, such as age, gender, race, marital status, religion, and political affiliation. Similar to the property type function in the previous residential address example, we can influence the final synthetic dataset based on categorical distributions of different demographic categories, for instance, with the prompt:

# Write a function that returns a person's marital status.
# Accept a random value between 0 and 1 as an input parameter.
# The function must return one of the following values based on the %:
# 50% Married, 33% Single, 17% Unknown.
def get_martial_status(rnd_value):
    if rnd_value < 0.50:
        return "Married"
    elif rnd_value < 0.83:
        return "Single"
    else:
        return "Unknown"

By altering the categorical distributions, we can quickly alter the resulting synthetic dataset to reflect differing demographic characteristics: an older or younger population, the predominance of a single race, religious affiliation, or marital status, or the ratio of males to females.

Next, we can use a Gaussian distribution (aka normal distribution) to return the year of birth in a bell-shaped curve, given a mean year and a standard deviation, using Python’s random.normalvariate function.

import random
from datetime import date, timedelta


# Write a function that generates a normal distribution of dates of birth
# with a mean year of 1975 and a standard deviation of 10.
# Return random date of birth as a string in the format YYYY-MM-DD
def get_dob():
    # Day offset within the year (leap days are ignored).
    day_of_year = random.randint(1, 365)
    # Year of birth drawn from a normal distribution and truncated to an integer.
    year_of_birth = int(random.normalvariate(1975, 10))
    dob = date(year_of_birth, 1, 1) + timedelta(days=day_of_year - 1)
    dob = dob.strftime("%Y-%m-%d")
    return dob

Here is the complete program that creates synthetic demographic data with Copilot’s assistance:

To make this example more realistic, you could use Copilot’s assistance to write algorithms capable of more accurately representing the nuanced associations and correlations between age, gender, race, marital status, religion, and political affiliation.

Here is an example of the synthetic demographic data output by the example application:

Generative AI Tools for Unit Testing

In addition to writing code and documentation, a common use of generative AI code assistants like Copilot is writing unit tests. For example, we can create unit tests for each function in our coffee shop sales data code generator, using the same method of prompting with code comments.
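As a rough idea of what such tests might look like, the sketch below uses Python’s built-in unittest module. The calculate_amount helper is hypothetical and stands in for the amount (price * quantity) logic described earlier; it is not taken from the actual program.

```python
import unittest


def calculate_amount(price, quantity):
    """Hypothetical helper: return the sale amount rounded to cents."""
    return round(price * quantity, 2)


class TestCalculateAmount(unittest.TestCase):
    def test_amount_is_price_times_quantity(self):
        self.assertEqual(calculate_amount(4.25, 2), 8.50)

    def test_amount_is_rounded_to_cents(self):
        self.assertEqual(calculate_amount(3.33, 3), 9.99)
```

Saved to a file, these tests can be run with python -m unittest followed by the file name.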

Conclusion

In this post, we learned how Generative AI could assist us in creating synthetic data for software development, analytics, and machine learning. The examples herein generated data using simple techniques. Using advanced modeling techniques, we could generate increasingly complex, realistic synthetic data.

To learn about other ways Generative AI can be used to assist in writing code, please read my previous article, Ten Ways to Leverage Generative AI for Development on AWS.

🔔 To keep up with future content, follow Gary Stafford on LinkedIn.

This blog represents my viewpoints and not those of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners.

Navigating the World of Generative AI: A Guide to Essential Terminology

Posted by Gary A. Stafford in AI/ML, Machine Learning, Technology Consulting on April 9, 2023

Learn the essential terms and concepts you need to know to navigate the rapidly evolving world of generative AI

Generative AI is a fascinating and rapidly evolving field that has the potential to transform the way we interact with technology. However, with so much buzz and hype surrounding this topic, making sense of it all can be challenging. In this article, we’ll cut through the noise and gain a clear understanding of the essential terminology you need to know to navigate the world of generative AI.

According to a variety of sources, including McKinsey & Company and Vox Media, the critical difference between generative AI and other emerging technologies is that millions of people can use it, and already are using it, to create new content, such as text, photos, video, code, and 3D renderings, from data it is trained on. Recent breakthroughs in the field have the potential to drastically change the way we approach content creation. This has led to widespread excitement and some understandable apprehension about the potential for generative AI to impact virtually every aspect of society and disrupt industries, including media and entertainment, healthcare and life sciences, education, advertising, legal services, and finance.

Even if your current role is not in technology, it is highly likely that generative AI will have a direct impact on both your personal and professional life. Familiarizing yourself with basic terminology related to generative AI can help you better comprehend the discussions on social media and in the news.

Terminology

Let’s explore the following terminology (in alphabetical order):

- Artificial General Intelligence (AGI)

- Artificial Intelligence (AI)

- ChatGPT

- DALL·E

- Deep Learning (DL)

- Generative AI

- Generative Pre-trained Transformer (GPT)

- Intelligence Amplification (IA)

- Large Language Model (LLM)

- Machine Learning (ML)

- Neural Network

- OpenAI

- Prompt Engineering

- Reinforcement Learning with Human Feedback (RLHF).

Below is a knowledge graph, created with OpenAI ChatGPT, showing the approximate relationships between the post’s terms.

Artificial General Intelligence (AGI)

According to the all-new Bing Chat, based on ChatGPT, artificial general intelligence (AGI) is the ability of an intelligent agent to understand or learn any intellectual task that human beings or other animals can. It is a primary goal of some artificial intelligence research and a common topic in science fiction and Futurism. According to Forbes, which also prompted ChatGPT, Artificial General Intelligence (AGI) refers to a theoretical type of artificial intelligence that possesses human-like cognitive abilities, such as the ability to learn, reason, solve problems, and communicate in natural language.

Eliezer Yudkowsky is an American researcher, writer, and philosopher on the topic of AI. The podcast Eliezer Yudkowsky: Dangers of AI and the End of Human Civilization, by prominent MIT Research Scientist Lex Fridman, explores various aspects of artificial general intelligence against the backdrop of the recent release of OpenAI’s GPT-4.

Artificial Intelligence (AI)

According to the Brookings Institution, AI is generally thought to refer to machines that respond to stimulation consistent with traditional responses from humans, given the human capacity for contemplation, judgment, and intention. Similarly, according to the U.S. Department of State, the term artificial intelligence refers to a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments.

ChatGPT

ChatGPT, according to ChatGPT, is a large language model developed by OpenAI. It was trained on a massive dataset of human-written text using a deep neural network (DNN) architecture called GPT (Generative Pre-trained Transformer). Its purpose is to generate human-like responses to questions and prompts, engage in conversations, and perform various language-related tasks. It is a virtual assistant capable of understanding and generating natural language.

DALL·E

According to Wikipedia, DALL·E is a deep learning model developed by OpenAI to generate digital images from natural language descriptions, called prompts. DALL·E is a portmanteau of the names of Pixar’s animated robot character WALL-E and the Spanish surrealist artist Salvador Dalí. According to OpenAI, DALL·E is an AI system that can create realistic images and art from a description in natural language. OpenAI introduced DALL·E in January 2021. Just over a year later, in April 2022, they announced their newest system, DALL·E 2, which generates more realistic and accurate images with 4x greater resolution. DALL·E 2 can create original, realistic images and art from a text description. It can combine concepts, attributes, and styles.

Deep Learning (DL)

According to IBM, deep learning is a subset of machine learning (ML), which is essentially a neural network with three or more layers. These neural networks attempt to simulate the behavior of the human brain — albeit far from matching its ability — allowing it to “learn” from large amounts of data. While a neural network with a single layer can still make approximate predictions, additional hidden layers can help to optimize and refine for accuracy.

Generative AI

According to Wikipedia, generative artificial intelligence (AI), aka generative AI, is a type of AI system capable of generating text, images, or other media in response to prompts. Generative AI systems use generative models such as large language models (LLMs) to statistically sample new data based on the training data set used to create them.

Generative Pre-trained Transformer (GPT)

According to ChatGPT, Generative Pre-trained Transformer (GPT) is a deep learning architecture used for natural language processing (NLP) tasks, such as text generation, summarization, and question-answering. It uses a transformer neural network architecture with a self-attention mechanism, allowing the model to understand each word’s context in a sentence or text. The success of GPT models lies in their ability to generate natural-sounding and coherent text similar to human-written language. The term “pre-trained” refers to the fact that the model is trained on large amounts of unlabeled text data, such as books or web pages, to learn general language patterns and features, before being fine-tuned on smaller labeled datasets for specific tasks.
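To make the self-attention idea a little more concrete, here is a minimal sketch in plain Python. It is a simplification, not how GPT is actually implemented: real transformers first multiply the embeddings by learned query, key, and value matrices, which are omitted here, and the three two-dimensional token vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    """Scaled dot-product self-attention with Q = K = V = embeddings.

    Assumption: the learned query/key/value projections of a real
    transformer are left out to keep the sketch short.
    """
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for query in embeddings:
        # Score the current token against every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        weights = softmax(scores)
        # Each output vector is a weighted average of all token vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, embeddings))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a three-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context_vectors, attention_weights = self_attention(tokens)
```

Each row of `attention_weights` shows how much one token draws on every other token when its context-aware vector is built.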

According to ZDNET, GPT-4, announced on March 14, 2023, is the newest version of OpenAI’s language model systems. Its previous version, GPT-3.5, powered the company’s wildly popular ChatGPT chatbot when it launched in November 2022. According to OpenAI, GPT-4 is the latest milestone in OpenAI’s effort to scale up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

Intelligence Amplification

According to Wikipedia, intelligence amplification (IA) (aka cognitive augmentation, machine-augmented intelligence, or enhanced intelligence) refers to the effective use of information technology in augmenting human intelligence. Similarly, Harvard Business Review describes intelligence amplification as the use of technology to augment human intelligence. A paradigm shift is on the horizon, in which new devices will offer less intrusive, more intuitive ways to amplify our intelligence.

In his latest book, Impromptu: Amplifying Our Humanity Through AI, co-authored by ChatGPT-4, Greylock general partner Reid Hoffman discusses the subject of intelligence amplification and AI’s ability to amplify human ability. The topic was also explored in Hoffman’s interview with OpenAI CEO Sam Altman on Greylock’s podcast series AI Field Notes.

Large Language Model (LLM)

According to Wikipedia, a large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. Though the term large language model has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more.

Machine Learning (ML)

According to MIT, machine learning (ML) is a subfield of artificial intelligence, which is broadly defined as the capability of a machine to imitate intelligent human behavior. Artificial intelligence systems are used to perform complex tasks in a way that is similar to how humans solve problems. The function of a machine learning system can be descriptive, meaning that the system uses the data to explain what happened; predictive, meaning the system uses the data to predict what will happen; or prescriptive, meaning the system will use the data to make suggestions about what action to take.

Neural Network

According to MathWorks, a neural network (aka artificial neural network or ANN) is an adaptive system that learns by using interconnected nodes or neurons in a layered structure that resembles a human brain. A neural network can learn from data to be trained to recognize patterns, classify data, and forecast future events. Similarly, according to AWS, a neural network is a method in artificial intelligence that teaches computers to process data in a way that is inspired by the human brain. It is a type of machine learning process called deep learning that uses interconnected nodes or neurons in a layered structure that resembles the human brain. It creates an adaptive system that computers use to learn from their mistakes and improve continuously.

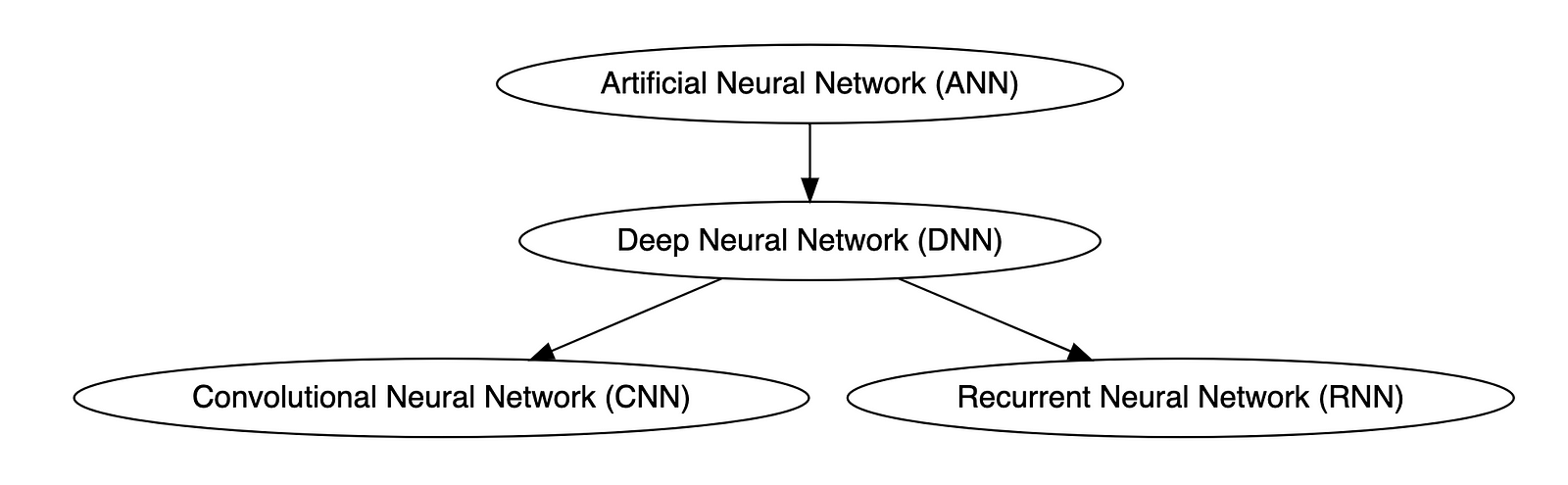

Types of Neural Networks

Deep neural networks (DNNs) are conventional artificial neural networks (ANNs) extended with additional layers. While a conventional ANN has one or two hidden layers to process data, a DNN contains multiple layers between the input and output layers. Convolutional neural networks (CNNs) are one kind of DNN. CNNs have a convolution layer, which uses filters to convolve an area of input data into a smaller area, detecting important or specific parts within the area. Recurrent neural networks (RNNs) can also have multiple layers and are used to process sequential data, so they too can be considered a type of DNN.
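As a rough illustration of how a convolution filter reduces an area of input into a smaller area while picking out a specific feature, here is a one-dimensional sketch in plain Python. The input signal and the edge-detecting filter are made-up values, and real CNN layers operate on multi-dimensional data with learned filters.

```python
def convolve_1d(signal, kernel):
    """Slide the kernel across the signal one position at a time.

    As in most CNN layers, the kernel is not flipped, so strictly
    speaking this computes a cross-correlation in "valid" mode.
    """
    width = len(kernel)
    return [
        sum(signal[start + i] * kernel[i] for i in range(width))
        for start in range(len(signal) - width + 1)
    ]

# Hypothetical input with a step from 0 to 1, and a filter that responds to rising edges.
signal = [0, 0, 0, 1, 1, 1]
edge_filter = [-1, 1]
feature_map = convolve_1d(signal, edge_filter)
print(feature_map)  # [0, 0, 1, 0, 0]
```

The output is shorter than the input, and its single non-zero value marks the position where the signal changes.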

OpenAI

OpenAI is a San Francisco-based AI research and deployment company whose mission is to “ensure that artificial general intelligence benefits all of humanity.” According to Wikipedia, OpenAI was founded in 2015 by current CEO Sam Altman, Greylock general partner Reid Hoffman, Y Combinator founding partner Jessica Livingston, Elon Musk, Ilya Sutskever, Peter Thiel, and others. OpenAI’s current products include GPT-4, DALL·E 2, Whisper, ChatGPT, and OpenAI Codex.

Prompt Engineering

According to Cohere, prompting (aka prompt engineering) is at the heart of working with LLMs. The prompt provides context for the text we want the model to generate. The prompts we create can be anything from simple instructions to more complex pieces of text, and they are used to encourage the model to produce a specific type of output. Cohere’s Generative AI with Cohere blog post series is an excellent resource on the topic of Prompting. Similarly, according to Dataconomy, using prompts to get the desired result from an AI tool is known as AI prompt engineering. A prompt can be a statement or a block of code, but it can also just be a string of words. Similar to how you may prompt a person as a starting point for writing an essay, you can use prompts to teach an AI model to produce the desired results when given a specific task.
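A prompt that combines an instruction with a few worked examples can be assembled with ordinary string handling. The sketch below is one possible layout, not a format required by any particular model; the function name, the `Input:`/`Output:` labels, and the sample reviews are all hypothetical.

```python
def build_prompt(instruction, examples, query):
    """Assemble a prompt: an instruction, worked examples, then the new input."""
    lines = [instruction.strip(), ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    # End with an open "Output:" so the model continues from there.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)

prompt = build_prompt(
    instruction="Classify the sentiment of each review as positive or negative.",
    examples=[
        ("Great battery life.", "positive"),
        ("Stopped working in a week.", "negative"),
    ],
    query="The screen is gorgeous.",
)
print(prompt)
```

The resulting string would then be sent to whichever LLM API you are using; that call is left out here because it depends on the provider.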

Reinforcement Learning with Human Feedback (RLHF)

According to Scale AI in their blog, Why is ChatGPT so good?, instead of simply predicting the next word(s), large language models (LLMs) can now follow human instructions and provide useful responses. These advancements are made possible by fine-tuning them with specialized instruction datasets and a technique called reinforcement learning with human feedback (RLHF). Similarly, according to Hugging Face, RLHF (aka RL from human preferences) uses methods from reinforcement learning to directly optimize LLMs with human feedback. RLHF has enabled language models to begin to align a model trained on a general corpus of text data to that of complex human values.

Ready for More?

Mastered all the terminology and ready for more? Here are some additional generative AI terms for you to learn:

- Alignment (AI Alignment, Aligned AI)

- Attention (Self-attention)

- Backpropagation

- Embeddings

- Foundational Model

- Next-Token Predictors

- Parameter

- Reinforcement Learning (RL)

- Self-Supervised Learning (SSL)

- Temperature

- Tokenization

- Transformer

- Vector

- Weight

🔔 To keep up with future content, follow Gary Stafford on LinkedIn.

This blog represents my viewpoints and not those of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners.

Developing a Multi-Account AWS Environment Strategy

Posted by Gary A. Stafford in AWS, Cloud, DevOps, Enterprise Software Development, Technology Consulting on March 11, 2023

Explore twelve common patterns for developing an effective and efficient multi-account AWS environment strategy

Introduction

Every company is different: its organizational structure, the length of time it has existed, how fast it has grown, the industries it serves, its product and service diversity, public or private sector, and its geographic footprint. This uniqueness is reflected in how it organizes and manages its Cloud resources. Just as no two organizations are exactly alike, the structure of their AWS environments is rarely identical.

Some organizations successfully operate from a single AWS account, while others manage workloads spread across dozens or even hundreds of accounts. The volume and purpose of an organization’s AWS accounts are a result of multiple factors, including length of time spent on AWS, Cloud maturity, organizational structure and complexity, sectors, industries, and geographies served, product and service mix, compliance and regulatory requirements, and merger and acquisition activity.

“By design, all resources provisioned within an AWS account are logically isolated from resources provisioned in other AWS accounts, even within your own AWS Organizations.” (AWS)

Working with industry peers, the AWS community, and a wide variety of customers, one will observe common patterns for how organizations separate environments and workloads using AWS accounts. These patterns form an AWS multi-account strategy for operating securely and reliably in the Cloud at scale. The more planning an organization does in advance to develop a sound multi-account strategy, the lighter the burden of managing changes as the organization grows over time.

The following post will explore twelve common patterns for effectively and efficiently organizing multiple AWS accounts. These patterns do not represent an either-or choice; they are designed to be purposefully combined to form a multi-account AWS environment strategy for your organization.

Patterns

- Pattern 1: Single “Uber” Account

- Pattern 2: Non-Prod/Prod Environments

- Pattern 3: Upper/Lower Environments

- Pattern 4: SDLC Environments

- Pattern 5: Major Workload Separation

- Pattern 6: Backup

- Pattern 7: Sandboxes

- Pattern 8: Centralized Management and Governance

- Pattern 9: Internal/External Environments

- Pattern 10: PCI DSS Workloads

- Pattern 11: Vendors and Contractors

- Pattern 12: Mergers and Acquisitions

Patterns 1–8 are progressively more mature multi-account strategies, while Patterns 9–12 represent special use cases for supplemental accounts.

Multi-Account Advantages

According to AWS’s whitepaper, Organizing Your AWS Environment Using Multiple Accounts, the benefits of using multiple AWS accounts include the following:

- Group workloads based on business purpose and ownership

- Apply distinct security controls by environment

- Constrain access to sensitive data (including compliance and regulation)

- Promote innovation and agility

- Limit the scope of impact from adverse events

- Support multiple IT operating models

- Manage costs (budgeting and cost attribution)

- Distribute AWS Service Quotas (fka limits) and API request rate limits

As we explore the patterns for organizing your AWS accounts, we will see how and to what degree each of these benefits is demonstrated by that particular pattern.

AWS Control Tower

Discussions about AWS multi-account environment strategies would not be complete without mentioning AWS Control Tower. According to the documentation, “AWS Control Tower offers a straightforward way to set up and govern an AWS multi-account environment, following prescriptive best practices.” AWS Control Tower includes Landing zone, described as “a well-architected, multi-account environment based on security and compliance best practices.”

AWS Control Tower is prescriptive in the Shared accounts it automatically creates within its AWS Organizations’ organizational units (OUs). Shared accounts created by AWS Control Tower include the Management, Log Archive, and Audit accounts. The previous standalone AWS service, AWS Landing Zone, maintained slightly different required accounts, including Shared Services, Log Archive, Security, and optional Network accounts. Although prescriptive, AWS Control Tower is also flexible and relatively unopinionated regarding the structure of Member accounts. Member accounts can be enrolled or unenrolled in AWS Control Tower.

You can decide whether or not to implement AWS Organizations or AWS Control Tower to set up and govern your AWS multi-account environment. Regardless, you will still need to determine how to reflect your organization’s unique structure and requirements in the purpose and quantity of the accounts you create within your AWS environment.

Common Multi-Account Patterns

While working with peers, community members, and a wide variety of customers, I regularly encounter the following twelve patterns for organizing AWS accounts. As noted earlier, these patterns do not represent an either-or choice; they are designed to be purposefully combined to form a multi-account AWS environment strategy for your organization.

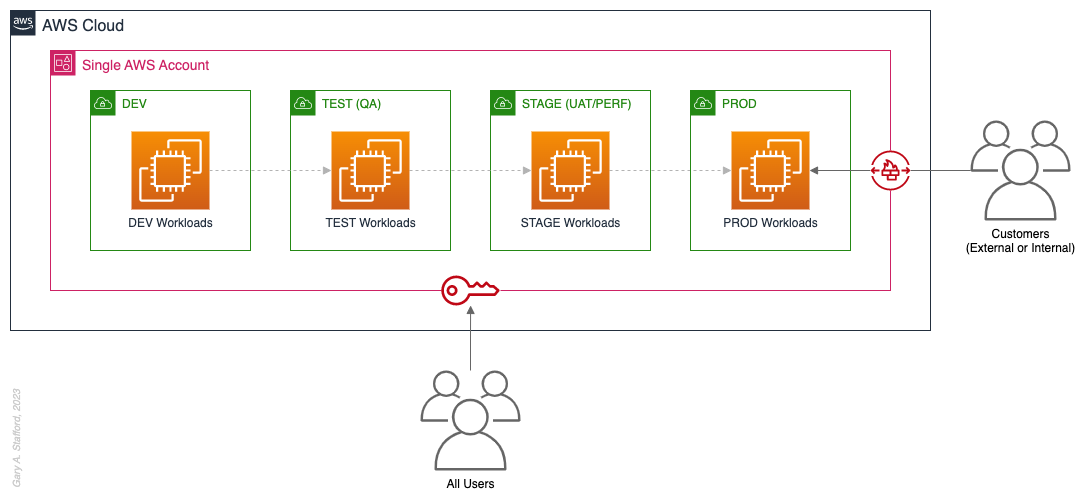

Pattern 1: Single “Uber” Account

Organizations that effectively implement Pattern 1: Single “Uber” Account organize and separate environments and workloads at the sub-account level. They often use Amazon Virtual Private Cloud (Amazon VPC), an AWS account-level construct, to organize and separate environments and workloads. They may also use Subnets (VPC-level construct) or AWS Regions and Availability Zones to further organize and separate environments and workloads.

Pros

- Few, if any, significant advantages, especially for customer-facing workloads

Cons

- Decreased ability to limit the scope of impact from adverse events (widest blast radius)

- If the account is compromised, then all the organization’s workloads and data, possibly the entire organization, are potentially compromised (e.g., Ransomware attacks such as Encryptors, Lockers, and Doxware)

- Increased risk that networking or security misconfiguration could lead to unintended access to sensitive workloads and data

- Increased risk that networking, security, or resource management misconfiguration could lead to broad or unintended impairment of all workloads

- Decreased ability to perform team-, environment-, and workload-level budgeting and cost attribution

- Increased risk of resource depletion (soft and hard service quotas)

- Reduced ability to conduct audits and demonstrate compliance

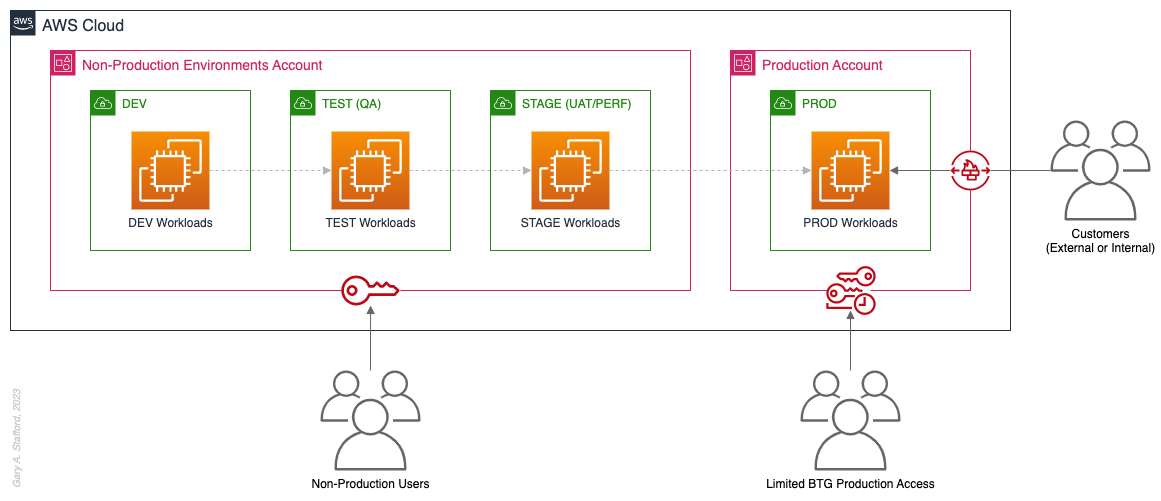

Pattern 2: Non-Prod/Prod Environments

Organizations that effectively implement Pattern 2: Non-Prod/Prod Environments organize and separate non-Production workloads from Production (PROD) workloads using separate AWS accounts. Typically, they use Amazon VPCs within the non-Production account to separate workloads or Software Development Lifecycle (SDLC) environments, most often Development (DEV), Testing (TEST) or Quality Assurance (QA), and Staging (STAGE). Alternatively, these environments might also be designated as “N-1” (previous release), “N” (current release), “N+1” (next release), “N+2” (in development), and so forth, based on the currency of that version of that workload.

In some organizations, the Staging environment is used for User Acceptance Testing (UAT), performance (PERF) testing, and load testing before releasing workloads to Production. In other organizations, STAGE, UAT, and PERF are each treated as separate environments at the account or VPC level.

Isolating Production workloads into their own account(s) and strictly limiting access to those workloads represents a significant first step in improving the overall maturity of your multi-account AWS environment strategy.

Pros

- Limits the scope of impact on Production as a result of adverse non-Production events (narrower blast radius)

- Logical separation and security of Production workloads and data

- Tightly control and limit access to Production, including the use of Break-the-Glass procedures (aka Break-glass or BTG); draws its name from “breaking the glass to pull a fire alarm”

- Eliminate the risk that non-Production networking or security misconfiguration could lead to unintended access to sensitive Production workloads and data

- Eliminate the chance that non-Production networking, security, or resource management misconfiguration could lead to broad or unintended impairment of Production workloads

- Conduct audits and demonstrate compliance with Production workloads

Cons

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised

- Decreased ability to perform team-, environment-, and workload-level budgeting and cost attribution in the shared non-Production environment

- Increased risk of resource depletion (soft and hard service quotas) in the non-Production environment account

Pattern 3: Upper/Lower Environments

The next pattern, Pattern 3: Upper/Lower Environments, is a finer-grain variation of Pattern 2. With Pattern 3, we split all “Lower” environments into a single account and each “Upper” environment into its own account. In the software development process, initial environments, such as CI/CD for automated testing of code and infrastructure, Development, Test, UAT, and Performance, are called “Lower” environments. Conversely, later environments, such as Staging, Production, and even Disaster Recovery (DR), are called “Upper” environments. Upper environments typically require isolation for stability during testing or security for Production workloads and sensitive data.

Often, coarser-grain patterns like Patterns 1–3 are carryovers from more traditional on-premises data centers, where compute, storage, network, and security resources were more constrained. Although these patterns can be successfully reproduced in the Cloud, they may not be optimal compared to more “cloud native” patterns, which provide improved separation of concerns.
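The Upper/Lower split in Pattern 3 can be sketched as a simple lookup from environment to account. The environment names follow the description above; the account names are hypothetical, and your own list of Lower and Upper environments may differ.

```python
# Environment names follow the post; the account names are hypothetical.
LOWER_ENVIRONMENTS = {"CI/CD", "DEV", "TEST", "UAT", "PERF"}
UPPER_ENVIRONMENTS = {"STAGE", "PROD", "DR"}

def account_for(environment):
    """Return the AWS account that hosts an environment under Pattern 3."""
    if environment in LOWER_ENVIRONMENTS:
        return "lower-environments"  # all Lower environments share one account
    if environment in UPPER_ENVIRONMENTS:
        return f"{environment.lower()}-account"  # one account per Upper environment
    raise ValueError(f"Unknown environment: {environment}")

plan = {env: account_for(env) for env in sorted(LOWER_ENVIRONMENTS | UPPER_ENVIRONMENTS)}
account_count = len(set(plan.values()))  # 1 shared Lower account + 3 Upper accounts
```

With these example environments, eight environments map onto four accounts: one shared Lower account and a dedicated account each for Staging, Production, and Disaster Recovery.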

Pros

- Limits the scope of impact on individual Upper environments as a result of adverse Lower environment events (narrower blast radius)

- Increased stability of Staging environment for critical UAT, performance, and load testing

- Logical separation and security of Production workloads and data

- Tightly control and limit access to Production, including the use of BTG

- Eliminate the risk that non-Production networking or security misconfiguration could lead to unintended access to sensitive Production workloads and data

- Eliminate the chance that non-Production networking, security, or resource management misconfiguration could lead to broad or unintended impairment of Production workloads

- Conduct audits and demonstrate compliance with Production workloads

Cons

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised (e.g., Ransomware attack)

- Decreased ability to perform team-, environment-, and workload-level budgeting and cost attribution in the shared Lower environment

- Increased risk of resource depletion (soft and hard service quotas) in the Lower environment account

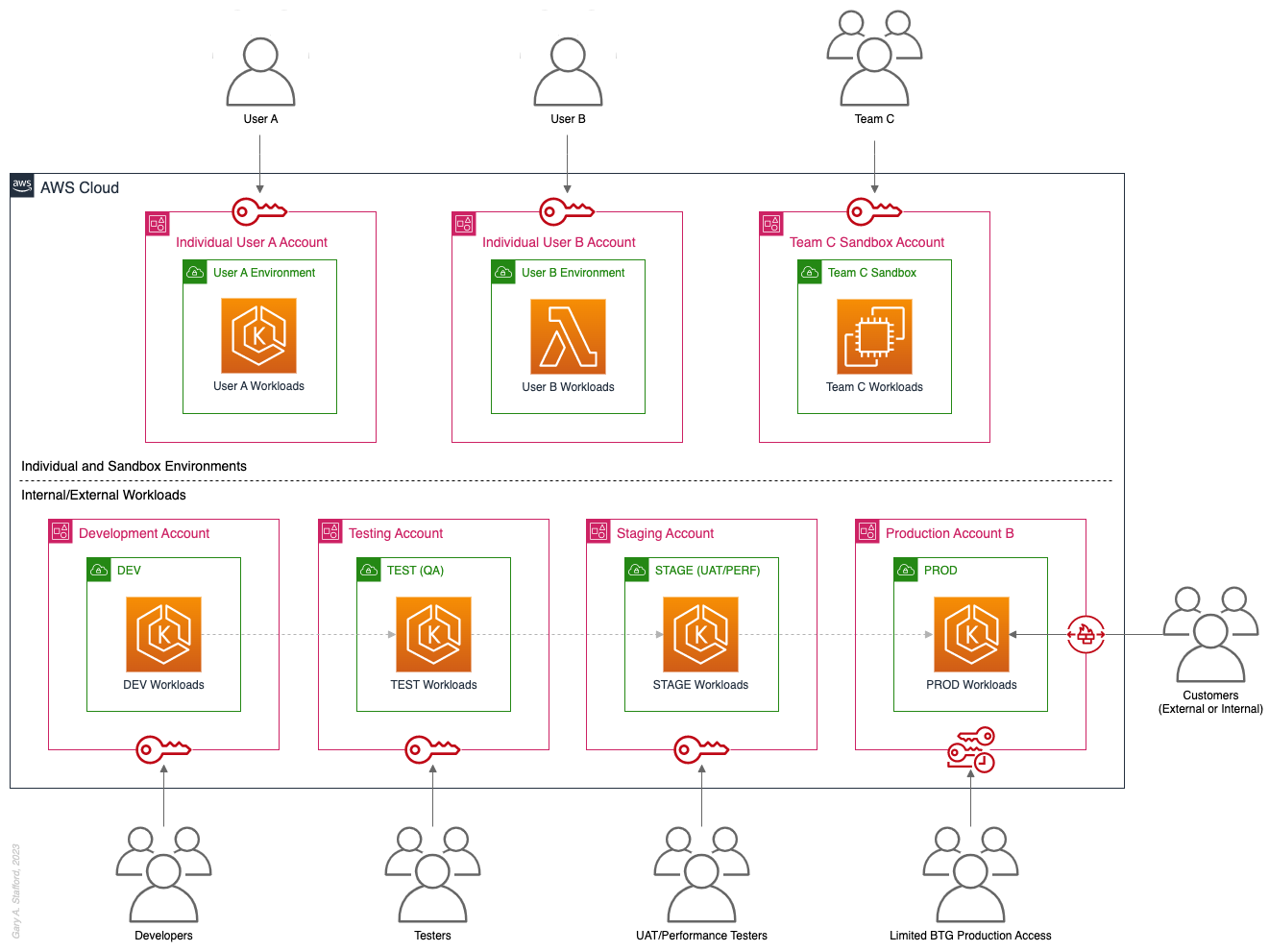

Pattern 4: SDLC Environments

The next pattern, Pattern 4: SDLC Environments, is a finer-grain variation of Pattern 3. With Pattern 4, we gain complete separation of each SDLC environment into its own AWS account. Using AWS services like AWS IAM Identity Center (fka AWS SSO), the Security team can enforce least-privilege permissions at an AWS Account level to individual groups of users, such as Developers, Testers, UAT, and Performance testers.

Based on my experience, Pattern 4 represents the minimal level of workload separation an organization should consider when developing its multi-account AWS environment strategy. Although Pattern 4 has a number of disadvantages, when combined with subsequent patterns and AWS best practices, this pattern begins to provide a scalable foundation for an organization’s growing workload portfolio.

Patterns such as Pattern 4 apply not only to traditional software applications and services. They can also be applied to data analytics, AI/ML, IoT, media services, and similar workloads where separation of environments is required.

Pros

- Limits the scope of impact on one SDLC environment as a result of adverse events in another environment (narrower blast radius)

- Logical separation and security of Production workloads and data

- Increased stability of each SDLC environment

- Tightly control and limit access to Production, including the use of BTG

- Reduced risk that non-Production networking or security misconfiguration could lead to unintended access to sensitive Production workloads and data

- Reduced risk that non-Production networking, security, or resource management misconfiguration could lead to broad or unintended impairment of Production workloads

- Conduct audits and demonstrate compliance with Production workloads

- Increased ability to perform budgeting and cost attribution for each SDLC environment

- Reduced risk of resource depletion (soft and hard service quotas) and IP conflicts and exhaustion within any single SDLC environment

Cons

- All workloads for each SDLC environment run within a single account, including Production, increasing the potential scope of impact from adverse events within that environment’s account

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised

- Decreased ability to perform workload-level budgeting and cost attribution

Pattern 5: Major Workload Separation

The next pattern, Pattern 5: Major Workload Separation, is a finer-grain variation of Pattern 4. With Pattern 5, we separate each significant workload into its own separate SDLC environment account. The security team can enforce fine-grain least-privilege permissions at an AWS Account level to individual groups of users, such as Developers, Testers, UAT, and Performance testers, by their designated workload(s).

Pattern 5 has several advantages over the previous patterns. In addition to the increased workload-level security and reliability benefits, Pattern 5 can be particularly useful for organizations that operate significantly different technology stacks and specialized workloads, particularly at scale. Different technology stacks and specialized workloads often each have their own unique development, testing, deployment, and support processes. Isolating these types of workloads will help facilitate the support of multiple IT operating models.
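To get a feel for how the number of accounts grows when moving from Pattern 4 to Pattern 5, the sketch below enumerates one account per workload per SDLC environment. The workload names and the account naming convention are hypothetical, and the four environments are only an example.

```python
from itertools import product

def plan_accounts(workloads, environments):
    """Return one account name per (workload, environment) pair."""
    return [
        f"{workload}-{env}".lower()
        for workload, env in product(workloads, environments)
    ]

# Hypothetical workloads; the environment abbreviations follow the post.
workloads = ["storefront", "analytics", "iot"]
environments = ["DEV", "TEST", "STAGE", "PROD"]

# Pattern 4: one account per SDLC environment, shared by all workloads.
pattern_4_accounts = [env.lower() for env in environments]

# Pattern 5: one account per significant workload per SDLC environment.
pattern_5_accounts = plan_accounts(workloads, environments)

print(len(pattern_4_accounts), len(pattern_5_accounts))  # 4 12
```

Three workloads across four environments already yield twelve accounts, which is one reason the automation and centralized management discussed in later patterns become important.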

Pros

- Limits the scope of impact on an individual workload as a result of adverse events from another workload or SDLC environment (narrowest blast radius)

- Increased ability to perform team-, environment-, and workload-level budgeting and cost attribution

Cons

- If the Production account is compromised, then all the organization’s customer-facing workloads and data are potentially compromised (e.g., Ransomware attack)

Pattern 6: Backup

In the earlier patterns, we mentioned that if the Production account were compromised, all the organization’s customer-facing workloads and data could be compromised. According to TechTarget, 2022 was a breakout year for Ransomware attacks. According to the US government’s CISA.gov website, “Ransomware is a form of malware designed to encrypt files on a device, rendering any files and the systems that rely on them unusable. Malicious actors then demand ransom in exchange for decryption.”

According to AWS best practices, one of the recommended preparatory actions to protect and recover from Ransomware attacks is backing up data to an alternate account using tools such as AWS Backup and an AWS Backup vault. Solutions such as AWS Backup protect and restore data regardless of how it was made inaccessible.
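As one piece of such a setup, the backup vault in the alternate account needs an access policy that allows the workload accounts to copy recovery points into it. The sketch below only builds that policy document as JSON; the account ID is a placeholder, the function name and statement ID are hypothetical, and cross-account backup has further prerequisites (such as both accounts belonging to the same AWS Organizations organization), so check the AWS Backup documentation before relying on it.

```python
import json

def vault_access_policy(source_account_ids):
    """Build a vault access policy that lets the listed accounts copy backups in."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCopyFromWorkloadAccounts",  # hypothetical statement ID
                "Effect": "Allow",
                "Principal": {
                    "AWS": [
                        f"arn:aws:iam::{account_id}:root"
                        for account_id in source_account_ids
                    ]
                },
                "Action": "backup:CopyIntoBackupVault",
                "Resource": "*",
            }
        ],
    }

# Placeholder 12-digit account ID standing in for a Production account.
policy = vault_access_policy(["111111111111"])
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

The resulting JSON would be attached to the destination vault in the Backup account, while the copy itself is configured from the source account.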

In Pattern 6: Backup, we create one or more Backup accounts to protect against unintended data loss or account compromise. In the example below, we have two Backup accounts, one for Production data and one for all non-Production data.

Pros

- If the Production account is compromised (e.g., Ransomware attacks such as Encryptors, Lockers, and Doxware), there are secure backups of data stored in a separate account, which can be used to restore or recreate the Production environment

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 7: Sandboxes

The following pattern, Pattern 7: Sandboxes, supplements the previous patterns. It is designed to address the needs of an organization to allow individual users and teams to learn, build, experiment, and innovate on AWS without impacting the larger organization’s AWS environment. To quote the AWS blog, Best practices for creating and managing sandbox accounts in AWS, “Many organizations need another type of environment, one where users can build and innovate with AWS services that might not be permitted in production or development/test environments because controls have not yet been implemented.” Further, according to TechTarget, “a Sandbox is an isolated testing environment that enables users to run programs or open files without affecting the application, system, or platform on which they run.”

Due to the potential volume of individual user and team accounts, sometimes referred to as Sandbox accounts, mature infrastructure automation practices, cost controls, and self-service provisioning and de-provisioning of Sandbox accounts are critical capabilities for the organization.

Pros

- Allow individual users and teams to learn, build, experiment, and innovate on AWS without impacting the rest of the organization’s AWS environment

Cons

- Without mature automation practices, cost controls, and self-service capabilities, managing multiple individual and team Sandbox accounts can become unwieldy and costly

Pattern 8: Centralized Management and Governance

We discussed AWS Control Tower at the beginning of this post. AWS Control Tower is prescriptive in creating Shared accounts within its AWS Organizations’ organizational units (OUs), including the Management, Log Archive, and Audit accounts. AWS encourages using AWS Control Tower to orchestrate multiple AWS accounts and services on your behalf while maintaining your organization’s security and compliance needs.

As exemplified in Pattern 8: Centralized Management and Governance, many organizations will implement centralized management whether or not they decide to implement AWS Control Tower. In addition to the Management account (payer account, fka master account), organizations often create centralized logging accounts, and centralized tooling (aka Shared services) accounts for functions such as CI/CD, IaC provisioning, and deployment. Another common centralized management account is a Security account. Organizations use this account to centralize the monitoring, analysis, notification, and automated mitigation of potential security issues within their AWS environment. The Security accounts will include services such as Amazon Detective, Amazon Inspector, Amazon GuardDuty, and AWS Security Hub.
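Centralized management usually starts with knowing which accounts exist. The sketch below lists the active accounts in an organization using the AWS Organizations `list_accounts` paginator. The function name is hypothetical, and the client is passed in as a parameter so the logic can be exercised without AWS credentials.

```python
def active_accounts(org_client):
    """Return {account name: account ID} for every ACTIVE account.

    org_client is expected to behave like boto3.client("organizations").
    """
    accounts = {}
    paginator = org_client.get_paginator("list_accounts")
    for page in paginator.paginate():
        for account in page["Accounts"]:
            # Skip accounts that are suspended or pending closure.
            if account["Status"] == "ACTIVE":
                accounts[account["Name"]] = account["Id"]
    return accounts
```

In a real environment, this could be called as `active_accounts(boto3.client("organizations"))`, assuming boto3 is installed and the credentials belong to the Management account or a delegated administrator account.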

Pros

- Increased ability to manage and maintain multiple AWS accounts with fewer resources

- Reduced duplication of management resources across accounts

- Increased ability to use automation and improve the consistency of processes and procedures across multiple accounts

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 9: Internal/External Environments

The next pattern, Pattern 9: Internal/External Environments, focuses on organizations with internal operational systems (aka Enterprise systems) in the Cloud and customer-facing workloads. Pattern 9 separates internal operational systems, platforms, and workloads from external customer-facing workloads. For example, an organization’s divisions and departments, such as Sales and Marketing, Finance, Human Resources, and Manufacturing, are assigned their own AWS account(s). Pattern 9 allows the Security team to ensure that internal departmental or divisional users are isolated from users who are responsible for developing, testing, deploying, and managing customer-facing workloads.

Note that the diagram for Pattern 9 shows remote users who access AWS End User Computing (EUC) services or Virtual Desktop Infrastructure (VDI), such as Amazon WorkSpaces and Amazon AppStream 2.0. In this example, remote workers have secure access to EUC services provisioned in a separate AWS account, and indirectly, internal systems, platforms, and workloads.

Pros

- Separation of internal operational systems, platforms, and workloads from external customer-facing workloads

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 10: PCI DSS Workloads

The next pattern, Pattern 10: PCI DSS Workloads, is a variation of previous patterns, which assumes the existence of Payment Card Industry Data Security Standard (PCI DSS) workloads and data. According to AWS, “PCI DSS applies to entities that store, process, or transmit cardholder data (CHD) or sensitive authentication data (SAD), including merchants, processors, acquirers, issuers, and service providers. The PCI DSS is mandated by the card brands and administered by the Payment Card Industry Security Standards Council.”

According to AWS’s whitepaper, Architecting for PCI DSS Scoping and Segmentation on AWS, “By design, all resources provisioned within an AWS account are logically isolated from resources provisioned in other AWS accounts, even within your own AWS Organizations. Using an isolated account for PCI workloads is a core best practice when designing your PCI application to run on AWS.” With Pattern 10, we separate non-PCI DSS and PCI DSS Production workloads and data. The assumption is that only Production contains PCI DSS data. Data in lower environments is synthetically generated or sufficiently encrypted, masked, obfuscated, or tokenized.
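To illustrate what masking or tokenizing data for lower environments might look like, here is a minimal sketch. It is an illustration only and not a statement of what PCI DSS requires: the masking keeps the last four digits, and the "token" is a keyed hash (HMAC-SHA256) standing in for a real tokenization service. The function names and the key are hypothetical.

```python
import hashlib
import hmac

def normalize(pan):
    """Strip spaces and dashes from a card number."""
    return pan.replace(" ", "").replace("-", "")

def mask_pan(pan):
    """Replace all but the last four digits with asterisks."""
    digits = normalize(pan)
    return "*" * (len(digits) - 4) + digits[-4:]

def tokenize_pan(pan, secret_key):
    """Derive a stable, non-reversible token from a card number.

    Assumption: a keyed hash is used here for simplicity; it is not
    a substitute for a vault-based tokenization product.
    """
    return hmac.new(secret_key, normalize(pan).encode(), hashlib.sha256).hexdigest()

# A commonly used test card number, not real cardholder data; the key is a placeholder.
test_pan = "4111 1111 1111 1111"
masked = mask_pan(test_pan)
token = tokenize_pan(test_pan, secret_key=b"example-key-not-for-production")
print(masked)  # ************1111
```

Because the token is deterministic for a given key, the same card number maps to the same token across tables, which keeps joins working in test data without exposing the original value.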

Note that the diagram for Pattern 10 shows Administrators. Administrators with different spans of responsibility and access are present in every pattern, whether specifically shown or not.

Pros

- Increased ability to meet compliance requirements by separating non-PCI DSS and PCI DSS Production workloads

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 11: Vendors and Contractors

The next pattern, Pattern 11: Vendors and Contractors, is focused on organizations that employ contractors or use third-party vendors who provide products and services that interact with their AWS-based environment. Like Pattern 10, Pattern 11 allows the Security team to ensure that contractor and vendor-based systems’ access to internal systems and customer-facing workloads is tightly controlled and auditable.

Vendor-based products and services are often deployed within an organization’s AWS environment without external means of ingress or egress. Alternatively, a vendor’s product or service may have a secure means of ingress from or egress to external endpoints. Such is the case with some SaaS products, which ship an organization’s data to an external aggregator for analytics or a security vendor’s product that pre-filters incoming data, external to the organization’s AWS environment. Using separate AWS accounts can improve an organization’s security posture and mitigate the risk of adverse events on the organization’s overall AWS environment.

Pros

- Ensure access to internal systems and customer-facing workloads by contractors and vendor-based systems is tightly controlled and auditable

Cons

- Few, if any, significant disadvantages when combined with previous patterns and AWS best practices

Pattern 12: Mergers and Acquisitions

The next pattern, Pattern 12: Mergers and Acquisitions, is focused on managing the integration of external AWS accounts as a result of a merger or acquisition. This is a common occurrence, but exactly how best to integrate two or more organizations depends on several factors. These include the required level of integration, for example, maintaining separate AWS Organizations, maintaining different AWS accounts, or merging resources from multiple accounts. Other factors that might impact account structure include changes in ownership or payer of acquired accounts, existing cost-savings agreements held by the acquiree (e.g., EDPs, PPAs, RIs, and Savings Plans), and AWS Marketplace vendor agreements. Even the existing authentication and authorization methods of the acquiree versus the acquirer (e.g., AWS IAM Identity Center, Microsoft Active Directory (AD), Azure AD, and external identity providers (IdPs) like Okta or Auth0) can be a factor.

The diagram for Pattern 12 attempts to show a few different M&A account scenarios, including maintaining separate AWS accounts for the acquirer and acquiree (e.g., acquiree’s Manufacturing Division account). If desired, the accounts can be kept independent but managed within the acquirer’s organization in AWS Organizations. The diagram also exhibits merging resources from the acquiree’s accounts into the acquirer’s accounts (e.g., Sales and Marketing accounts). In that scenario, the acquiree’s resources are migrated or decommissioned, and the acquiree’s account is then closed.

Pros

- Maintain separation between an acquirer and an acquiree’s AWS accounts within a single organization’s AWS environment

- Potentially consolidate and maximize cost-saving advantages of volume-related financial agreements and vendor licensing

Cons

- Migrating workloads between different organizations, depending on their complexity, requires careful planning and testing

- Consolidating multiple authentication and authorization methods requires careful planning and testing to avoid improper privileges

- Consolidating and optimizing separate licensing and cost-saving agreements between multiple organizations requires careful planning and an in-depth understanding of those agreements

Multi-Account AWS Environment Example

You can form an efficient and effective multi-account strategy for your organization by purposefully combining multiple patterns. Below is an example of combining the features of several patterns: Major Workload Separation, Backup, Sandboxes, Centralized Management and Governance, Internal/External Environments, and Vendors and Contractors.

According to AWS, “You can use AWS Organizations’ organizational units (OUs) to group accounts together to administer as a single unit. This greatly simplifies the management of your accounts.” If you decide to use AWS Organizations, each set of accounts associated with a pattern could correspond to an OU: Major Workload A, Major Workload B, Sandboxes, Backups, Centralized Management, Internal Environments, Vendors, and Contractors.
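
As a rough illustration of the grouping described above, the sketch below models OUs as a plain Python mapping from OU name to member accounts. The account names are hypothetical and no AWS API is called; it only shows how the accounts associated with each pattern might be grouped into one OU per pattern.

```python
from collections import defaultdict

# Hypothetical account names, each tagged with the pattern-based OU it belongs to.
ACCOUNTS = [
    ("workload-a-dev", "Major Workload A"),
    ("workload-a-prod", "Major Workload A"),
    ("workload-b-prod", "Major Workload B"),
    ("sandbox-team-1", "Sandboxes"),
    ("backup-vault", "Backups"),
    ("security-tooling", "Centralized Management"),
    ("vendor-analytics", "Vendors"),
]


def group_by_ou(accounts):
    """Group (account, ou) pairs into {ou: [accounts, ...]}, preserving input order."""
    grouped = defaultdict(list)
    for account, ou in accounts:
        grouped[ou].append(account)
    return dict(grouped)


if __name__ == "__main__":
    for ou, members in group_by_ou(ACCOUNTS).items():
        print(f"{ou}: {', '.join(members)}")
```

In a real environment, the same mapping would be expressed through AWS Organizations itself rather than in application code; the sketch is only meant to make the one-OU-per-pattern idea concrete.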

Conclusion

In this post, we learned twelve common patterns for effectively and efficiently organizing your AWS accounts. Rather than being an either-or choice, these patterns are designed to be purposefully combined to form a multi-account strategy for your organization. A sound multi-account strategy will improve your security posture, help maintain compliance, decrease the impact of adverse events on your AWS environment, and improve your organization’s ability to safely and confidently innovate and experiment on AWS.

Recommended References

- Establishing Your Cloud Foundation on AWS (AWS Whitepaper)

- Organizing Your AWS Environment Using Multiple Accounts (AWS Whitepaper)

- Building a Cloud Operating Model (AWS Whitepaper)

- AWS Security Reference Architecture (AWS Prescriptive Guidance)

- AWS Security Maturity Model (AWS Whitepaper)

- AWS re:Invent 2022 — Best practices for organizing and operating on AWS (YouTube Video)

- AWS Break Glass Role (GitHub)


This blog represents my viewpoints and not those of my employer, Amazon Web Services (AWS). All product names, logos, and brands are the property of their respective owners.

Monolith to Microservices: Refactoring Relational Databases

Posted by Gary A. Stafford in Enterprise Software Development, SQL, Technology Consulting on April 14, 2022

Exploring common patterns for refactoring relational database models as part of a microservices architecture

Introduction

There is no shortage of books, articles, tutorials, and presentations on migrating existing monolithic applications to microservices or on designing new applications using a microservices architecture. It has been one of the most popular IT topics for the last several years. Unfortunately, monolithic architectures often have equally monolithic database models. As organizations evolve from monolithic to microservices architectures, refactoring the application’s database model is often overlooked or deprioritized. Similarly, as organizations develop new microservices-based applications, they frequently neglect to apply a similar strategy to their databases.

The following post will examine several basic patterns for refactoring relational databases for microservices-based applications.

Terminology

Monolithic Architecture

A monolithic architecture is “the traditional unified model for the design of a software program. Monolithic, in this context, means composed all in one piece.” (TechTarget). A monolithic application “has all or most of its functionality within a single process or container, and it’s componentized in internal layers or libraries” (Microsoft). A monolith is usually built, deployed, and upgraded as a single unit of code.

Microservices Architecture

A microservices architecture (aka microservices) refers to “an architectural style for developing applications. Microservices allow a large application to be separated into smaller independent parts, with each part having its own realm of responsibility” (Google Cloud).

According to microservices.io, the advantages of microservices include:

- Highly maintainable and testable

- Loosely coupled

- Independently deployable

- Organized around business capabilities

- Owned by a small team

- Enables rapid, frequent, and reliable delivery

- Allows an organization to [more easily] evolve its technology stack

Database

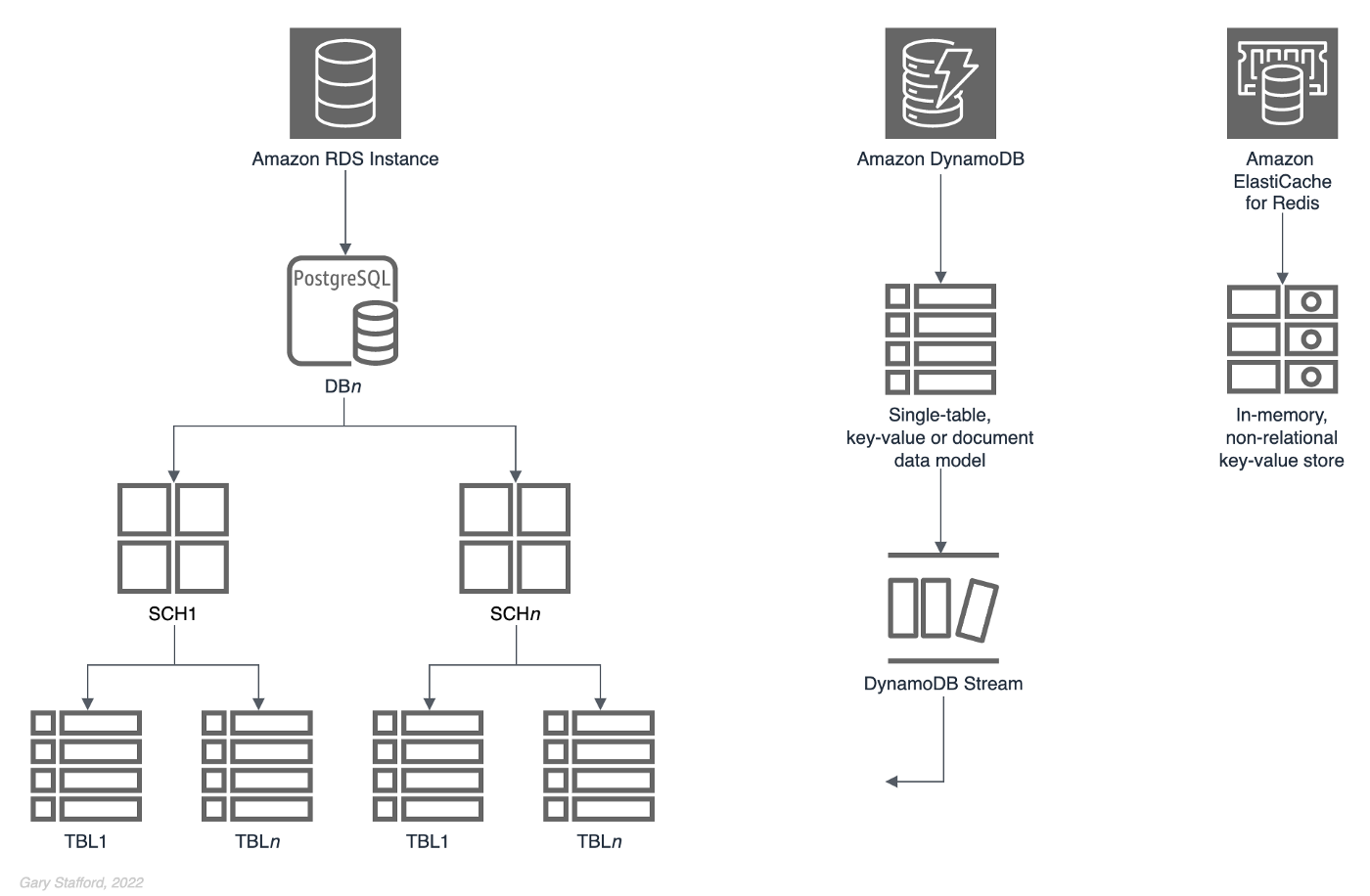

A database is “an organized collection of structured information, or data, typically stored electronically in a computer system” (Oracle). There are many types of databases. The most common database engines include relational, NoSQL, key-value, document, in-memory, graph, time series, wide column, and ledger.

PostgreSQL

In this post, we will use PostgreSQL (aka Postgres), a popular open-source object-relational database. A relational database is “a collection of data items with pre-defined relationships between them. These items are organized as a set of tables with columns and rows. Tables are used to hold information about the objects to be represented in the database” (AWS).

Amazon RDS for PostgreSQL

We will use the fully managed Amazon RDS for PostgreSQL in this post. Amazon RDS makes it easy to set up, operate, and scale PostgreSQL deployments in the cloud. With Amazon RDS, you can launch scalable PostgreSQL deployments in minutes with cost-efficient and resizable hardware capacity. In addition, Amazon RDS offers multiple versions of PostgreSQL, including 14.2, the latest version at the time of writing and the one used for this post.

The patterns discussed here are not specific to Amazon RDS for PostgreSQL. There are many options for using PostgreSQL on the public cloud or within your private data center. Alternatively, you could choose Amazon Aurora PostgreSQL-Compatible Edition, Google Cloud’s Cloud SQL for PostgreSQL, Microsoft’s Azure Database for PostgreSQL, ElephantSQL, or your own self-managed PostgreSQL deployed to bare-metal servers, virtual machines (VMs), or containers.

Database Refactoring Patterns

There are many ways in which a relational database, such as PostgreSQL, can be refactored to optimize efficiency in microservices-based application architectures. As stated earlier, a database is an organized collection of structured data. Therefore, most refactoring patterns reorganize the data to optimize for an organization’s functional requirements, such as database access efficiency, performance, resilience, security, compliance, and manageability.

The basic building block of Amazon RDS is the DB instance, where you create your databases. You choose the engine-specific characteristics of the DB instance when you create it, such as storage capacity, CPU, memory, and the EC2 instance type on which the database server runs. A single Amazon RDS database instance can contain multiple databases. Those databases contain numerous object types, including tables, views, functions, procedures, and types. Tables and other object types are organized into schemas. These hierarchical constructs (instances, databases, schemas, and tables) can be arranged in different ways depending on the requirements of the database data producers and consumers.
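
To make the instance, database, schema, and table hierarchy more concrete, here is a minimal sketch that models it as nested Python dictionaries and flattens it into one row per table. The `LAYOUT` structure and the `flatten` helper are illustrative assumptions, not part of any PostgreSQL or Amazon RDS API; the instance name `postgres1` is simply a label.

```python
# Illustrative layout: instance -> database -> schema -> list of tables.
LAYOUT = {
    "postgres1": {
        "pagila": {
            "public": ["actor", "film", "rental"],
        },
    },
}


def flatten(layout):
    """Yield one (instance, database, schema, table) tuple per table."""
    for instance, databases in layout.items():
        for database, schemas in databases.items():
            for schema, tables in schemas.items():
                for table in tables:
                    yield (instance, database, schema, table)


if __name__ == "__main__":
    for row in flatten(LAYOUT):
        print(" | ".join(row))
```

Rearranging the nesting, for example by adding a second schema or a second database, changes the rows produced without changing the tables themselves, which is the essence of the refactoring patterns.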

Sample Database

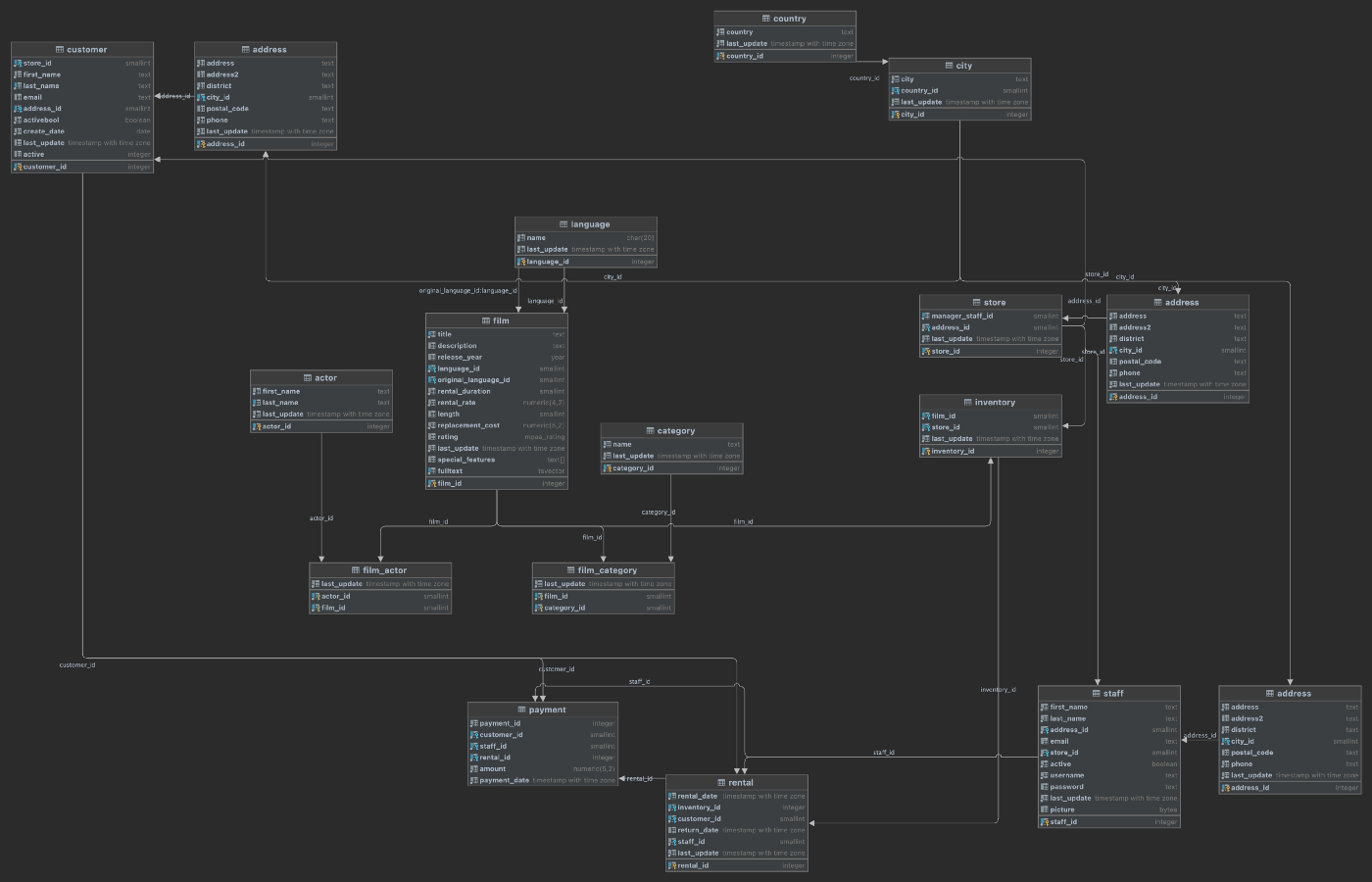

To demonstrate different patterns, we need data. Specifically, we need a database with data. Conveniently, due to the popularity of PostgreSQL, there are many available sample databases, including the Pagila database. I have used it in many previous articles and demonstrations. The Pagila database is available for download from several sources.

The Pagila database represents a DVD rental business. The database is well-built, small, and adheres to a third normal form (3NF) database schema design. The Pagila database has many objects, including 1 schema, 15 tables, 1 trigger, 7 views, 8 functions, 1 domain, 1 type, 1 aggregate, and 13 sequences. Pagila’s tables contain between 2 and 16K rows.

Pattern 1: Single Schema

Pattern 1: Single Schema is one of the most basic database patterns. There is one database instance containing a single database. That database has a single schema containing all tables and other database objects.

As organizations begin to move from monolithic to microservices architectures, they often retain their monolithic database architecture for some time.

Frequently, the monolithic database’s data model is equally monolithic, lacking proper separation of concerns using simple database constructs such as schemas. The Pagila database is an example of this first pattern. The Pagila database has a single schema containing all database object types, including tables, functions, views, procedures, sequences, and triggers.

To create a copy of the Pagila database, we can use pg_restore to restore any of several publicly available custom-format database archive files. If you already have the Pagila database running, simply create a copy with pg_dump.
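
The restore and dump steps might look something like the following sketch, which only builds the command lines rather than running them. The host, user, and file names (`pagila.dump`, `pagila_copy.dump`) are placeholder assumptions, and the restore command assumes the target database already exists.

```python
import shlex


def pg_restore_cmd(archive, dbname, host="localhost", port=5432, user="postgres"):
    """Build a pg_restore command that loads a custom-format archive into dbname."""
    return [
        "pg_restore",
        f"--host={host}",
        f"--port={port}",
        f"--username={user}",
        f"--dbname={dbname}",
        "--no-owner",  # do not try to restore the original object ownership
        archive,
    ]


def pg_dump_cmd(dbname, outfile, host="localhost", port=5432, user="postgres"):
    """Build a pg_dump command that writes dbname to a custom-format archive."""
    return [
        "pg_dump",
        f"--host={host}",
        f"--port={port}",
        f"--username={user}",
        "--format=custom",
        f"--file={outfile}",
        dbname,
    ]


if __name__ == "__main__":
    # Print the commands; pass the lists to subprocess.run(...) to execute them.
    print(shlex.join(pg_restore_cmd("pagila.dump", "pagila")))
    print(shlex.join(pg_dump_cmd("pagila", "pagila_copy.dump")))
```

For an Amazon RDS instance, the host would be the instance endpoint, and the password would typically be supplied through the `PGPASSWORD` environment variable or a `.pgpass` file rather than on the command line.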

Below we see the table layout of the Pagila database, which contains the single, default public schema.

| Instance  | Database | Schema | Table         |
|-----------|----------|--------|---------------|
| postgres1 | pagila   | public | actor         |
| postgres1 | pagila   | public | address       |
| postgres1 | pagila   | public | category      |
| postgres1 | pagila   | public | city          |
| postgres1 | pagila   | public | country       |
| postgres1 | pagila   | public | customer      |
| postgres1 | pagila   | public | film          |
| postgres1 | pagila   | public | film_actor    |
| postgres1 | pagila   | public | film_category |
| postgres1 | pagila   | public | inventory     |
| postgres1 | pagila   | public | language      |
| postgres1 | pagila   | public | payment       |
| postgres1 | pagila   | public | rental        |
| postgres1 | pagila   | public | staff         |
| postgres1 | pagila   | public | store         |
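
A listing like the one above can be produced in several ways. One possible approach, sketched here, queries the standard `information_schema.tables` view and formats the rows in Python. The query is not necessarily the one used to produce the listing in this post, and because the instance name is not stored in the database, the hypothetical `format_rows` helper takes it as an argument.

```python
# Query the standard information_schema; run it with any PostgreSQL client or driver.
LIST_TABLES_SQL = """
SELECT table_catalog, table_schema, table_name
FROM information_schema.tables
WHERE table_type = 'BASE TABLE'
  AND table_schema NOT IN ('pg_catalog', 'information_schema')
ORDER BY table_schema, table_name;
"""


def format_rows(instance, rows):
    """Render (database, schema, table) rows as an aligned text table."""
    header = ("Instance", "Database", "Schema", "Table")
    data = [(instance, *row) for row in rows]
    # Column width is the longest value in that column, header included.
    widths = [max(len(r[i]) for r in [header] + data) for i in range(4)]

    def line(values):
        return " | ".join(v.ljust(w) for v, w in zip(values, widths)).rstrip()

    separator = "-+-".join("-" * w for w in widths)
    return "\n".join([line(header), separator] + [line(r) for r in data])


if __name__ == "__main__":
    # Sample rows standing in for the query result.
    sample = [("pagila", "public", "actor"), ("pagila", "public", "address")]
    print(format_rows("postgres1", sample))
```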

Using a single schema to house all tables, especially the public schema, is generally considered poor database design. As a database grows in complexity, creating, organizing, managing, and securing dozens, hundreds, or thousands of database objects, including tables, within a single schema becomes impractical. For example, given a single schema, the only way to organize large numbers of database objects is by using lengthy and cryptic naming conventions.

Public Schema